Posts posted by Nytro

-

-

Who from here is coming to the venue?

-

Surely you’ve been expecting our email about the DefCamp conference, right? We are happy to officially announce that we’re back with DefCamp - the offline edition, this fall, as we've become accustomed to over the last 10 years.

Registrations are NOW OPEN, which means you can book your early bird ticket right now!

Ready, steady, gooooo pack your bags and cyber knowledge for #DefCamp12!

WHEN: 10th-11th November, 2022

WHERE: Bucharest, Romania

Call for papers: https://def.camp/call-for-papers/

Call for contests: https://def.camp/call-for-contests/

Become a volunteer: https://def.camp/become-a-volunteer/

Website: https://def.camp/

-

Hi, as far as I know it is not legal here, and probably not in other countries either. One option would be to sue them, but that costs time and money and is not worth it. If you were in the US it would be worth it; there you can sue over anything and win years' worth of salary.

The simplest idea would be to talk to your manager or to someone higher up, and mention that you do not agree and do not want this to happen. If that is not possible, my honest suggestion is to leave, because it is not worth working for such specimens.

As for monitoring, no fancy solutions are needed. Screen monitoring is garbage, but there are traffic interception solutions (a root CA on the PCs), DNS queries get checked, DLP and many others, including Microsoft Defender (with which someone can get a shell on your machine). But in principle nobody knows what you are doing unless you do suspicious things.

Slightly off topic, it seems the people there are also a bit like "slaves", and I do not recommend working with people who would sell their family for a crappy company (and salary).

Extra: If you are looking for something else, look before the US, and later other countries, sink into recession. In general, large companies with low odds of going under in difficult economic conditions would be preferable, but they can lay people off too, and on the LIFO principle the newcomers are the first to go.

-

A bot praised my article; praise is praise, me happy and I'm not deleting the spam ❤️

-

Pff, it asks for an IP and port and doesn't put them in the generated code.

-

You have exactly two more weeks to register for the National Cyber Security Championship - #RoCSC22

-

Insider threat is rather ignored in general; "luckily" we have examples like this.

-

In 2022, the third edition of the Romanian Cyber Security Challenge - RoCSC will take place, an annual CTF-type event that aims to discover young talent in cyber security. The competition is open to young people eager to demonstrate their skills, who can register online up to the day of the contest. Participants will compete in 3 categories: Juniors (16-20 years old), Seniors (21-25 years old) and Open (available regardless of age). Participation is free. Contestants will have to demonstrate their skills in areas such as mobile & web security, crypto, reverse engineering and forensics. The top finishers at RoCSC22 will have the opportunity to represent Romania at the European Cyber Security Challenge - ECSC22.

ROCSC22 calendar:

Qualification stage: 22.07 16:00 - 23.07 22:00

ROCSC22 final: 06.08

Bootcamp for selecting the team for the ECSC22 competition: 17.08 - 21.08

Prizes:

Juniors category:

1st place: 2000 euro

2nd place: 1000 euro

3rd place: 500 euro

Places 4-10: special prizes

Seniors category:

1st place: 2000 euro

2nd place: 1000 euro

3rd place: 500 euro

Places 4-10: special prizes

To be eligible for prizes, players must send a write-up of their solutions to contact@cyberedu.ro. Players taking part in the Open category will only receive points on the CyberEDU platform and are not eligible for prizes. The competition is organized by the National Cyberint Center of the Romanian Intelligence Service, the National Cyber Security Directorate and the National Association for Information Systems Security - ANSSI, together with partners Orange România, Bit Sentinel, CertSIGN, Cisco, UIPath, KPMG, Clico, PaloAlto Networks.

ROCSC 2022 schedule

- Registration is open until 22 July 2022

- Qualification stage: 22.07 16:00 - 23.07 22:00

- ROCSC22 final: 06.08

- Bootcamp for selecting the team for the ECSC22 competition: 17.08 - 21.08

To register, click here.

-

Yes, it's XOR with the key down at the bottom. You can paste it here:

But it is obfuscated further afterwards.
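If you would rather do it locally, here is a minimal sketch of undoing a repeating-key XOR in Python. The key and the sample bytes are made-up placeholders (neither comes from the sample in this thread), and `xor_decode` is just an illustrative name. Since the result is obfuscated again, this only peels off the first layer.

```python
from itertools import cycle


def xor_decode(data: bytes, key: bytes) -> bytes:
    """XOR every byte of data with the key, repeating the key as needed."""
    if not key:
        raise ValueError("key must not be empty")
    return bytes(b ^ k for b, k in zip(data, cycle(key)))


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    key = b"k3y"
    encoded = xor_decode(b"hello world", key)  # XOR is its own inverse
    print(xor_decode(encoded, key))
```

Because XOR is its own inverse, the same function both encodes and decodes.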

-

It matters how that payload is processed. Most likely it is modified (encrypted, encoded, XORed, whatever), but to do anything useful it has to be reconstructed. More precisely, you need to see what the binary/exploit does with this payload before using it.

It doesn't look like shellcode.

-

Hi, is this the beginning part? It doesn't look like anything common.

-

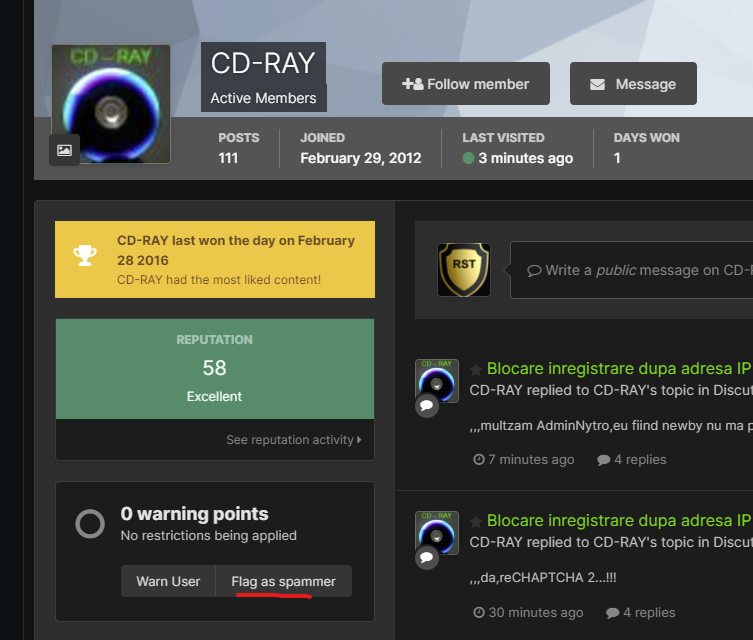

Yep

They can register any number of times, but it's quick to get rid of someone this way.

-

Bots constantly join and post junk here too; click on the profile, Flag as Spammer, problem solved.

-

19 minutes ago, 0xStrait said:

How can you not protect some sites against a trivial DDoS attack from 3,000-5,000 bots?

True, one day there were 3,000+, another day 11,000+. I think RST has had "much heavier attacks" and they were solved instantly with iptables -j DROP.

They should talk to the people who handle hosting for eMAG and other Black Friday services.

-

Wed, May 25, 2022, 3:00 PM - 6:00 PM (your local time)

To our dearest community:

We are very happy to announce that we are restarting our meetup series. As you know, our team cares deeply about sharing information, tooling and knowledge as openly as possible. Therefore, we are proud to be able to have these specialists in cybersecurity share what they have learned on the frontlines:

18:00 - 18:45 Tiberiu Boros, Andrei Cotaie - Living off the land

In security, "Living off the Land" (LotL or LOTL)-type attacks are not new. Bad actors have been using legitimate software and functions to target systems and carry out malicious attacks for many years. And although it's not novel, LotL is still one of the preferred approaches even for highly skilled attackers. Why? Because hackers tend not to reinvent the wheel and prefer to keep a low profile, i.e., leave no "footprints," such as random binaries or scripts on the system. Interestingly, these stealthy moves are exactly why it's often very difficult to determine which of these actions come from a valid system administrator and which from an attacker. It's also why static rules can trigger so many false positives and why compromises can go undetected.

Most antivirus vendors do not treat executed commands (from a syntax and vocabulary perspective) as an attack vector, and most of the log-based alerts are static, limited in scope, and hard to update. Furthermore, classic LotL detection mechanisms are noisy and somewhat unreliable, generating a high number of false positives, and because typical rules grow organically, it becomes easier to retire and rewrite the rules rather than maintain and update them.

The Threat Hunting team at Adobe set out to help fix this problem. Using open source and other representative incident data, we developed a dynamic and high-confidence program, called LotL Classifier, and we have recently open sourced it to the broader security community. In this webcast you will learn about the LotL Classifier, intended usage and best practices, and how you can obtain and contribute to the project.

18:50 - 19:35 Ciprian Bejean - Lessons learned when playing with file infectors

LinkedIn: https://www.linkedin.com/events/bsidesbucharestonlinemeetup6927924413371678720/

Zoom: https://us05web.zoom.us/j/83643751520?pwd=UDU0RVE0UmZjWHN2UnJPR095SUxpQT09

-

-

British courts approve Julian Assange's extradition to the US, where he will be tried for espionage

20.04.2022 14:28

Julian Assange in 2017, when he was still in the Ecuadorian embassy in London. Photo: Getty Images

The British courts have formally authorized the extradition to the US of Julian Assange, the founder of WikiLeaks. US authorities want to try Assange for espionage, AFP reports.

Westminster Magistrates' Court in London formally issued an extradition order for Assange, and it now falls to Home Secretary Priti Patel to approve it, although defence lawyers can still appeal to the High Court.

Caught in a lengthy legal saga, the Australian is wanted by the US justice system, which wants to try him for disseminating, starting in 2010, more than 700,000 classified documents on US military and diplomatic activities, mainly in Iraq and Afghanistan, according to Agerpres.

In 2010 and 2011, WikiLeaks published thousands of classified military and diplomatic documents about the wars in Afghanistan and Iraq. US prosecutors said the disclosures endangered the lives of hundreds of people and that Assange was aware of this.

Prosecuted under an anti-espionage law, he faces 175 years in prison, in a case denounced by human rights organizations as a serious attack on press freedom.

On 14 March, Julian Assange saw one of his last hopes of avoiding extradition vanish when the UK Supreme Court refused to hear his appeal.

Editor: M.L.

-

RaidForums hacking forum seized by police, owner arrested

By Ionut Ilascu

April 12, 2022

The RaidForums hacker forum, used mainly for trading and selling stolen databases, has been shut down and its domain seized by U.S. law enforcement during Operation TOURNIQUET, an action coordinated by Europol that involved law enforcement agencies in several countries.

RaidForums' administrator and two of his accomplices have been arrested, and the infrastructure of the illegal marketplace is now under the control of law enforcement.

14-year old started RaidForums

The administrator and founder of RaidForums, Diogo Santos Coelho of Portugal, aka Omnipotent, was arrested on January 31 in the United Kingdom and is facing criminal charges. He has been in custody since the arrest, pending the resolution of his extradition proceedings.

The U.S. Department of Justice today says that Coelho is 21 years old, which means that he was just 14 when he launched RaidForums in 2015.

Three domains hosting RaidForums have been seized: “raidforums.com,” “Rf.ws,” and “Raid.Lol.”

According to the DoJ, the marketplace offered for sale more than 10 billion unique records from hundreds of stolen databases that impacted people residing in the U.S.

In a separate announcement today, Europol says that RaidForums had more than 500,000 users and “was considered one of the world’s biggest hacking forums”.

“This marketplace had made a name for itself by selling access to high-profile database leaks belonging to a number of US corporations across different industries. These contained information for millions of credit cards, bank account numbers and routing information, and the usernames and associated passwords needed to access online accounts” - Europol

Taking down the forum and its infrastructure is the result of one year of planning between law enforcement authorities in the United States, the United Kingdom, Sweden, Portugal, and Romania.

It is unclear how long the investigation took but the collaboration between law enforcement agencies allowed authorities to paint a clear picture of the roles different individuals had within RaidForums.

The European law enforcement agency shared few details in its press release but notes that the people who kept RaidForums running worked as administrators and money launderers, stole and uploaded data, and bought the stolen information.

Coelho allegedly controlled RaidForums since January 1, 2015, the indictment reveals, and he operated the site with the help of a few administrators, organizing its structure to promote buying and selling stolen goods.

To make a profit, the forum charged fees for various membership tiers and sold credits that allowed members to access privileged areas of the site or stolen data dumped on the forum.

Coelho also acted as a trusted middleman between parties making a transaction, to provide confidence that buyers and sellers would honor their agreement.

Members become suspicious in February

Threat actors and security researchers first suspected that RaidForums was seized by law enforcement in February when the site began showing a login form on every page.

However, when attempting to log into the site, it simply showed the login page again.

This led researchers and forum members to believe that the site was seized and that the login prompt was a phishing attempt by law enforcement to gather threat actors' credentials.

On February 27th, 2022, the DNS servers for raidforums.com were suddenly changed to the following servers:

jocelyn.ns.cloudflare.com
plato.ns.cloudflare.com

As these DNS servers were previously used with other sites seized by law enforcement, including weleakinfo.com and doublevpn.com, researchers believed that this added further support that the domain was seized.

Before becoming the hackers’ favorite place to sell stolen data, RaidForums had a more humble beginning and was used for organizing various types of electronic harassment, which included swatting targets (making false reports leading to armed law enforcement intervention) and "raiding," which the DoJ describes as "posting or sending an overwhelming volume of contact to a victim’s online communications medium."

The site became well-known over the past couple of years and it was frequently used by ransomware gangs and data extortionists to leak data as a way to pressure victims into paying a ransom, and was used by both the Babuk ransomware gang and the Lapsus$ extortion group in the past.

The marketplace has been active since 2015 and it was for a long time the shortest route for hackers to sell stolen databases or share them with members of the forum.

Sensitive data traded on the forum included personal and financial information such as bank routing and account numbers, credit cards, login information, and social security numbers.

While many cybercrime forums catered to Russian-speaking threat actors, RaidForums stood out as being the most popular English-speaking hacking forum.

After Russia invaded Ukraine, and many threat actors began taking sides, RaidForums announced that they were banning any member who was known to be associated with Russia.

-

The Role...

Individual with background in development, capable of driving the security engineering needs of the application security aspects of products built in-house and/or integrated from 3rd parties and ensuring alignment with the PPB technology strategy.

Work closely with the other Security Engineering areas (Testing & Cloud), wider Security team and project teams throughout the organization to ensure the adoption of best of breed Security Engineering practices, so that security vulnerabilities are detected and acted upon as early as possible in the project lifecycle.

In addition to ensuring a continuous and reliable availability and performance of the existing security tools (both commercial and internally developed), the role also involves its continuous improvement (namely to cover emerging technologies/frameworks) and the coordination and hands-on development of the internally developed tools to meet new business and governance needs.

What you'll be doing...

- Liaise with business stakeholders to ensure all business projects are assessed from a security point of view and input is provided in order to have security requirements implemented before project is delivered;

- Develop and maintain engineering components autonomously (Python) that enable the Application Security team to ensure internally developed code is following security best practices;

- Research and evaluate emerging technologies to detect, mitigate, triage, and remediate application security defects across the enterprise;

- Understand the architecture of production systems including identifying the security controls in place and how they are used;

- Act as part of the InfoSec Engineering team, coordinating and actively participating in the timely delivery of agreed pieces of work.

- Ensure a continuous and reliable availability and performance of the existing security tools (both commercial and internally developed);

- Support the engineering needs of the InfoSec Engineering and wider Security function.

- Build strong business relationships with partners inside and outside PPB to understand mutual goals, requirements, options and solutions to complex or intangible application security issues;

- Lead and coach junior team members supporting them technically in their development;

- Incident response (security related): capable of performing triage and, with support from other business functions, providing mitigation advice;

- Capable of suggesting and implementing security controls for both public & private clouds;

- Maintain and develop components to support application security requirements in Continuous Delivery methodologies;

- Research, maintain and integrate Static Code Analysis tools (SAST) according to the company's requirements;

- Plan and develop deliverables according to Scrum.

What We're Looking For...

- Good written and verbal communication skills;

- A team player, who strives to maximize team and departmental performance;

- Resolves and/or escalates issues in a timely fashion;

- Knowledge sharing and interest to grow other team members;

- Effectively manages stakeholder interaction and expectations;

- Develops lasting relationships with stakeholders and key personnel across security;

- Influences business stakeholders to develop a secure mindset;

- Interact with development teams to influence and expand their secure mindset;

Apply: https://apply.betfairromania.ro/vacancy/senior-infosec-engineer-6056-porto/6068/description/

If you are interested, send me a PM and I'll put you in touch with "the right people".

-

REGISTRATION

Activity calendar

- Contest registration: starting 01/03/2022

- Training stage: starting 04/04/2022

- Individual contest: 06/05/2022 - 08/05/2022 (also the registration deadline)

- Team contest: 20/05/2022 - 22/05/2022

- Individual performance report: 31/05/2022

More details about the activity calendar, here.

Prizes

- cash prizes or gadgets

- diplomas for participation or for your position in the overall ranking

- your results count toward the #ROEduCyberSkills ranking

- individual performance report recognized by companies and other organizations - details, here

How can I sign up?

Registration is free. To be eligible for prizes and for the #ROEduCyberSkills ranking, you must be registered by the end of the individual round.

You will need this password at registration: parola-unr22-812412412

Where can I talk to the other participants?

To join the Discord group, where we will post announcements and you will be able to interact with the mentors, you need to:

- use this invitation to get access to the "UNR22" section

- send the bot @JDB the message "/ticket 8xu4tR7jGN" if you are already a member of the official CyberEDU.ro Discord channel, as in this screenshot

Details: https://unbreakable.ro/inregistrare

-

7 Suspected Members of LAPSUS$ Hacker Gang, Aged 16 to 21, Arrested in U.K.

The City of London Police has arrested seven teenagers between the ages of 16 and 21 for their alleged connections to the prolific LAPSUS$ extortion gang that's linked to a recent burst of attacks targeting NVIDIA, Samsung, Ubisoft, LG, Microsoft, and Okta.

The development, which was first disclosed by BBC News, comes after a report from Bloomberg revealed that a 16-year-old Oxford-based teenager is the mastermind of the group. It's not immediately clear if the minor is one among the arrested individuals. The said teen, under the online alias White or Breachbase, is alleged to have accumulated about $14 million in Bitcoin from hacking.

"I had never heard about any of this until recently," the teen's father was quoted as saying to the broadcaster. "He's never talked about any hacking, but he is very good on computers and spends a lot of time on the computer. I always thought he was playing games."

According to security reporter Brian Krebs, the "ringleader" purchased Doxbin last year, a portal for sharing personal information of targets, only to relinquish control of the website back to its former owner in January 2022, but not before leaking the entire Doxbin dataset to Telegram.

This prompted the Doxbin community to retaliate by releasing personal information on "WhiteDoxbin," including his home address and videos purportedly shot at night outside his home in the U.K.

What's more, the hacker crew has actively recruited insiders via social media platforms such as Reddit and Telegram since at least November 2021 before it surfaced on the scene in December 2021.

At least one member of the LAPSUS$ cartel is also believed to have been involved with a data breach at Electronic Arts last July, with Palo Alto Networks' Unit 42 uncovering evidence of extortion activity aimed at U.K. mobile phone customers in August 2021.

LAPSUS$, over a mere span of three months, accelerated their malicious activity, swiftly rising to prominence in the cyber crime world for its high-profile targets and maintaining an active presence on the messaging app Telegram, where it has amassed 47,000 subscribers.

Microsoft characterized the group as an "unorthodox" group that "doesn't seem to cover its tracks" and that uses a unique blend of tradecraft, which couples phone-based social engineering and paying employees of target organizations for access to credentials.

If anything, LAPSUS$' brazen approach to striking companies with little regard for operational security measures appears to have cost them dear, leaving behind a forensic trail that led to their arrests.

The last message from the group came on Wednesday when it announced that some of its members were taking a week-long vacation: "A few of our members has a vacation until 30/3/2022. We might be quiet for some times. Thanks for understand us - we will try to leak stuff ASAP."

-

MARCH 24, 2022

2022 CLOUD KUBERNETES SERVERLESS STRATEGY

What to look for when reviewing a company's infrastructure

- The challenge of prioritization

- The review process

  - Phase 1: Cloud Providers

    - Stage 1: Identify the primary CSP

    - Stage 2: Understand the high-level hierarchy

    - Stage 3: Understand what is running in the Accounts

    - Stage 4: Understand the network architecture

    - Stage 5: Understand the current IAM setup

    - Stage 6: Understand the current monitoring setup

    - Stage 7: Understand the current secrets management setup

    - Stage 8: Identify existing security controls

    - Stage 9: Get the low-hanging fruits

  - Phase 2: Workloads

    - Stage 1: Understand the high-level business offerings

    - Stage 2: Identify the primary tech stack

    - Stage 3: Understand the network architecture

    - Stage 4: Understand the current IAM setup

    - Stage 5: Understand the current monitoring setup

    - Stage 6: Understand the current secrets management setup

    - Stage 7: Identify existing security controls

    - Stage 8: Get the low-hanging fruits

  - Phase 3: Code

- Let’s put it all together

- Conclusions

Early last year, I wrote “On Establishing a Cloud Security Program”, outlining some advice that can be followed to establish a cloud security program aimed at protecting a cloud native, service provider agnostic, container-based offering. The result can be found in a micro-website which contains the list of controls that can be rolled out to establish such a cloud security program: A Cloud Security Roadmap Template.

Following that post, one question I got asked was: “That’s great, but how do you even know what to prioritize?”

The challenge of prioritization

And that’s true for many teams, from the security team of a small but growing start-up to a CTO or senior engineer at a company with no cloud security team trying to either get things started or improve what they have already.

Here I want to tackle precisely the challenge many (new) teams face: getting up to speed in a new environment (or company altogether) and finding its most critical components.

This post, part of the “Cloud Security Strategies” series, aims to provide a structured approach to review the security architecture of a multi-cloud SaaS company, having a mix of workloads (from container-based, to serverless, to legacy VMs). The outcome of this investigation can then be used to inform both subsequent security reviews and prioritization of the Cloud Security Roadmap.

The review process

There are multiple situations in which you might face a (somewhat completely) new environment:

- You are starting a new job/team: Congrats! You are the first engineer in a newly spun-up team.

- You are going through a merger or acquisition: Congrats (I think?)! You now have a completely new setup to review before integrating it with your company’s.

- You are delivering a consulting engagement: This is different from the previous 2, as this usually means you are an external consultant. Although the rest of the post is more tailored towards internal teams, the same concepts can also be used by consultancies.

So, where do you start? What kind of questions should you ask yourself? What information is crucial to obtain?

Luckily, abstraction works in our favour.

Abstraction Levels

We can split the process into different abstraction levels (or “Phases”), from cloud, to workloads, to code:

- Start from the Cloud Providers.

- Continue by understanding the technology powering the company’s Workloads.

- Complete by mapping the environments and workloads to their originating source Code (a.k.a. code provenance).

The 3 Phases of the Review

Going through this exercise allows you to familiarise yourself with a new environment and, as a good side-effect, organically uncover its security risks. These risks will then be essential to put together a well-thought-out roadmap that addresses both short-term goals (a.k.a. extinguishing the fires) and long-term goals (a.k.a. improving the overall security and maturity of the organization).

Once you have a clear picture of what you need to secure, you can then prioritize accordingly.

It is important to stress that you need to understand how something works before securing (or attempting to secure) it. Let me repeat it; you can’t secure what you don’t understand.

A note on breadth vs depth

A word of caution: avoid rabbit holes; not only can they be a huge time sink, but they are also an inefficient use of limited time.

You should put your initial focus on getting a broad coverage of the different abstraction levels (Cloud Providers, Workloads, Code). Only after you complete the review process will you have enough context to determine where additional investigation is required.

Phase 1: Cloud Providers

Cloud Service Providers (or “CSPs” in short) provide the first layer of abstraction, as they are the primary collection of environments and resources.

Hence, the main goal for this phase is to understand the overall organizational structure of the infrastructure.

Stage 1: Identify the primary CSP

In this post, we target a multi-cloud company with resources scattered across multiple CSPs.

Since it wouldn’t be technically feasible to tackle everything simultaneously, start by identifying the primary cloud provider, where Production is, and start from it. In fact, although it is common to have “some” Production services in another provider (maybe a remnant of a past, and incomplete, migration), it is rare for a company to have an equal split of Production services among multiple CSPs (and if someone claims so, verify it is the case). Core services are often hosted in one provider, while other CSPs might host just some ancillary services.

Once identified, consider this provider as your primary target. Go through the steps listed below, and only repeat them for the other CSPs at the end.

A note on naming conventions

For the sake of simplicity, for the rest of this post I will use the term “Account” to refer both to AWS Accounts and GCP Projects.

Stage 2: Understand the high-level hierarchy

General Organizational Layouts for AWS and GCP

- How many Organizations does the company have?

- How is each Organization designed? If AWS, what do the Organizational Units (OUs) look like? If GCP, what about the Folder hierarchy?

- Is there a clear split between environment types? (i.e., Production, Staging, Testing, etc.)

- Which Accounts are critical? (i.e., which ones contain critical data or workloads?) If you are lucky, has someone already compiled a risk rating of the Accounts?

- How are new Accounts created? Are they created manually or automatically? Are they automatically onboarded onto security tools available to the company?

The quickest way to start getting a one-off list of Accounts (as well as getting answers for the questions above) is through the Organizations page of your Management AWS Account, or the Cloud Resource Manager of your GCP Organization.
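If you prefer the API over the console, the same one-off list can be sketched in a few lines of Python. This is only an illustration, assuming boto3 is installed and configured with credentials for the Management Account; the helper name `iter_accounts` is my own, and the function accepts any client object so it can be exercised without real AWS access.

```python
from typing import Any, Dict, Iterator


def iter_accounts(org_client: Any) -> Iterator[Dict[str, str]]:
    """Yield id, name and status for every Account in the Organization."""
    # list_accounts is paginated, so walk every page rather than the first one.
    paginator = org_client.get_paginator("list_accounts")
    for page in paginator.paginate():
        for account in page.get("Accounts", []):
            yield {
                "id": account["Id"],
                "name": account["Name"],
                "status": account["Status"],
            }


# Example usage (assumption: run with Management Account credentials):
#   import boto3
#   for acct in iter_accounts(boto3.client("organizations")):
#       print(acct["id"], acct["status"], acct["name"])
```

The output is a flat list; mapping Accounts to OUs would need additional calls, which this sketch leaves out.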

You can see mine below (yes, my AWS Org is way more organized than my 2 GCP projects 😅):

Sample Organizations for AWS and GCP

Otherwise, if you need some inspiration for more “creative” ways to get a list of all your Accounts, you can refer to the “How to inventory AWS accounts” blog post from Scott Piper.

Improving from the one-off list, you could later consider adding some automation to discover and document Accounts automatically. One example of such a tool is cartography. In my Continuous Visibility into Ephemeral Cloud Environments series, I’ve personally blogged about this:

- Mapping Moving Clouds: How to stay on top of your ephemeral environments with Cartography

- Tracking Moving Clouds: How to continuously track cloud assets with Cartography

Stage 3: Understand what is running in the Accounts

Here the goal is to get a rough idea of what kind of technologies are involved:

- Is the company container-heavy? (e.g., Kubernetes, ECS, etc.)

- Is it predominantly serverless? (e.g., Lambda, Cloud Functions, etc.)

- Is it relying on “legacy” VM-based workloads? (e.g., vanilla EC2)

If you have access to Billing, this should be enough to understand which services are the biggest spenders. The “Monthly spend by service” view of AWS Cost Explorer, or equivalently “Cost Breakdown” of GCP Billing, can provide an excellent snapshot of the most used services in the Organization. Otherwise, if you don’t (and can’t) have access to Billing, ask your counterpart teams (usually platform/SRE teams).
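For those who would rather script it, here is a rough sketch of ranking services by spend through the Cost Explorer API. It assumes boto3 and billing permissions; the function names `fetch_monthly_costs` and `top_services` are hypothetical, and pagination via `NextPageToken` is deliberately ignored to keep it short.

```python
from typing import Any, Dict, List, Tuple


def fetch_monthly_costs(ce_client: Any, start: str, end: str) -> Dict[str, Any]:
    """Query Cost Explorer grouped by service; dates are YYYY-MM-DD strings."""
    return ce_client.get_cost_and_usage(
        TimePeriod={"Start": start, "End": end},
        Granularity="MONTHLY",
        Metrics=["UnblendedCost"],
        GroupBy=[{"Type": "DIMENSION", "Key": "SERVICE"}],
    )


def top_services(response: Dict[str, Any], limit: int = 10) -> List[Tuple[str, float]]:
    """Sum UnblendedCost per service across all returned periods, largest first."""
    totals: Dict[str, float] = {}
    for period in response.get("ResultsByTime", []):
        for group in period.get("Groups", []):
            service = group["Keys"][0]
            amount = float(group["Metrics"]["UnblendedCost"]["Amount"])
            totals[service] = totals.get(service, 0.0) + amount
    ranked = sorted(totals.items(), key=lambda item: item[1], reverse=True)
    return ranked[:limit]


# Example usage (assumption: credentials with Cost Explorer access):
#   import boto3
#   resp = fetch_monthly_costs(boto3.client("ce"), "2022-02-01", "2022-03-01")
#   for service, cost in top_services(resp):
#       print(f"{cost:12.2f}  {service}")
```

Spend is only a proxy for usage, but it is usually enough to tell a container-heavy shop from a serverless one.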

Monthly spend by service view of AWS Cost Explorer

(If you are interested in cost-related strategies, CloudSecDocs has a specific section on references to best practices around Cost Optimization.)

In addition, both AWS Config (if enabled) and GCP Cloud Asset Inventory (by default) give you an aggregated view of all assets (and their metadata) within an Account or Organization. This view can be beneficial to understand, at a glance, the primary technologies being used.

AWS Config and GCP Cloud Asset Inventory

At the same time, it is crucial to start getting an idea around data stores:

- What kind of data is identified by the business as the most sensitive and critical to secure?

- What type of data (e.g., secrets, customer data, audit logs, etc.) is stored in which Account?

- How is data rated according to the company’s data classification standard (if one exists)?

Stage 4: Understand the network architecture

In this stage, the goal is to understand what the network architecture looks like:

- What are the main entry points into the infrastructure? What services and components are Internet-facing and can receive unsolicited (a.k.a. untrusted and potentially malicious) traffic?

- How do customers get access to the system? Do they have any network access? If so, do they have direct network access, maybe via VPC peering?

- How do engineers get access to the system? Do they have direct network access? Identify how engineering teams can access the Cloud Providers’ console and how they can programmatically interact with their APIs (e.g., via command-line utilities like AWS CLI or gcloud CLI).

- How are Accounts connected to each other? Is there any Account separation in place?

- Is there any VPC peering or shared VPCs between different Accounts?

- How is firewalling implemented? How are Security Groups and Firewall Rules defined?

- How is the edge protected? Is anything like Cloudflare used to protect against DDoS and common attacks (via a WAF)?

- How is DNS managed? Is it centralized? What are the principal domains associated with the company?

- Is there any hybrid connectivity with any on-prem data centres? If so, how is it set up and secured? (For those interested, CloudSecDocs has a specific section on references around Hybrid Connectivity.)

Multi-account, multi-VPC architecture - Courtesy of Amazon

There is no easy way to obtain this information. If you are lucky, your company might have up-to-date network diagrams that you can consult. Otherwise, you’ll have to make an effort to interview different stakeholders/teams to try to extract the tacit knowledge they might have.

As a result, this stage might become one of the most time-consuming parts of the entire review process (and is often the one that gets skipped for this exact reason), especially if no up-to-date documentation is available. Nonetheless, this stage is the one that might bring the most significant rewards in the long run: as a (Cloud) Security team, you should be confident around your knowledge of how data flows within your systems. This knowledge will then be critical for providing accurate and valuable recommendations and risk ratings going forward.
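To make the "what is Internet-facing?" question a bit more concrete, here is a minimal sketch that flags firewall rules open to the whole Internet on ports you did not expect to be public. The rule format (`name`, `cidr`, `port`) and the `EXPECTED_PUBLIC_PORTS` allow-list are assumptions made for illustration; real Security Groups and Firewall Rules have a richer structure.

```python
import ipaddress

# Hypothetical, simplified rules. Real Security Groups / Firewall Rules
# also carry protocols, port ranges, tags, and so on.
SAMPLE_RULES = [
    {"name": "web-https", "cidr": "0.0.0.0/0", "port": 443},
    {"name": "ssh-anywhere", "cidr": "0.0.0.0/0", "port": 22},
    {"name": "db-internal", "cidr": "10.0.0.0/16", "port": 5432},
    {"name": "ssh-v6", "cidr": "::/0", "port": 22},
]

# Assumption: only these ports are meant to be reachable from anywhere.
EXPECTED_PUBLIC_PORTS = {80, 443}


def is_open_to_internet(cidr):
    """True when the CIDR covers the whole IPv4 or IPv6 address space."""
    return ipaddress.ip_network(cidr, strict=False).prefixlen == 0


def unexpected_exposure(rules, allowed_ports=EXPECTED_PUBLIC_PORTS):
    """Return names of rules open to the Internet on a non-allowed port."""
    return [
        rule["name"]
        for rule in rules
        if is_open_to_internet(rule["cidr"]) and rule["port"] not in allowed_ports
    ]


if __name__ == "__main__":
    print(unexpected_exposure(SAMPLE_RULES))
```

The output of a check like this is a starting list of questions for the owning teams, not a verdict: some exposure may well be intentional.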

Stage 5: Understand the current IAM setup

Identity and Access Management, or IAM for short, is the apex of the headaches for security teams.

In this stage, the goal is to understand how authentication and authorization to cloud providers are currently set up. The problem, though, is twofold, as you’ll have to tackle this not only for human (i.e., engineers) but also for automated (i.e., workloads, CI jobs, etc.) access.

Cross Account Auditing in AWS

Concerning human access, the previous stage started looking at how engineering teams connect to Cloud Providers. Now is the time to expand on this:

- Where are identities defined? Is an Identity Provider (like G Suite, Okta, or AD) being used?

- Are the identities being federated in the Cloud Provider from the Identity Provider? Are the identities being synced automatically from the Identity Provider?

- Is SSO being used?

- Are named users being used as a common practice, or are roles with short-lived tokens preferred? Here the point is not to do a full IAM audit but to understand the standard practice.

- For high-privileged accounts, are good standards enforced? (e.g., password policy, MFA - preferably hardware)

- How is authorization enforced? Is the principle of least privilege generally followed, or are overly permissive (non fine-tuned) policies usually used? For example, CloudSecDocs has summaries of best practices for IAM in both AWS and GCP.

- How is Role-Based Access Control (RBAC) used? How is it set up, enforced, and audited?

- Is there a documented process describing how access requests are managed and granted?

- Is there a documented process describing how access deprovisioning is performed during offboarding?

For automated access:

- How do Accounts interact with each other? Are there any cross-Account permissions?

- Are long-running (static) keys and service accounts generally used, or are short-lived tokens (i.e., STS) usually preferred?

- How is authorization enforced? Is the principle of least privilege generally followed, or are overly permissive (non fine-tuned) policies usually used?

Visualization of Cross-Account Role Access, obtained via Cartography

It is important to stress that you won’t have time to review every single policy at this stage, at least for any decently sized environment. What you should be able to do, though, is get a grasp of the company’s general trends and overall maturity level.

We will tackle a proper security review later.

Stage 6: Understand the current monitoring setup

Next, pay special attention to the current setup for collecting, aggregating, and analyzing security logs across the entire estate:

- Are security-related logs collected at all?

- If so, which services offered by the Cloud Providers are already being leveraged? For AWS, are at least CloudTrail, CloudWatch, and GuardDuty enabled? For GCP, what about Cloud Monitoring and Cloud Logging?

- What kind of logs are already being ingested?

- Where are the logs collected? Are logs from different Accounts all ingested in the same place?

- What’s the retention policy for security-related logs?

- How are logs analyzed? Is a SIEM being used?

- Who has access to the SIEM and the raw storage of logs?

For some additional pointers, in my Continuous Visibility into Ephemeral Cloud Environments series, I’ve blogged about what could (and should) be logged:

Architecture Diagram - Security Logging in AWS and GCP
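The answers to the questions above can be recorded as a simple coverage matrix. The sketch below, under the assumption that you have noted which log sources are enabled per Account, lists what is missing against a baseline. Both the `REQUIRED_SOURCES` baseline and the `ENABLED_SOURCES` data are illustrative, not a recommendation.

```python
# Assumed baseline for illustration only; choose your own.
REQUIRED_SOURCES = {"cloudtrail", "guardduty"}

# Hypothetical findings gathered during the review, keyed by Account name.
ENABLED_SOURCES = {
    "prod": {"cloudtrail", "guardduty", "cloudwatch"},
    "staging": {"cloudtrail"},
    "sandbox": set(),
}


def coverage_gaps(enabled_by_account, required=REQUIRED_SOURCES):
    """Map each Account to the sorted list of required sources it lacks."""
    gaps = {}
    for account, enabled in enabled_by_account.items():
        missing = required - enabled
        if missing:
            gaps[account] = sorted(missing)
    return gaps


if __name__ == "__main__":
    for account, missing in coverage_gaps(ENABLED_SOURCES).items():
        print(f"{account}: missing {', '.join(missing)}")
```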

Then, focus on the response processes:

- Are any Intrusion Detection systems deployed?

- Are any Data Loss Prevention systems deployed?

- Are there any processes and playbooks to follow in case of an incident?

- Are there any processes to detect credential compromise situations?

- Are there any playbooks or automated tooling to contain tainted resources?

- Are there any playbooks or automated tooling to aid in forensic evidence collection for suspected breaches?

Stage 7: Understand the current secrets management setup

In this stage, the goal is to understand how secrets are generated and managed within the infrastructure:

- How are new secrets generated when needed? Manually or automatically?

- Where are secrets stored?

- Is a secrets management solution (like HashiCorp Vault, AWS Secrets Manager, or GCP Secret Manager) currently used?

- Are processes around secrets management defined? What about rotation and revocation of secrets?

High-Level Overview of Vault Integrations

For additional pointers, the Secrets Management section of CloudSecDocs has references to some other solutions in this space.

Once again, resist the urge to start “fixing” things for now and keep looking and documenting.
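While documenting, it can help to note how old each secret is, since that hints at whether rotation actually happens. Here is a minimal sketch that flags secrets not rotated within a given window. The secret names, dates, and the 90-day threshold are all assumptions for the example.

```python
from datetime import date, timedelta

# Hypothetical inventory: secret name -> date of last rotation.
SAMPLE_SECRETS = {
    "db-password": date(2022, 1, 10),
    "api-token": date(2022, 7, 1),
    "signing-key": date(2021, 11, 30),
}


def stale_secrets(secrets, today, max_age_days=90):
    """Return the sorted names of secrets last rotated more than max_age_days ago."""
    cutoff = today - timedelta(days=max_age_days)
    return sorted(
        name for name, last_rotated in secrets.items() if last_rotated < cutoff
    )


if __name__ == "__main__":
    print(stale_secrets(SAMPLE_SECRETS, today=date(2022, 8, 1)))
```

Passing `today` explicitly keeps the function deterministic, which makes the result easy to reproduce in notes or a wiki page.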

Stage 8: Identify existing security controls

In this stage, the goal is to understand which controls have already been implemented, maybe by a previous iteration of the Security team, and can already be leveraged:

- What security boundaries are defined? For example, are Service Control Policies (AWS) or Organization Policies (GCP) used?

- What off-the-shelf services offered by Cloud Providers are being used?

  - For AWS, what “Security, Identity, and Compliance” services are enabled? The main ones you might want to look for are:

    - Detection: Security Hub, GuardDuty, Inspector, Config, CloudTrail, Detective.

    - Infrastructure protection: Firewall Manager, Shield, WAF.

    - Data protection: Macie, CloudHSM.

    Relationships Between AWS Logging/Monitoring Services.

  - For GCP, what “Security and identity” services are enabled? The main ones are:

    - Detection: Security Command Center, Cloud Logging, Cloud IDS, Cloud Asset Inventory.

    - Infrastructure protection: Resource Manager, Identity-Aware Proxy, VPC Service Controls.

    - Data protection: Cloud Data Loss Prevention, Cloud Key Management.

- What other (custom or third party) solutions have been deployed?

For additional pointers, CloudSecDocs has sections describing off-the-shelf services for both AWS and GCP.

Stage 9: Get the low-hanging fruits

Finally, if you managed to reach this stage, you should have a clearer picture of the situation in the primary Cloud Provider. As a final stage for the Cloud-related phase of this review, you could then run a tactical scan (or benchmark suite) against your Organization.

The quickest way to get a snapshot of vulnerabilities and misconfigurations in your estate is to reference Security Hub for AWS and Security Command Center for GCP, provided they are already enabled.

Sample Dashboards for AWS Security Hub and GCP Security Command Center

Otherwise, on CloudSecDocs you can find some additional tools for both testing (AWS, GCP, Azure) and auditing (AWS, GCP, Azure) environments.

Note that this is not meant to be an exhaustive penetration test but a lightweight scan to identify high exposure and high impact misconfigurations or vulnerabilities already in Production.

Phase 2: Workloads

Next, let’s focus on Workloads: the services powering the company’s business offerings.

The main goal for this phase is to understand the current security maturity level of the different tech stacks involved.

Stage 1: Understand the high-level business offerings

As I briefly mentioned in the first part of this post, you can’t secure what you don’t understand. This concept becomes especially true when you are tasked with securing business workloads.

Hence, here the goal is to understand what key functionalities your company offers to its customers:

- How many key functionalities does the company have? For example, if you are a banking company, these functionalities could be payments, transactions, etc.

- How are the main functionalities designed? Are they built as microservices or as a monolith?

- Is there a clear split between environment types? (i.e., Production, Staging, Testing, etc.)

- Which functionalities are critical? (i.e., both in terms of data and customer needs)

Monolithic vs Microservice Architectures

If you are fortunate, your company might have already undergone the process (and pain) of defining a way to keep an inventory of business offerings and respective workloads and, most importantly, of keeping it up to date. Otherwise, like in most cases, you’ll have to partner with product teams to understand this.

Then, try to map business functionalities to technical workloads, understanding their purpose for the business:

- Which ones are Internet-facing?

- Which ones are customer-facing?

- Which ones are time-critical?

- Which ones are stateful? Which ones are stateless?

- Which ones are batch processing?

- Which ones are back-office support?

Stage 2: Identify the primary tech stack

Once the key functionalities have been identified, you’ll want to split them by technologies.

If you recall, the goal of Stage 3 (“Understand what is running in the Accounts”) of the Cloud-related phase of this review was to get a rough idea of what kind of technologies the company relies upon. Now is the time to go deeper by identifying what the main stack is.

For the rest of the post, I’ll assume this can be one of the three following macro-categories (each can then have multiple variations):

- Container-based (e.g., Kubernetes, ECS, etc.)

- Serverless (e.g., Lambda, Cloud Functions, etc.)

- VM-based (e.g., vanilla EC2)

Similar to the challenge we faced for Cloud Providers, it won’t be technically feasible to tackle everything simultaneously. Hence, start by identifying the primary tech stack, the one Production mainly relies upon, and start from it. Your company will probably rely on a mix (if not all) of those macro-areas: nonetheless, divide and conquer.

Kubernetes vs Serverless vs VMs

Once identified, consider this stack as your primary target. Go through the steps listed below, and only repeat them for the other technologies at the end.

Stage 3: Understand the network architecture

In Stage 4 of the Cloud-related phase of the review, you started making global considerations, like what the main entry points into the infrastructure are or how customers and engineers get access to the systems. In this stage, the goal is to understand what the network architecture of the workloads looks like.

Depending on the macro-category of technologies involved, you can ask different questions.

Kubernetes:

- Which (and how many) clusters do we have? Are they regional or zonal?

- Are they managed (EKS, GKE, AKS) or self-hosted?

- How do the clusters communicate with each other? What are the network boundaries?

- Are clusters single or multi-tenant?

- Are either the control plane or nodes exposed over the Internet?

- How do engineers connect? How can they run kubectl? Do they use a bastion or something like Teleport?

- What are the Ingresses?

- Are there any Stateful workloads running in these clusters?

Kubernetes Trust Boundaries - Courtesy of CNCF

Serverless:

- Which type of data stores are being used? For example, SQL-based (e.g., RDS), NoSQL (e.g., DynamoDB), or Document-based (e.g., DocumentDB)?

- Which type of application workers are being used? For example, Lambda or Cloud Functions?

- Is an API Gateway (incredibly named in the same way by both AWS and GCP! 🤯) being used?

- What is used to de-couple the components? For example, SQS or Pub/Sub?

A high-level architecture diagram of an early version of CloudSecList.com

VMs:

- What Virtual Machines are directly exposed to the Internet?

- Which Operating Systems (and versions) are being used?

- How are hosts hardened?

- How do engineers connect? Do they SSH directly into the hosts, or is a remote session manager (e.g., SSM or OS Login) used?

- What’s a pet, and what’s cattle?

Stage 4: Understand the current IAM setup

In this stage, the goal is to understand how authentication and authorization to workloads are currently set up.

These questions are general, regardless of the technology type:

- How are engineers interacting with workloads? How do they troubleshoot them?

- How is authorization enforced? Is the principle of least privilege generally followed, or are overly permissive (non fine-tuned) policies usually used?

- How is Role-Based Access Control (RBAC) used? How is it set up, enforced, and audited?

- Are workloads accessing any other cloud-native services (e.g., buckets, queues, databases)? If yes, how are authentication and authorization to Cloud Providers set up and enforced? Are they federated, maybe via OpenID Connect (OIDC)?

- Are workloads accessing any third party services? If yes, how are authentication and authorization set up and enforced?

OIDC Federation of Kubernetes in AWS - Courtesy of @mjarosie

For additional pointers, CloudSecDocs has sections describing how authentication and authorization in Kubernetes work.

Stage 5: Understand the current monitoring setup

The goal of this stage is to understand which logs are collected (and how) from workloads.

Some of the questions to ask are general, regardless of the technology type:

- Are security-related logs collected at all?

- What kind of logs are already being ingested?

- How are logs collected?

- Where are the logs forwarded?

These can then be adapted and tailored to the relevant tech type.

Kubernetes:

- Are audit logs collected?

- Are System Calls and Kubernetes Audit Events collected via Falco?

- Is a data collector like fluentd used to collect logs?

- Is the data collector deployed as a Sidecar or Daemonset?

High-Level Architecture of Falco

For additional pointers, CloudSecDocs has a section focused on Falco and its usage.

Serverless:

- How are applications instrumented?

- Are metrics and logs collected via a data collector like X-Ray or Datadog?

Sample X-Ray Dashboard

VMs:

- For AWS, is the CloudWatch Logs agent used to send log data to CloudWatch Logs from EC2 instances automatically?

- For GCP, is the Ops Agent used to collect telemetry from Compute Engine instances?

- Is an agent like OSQuery used to provide endpoint visibility?

Stage 6: Understand the current secrets management setup

In this stage, the goal is to understand how secrets are made available to workloads to consume.

- Where are workloads fetching secrets from?

- How are secrets made available to workloads? Via environment variables, the filesystem, etc.?

- Do workloads also generate secrets, or are they limited to consuming them?

- Is there a practice of hardcoding secrets?

- Assuming a secrets management solution (like HashiCorp Vault, AWS Secrets Manager, or GCP Secret Manager) is being used, how are workloads authenticating? What about authorization (RBAC)?

- Are secrets bound to a specific workload, or is any workload able to potentially fetch any other secret? Is there any separation or boundary?

- Are processes around secret management defined? What about rotation and revocation of secrets?

Vault's Sidecar for Kubernetes - Courtesy of HashiCorp
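To get a first feel for whether hardcoding secrets is common practice, a simple pattern scan over a few representative files can be enough. The sketch below looks for two well-known shapes: AWS access key IDs (which start with `AKIA` or `ASIA` followed by 16 uppercase alphanumerics) and PEM private key headers. The pattern set is deliberately tiny and is an assumption of this example; dedicated tools cover far more cases. The sample text uses the example key ID from AWS documentation.

```python
import re

# A deliberately small, illustrative pattern set.
PATTERNS = {
    "aws-access-key-id": re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b"),
    "private-key-header": re.compile(
        r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"
    ),
}

# Hypothetical config snippet; the key ID is the documented AWS example value.
SAMPLE_TEXT = """\
region = "eu-west-1"
aws_access_key_id = "AKIAIOSFODNN7EXAMPLE"
password = os.environ["DB_PASSWORD"]
"""


def find_secrets(text):
    """Return (line_number, pattern_label) pairs for every suspicious line."""
    findings = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        for label, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append((line_number, label))
    return findings


if __name__ == "__main__":
    for line_number, label in find_secrets(SAMPLE_TEXT):
        print(f"line {line_number}: {label}")
```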

Stage 7: Identify existing security controls

In this stage, the goal is to understand which controls have already been implemented.

It is unfeasible to list them all here, as they can depend highly on your actual workloads.

Nonetheless, try to gather information about what has already been deployed. For example:

- Any admission controllers (e.g., OPA Gatekeeper) or network policies in Kubernetes?

- Any third party agent for VMs?

- Any custom or third party solution?

Specifically for Kubernetes, CloudSecDocs has a few sections describing focus areas and security checklists.

Stage 8: Get the low-hanging fruits

As a final stage for the Workloads-related phase of this review, you could then run a tactical scan (or benchmark suite) against your key functionalities.

As for the previous stage, this is highly dependent on the actual functionalities and workloads developed within your company.

Nonetheless, on CloudSecDocs you can find additional tools for testing and auditing Kubernetes clusters.

Note that this is not meant to be an exhaustive penetration test but a lightweight scan to identify high exposure and high impact misconfigurations or vulnerabilities already in Production.

Phase 3: Code

Those paying particular attention might have noticed that I haven’t mentioned the words source code a single time so far. That’s not because I think code is not essential. Quite the opposite, as I reckon it deserves a phase of its own.

Thus, the main goal for this phase is to complete the review by mapping the environments and workloads to their originating source code and understanding how code reaches Production.

Stage 1: Understand the code’s structure

The goal of this stage is to understand how code is structured, as it will significantly affect the strategies you will have to use to secure it.

Conway’s Law does a great job in capturing this concept:

Any organization that designs a system (defined broadly) will produce a design whose structure is a copy of the organization’s communication structure.

This means that software usually ends up “shaped like” the organizational structure it is designed in or designed for.

Visualization of Conway's Law - Courtesy of Manu Cornet

In technical terms, the primary distinction to make is between the monorepo and the multi-repo philosophy for code organization:

- The monorepo approach uses a single repository to host all the code for the multiple libraries or services composing a company’s projects.

- The multi-repo approach uses several repositories to host the multiple libraries or services of a project developed by a company.

I will probably blog more in the future about how each of these philosophies affects security, but for now, try to understand how the company is organized and where the code lives.

Then, look for which security controls are already added to the repositories:

- Are CODEOWNERS being utilized?

- Are there any protected branches?

- Are code reviews via Pull Requests being enforced?

- Are linters automatically run on the developers’ machines before raising a Pull Request?

- Are static analysis tools (for the relevant technologies used) automatically run on the developers’ machines before raising a Pull Request?

- Are secrets detection tools (e.g., git-secrets) automatically run on the developers’ machines before raising a Pull Request?

Stage 2: Understand the adoption of Infrastructure as Code

The goal of this stage is to understand which resources are defined as code, and which are created manually.

- Which Infrastructure as Code (IaC) frameworks are being used?

- Are Cloud environments managed via IaC? If not, what is being excluded?

- Are Workloads managed via IaC? If not, what is being excluded?

- How are third party modules sourced and vetted?

Adoption of different IaC frameworks - Courtesy of The New Stack

Stage 3: Understand how CI/CD is set up

In this stage, the goal is to understand how code is built and deployed to Production.

- What CI/CD platform (e.g., GitHub, GitLab, Jenkins, etc.) is being used?

- Is IaC, for both Cloud environments and Workloads, automatically deployed via CI/CD?

  - How is Terraform applied?

  - How are container images built?

- Is IaC automatically tested and validated in the pipeline?

  - Is static analysis of Terraform code performed? (see Compliance as Code - Terraform on CloudSecDocs).

  - Is static validation of deployments (i.e., Kubernetes manifests, Dockerfiles, etc.) performed? (see Compliance as Code - OPA on CloudSecDocs).

  - Are containers automatically scanned for known vulnerabilities? (see Container Scanning Strategies on CloudSecDocs).

  - Are dependencies automatically audited and scanned for vulnerabilities?

Pipeline for container images - Courtesy of Sysdig

- How is code provenance guaranteed?

  - Are all container images generated from a set of chosen and hardened base images? (see Secure Dockerfiles on CloudSecDocs).

  - Are all the workloads fetching container images from a secure and hardened container registry? (see Image Pipeline on CloudSecDocs).

  - For Kubernetes, is Binary Authorization enforced to ensure only trusted container images are deployed to the infrastructure? (see Binary Authorization on CloudSecDocs).

  - Is a framework (like TUF, in-toto, providence) utilized to protect the integrity of the Supply Chain? (see Pipeline Supply Chain on CloudSecDocs).

- Have other security controls been embedded in the pipeline?

- Are there any documented processes for infrastructure and configuration changes?

- Is there a documented Secure Software Development Life Cycle (SSDLC) process?
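To illustrate what "static validation of deployments" can look like in a pipeline, here is a small sketch that inspects a Kubernetes Pod manifest (already parsed into a dictionary) for a few common issues. The three checks and the sample manifest are assumptions chosen for this example. It only looks at container-level `securityContext`, so a `runAsNonRoot` set at the Pod level would be reported as missing. Policy engines such as OPA express the same idea more robustly.

```python
# Hypothetical Pod manifest, as it would look after parsing YAML/JSON.
SAMPLE_POD = {
    "kind": "Pod",
    "metadata": {"name": "demo"},
    "spec": {
        "containers": [
            {
                "name": "app",
                "image": "registry.example.com/app:1.4.2",
                "securityContext": {"runAsNonRoot": True},
            },
            {
                "name": "debug",
                "image": "busybox",
                "securityContext": {"privileged": True},
            },
        ]
    },
}


def pod_findings(manifest):
    """Return human-readable findings for each container in a Pod manifest."""
    findings = []
    for container in manifest.get("spec", {}).get("containers", []):
        name = container.get("name", "<unnamed>")
        security = container.get("securityContext", {})
        if security.get("privileged", False):
            findings.append(f"{name}: runs privileged")
        # Container-level check only; Pod-level settings are ignored here.
        if security.get("runAsNonRoot") is not True:
            findings.append(f"{name}: runAsNonRoot not enforced")
        image = container.get("image", "")
        # Look for a tag or digest in the last path segment of the image.
        if ":" not in image.split("/")[-1] or image.endswith(":latest"):
            findings.append(f"{name}: image tag is missing or 'latest'")
    return findings


if __name__ == "__main__":
    for finding in pod_findings(SAMPLE_POD):
        print(finding)
```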

Stage 4: Understand how the CI/CD platform is secured

Finally, the goal of this stage is to understand how the chosen CI/CD platform itself is secured. In fact, as time passes, CI/CD platforms are becoming focal points for security teams, as a compromise of such systems might mean a total compromise of the overall organization.

A few questions to ask:

- How is access control defined?

  - Who has access to the CI/CD platform?

  - How does the CI/CD platform authenticate to code repositories?

  - How does the CI/CD platform authenticate to Cloud Providers and their environments?

  - How are credentials to those systems managed? Is the blast radius of a potential compromise limited?

  - Is the principle of least privilege followed?

  - Are long-running (static) keys generally used, or are short-lived tokens (e.g., via OIDC) usually preferred?

Credentials management in a pipeline - Courtesy of WeaveWorks

- What do the security monitoring and auditing of the CI/CD platform look like?

- Are CI runners hardened?

- Is there any isolation between CI and CD?

- How are 3rd party workflows sourced and vetted?

Security mitigations for CI/CD pipelines - Courtesy of Mercari

For additional pointers, CloudSecDocs has a section focused on CI/CD Providers and their security.

Let’s put it all together

Thanks for making it this far! 🎉

Hopefully, going through this exercise will allow you to have a clearer picture of your company’s current security maturity level. Plus, as a side-effect, you should now have a rough idea of the most critical areas which deserve more scrutiny.

The goal from here is to use the knowledge you uncovered to put together a well-thought-out roadmap that addresses both short-term (a.k.a. extinguishing the fires) and long-term goals (a.k.a. improving the overall security and maturity of the organization).

For convenience, here are all the stages of the review, grouped by phase, gathered in one handy chart:

The Stages of the Review, by Phase

As a last recommendation, it is crucial not to lose the invaluable knowledge you gathered throughout this review. My suggestion is to document as you go:

- Keep a journal (or wiki) of what you discover. Bonus points if it’s structured and searchable, as it will simplify keeping it up to date.

- Start creating a risk registry of all the risks you discover as you go. You might not be able to tackle them all now, but it will be invaluable to drive the long-term roadmap and share areas of concern with upper management.
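A risk registry does not need special tooling to get started. The sketch below keeps risks as small records and orders them by a likelihood × impact score. That scoring scheme is a common convention that I am assuming here, not something prescribed by this review, and the sample entries are invented.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    title: str
    area: str          # e.g., "Cloud", "Workloads", "Code"
    likelihood: int    # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int        # assumed scale: 1 (minor) to 5 (severe)

    @property
    def score(self):
        """Simple likelihood x impact score used for ordering."""
        return self.likelihood * self.impact


def prioritized(risks):
    """Return risks sorted from highest to lowest score."""
    return sorted(risks, key=lambda risk: risk.score, reverse=True)


# Hypothetical entries for illustration.
SAMPLE_RISKS = [
    Risk("Static CI keys with admin access", "Code", likelihood=3, impact=5),
    Risk("No log retention policy", "Cloud", likelihood=2, impact=3),
    Risk("SSH open to the Internet", "Workloads", likelihood=4, impact=4),
]

if __name__ == "__main__":
    for risk in prioritized(SAMPLE_RISKS):
        print(f"{risk.score:>2}  [{risk.area}] {risk.title}")
```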

Conclusions

In this post, part of the “Cloud Security Strategies” series, I presented a structured approach to reviewing the security architecture of a multi-cloud SaaS company and finding its most critical components.

It does represent my perspective and reflects my experiences, so it definitely won’t be a “one size fits all”, but I hope it can be a helpful baseline.

I hope you found this post valuable and interesting, and I’m keen to get feedback on it! If you found the information shared helpful, or if something is missing, or if you have ideas on how to improve it, please let me know on 🐣 Twitter or at 📢 feedback.marcolancini.it.

Thank you! 🙇‍♂️

Source: https://www.marcolancini.it/2022/blog-cloud-security-infrastructure-review/

-

I think he sent you a request for all those items and you approved it. You just didn't know what you were giving him.

Apparently anyone can see what you have in your inventory, and anyone can request whatever they want. Steam asks you, and if you click "Accept", the items go to that person.

So, if that's the case, I don't know that there's much you can do other than try talking to support.

Besides, what do you do with them? That junk isn't good for anything.

PS: If you play CS:GO on AWP-only servers, give me a shout; I also play in the evening (at night, actually).

-

35,000 code repos not hacked—but clones flood GitHub to serve malware

Thousands of GitHub repositories were forked (copied) with their clones altered to include malware, a software engineer discovered today.

While cloning open source repositories is a common development practice and even encouraged among developers, this case involves threat actors creating copies of legitimate projects but tainting these with malicious code to target unsuspecting developers with their malicious clones.

GitHub has purged most of the malicious repositories after receiving the engineer's report.

35,000 GitHub projects not hijacked

Today, software developer Stephen Lacy left everyone baffled when he claimed to have discovered a "widespread malware attack" on GitHub affecting some 35,000 software repositories.

Contrary to what the original tweet seems to suggest, however, "35,000 projects" on GitHub have not been affected or compromised in any manner.

Rather, the thousands of backdoored projects are copies (forks or clones) of legitimate projects purportedly made by threat actors to push malware.

Official projects like crypto, golang, python, js, bash, docker, and k8s remain unaffected. But that is not to say the finding is unimportant, as explained in the following sections.

While reviewing an open source project Lacy had "found off a google search," the engineer noticed the following URL in the code that he shared on Twitter:

BleepingComputer, like many, observed that when searching GitHub for this URL, there were 35,000+ search results showing files containing the malicious URL. Therefore, the figure represents the number of suspicious files rather than infected repositories:

We further discovered that, out of the 35,788 code results, more than 13,000 search results were from a single repository called 'redhat-operator-ecosystem.'

This repository, seen by BleepingComputer this morning, appears to have now been removed from GitHub, and shows a 404 (Not Found) error.

The engineer has since issued corrections and clarifications [1, 2] to his original tweet.

Malicious clones equip attackers with remote access

Developer James Tucker pointed out that cloned repositories containing the malicious URL not only exfiltrated a user's environment variables but additionally contained a one-line backdoor.

Exfiltration of environment variables by itself can provide threat actors with vital secrets such as your API keys, tokens, Amazon AWS credentials, and crypto keys, where applicable.

But, the single-line instruction (line 241 above) further allows remote attackers to execute arbitrary code on systems of all those who install and run these malicious clones.

Unclear timeline

As far as the timeline of this activity goes, we observed deviating results.

The vast majority of forked repositories were altered with the malicious code sometime within the last month—with results ranging from six to thirteen days to twenty days ago. However, we did observe some repositories with malicious commits dated as far back as 2015.

The most recent commits containing the malicious URL made to GitHub today are mostly from defenders, including threat intel analyst Florian Roth who has provided Sigma rules for detecting the malicious code in your environment.

Ironically, some GitHub users began erroneously reporting Sigma's GitHub repo, maintained by Roth, as malicious on seeing the presence of malicious strings (for use by defenders) inside Sigma rules.

GitHub has removed the malicious clones from its platform as of a few hours ago, BleepingComputer can observe.

As a best practice, remember to consume software from the official project repos and watch out for potential typosquats or repository forks/clones that may appear identical to the original project but hide malware.

This can become more difficult to spot as cloned repositories may continue to retain code commits with usernames and email addresses of the original authors, giving off a misleading impression that even newer commits were made by the original project authors. Open source code commits signed with GPG keys of authentic project authors are one way of verifying the authenticity of code.
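Before building or running a freshly cloned project, a quick local search for known indicators is a cheap extra check. The sketch below walks a directory and lists files containing a given string. The `PLACEHOLDER_INDICATOR` value is a made-up stand-in, since the actual malicious URL is not reproduced in this text; substitute a real indicator from a trusted source (such as the Sigma rules mentioned above).

```python
import os

# Placeholder only: NOT the real indicator from the campaign.
PLACEHOLDER_INDICATOR = "malicious.example/payload"


def files_containing(root, indicator):
    """Return sorted paths (relative to root) of files that contain indicator."""
    matches = []
    for directory, _subdirs, filenames in os.walk(root):
        for filename in filenames:
            path = os.path.join(directory, filename)
            try:
                # Undecodable bytes are skipped so binary files do not crash the scan.
                with open(path, "r", encoding="utf-8", errors="ignore") as handle:
                    if indicator in handle.read():
                        matches.append(os.path.relpath(path, root))
            except OSError:
                # Unreadable files are skipped in this sketch.
                continue
    return sorted(matches)


if __name__ == "__main__":
    for match in files_containing(".", PLACEHOLDER_INDICATOR):
        print(match)
```

A string search like this only catches indicators you already know about, so it complements, rather than replaces, checking that the repository is the official one.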