Leaderboard

Popular Content

Showing content with the highest reputation on 11/07/20 in all areas

-

Haven't you found out the secret of the independent, money-rich hackers yet? Link.

2 points

-

No insults. If you want to say something nasty, say it about @black_death_c4t; he is dangerous only to servers, not to people.

2 points

-

You have watched too many movies like Swordfish. As @Nytro says, the term hacker comes from the guys who did research and understood how systems work and how they can be used for purposes other than the intended ones. And who taught the rest of us, too. The Hollywood portrait of the hacker (independent, know-it-all, rich and above the system) is mostly a directors' fantasy. Since Stuxnet, pretty much all the big hackers who show up on TV are in fact black hats backed by one government or another. Every country wants to know what the others are doing and, sometimes, to put a spoke in their wheels, for example with ransomware on hospital servers.

1 point

-

Via OpenID Connect: https://developers.google.com/contacts/v3. In short:

- the desktop/mobile/web/custom application redirects the user to an authorization server run by Yahoo/Google/another identity provider, requesting the scope for reading the address book
- the user lands on the identity provider's page and is asked to log in
- after logging in, the user sees a pop-up asking whether they allow application X to read their contacts
- the user confirms the operation
- the user is redirected back to the application with a code in the URL
- the application takes that code and sends it back to the identity provider in exchange for an access token
- once it receives the access token, the application makes a request to the identity provider's API, using the access token, to retrieve the user's address book

I recommend https://www.youtube.com/watch?v=996OiexHze0 for a better understanding of the protocol.

1 point
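The steps in the post above correspond to the OAuth 2.0 authorization code grant, which OpenID Connect builds on. A minimal Python sketch of the application side follows. It only builds and parses the messages and does no network I/O. The endpoints, client ID, redirect URI and scope name are all hypothetical placeholders, not values from any real identity provider.

```python
import secrets
from urllib.parse import urlencode, urlparse, parse_qs

# Hypothetical values, for illustration only; a real identity provider
# publishes its own endpoints and issues the client ID.
AUTHORIZE_ENDPOINT = "https://idp.example.com/oauth2/authorize"
TOKEN_ENDPOINT = "https://idp.example.com/oauth2/token"
CLIENT_ID = "my-client-id"
REDIRECT_URI = "https://app.example.com/callback"


def new_state():
    """Random value echoed back by the provider, used to detect forged callbacks."""
    return secrets.token_urlsafe(16)


def build_authorization_url(scope, state):
    """URL the application redirects the user to, requesting a scope."""
    query = urlencode({
        "response_type": "code",
        "client_id": CLIENT_ID,
        "redirect_uri": REDIRECT_URI,
        "scope": scope,
        "state": state,
    })
    return f"{AUTHORIZE_ENDPOINT}?{query}"


def extract_code(callback_url, expected_state):
    """Read the code from the URL the user is redirected back to."""
    params = parse_qs(urlparse(callback_url).query)
    if params.get("state", [None])[0] != expected_state:
        raise ValueError("state mismatch")
    if "code" not in params:
        raise ValueError("no authorization code in callback")
    return params["code"][0]


def build_token_request(code, client_secret):
    """Form fields the application POSTs to TOKEN_ENDPOINT for an access token."""
    return {
        "grant_type": "authorization_code",
        "code": code,
        "redirect_uri": REDIRECT_URI,
        "client_id": CLIENT_ID,
        "client_secret": client_secret,
    }


def bearer_header(access_token):
    """Header sent with the API request that fetches the address book."""
    return {"Authorization": f"Bearer {access_token}"}
```

The `state` parameter is not mentioned in the post; it is included here because the redirect back to the application is otherwise easy to forge.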

-

Enough with the insults. Everyone can understand what they want, both about the term "hacker" and about the places where hackers operate. For example, my opinion is simple: there are hardly any hackers left. And by hackers I am fairly sure I mean something different both from you and from people with many years of experience in "security". More precisely, for me a hacker is a person who:

- does research and discovers new things, mainly techniques
- does not do it for money (that is, not working at a company that pays them to do research and publish it as advertising)
- makes what they discover public, for free (not at a conference where a ticket costs 2000 USD)

Examples: AlephOne, who taught us all what a buffer overflow is, rainforestpuppy, who taught us SQL injection, and many others. Nowadays there are hardly any, or very few. So the only living hacker remains @black_death_c4t, also nicknamed "the Hookah Hacker".

I know you are referring to people who do various things, either illegal or borderline legal, to make money from their activities. I have given my opinion, you have other opinions, and that is normal; it is a free Internet and we have to accept that we do not all think alike. So we should not insult those who hold a different opinion from ours. This is not a field where one person is right and another is wrong; we are all right.

1 point

-

You are still an idiot. Take it easy. Name one "hacker" who does not "hang out" on the clear web. Where do you think the money is made?! On onions? Honestly, do not try to explain back/end to us...

1 point

-

© Greg Nash

Officials on alert for potential cyber threats after a quiet Election Day

Election officials are cautiously declaring victory after no reports of major cyber incidents on Election Day. But the long shadow of 2016, when the U.S. fell victim to extensive Russian interference, has those same officials on guard for potential attacks as key battleground states tally up remaining ballots.

Agencies that have worked to bolster election security over the past years are still on high alert during the vote-counting process, noting that the election is not over even if ballots have already been cast.

Election officials at all levels of government have been hyper-focused on the security of the voting process since 2016, when the nation was caught off-guard by a sweeping and sophisticated Russian interference effort that included targeting election infrastructure in all 50 states, with Russian hackers gaining access to voter registration systems in Florida and Illinois.

While there was no evidence that any votes were changed or voters prevented from casting a ballot, the targeted efforts created renewed focus on the cybersecurity of voting infrastructure, along with the improving ties between the federal government and state and local election officials.

In the intervening years, former DHS Secretary Jeh Johnson designated elections as critical infrastructure, and Trump signed into law legislation in 2018 creating CISA, now the main agency coordinating with state and local election officials on security issues.

In advance of Election Day, CISA established a 24/7 operations center to help coordinate with state and local officials, along with social media companies, election machine vendors and other stakeholders.

Hovland, who was in the operations center Tuesday, cited enhanced coordination as a key factor for securing this year's election, along with cybersecurity enhancements including sensors on infrastructure in all 50 states to sense intrusions.
Top officials were cautiously optimistic Wednesday about how things went. Sen. Mark Warner (D-Va.), the ranking member on the Senate Intelligence Committee, said it was clear agencies including Homeland Security, the FBI and the intelligence community had "learned a ton of lessons from 2016." He cautioned that "we're almost certain to discover something we missed in the coming weeks, but at the moment it looks like these preparations were fairly effective in defending our infrastructure."

A major election security issue on Capitol Hill over the past four years has focused on how to address election security threats, particularly during the COVID-19 pandemic, when election officials were presented with new challenges and funding woes.

Congress has appropriated more than $800 million for states to enhance election security since 2018, along with an additional $400 million in March to address pandemic-related obstacles. But Democrats and election experts have argued the $800 million was just a fraction of what's required to fully address security threats, such as funding permanent cybersecurity professionals in every voting jurisdiction, and updating vulnerable and outdated election equipment.

Threats from foreign interference have not disappeared, and threats to elections will almost certainly continue as votes are tallied, and into future elections. A senior CISA official told reporters late Tuesday night that the agency was watching for threats including disinformation, the defacement of election websites, distributed denial of service attacks on election systems and increased demand on vote reporting sites taking systems offline.

With Election Day coming only weeks after Director of National Intelligence John Ratcliffe and other federal officials announced that Russia and Iran had obtained U.S. voter data and were attempting to interfere in the election process, the threats were only underlined.

Via msn.com

1 point

-

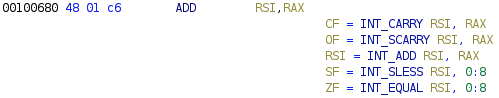

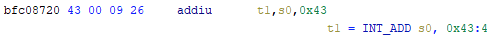

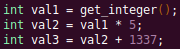

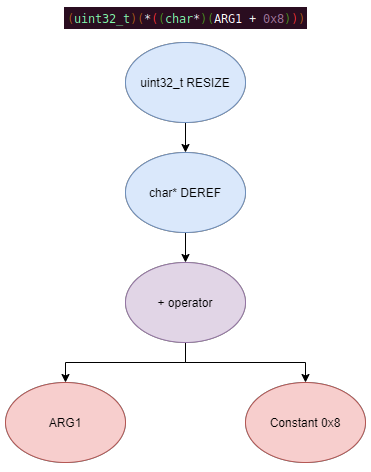

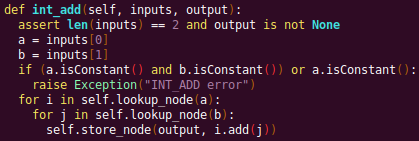

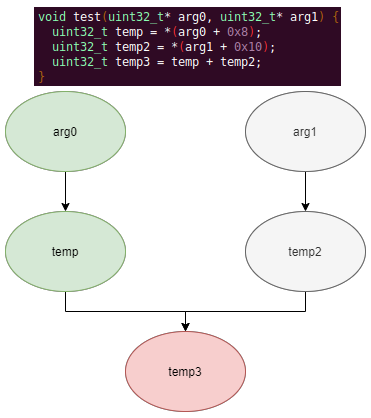

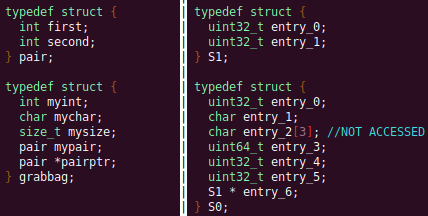

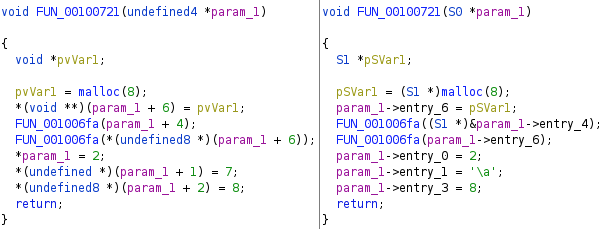

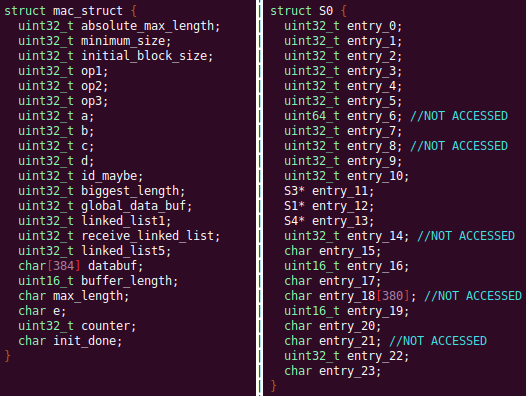

Automated Struct Identification with Ghidra

By Jeffball - November 04, 2020

At GRIMM, we do a lot of vulnerability and binary analysis research. As such, we often seek to automate some of the analysis steps and ease the burden on the individual researcher. One task which can be very mundane and time consuming for certain types of programs (C++, firmware, etc.) is identifying structures' fields and applying the structure types to the corresponding functions within the decompiler. Thus, this summer we gave one of our interns, Alex Lin, the task of developing a Ghidra plugin to automatically identify a binary's structs and mark up the decompilation accordingly. Alex's writeup below describes the results of the project, GEARSHIFT, which automates struct identification of function parameters by symbolically interpreting Ghidra's P-Code to determine how each parameter is accessed. The Ghidra plugin described in this blog can be found in our GEARSHIFT repository.

Background

Ghidra is a binary reverse engineering tool developed by the National Security Agency (NSA). To aid reverse engineers, Ghidra provides a disassembler and decompiler that is able to recover high-level C-like pseudocode from assembly, allowing reverse engineers to understand the binary much more easily. Ghidra supports decompilation for over 16 architectures, which is one advantage of Ghidra compared to its main competitor, the Hex-Rays Decompiler.

One great feature of Ghidra is its API; almost everything Ghidra does in the backend is accessible through Ghidra's API. Additionally, the documentation is very well written, allowing the API functions to be easily understood.

Techniques

This section describes the high-level techniques GEARSHIFT uses in order to identify structure fields. GEARSHIFT performs static symbolic analysis on the data dependency graph from Ghidra's Intermediate Language in order to infer the structure fields.
Intermediate Language

Oftentimes, it's best to find the similarities in many different ideas, and abstract them into one, for ease of understanding. This is precisely the idea behind intermediate languages. Because there exist numerous architectures, e.g. x86, ARM, MIPS, etc., it isn't ideal to deal with each one individually. An intermediate language representation is created to be able to support and generalize many different architectures. Each architecture can then be transformed into this intermediate language so that they can all be treated as one. Each analysis will only need to be implemented on the intermediate language, rather than on every architecture.

Ghidra's intermediate language is called P-Code. Every single instruction in P-Code is well documented. Ghidra's disassembly interface has the option to display the P-Code representation of instructions, which can be found here:

Figure: Enable P-Code Representation in Ghidra

As an example of what P-Code looks like, a few instructions from different architectures and their respective P-Code representations are shown below. With the basic set of instructions defined by the P-Code specification, all of the instructions from any architecture that Ghidra supports can be accurately modeled. Further, as GEARSHIFT operates on P-Code, it automatically supports all architectures supported by Ghidra, and new architectures can be supported by implementing a lifter to lift the desired architecture to P-Code.

Figures: x86 add instruction; MIPS addiu instruction; ARM add instruction

Symbolic Analysis

Symbolic analysis has recently become popular, and a few symbolic analysis engines exist, such as angr, Manticore, and Triton. The main idea behind symbolic analysis is to execute the program while treating each unknown, such as a program or function input, as a variable. Then, any values derived from that value will be represented as a symbolic expression, rather than a concrete value. Let's look at an example.
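The pseudocode figure is not reproduced in this copy. As a rough stand-in, the minimal Python sketch below propagates one symbolic input through two assignments. The `Sym` class is hypothetical and written only for illustration; it is not taken from GEARSHIFT or from any of the engines named above.

```python
class Sym:
    """A symbolic expression stored as a small tree: (op, left, right)."""

    def __init__(self, op, left=None, right=None):
        self.op, self.left, self.right = op, left, right

    @staticmethod
    def wrap(value):
        # Concrete Python numbers become constant leaves.
        return value if isinstance(value, Sym) else Sym("const", value)

    def __mul__(self, other):
        return Sym("*", self, Sym.wrap(other))

    def __add__(self, other):
        return Sym("+", self, Sym.wrap(other))

    def __str__(self):
        if self.op in ("var", "const"):
            return str(self.left)
        return f"({self.left} {self.op} {self.right})"


val1 = Sym("var", "val1")  # unknown input, kept symbolic
val2 = val1 * 5            # derived value stays an expression
val3 = val2 + 1337         # expressions keep propagating
print(val3)                # ((val1 * 5) + 1337)
```

No concrete value for `val1` is ever needed: each assignment only grows the expression tree.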
Figure: Example Pseudocode

In the above pseudocode, the only unknown input is val1. This value is stored symbolically. Thus, when the second line is executed, the value stored in val2 will be val1 * 5. Similarly, the symbolic expressions continue to propagate, and val3 will be val1 * 5 + 1337.

The main issue with symbolic execution is the path explosion problem, i.e. how to handle the analysis when a branch in the code is hit. Because symbolic execution seeks to explore the entire program, both paths from the branch will be taken, and the condition (and its inverse) for that branch will be imposed on both states after the branch. While sound in theory, many issues arise when analyzing larger programs. Each conditional that is introduced will exponentially increase the possible paths that can be taken in the code. Storing the symbolic state then presents a storage resource constraint, and analysis of the state presents a time resource constraint.

Data Dependency

Data dependency is a useful abstract idea for analyzing code. The idea is that each instruction changes some sort of state in the program, whether it is a register, some stack variable, or memory on the heap. This changed state may then be used elsewhere in the program, often in the next few instructions. We say that when the state affected by instruction A is used by another state B that is affected by some instruction, then B depends on A. Thus, if represented in a graph, there is a directed edge from A to B. The combination of all such dependencies in a program is the data dependency graph.

Ghidra uses its P-Code representation to provide the data dependency graph in an architecture-independent manner. Ghidra represents each state (register, variable, or memory) as a Varnode in the graph. The children of a node can be fetched with the getDescendants function, and the parent of a node with the getDef function.
As Ghidra uses Static Single Assignment (SSA) form, each Varnode will only have a single parent, and Varnodes are chained together through P-Code instructions.

Implementation

Using a combination of these techniques, we can identify the structs of function parameters. GEARSHIFT's method drew inspiration from Value Set Analysis, and is similar to penhoi's value-set-analysis implementation for analyzing x86-64 binaries. As a store or load will be performed at some offset on the struct pointer, the plugin can infer the members of a struct. To infer the size of the member, either the size of the load/store can be used (byte, word, dword, qword), or if two contiguous members are accessed, we know to draw a boundary between the two accessed members.

This plugin performs symbolic execution on the data dependency nodes. The P-Code instructions for a function parameter are traversed via a Depth-First Search (DFS) of the data dependency graph, recording all stores and loads performed.

P-Code Symbolic Execution

The plugin performs the actual symbolic execution by emulating the state in a P-Code interpreter for each P-Code instruction and storing the abstract symbolic expressions, with the function parameters as symbolic variables. Symbolic expressions are stored in a binary expression tree, which is defined by the Node class. Let's take an example of a symbolic expression and look at how the expression would be stored.

Figure: Example Symbolic Expression

Now let's look at a P-Code interpreter, such as the INT_ADD opcode interpreter:

Figure: GEARSHIFT INT_ADD P-Code Interpreter

In the INT_ADD case, there are two parameters. The first parameter is usually a Varnode, and the second might be a constant or a Varnode added to the first parameter. This function locates the symbolic expressions for the two parameters and outputs an add symbolic expression which combines the two. Most of the P-Code opcodes are implemented in a similar manner.
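The interpreter figures are missing from this copy, so the following is a minimal Python sketch of the same idea and not GEARSHIFT's actual code. It assumes a simplified, hypothetical instruction format `(opcode, output, inputs, size)`, in which Varnodes are plain strings and constants are integers. Real P-Code `LOAD` and `STORE` operations carry extra operands, such as an address space ID, that are left out here.

```python
from dataclasses import dataclass
from typing import Optional, Union


@dataclass(frozen=True)
class Node:
    """Binary expression tree node: a leaf ('arg'/'const') or an operation."""
    op: str
    left: Union["Node", str, int, None] = None
    right: Optional["Node"] = None


def lookup(state, operand):
    """Map an operand to its symbolic expression (ints are constants)."""
    if isinstance(operand, int):
        return Node("const", operand)
    return state[operand]


def split_base_offset(expr):
    """Return (base, offset) for expressions shaped like base or base + const."""
    if expr.op == "add" and expr.right.op == "const":
        return expr.left, expr.right.left
    return expr, 0


def interpret(ops, params):
    """Symbolically run the simplified ops and return the recorded accesses."""
    state = {name: Node("arg", name) for name in params}
    accesses = []  # (kind, base expression, offset, size in bytes)
    for opcode, out, inputs, size in ops:
        if opcode == "INT_ADD":
            # Combine the two operand expressions into one 'add' expression.
            state[out] = Node("add", lookup(state, inputs[0]), lookup(state, inputs[1]))
        elif opcode == "LOAD":
            addr = lookup(state, inputs[0])
            accesses.append(("load", *split_base_offset(addr), size))
            state[out] = Node("deref", addr)
        elif opcode == "STORE":
            addr = lookup(state, inputs[0])
            accesses.append(("store", *split_base_offset(addr), size))
    return accesses


ops = [
    ("INT_ADD", "t1", ("arg1", 8), 8),  # t1 = arg1 + 8
    ("LOAD", "t2", ("t1",), 4),         # t2 = 4-byte load from arg1 + 8
    ("STORE", None, ("arg1",), 1),      # 1-byte store to arg1 + 0
]
accesses = interpret(ops, ["arg1"])
```

Each recorded access says that a member of the given size lives at that offset from the parameter, which is the raw material for the struct inference described in the article.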
There are a few functions relevant to P-Code interpretation that require heavy consideration: lookup_node, store_node, and the CALL opcode. The first two functions handle the mapping between Ghidra's Varnodes and the symbolic expression tree representation, whereas the CALL opcode handles the interprocedural analysis (described in the next section).

One small problem occurs during the symbolic expression retrieval due to the nature of the DFS being performed. If an instruction uses a Varnode which has not had its originating P-node traversed yet, the corresponding symbolic expression will not have been defined and will be unavailable for use in the interpreter. To solve this issue, GEARSHIFT traverses backwards from the node whose definition is needed, until the node's full definition is obtained, with function arguments as the base case. This traversal is performed in the plugin's get_node_definition function. As an example of why this issue might occur, consider the below function and data dependency graph:

Figure: Example Program With Undefined Nodes in a Depth First Search

Because we are traversing in a DFS manner from the function parameters, we may require nodes that have not yet been encountered. In this case, we are finding the definition of temp3, which depends on temp2, yet temp2 is not yet defined, since the DFS has not reached that node. In this example, GEARSHIFT will traverse to temp2's and then arg1's Varnodes in order to define temp2 for use in temp3.

Interprocedural Analysis

As a function parameter's struct members may be passed into another function, it is extremely important to perform interprocedural analysis to ensure any information based on the called function's loads and stores will be captured. For example, consider the example programs shown below. In the first case, we have to analyze function2 to infer that input->a is of type char*. Additionally, a function can return a value that may later be used to perform stores and loads.
In the second example, we need to know that the return value of function2 is input->a to be able to infer that the store, *(c) = 'C';, indicates that input->a is a char*.

To support these ideas, two types of analysis are required. One is forward analysis, which is what we have been doing by performing a DFS on function parameters and recording stores and loads. The second is backwards analysis, i.e. looking at all the possible return value Varnodes from a function and obtaining their symbolic definitions in relation to function parameters. This switching between forward and reverse analysis is where the project gets its name.

Struct Interpolation

The final step is to interpolate the structs based on the recorded loads and stores. In theory, it is simple to interpolate the struct with the gathered information. If a (struct, offset, size) is dereferenced or loaded, we know that value is a pointer to either a primitive, struct, or array. Now we just have to traverse this recursively and interpolate members recursively, so that we can support arbitrary structures. This is implemented in GEARSHIFT's create_struct function.

However, one final issue remains: how do we know whether a struct dereference is a struct, array, or primitive? Differentiating between a primitive and a struct is easy, since a struct would contain dereferences, whereas a primitive would not. However, differentiating between a struct and an array is a more difficult problem. Both a struct and an array contain dereferences. GEARSHIFT's solution, which may not be the best, is to use the idea of loop variants. Intuitively, we know that if we have an array, then we will be looping over the array at some point in the function. Thus, there must exist a loop and the array must be accessed via multiple indices. This method works well in the presence of loops which iterate through the array starting from index 0.
However, we have to consider the case where iteration begins at an offset, and the case where only a single array index is accessed. In the first case, it is something of a grey area whether this would be a struct or an array. If no other members exist prior to the iteration offset, then it is likely an array. However, if there are unaligned or varying sized accesses, then it is likely a struct. In the second case, based on the information available (a single index access), it can be argued that this item is better represented by a struct, as the reverse engineer will only see it accessed as such.

Finally, once structs are interpolated, GEARSHIFT uses Ghidra's API to automatically define these inferred structs in Ghidra's DataTypeManager and retype function parameters. Additionally, it implements type propagation by logging the arguments to each interprocedural call, and if any of them correspond to a defined struct type, then that type is applied.

Results

As an example of GEARSHIFT's struct recovery ability, let's analyze the GEARSHIFT example program. After opening the example program in Ghidra and running GEARSHIFT on the initgrabbag function, it recovers definitions for the two structs, as shown below. In the image below, the original structs are shown on the left, and the recovered structs are shown on the right:

Figure: Example Program Struct Recovery

Additionally, GEARSHIFT automatically updates the function definition, providing a cleaner decompilation:

Figure: Example Program Decompilation Improvements

While these results are incredibly accurate, the example program is a custom test case, which may not be very convincing. Instead, let's analyze a more practical example. During the Hack-A-Sat CTF 2020, the Launch Link challenge involved reversing a stripped MIPS firmware image that included a large number of complex structs. After solving the challenge, our team wrote a writeup that can be found here.
Through many hours of manual reverse engineering, one of the structs we ended up with is shown on the left in the below image and the corresponding GEARSHIFT recovered struct is shown on the right. GEARSHIFT gives amazing results, with the auto-generated struct actually being more accurate than the one obtained via manual reverse engineering. Furthermore, as the firmware is a MIPS binary, this example demonstrates GEARSHIFT's flexibility to work on any architecture.

Figure: Hack-A-Sat CTF 2020 Launch Link Struct Recovery

Future Work

While the first iteration of GEARSHIFT can provide useful struct information for most programs, more complex structures can cause issues. Previous work in this area has highlighted a number of common problems in structure recovery algorithms, some of which affect GEARSHIFT as well. For instance, GEARSHIFT does not yet handle:

- Multiple Accesses to the Same Structure Offset. If a structure has a union that accesses the data in multiple ways, GEARSHIFT will not be able to handle this case.
- Duplicate Structures. Currently, two different structs will be defined even if the same struct is used in multiple places. One simple solution may be to merge structures with similar signatures. However, this approach will likely result in false positives.
- Function and Global Variables. At the moment, GEARSHIFT only operates on function parameters and will not recover structures that are used as function/global variables.

Regardless of the above issues, GEARSHIFT can provide useful structure information in most cases. Further, as a result of only utilizing static analysis, GEARSHIFT can even provide structure recovery information on binaries that the reverser cannot execute, such as the Hack-A-Sat CTF binary described above.

Conclusion

Structure recovery is often one of the first steps in gaining an understanding of a program when reversing. However, manually generating structure definitions and applying them throughout a binary can be especially tedious.
GEARSHIFT helps solve this problem by automating the structure creation process and applying the structure definitions to the program. These structure definitions can then be utilized for further reversing efforts, or a variety of other analyses, such as generating a first pass of an argument specification for in-memory fuzzing.

This type of research is a key part of GRIMM's application security practice. GRIMM's application security team is well versed in reverse engineering, such as this blog describes, as well as many other areas of vulnerability research. If your organization needs help reversing and auditing software that you depend on, or identifying and mitigating vulnerabilities in your own products, feel free to contact us.

Source: https://blog.grimm-co.com/2020/11/automated-struct-identification-with.html

1 point
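The struct interpolation step described in the write-up above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not GEARSHIFT's create_struct. The function name `infer_layout`, the `(offset, size)` input format and the generated member names are all hypothetical. It applies only the two rules the article states: use the access width as the member size, and draw a boundary where the next accessed member begins.

```python
def infer_layout(accesses):
    """Turn (offset, size) accesses on one pointer into a member list.

    Returns (offset, size, name) tuples sorted by offset; bytes never
    accessed are reported as 'undefined' gaps.
    """
    sizes = {}
    for offset, size in accesses:
        # Keep the widest access seen at each offset.
        sizes[offset] = max(size, sizes.get(offset, 0))

    offsets = sorted(sizes)
    layout = []
    end = 0  # first byte not yet covered by a member
    for i, offset in enumerate(offsets):
        if offset > end:
            layout.append((end, offset - end, f"undefined_{end:#x}"))
        size = sizes[offset]
        if i + 1 < len(offsets):
            # The next accessed offset starts a new member, so clip there.
            size = min(size, offsets[i + 1] - offset)
        layout.append((offset, size, f"field_{offset:#x}"))
        end = offset + size
    return layout


# Accesses as a symbolic pass might record them for a single parameter.
layout = infer_layout([(0, 8), (8, 4), (16, 8), (8, 4)])
for offset, size, name in layout:
    print(f"+{offset:#04x}  {size} bytes  {name}")
```

Clipping at the next accessed offset means overlapping accesses, such as a union read at two different widths, are flattened into separate members. That matches the first limitation listed under Future Work.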

-

A cousin of mine shared a Facebook post by some woman (who has nothing to do with anything, a random Facebook person) containing a video of an Antena3 interview in which 2 doctors say the virus is just a flu. The post is from October 15 and has 1500 idiot-shares. Now, with a quick 5-minute Google search, I found 2 things:

1. The video is from June 17 at the LATEST, not October 15, so it is no longer current (the number of cases and everything that has been learned in the meantime...)
2. I found one of the 2 doctors, and it seems he has changed his opinion in the meantime: https://www.facebook.com/radu.stoica.5074
3. I did not find the other doctor, because there are many people with that name and I am too lazy: https://www.facebook.com/public/Ioan-Cordos

What would I do?

1. I would ban the account of the idiot who uploaded that video (she did not reshare it from somewhere): spreading fake news
2. I would temporarily ban all the idiots who share posts from some idiot on Facebook: involuntary spreading of fake news, low IQ, forgivable
3. I would fire those doctors and make them appear everywhere to apologize and say that they are idiots. And I would not even let them work at Mega-Image, because maybe "a mango is just an apple" too, or who knows what else.

As for the doctors and the other people who denied this virus, things are simple: hey, you do not know what this is about? Then shut the hell up. You think it is this way or that way? Keep it to yourself. In other words, if you do not know something, keep your head down and do not talk crap on TV.

Things like this are very common on the net in general, not just now. You occasionally see "images from the protests" that are actually years old and from completely different events, truncated or made-up articles, and a pile of other garbage. As someone said: the good part is that everyone has access to the Internet; the bad part is that everyone has access to the Internet. What people do not understand is "how" this Internet "works". But I think many do understand, and it makes no difference...

1 point

-

Deepfence Runtime Threat Mapper

The Deepfence Runtime Threat Mapper is a subset of the Deepfence cloud native workload protection platform, released as a community edition. This community edition provides users with the following features:

- Visualization: Visualize Kubernetes clusters, virtual machines, containers and images, running processes, and network connections in near real time.
- Runtime Vulnerability Management: Perform vulnerability scans on running containers and hosts as well as container images.
- Container Registry Scanning: Check for vulnerabilities in images stored on Docker private registry, AWS ECR, Azure Container Registry and Harbor registries. Support for more container registries, including JFrog and Google Container Registry, will be added soon to the community edition.
- CI/CD Scanning: Scan images as part of existing CI/CD pipelines such as CircleCI and Jenkins.
- Integrations with SIEM, Notification Channels & Ticketing: Ready-to-use integrations with Slack, PagerDuty, HTTP endpoint, Jira, Splunk, ELK, Sumo Logic and Amazon S3.

Live Demo

https://community.deepfence.show/
Username: community@deepfence.io
Password: mzHAmWa!89zRD$KMIZ@ot4SiO

Contents

- Architecture
- Features
- Getting Started
  - Deepfence Management Console
    - Pre-Requisites
    - Installation
  - Deepfence Agent
    - Pre-Requisites
    - Installation
      - Deepfence Agent on Standalone VM or Host
      - Deepfence Agent on Amazon ECS
      - Deepfence Agent on Google GKE
      - Deepfence Agent on Self-managed/On-premise Kubernetes
- How do I use Deepfence?
  - Register a User
  - Use Case - Visualization
  - Use Case - Runtime Vulnerability Management
  - Use Case - Registry Scanning
  - Use Case - CI/CD Integration
  - Use Case - Notification Channel and SIEM Integration
  - API Support
- Security
- Support

Architecture

A pictorial depiction of the Deepfence architecture is below.

Feature Availability

| Features | Runtime Threat Mapper (Community Edition) | Workload Protection Platform (Enterprise Edition) |
| --- | --- | --- |
| Discover & Visualize Running Pods, Containers and Hosts | ✔️ (up to 100 hosts) | ✔️ (unlimited) |
| Runtime Vulnerability Management for hosts/VMs | ✔️ (up to 100 hosts) | ✔️ (unlimited) |
| Runtime Vulnerability Management for containers | ✔️ (unlimited) | ✔️ (unlimited) |
| Container Registry Scanning | ✔️ | ✔️ |
| CI/CD Integration | ✔️ | ✔️ |
| Multiple Clusters | ✔️ | ✔️ |
| Integrations with SIEMs, Slack and more | ✔️ | ✔️ |
| Compliance Automation | ❌ | ✔️ |
| Deep Packet Inspection of Encrypted & Plain Traffic | ❌ | ✔️ |
| API Inspection | ❌ | ✔️ |
| Runtime Integrity Monitoring | ❌ | ✔️ |
| Network Connection & Resource Access Anomaly Detection | ❌ | ✔️ |
| Workload Firewall for Containers, Pods and Hosts | ❌ | ✔️ |
| Quarantine & Network Protection Policies | ❌ | ✔️ |
| Alert Correlation | ❌ | ✔️ |
| Serverless Protection | ❌ | ✔️ |
| Windows Protection | ❌ | ✔️ |
| Highly Available & Multi-node Deployment | ❌ | ✔️ |
| Multi-tenancy & User Management | ❌ | ✔️ |
| Enterprise Support | ❌ | ✔️ |

Getting Started

The Deepfence Management Console is first installed on a separate system. The Deepfence agents are then installed onto bare-metal servers, virtual machines, or Kubernetes clusters where the application workloads are deployed, so that the host systems, or the application workloads, can be scanned for vulnerabilities.
A pictorial depiction of the Deepfence security platform is as follows:

Deepfence Management Console

Pre-Requisites

| Feature | Requirements |
| --- | --- |
| CPU: No. of cores | 8 |
| RAM | 16 GB |
| Disk space | At least 120 GB |
| Port range to be opened for receiving data from Deepfence agents | 8000 - 8010 |
| Port to be opened for web browsers to be able to communicate with the Management Console to view the UI | 443 |
| Docker binaries | At least version 18.03 |
| Docker-compose binary | Version 1.20.1 |

Installation

Installing the Management Console is as easy as:

- Download the file docker-compose.yml to the desired system.
- Execute the following command:

docker-compose -f docker-compose.yml up -d

This is the minimal installation required to quickly get started on scanning various container images. The necessary images may now be downloaded onto this Management Console and scanned for vulnerabilities.

Deepfence Agent

In order to check a host for vulnerabilities, or if the Docker images or containers that have to be checked for vulnerabilities are saved on different hosts, the Deepfence agent needs to be installed on those hosts.

Pre-Requisites

| Feature | Requirements |
| --- | --- |
| CPU: No. of cores | 2 |
| RAM | 4 GB |
| Disk space | At least 30 GB |
| Connectivity | The host on which the Deepfence Agent is to be installed is able to communicate with the Management Console on port range 8000-8010. |
| Linux kernel version | >= 4.4 |
| Docker binaries | At least version 18.03 |
| Deepfence Management Console | Installed on a host with IP address a.b.c.d |

Installation

The installation procedure for the Deepfence agent depends on the environment that is being used. Instructions for installing the Deepfence agent on some of the common platforms are given in detail below.

Deepfence Agent on Standalone VM or Host

Installing the Deepfence Agent is now as easy as running one command. In the following docker run command, replace x.x.x.x with the IP address of the Management Console:

docker run -dit --cpus=".2" --name=deepfence-agent --restart on-failure --pid=host --net=host --privileged=true -v /var/log/fenced -v /var/run/docker.sock:/var/run/docker.sock -v /:/fenced/mnt/host/:ro -v /sys/kernel/debug:/sys/kernel/debug:rw -e DF_BACKEND_IP="x.x.x.x" deepfenceio/deepfence_agent_ce:latest

Deepfence Agent on Amazon ECS

For detailed instructions to deploy agents on Amazon ECS, please refer to our Amazon ECS wiki page.

Deepfence Agent on Google GKE

For detailed instructions to deploy agents on Google GKE, please refer to our Google GKE wiki page.

Deepfence Agent on Self-managed/On-premise Kubernetes

For detailed instructions to deploy agents on self-managed/on-premise Kubernetes, please refer to our Self-managed/On-premise Kubernetes wiki page.

How do I use Deepfence?

Now that the Deepfence Security Platform has been successfully installed, here are the steps to begin.

Register a User

The first step is to register a user with the Management Console. If the Management Console has been installed on a system with IP address x.x.x.x, fire up a browser (Chromium (Chrome, Safari) is the supported browser for now), and navigate to https://x.x.x.x/

After registration, it can take anywhere between 30 and 60 minutes for the initial vulnerability data to be populated. The download status of the vulnerability data is reflected on the notification panel.

Use Case - Visualization

You can visualize the entire topology of your running VMs, hosts, containers etc. from the topology tab. You can click on individual nodes to initiate various tasks like vulnerability scanning.
Use Case - Runtime Vulnerability Management

From the topology view, runtime vulnerability scanning for running containers and hosts can be initiated using the console dashboard, or by using the APIs. Here is a snapshot of a runtime vulnerability scan on a host node.

The vulnerabilities and security advisories for each node can be viewed by navigating to the Vulnerabilities menu as follows:

Clicking on an item in the above image gives a detailed view as in the image below:

Optionally, users can tag a subset of nodes using user-defined tags and scan that subset of nodes, as explained in our user tags wiki page.

Use Case - Registry Scanning

You can scan for vulnerabilities in images stored in Docker private registry, AWS ECR, Azure Container Registry and Harbor from the registry scanning dashboard. First, you will need to click the "Add registry" button and add the credentials to populate available images. After that you can select the images to scan and click the scan button as shown in the image below:

Use Case - CI/CD Integration

For CircleCI integration, refer to our CircleCI wiki, and for Jenkins integration, refer to our Jenkins wiki page for detailed instructions.

Use Case - Notification Channel and SIEM Integration

Deepfence logs and scanning reports can be routed to various SIEMs and notification channels by navigating to the Notifications screen.

- For detailed instructions on integration with Slack, refer to our Slack wiki page.
- For detailed instructions on integration with Sumo Logic, refer to our Sumo Logic wiki page.
- For detailed instructions on integration with PagerDuty, refer to our PagerDuty wiki page.

API Support

Deepfence provides a suite of powerful APIs to control the features, and to extract various reports from the platform. The documentation of the APIs is available here, along with sample programs for the Python and Go languages.
Security

Users are strongly advised to control access to the Deepfence Management Console, so that it is only accessible on port range 8000-8010 from those systems that have installed the Deepfence agent. Further, we recommend opening port 443 on the Deepfence Management Console only for those systems that need to use a web browser to access the Management Console.

We periodically scan our own images for vulnerabilities, and pulling the latest images should always give you the most secure Deepfence images. In case you want to report a vulnerability in the Deepfence images, please reach out to us by email (community at deepfence dot io).

Support

Please file GitHub issues as needed.

Source: https://github.com/deepfence/ThreatMapper

1 point
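The port guidance in the README above (8000-8010 for agents, 443 for browsers) can be checked from a given host with a small script. The following is a minimal Python sketch using only the standard library; `check_ports` is a hypothetical helper written for illustration and is not part of Deepfence. It tests only whether a TCP connection can be opened and says nothing about the service behind the port.

```python
import socket


def check_ports(host, ports, timeout=2.0):
    """Return {port: True/False} for whether a TCP connection succeeds."""
    results = {}
    for port in ports:
        try:
            with socket.create_connection((host, port), timeout=timeout):
                results[port] = True
        except OSError:
            # Covers refused connections, timeouts and unresolvable hosts.
            results[port] = False
    return results
```

On an agent host, calling `check_ports(console_ip, range(8000, 8011))` with the Management Console's address should report every port as reachable. On a machine that should not reach the console, the same call (or one for port 443) should report them all as unreachable if the access rules are in place.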

This leaderboard is set to Bucharest/GMT+02:00