Leaderboard

Popular Content

Showing content with the highest reputation since 03/27/24 in Posts

-

I'm proud to present our latest product, built to the highest web hosting standards of 2024, with generous resources allocated to every hosting plan, easily managed through cPanel and served by the highest-performing web server in the world, LiteSpeed, all running on dedicated enterprise infrastructure in Romania. RoLeaf.ro is a complete platform for hosting and managing any website, and it stands out for its ease of use, minimal terms and focus on what sets it apart. With fixed prices in the national currency and 2 months free on annual payment, we manage to offer some of the lowest prices on the Romanian market relative to the resources provided. As an RoTLD-accredited registrar, we can also offer .ro domains at a fixed price of just 40 lei/year. In addition, any hosting plan can opt for a dedicated IP address at an extra cost of only 5 lei/month. On top of all this, we have an affiliate program open to everyone, in which you can earn 10% recurring lifetime commissions on every purchase made through your link (including domains), paid as platform credit. I'll let you discover the rest on the site and, as usual, thanks to the administrators for the platform!

7 points

-

6 points

-

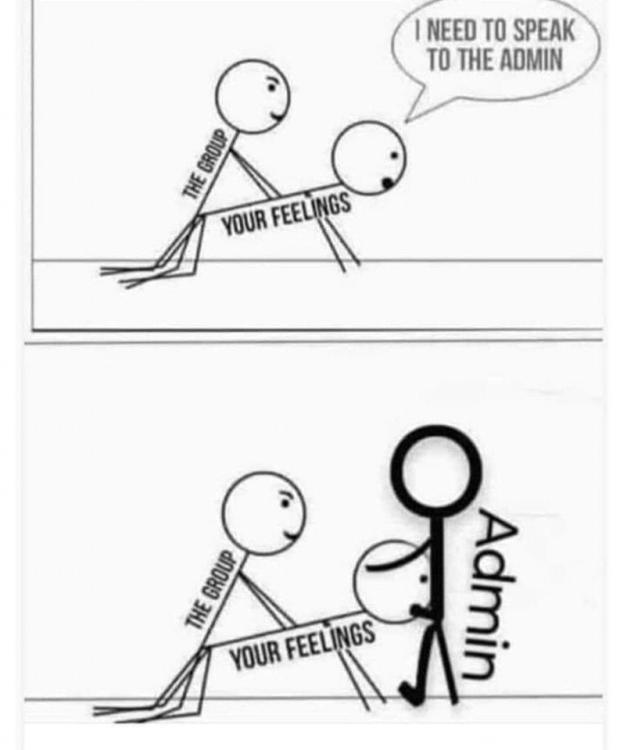

Come on, maybe he works for a security company, they've set up an internal phishing campaign of their own, and they need some pages.

5 points

-

4 points

-

4 points

-

Nope, the classic cPanel & WHM; the client panel is here: https://hosting.roleaf.ro:2083/ The base OS is CloudLinux 9.3 installed directly on a bare-metal server, managed with cPanel/WHM, which was configured to serve sites through LiteSpeed. All solutions are licensed. Thanks for your interest!

3 points

-

Hi! I'm looking for a guy who can help me build some login pages for various sites. Paid, of course. Whoever has time and wants to collaborate, I'm waiting for your message. Thanks! I don't know whether I posted in the right topic, and I apologize for that.

3 points

-

Sounds like scam pages to me. That's not allowed here.

3 points

-

2 points

-

CS:GO is free now, and it has been renamed Counter-Strike 2. League of Legends is free too. I think what the guy is asking for is a crack for a hack. Phantomscript.rar sounds like some League script/cheat, presumably a paid one (https://lol-script[.]com/). Cheats are usually obfuscated and encrypted, and they also have kill switches built in. That makes reverse-engineering them more expensive than rebuilding them from scratch. Maybe someone will help you, but it's a lot of work for a crack that will stop working with the next update (or LoL bans you, etc.). Also: if you're getting beaten in LoL, play something else. If you're not playing for fun, why play? What good does it do you whether it says Silver or Platinum? The game is the same and you're just as smart. TikTok generation. Big number go bigger -> neuron activation.

2 points

-

They need an Excel course and they'll all be digital experts.

1 point

-

It's fine to post this information here, but I recommend contacting the clinic directly to schedule the removal of the implanted device. It's also important to talk to the doctor about the reason for the implant and clear up any questions. If you'd like to meet other people who are in the area, you can mention that in your post to establish communication. Good luck!😀

1 point

-

What do you need to know to get a government job? A quarter of public-sector employees believe they have advanced digital skills because they know how to browse the Internet https://www.zf.ro/zf-24/ce-e-nevoie-sa-stii-sa-te-angajezi-la-stat-un-sfert-dintre-bugetari-22351234

1 point

-

It's sad that, although there is a lot of talk about digitalization in Romania, real progress seems limited and doesn't properly show up in practice.

1 point

-

1 point

-

1 point

-

Yes, probably, but then when you play there may be other checks as well. There are (or at least were) people who were good at this and did it "for fun" in the past, those public cracks. I don't know what the situation is now. I only play CS:GO (purchased).

1 point

-

1 point

-

Summary: a lot gets stolen, little gets done, and what gets done is done badly. On borrowed money.

1 point

-

Does anyone here listen to this? https://darknetdiaries.com/episode/28/ Israel has their own version of the NSA called Unit 8200. Listen along to learn what someone gets into the group, what they do, and what these members do once they leave the unit. Governments are hacking into other governments. They do it to steal secrets or find how many weapons they have, if they’re planning a strike, or if there’s anything else that might be a threat. Some nations are much more advanced at security than others. One country with advanced cyber-security capabilities is Israel. Prime Minister Benjamin Netanyahu.

1 point

-

The web server should log the accessed URL, the browser, the IP and a few other details. This log can be changed from the web server's config to record more details, but if there are many requests the storage can fill up. The logs can be archived automatically, something can be scripted; there are solutions for everything.

1 point
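To make the logging point above concrete, here is a minimal sketch of pulling the IP, URL and browser out of one access log line. It assumes the server writes the common "combined" log format (available in both Apache and nginx); the post does not say which server or format is in use, and the sample line, the `COMBINED` pattern name and the `parse_line` helper are made up for illustration.

```python
import re

# Assumption: the server logs in "combined" format:
# ip ident user [time] "method url protocol" status size "referer" "user-agent"
COMBINED = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<url>\S+) (?P<protocol>[^"]*)" '
    r'(?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)


def parse_line(line):
    """Return the fields of one combined-format line as a dict, or None if it does not match."""
    match = COMBINED.match(line)
    return match.groupdict() if match else None


# Hypothetical sample line (documentation IP range, invented request).
sample = (
    '203.0.113.7 - - [27/Mar/2024:10:15:32 +0200] '
    '"GET /index.php?id=1 HTTP/1.1" 200 5123 "-" "Mozilla/5.0"'
)

entry = parse_line(sample)
print(entry["ip"], entry["url"], entry["agent"])
```

If the log format is customized in the server config to include more fields, the pattern has to be extended to match. For the storage concern, rotation and compression are usually left to an existing tool rather than to a script like this.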

-

Nicolae Ciucă accidentally posted on Facebook the access passwords for an Army call center: Scutul antiracheta Deveselu5GHz user: racheta_tha pass: hunter2

1 point

-

0 points